Mastering Software Development in R



Recommended publications


Installing R

Russell Almond, August 29, 2020

Objectives
When you finish this lesson, you will be able to:
1) Start and stop R and RStudio.
2) Download, install, and run the tidyverse package.
3) Get help on R functions.

What you need to download
R is a programming language for statistics. Generally, you will work with R by writing scripts (small programs) that do the analysis you want. You will also need a development environment that lets you edit and run the scripts. I recommend RStudio, which is pretty easy to learn. In general, you will need three things for an analysis job:
• R itself. R can be downloaded from https://cloud.r-project.org. If you use a package manager on your computer, R is likely available there. The most common package managers are homebrew on Mac OS, apt-get on Debian Linux, yum on Red Hat Linux, and chocolatey on Windows. You may need to search for ‘cran’ to find the name of the right package. For Debian Linux, it is called r-base.
• The RStudio development environment: https://rstudio.com/products/rstudio/download/. The free version is fine for what we are doing. There are other choices for development environments. I use Emacs and ESS (Emacs Speaks Statistics), but that is mostly because I’ve been using Emacs for 20 years.
• A number of R packages for specific analyses. These can be downloaded from the Comprehensive R Archive Network, or CRAN. Go to https://cloud.r-project.org and click on the ‘Packages’ tab.
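The package step in the lesson above can be sketched in R as follows. This is a minimal sketch and not part of the original lesson: `ensure_installed` is a hypothetical helper name, the CRAN mirror is the one the lesson mentions, and the download is wrapped in `interactive()` on the assumption that you only want to install packages when you are at the console.

```r
# Hypothetical helper (not from the lesson): install a package from CRAN only
# when it is missing, then report whether its namespace can be loaded.
ensure_installed <- function(pkg, repos = "https://cloud.r-project.org") {
  if (!requireNamespace(pkg, quietly = TRUE)) {
    install.packages(pkg, repos = repos)
  }
  requireNamespace(pkg, quietly = TRUE)
}

# Assumption: only download and attach packages in an interactive session.
if (interactive()) {
  if (ensure_installed("tidyverse")) {
    library(tidyverse)        # attach the tidyverse packages
  }
  help("install.packages")    # same as typing ?install.packages
}
```

Calling `ensure_installed()` on a package that is already present, such as the built-in `stats`, returns `TRUE` without contacting CRAN.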

The Rockerverse: Packages and Applications for Containerisation

PREPRINT
The Rockerverse: Packages and Applications for Containerisation with R
by Daniel Nüst, Dirk Eddelbuettel, Dom Bennett, Robrecht Cannoodt, Dav Clark, Gergely Daróczi, Mark Edmondson, Colin Fay, Ellis Hughes, Lars Kjeldgaard, Sean Lopp, Ben Marwick, Heather Nolis, Jacqueline Nolis, Hong Ooi, Karthik Ram, Noam Ross, Lori Shepherd, Péter Sólymos, Tyson Lee Swetnam, Nitesh Turaga, Charlotte Van Petegem, Jason Williams, Craig Willis, Nan Xiao

Abstract
The Rocker Project provides widely used Docker images for R across different application scenarios. This article surveys downstream projects that build upon the Rocker Project images and presents the current state of R packages for managing Docker images and controlling containers. These use cases cover diverse topics such as package development, reproducible research, collaborative work, cloud-based data processing, and production deployment of services. The variety of applications demonstrates the power of the Rocker Project specifically and containerisation in general. Across the diverse ways to use containers, we identified common themes: reproducible environments, scalability and efficiency, and portability across clouds. We conclude that the current growth and diversification of use cases is likely to continue its positive impact, but see the need for consolidating the Rockerverse ecosystem of packages, developing common practices for applications, and exploring alternative containerisation software.

Introduction
The R community continues to grow. This can be seen in the number of new packages on CRAN, which is still growing exponentially (Hornik et al., 2019), but also in the numbers of conferences, open educational resources, meetups, unconferences, and companies that are adopting R, as exemplified by the useR! conference series, the global growth of the R and R-Ladies user groups, or the foundation and impact of the R Consortium.

Installing R and Cran Binaries on Ubuntu

COMPOUNDING MANY SMALL CHANGES FOR LARGER EFFECTS
Dirk Eddelbuettel
T4 Video Lightning Talk #006 and R4 Video #5, Jun 21, 2020

R PACKAGE INSTALLATION ON LINUX
• In general, installation on Linux is from source, which can present an additional hurdle for those less familiar with package building, and/or compilation and error messages, and/or more general (Linux) (sys-)admin skills
• That said, there have always been some binaries in some places; Debian has a few hundred in the distro itself; and there have been at least three distinct ‘cran2deb’ automation attempts
• (Also of note is that Fedora recently added a user-contributed repo with pre-builds of all 15k CRAN packages, which is laudable. I have no first- or second-hand experience with it)
• I have written about this at length (see previous R4 posts and videos) but it bears repeating

R PACKAGES INSTALLATION ON LINUX
Three different ways
• Barebones empty Ubuntu system, discussing the setup steps
• Using r-ubuntu container with previous setup pre-installed
• The new kid on the block: r-rspm container for RSPM

CONTAINERS AND UBUNTU
One Important Point
• We show container use here because Docker allows us to “simulate” an empty machine so easily
• But nothing we show here is limited to Docker
• I.e. everything works the same on a corresponding Ubuntu system: your laptop, server or cloud instance
• It is also transferable between Ubuntu releases (within limits: apparently still no RSPM for the now-current Ubuntu 20.04)


R Generation [1] 25

IN DETAIL
R generation
The story of a statistical programming language that became a subcultural phenomenon. By Nick Thieme
Significance, August 2018

Beyond the age of 5, very few people would profess to have a favourite letter. But if you have ever been to a statistics or data science conference, you may have seen more than a few grown adults wearing badges or stickers with the phrase “I love R!”. To these proud badge-wearers, R is much more than the eighteenth letter of the modern English alphabet. The R they love is a programming language that provides a robust environment for tabulating, analysing and visualising data, one powered by a community of millions of users collaborating in ways large and small to make statistical computing more

[…] they shared an interest in what Ihaka calls “playing academic fun and games” with statistical computing languages. Each had questions about programming languages they wanted to answer. In particular, both Ihaka and Gentleman shared a common knowledge of the language called “Scheme”, and both found the language useful in a variety of ways. Scheme, however, was unwieldy to type and lacked desired functionality. Again, convenience brought good fortune. Each was familiar with another language, called “S”, and S provided the kind of syntax they wanted. With no blend of the two languages commercially available, Gentleman suggested building something themselves. Around that time, the University of Auckland needed a programming language to use in its undergraduate statistics courses as the school’s current tool had reached the end of its useful life.

Working with R Steph Locke (Locke Data)

Contents

Preamble
  About this book
  What you need to already know
  Steph Locke
  Locke Data
  Acknowledgements
  Conventions
  Feedback

I R at a high level
1 About R
  1.1 History
  1.2 CRAN
  1.3 Key points to know about R
  1.4 Summary
2 Why use R?
  2.1 Data wrangling
  2.2 Data science
  2.3 Data visualisation
  2.4 Summary
3 Using RStudio
  3.1 The console
  3.2 Scripts
  3.3 Code completion
  3.4 Projects
  3.5 Summary
4 Useful resources
  4.1 The built-in help
  4.2 Online
  4.3 Books
  4.4 In-person
  4.5 Summary

II R building blocks
5 R data types
  5.1 Numbers
  5.2 Text
  5.3 Logical values
  5.4 Dates
  5.5 Missings
  5.6 Summary
  5.7 R Data Types Exercises

Introduction to R, Day 1: “Getting Oriented” Michael Chimenti and Diana Kolbe Dec 5 & 6 2019 Iowa Institute of Human Genetics

Slides adapted from HPC Bio at Univ. of Illinois: https://wiki.illinois.edu/wiki/pages/viewpage.action?pageId=705021292
Distributed under a CC Attribution ShareAlike v4.0 license (adapted work): https://creativecommons.org/licenses/by-sa/4.0/

Learning objectives:
1. Be able to describe what R is and how the programming environment works.
2. Be able to use RStudio to install add-on packages on your own computer.
3. Be able to read, understand and write simple R code.
4. Know how to get help.
5. Be able to describe the differences between R, RStudio and Bioconductor.

What is R? (www.r-project.org)
• "... a system for statistical computation and graphics" consisting of:
  1. A simple and effective programming language
  2. A run-time environment with graphics
• Many statistical procedures available for R; currently 15,111 additional add-on packages in CRAN
• Completely free, open source, and available for Windows, Unix/Linux, and Mac

What is Bioconductor? (www.bioconductor.org)
• “… open source, open development software project to provide tools for the analysis and comprehension of high-throughput genomic data”
• Primarily based on R language (functions can be in other languages), and run in R environment
• Current release consists of 1741 software packages (sets of functions) for specific tasks
• Also maintains 948 annotation packages for many commercial arrays and model organisms plus 371 experiment data packages and

Introduction to Ggplot2

Dawn Koffman, Office of Population Research, Princeton University, October 2020
https://opr.princeton.edu/workshops/58

Part 1: Concepts and Terminology
“It’s hard to succinctly describe how ggplot2 works because it embodies a deep philosophy of visualisation.” - From https://ggplot2.tidyverse.org

R Package: ggplot2
• Used to produce statistical graphics; author = Hadley Wickham
• "attempt to take the good things about base and lattice graphics and improve on them with a strong, underlying model"
• described in ggplot2 Elegant Graphs for Data Analysis, Second Edition, 2016
• based on The Grammar of Graphics by Leland Wilkinson, 2005: "... describes the meaning of what we do when we construct statistical graphics ... More than a taxonomy ... Computational system based on the underlying mathematics of representing statistical functions of data."
  - does not limit developer to a set of pre-specified graphics
• adds some concepts to grammar which allow it to work well with R

qplot()
ggplot2 provides two ways to produce plot objects:
qplot() # quick plot – not covered in this workshop
• uses some concepts of The Grammar of Graphics, but doesn’t provide full capability
• designed to be very similar to plot() and simple to use
• may make it easy to produce basic graphs but may delay understanding philosophy of ggplot2
ggplot() # grammar of graphics plot – focus of this workshop
• provides fuller implementation of The Grammar of Graphics
• may have steeper learning curve but allows much more flexibility when building graphs

Grammar Defines Components of Graphics
• data: in ggplot2, data must be stored as an R data frame
• coordinate system: describes 2-D space that data is projected onto - for example, Cartesian coordinates, polar coordinates, map projections, ..
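The grammar components named above (data held in a data frame, and a coordinate system) can be sketched with `ggplot()` as follows. This is an illustrative sketch, not taken from the workshop slides: the built-in `mtcars` data frame, the `wt` and `mpg` columns, and the point layer are assumptions chosen for the example, and the code is guarded so that it still runs where ggplot2 is not installed.

```r
# Assumption: mtcars (a built-in data frame) stands in for the workshop data.
scatter <- NULL
if (requireNamespace("ggplot2", quietly = TRUE)) {
  scatter <- ggplot2::ggplot(
    data = mtcars,                              # data: an R data frame
    mapping = ggplot2::aes(x = wt, y = mpg)     # map columns to x and y
  ) +
    ggplot2::geom_point() +                     # draw one point per row
    ggplot2::coord_cartesian()                  # coordinate system: Cartesian
}
```

Printing `scatter` at the console draws the plot. Swapping `coord_cartesian()` for `coord_polar()` changes only the coordinate system component and leaves the data and mapping untouched.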

The Voice of the R Community

David Smith (@revodavid), Director, R Consortium

R Consortium’s mission is to work with and provide support to key organizations and groups developing, maintaining, distributing and using R.

The R Community Survey
• Survey of R users worldwide
  – 29 survey questions provided in English, Spanish and Chinese
• Opened July 1, 2017; closed Aug 31, 2017
• 3618 responses
  – 98% of respondents reported using R
  – 2% of respondents used R in the past, or planned to in future
• Many verbatim (free-text) responses
  – Lots of interesting feedback!

Where did the responses come from?
1616 respondents selected their location on an interactive map

Demographics of respondents

R usage by respondents
• 83% use R as their primary data analysis tool
• 88% use R for work
  – 37% use R in production
• 37% have been using R for more than 5 years

Where R users work
Of respondents: 35% Academic, 65% Industry

What R interface do respondents use?
90% of respondents use R via RStudio (multiple selections allowed)

What do people do with R?
37% of respondents have authored or contributed to an R package

What other tools do respondents use?
66% also use Excel; 44% also use Python (multiple selections allowed)

What is “Big Data” for R users?
17% of respondents typically analyze 1M records or more

Satisfaction with R
80% of respondents were “completely satisfied” or “very satisfied” by R overall

What is the best aspect of working with R?
Key themes:
• community and ecosystem
• the R language itself
• packages
• online resources

What is the best aspect of working

Tools for Analyzing R Code the Tidy Way by Lucy D’Agostino Mcgowan, Sean Kross, Jeffrey Leek

CONTRIBUTED RESEARCH ARTICLE

Abstract
With the current emphasis on reproducibility and replicability, there is an increasing need to examine how data analyses are conducted. In order to analyze the between-researcher variability in data analysis choices as well as the aspects within the data analysis pipeline that contribute to the variability in results, we have created two R packages: matahari and tidycode. These packages build on methods created for natural language processing; rather than allowing for the processing of natural language, we focus on R code as the substrate of interest. The matahari package facilitates the logging of everything that is typed in the R console or in an R script in a tidy data frame. The tidycode package contains tools to allow for analyzing R calls in a tidy manner. We demonstrate the utility of these packages as well as walk through two examples.

Introduction
With the current emphasis on reproducibility and replicability, there is an increasing need to examine how data analyses are conducted (Goecks et al., 2010; Peng, 2011; McNutt, 2014; Miguel et al., 2014; Ioannidis et al., 2014; Richard, 2014; Leek and Peng, 2015; Nosek et al., 2015; Sidi and Harel, 2018). In order to accurately replicate a result, the exact methods used for data analysis need to be recorded, including the specific analytic steps taken as well as the software utilized (Waltemath and Wolkenhauer, 2016). Studies across multiple disciplines have examined the global set of possible data analyses that can be conducted on a specific data set (Silberzhan et al., 2018).

Chapter 1. Preparing Data for Analysis and Visualization in R

1 PREPARING DATA FOR ANALYSIS AND VISUALIZATION IN R
The R-Team and the Pot Policy Problem

Copyright ©2021 by SAGE Publications, Inc. This work may not be reproduced or distributed in any form or by any means without express written permission of the publisher.

1.1 Choosing and learning R
Leslie walked past her adviser’s office and stopped. She backed up to read a flyer hanging on the wall. The flyer announced a new local chapter of R-Ladies (see Box 1.1). Yes! she thought. She’d been wanting to learn R the entire year.

Box 1.1 R-Ladies
R-Ladies is a global group with chapters in cities around the world. The mission of R-Ladies is to increase gender diversity in the R community. To learn more, visit the R-Ladies Global website at https://rladies.org/ and the R-Ladies Global Twitter feed, @RLadiesGlobal.

Leslie arrived at the R-Ladies event early. “Hi, I’m Leslie,” she told the first woman she met.
“Hey! Great to meet you. I’m Nancy,” answered the woman as they shook hands.
“And I’m Kiara, one of Nancy’s friends,” said another woman, half-hugging Nancy as she reached out to shake Leslie’s hand. “Can we guess you’re here to learn more about R?”
Leslie nodded.
“You’ve come to the right place,” said Nancy. “But let’s introduce ourselves first. I’m an experienced data scientist working for a biotech startup, and I love to code.”
“You might call me a data management guru,” Kiara said.

Tidyverse, Tidymodels, R-Lib, and Gt R Packages: Regulatory Compliance and Validation Issues

A Guidance Document for the use of affiliated R packages in Regulated Clinical Trial Environments
September 2020
RStudio PBC, 250 Northern Ave, Boston, MA USA 02210
Tel: (+1) 844 448 1212

1. Purpose and Introduction
The purpose of this document is to demonstrate that certain R packages affiliated with the tidyverse[15], tidymodels[14], and r-lib[8] GitHub organizations, along with the gt package[2], when used in a qualified fashion, can support the appropriate regulatory requirements for validated systems, thus ensuring that resulting electronic records are “trustworthy, reliable and generally equivalent to paper records.” For R, please see the document below:
R: Regulatory Compliance and Validation Issues. A Guidance Document for the Use of R in Regulated Clinical Trial Environments[10]

What qualifies as a record?
Validation guidance is a result of regulation, most notably the US Food and Drug Administration’s 21 CFR Part 11[5]. This regulation was originally written to apply to health records. The definition of a record has subsequently been clarified and extended. A key consideration for companies subject to this regulation is determining the extent to which analytic software systems such as R, and the outputs they produce (plots, tables, models, predicted values, etc.), constitute records or derived records subject to Part 11 compliance[5]. RStudio, following the work of the R core foundation and the R Validation Hub, does not consider the outputs created, in whole or in part, by R and R packages as records directly subject to compliance regulations[10].

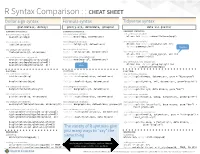

R Syntax Comparison : : CHEAT SHEET

The three syntaxes
• Dollar sign syntax: goal(data$x, data$y)
• Formula syntax: goal(y~x|z, data=data, group=w)
• Tidyverse syntax: data %>% goal(x)
Annotations on the sheet: "the pipe" (%>%) and "tilde" (~).

SUMMARY STATISTICS
One continuous variable:
• Dollar sign: mean(mtcars$mpg)
• Formula: mosaic::mean(~mpg, data=mtcars)
• Tidyverse: mtcars %>% dplyr::summarize(mean(mpg))
One categorical variable:
• Dollar sign: table(mtcars$cyl)
• Formula: mosaic::tally(~cyl, data=mtcars)
• Tidyverse: mtcars %>% dplyr::group_by(cyl) %>% dplyr::summarize(n())
Two categorical variables:
• Dollar sign: table(mtcars$cyl, mtcars$am)
• Formula: mosaic::tally(cyl~am, data=mtcars)
• Tidyverse: mtcars %>% dplyr::group_by(cyl, am) %>% dplyr::summarize(n())
One continuous, one categorical:
• Dollar sign: mean(mtcars$mpg[mtcars$cyl==4]), mean(mtcars$mpg[mtcars$cyl==6]), mean(mtcars$mpg[mtcars$cyl==8])
• Formula: mosaic::mean(mpg~cyl, data=mtcars)
• Tidyverse: mtcars %>% dplyr::group_by(cyl) %>% dplyr::summarize(mean(mpg))

PLOTTING
One continuous variable:
• Dollar sign: hist(mtcars$disp); boxplot(mtcars$disp)
• Formula: lattice::histogram(~disp, data=mtcars); lattice::bwplot(~disp, data=mtcars)
• Tidyverse: ggplot2::qplot(x=mpg, data=mtcars, geom = "histogram"); ggplot2::qplot(y=disp, x=1, data=mtcars, geom="boxplot")
One categorical variable:
• Dollar sign: barplot(table(mtcars$cyl))
• Formula: mosaic::bargraph(~cyl, data=mtcars)
• Tidyverse: ggplot2::qplot(x=cyl,
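The summary-statistics entries above can be tried without installing any add-on package. The sketch below uses the dollar sign calls exactly as the cheat sheet lists them; for the formula style it substitutes base R's `aggregate()`, which is an assumption made here so the example runs without mosaic and is not the cheat sheet's own `mosaic::mean()` call. The variable names are illustrative.

```r
# Dollar sign syntax, as listed on the cheat sheet (base R only).
mean_mpg      <- mean(mtcars$mpg)               # one continuous variable
cyl_counts    <- table(mtcars$cyl)              # one categorical variable
cyl_am_counts <- table(mtcars$cyl, mtcars$am)   # two categorical variables

# Formula syntax: base R's aggregate() used as a stand-in for
# mosaic::mean(mpg ~ cyl, data = mtcars), so no extra package is needed.
mpg_by_cyl <- aggregate(mpg ~ cyl, data = mtcars, FUN = mean)

print(mean_mpg)
print(cyl_counts)
print(mpg_by_cyl)
```

The formula call returns one row per cylinder group, which replaces the three separate subset-and-mean calls needed in the dollar sign style.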