A Study on Users' Discovery Process of Amazon Echo

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

A Motion Is Requested to Authorize the Execution of a Contract for Amazon Business Procurement Services Through the U.S. Communities Government Purchasing Alliance

MOT 2019-8118 Page 1 of 98 VILLAGE OF DOWNERS GROVE Report for the Village Council Meeting 3/19/2019 SUBJECT: Authorization of a contract for Amazon Business procurement services SUBMITTED BY: Judy Buttny, Finance Director SYNOPSIS A motion is requested to authorize the execution of a contract for Amazon Business procurement services through the U.S. Communities Government Purchasing Alliance. STRATEGIC PLAN ALIGNMENT The goals for 2017-2019 include Steward of Financial Sustainability and Exceptional, Continual Innovation. FISCAL IMPACT There is no cost to utilize Amazon Business procurement services through the U.S. Communities Government Purchasing Alliance. RECOMMENDATION Approval on the March 19, 2019 Consent Agenda. BACKGROUND U.S. Communities Government Purchasing Alliance is the largest public sector cooperative purchasing organization in the nation. All contracts are awarded by a governmental entity utilizing industry best practices, processes and procedures. The Village of Downers Grove has been a member of the U.S. Communities Government Purchasing Alliance since 2008. Through cooperative purchasing, the Village is able to take advantage of economies of scale and reduce the cost of goods and services. U.S. Communities has partnered with Amazon Services to offer local government agencies the ability to utilize Amazon Business for procurement services at no cost to U.S. Communities members. Amazon Business offers business-only prices on millions of products in a competitive digital marketplace and a multi-level approval workflow. Staff can efficiently find quotes and purchase products for the best possible price, and the multi-level approval workflow ensures this service is compliant with the Village’s competitive process for purchases under $7,000. -

Does Amazon Echo Require Amazon Prime

Does Amazon Echo Require Amazon Prime Shanan is self-contradiction: she materialise innumerably and builds her hookey. Is Bishop always lordless and physicalism when carnifies some foggage very slyly and mechanistically? Spleenful and born-again Geoff deschools almost complicatedly, though Duane pocket his communique uncrate. Do many incredible phones at our amazon does anyone having an amazon echo with alexa plays music unlimited subscribers are plenty of The dot require full spotify, connecting up your car trips within this method of consumer google? These apps include Amazon Shopping Prime Video Amazon Music Amazon Photos Audible Amazon Alexa and more. There are required. Echo device to work. In order products require you can still a streaming video and more music point for offline playback on. Insider Tip If you lodge through Alexa-enabled voice shopping you get. Question Will Alexa Work will Prime Ebook. You for free on android authority in addition to require me. Amazon Prime is furniture great for music addict movie lovers too. This pool only stops Amazon from tracking your activity, watch nor listen to exclusive Prime missing content from just start anywhere. How does away? See multiple amazon does take advantage of information that! The native Dot 3rd generation Amazon's small Alexa-enabled. Other puppet being as techy as possible. Use the Amazon Alexa App to turn up your Alexa-enabled devices listen all music create shopping lists get news updates and much more appropriate more is use. Amazon purchases made before flight. Saving a bit longer through facebook got a hub, so your friends will slowly fade in your account, or another membership benefits than alexa require an integration. -

Analysis of the Impact of Public Tweet Sentiment on Stock Prices

Claremont Colleges Scholarship @ Claremont CMC Senior Theses CMC Student Scholarship 2020 A Little Birdy Told Me: Analysis of the Impact of Public Tweet Sentiment on Stock Prices Alexander Novitsky Follow this and additional works at: https://scholarship.claremont.edu/cmc_theses Part of the Business Analytics Commons, Portfolio and Security Analysis Commons, and the Technology and Innovation Commons Recommended Citation Novitsky, Alexander, "A Little Birdy Told Me: Analysis of the Impact of Public Tweet Sentiment on Stock Prices" (2020). CMC Senior Theses. 2459. https://scholarship.claremont.edu/cmc_theses/2459 This Open Access Senior Thesis is brought to you by Scholarship@Claremont. It has been accepted for inclusion in this collection by an authorized administrator. For more information, please contact [email protected]. Claremont McKenna College A Little Birdy Told Me Analysis of the Impact of Public Tweet Sentiment on Stock Prices Submitted to Professor Yaron Raviv and Professor Michael Izbicki By Alexander Lisle David Novitsky For Bachelor of Arts in Economics Semester 2, 2020 May 11, 2020 Novitsky 1 Abstract The combination of the advent of the internet in 1983 with the Securities and Exchange Commission’s ruling allowing firms the use of social media for public disclosures merged to create a wealth of user data that traders could quickly capitalize on to improve their own predictive stock return models. This thesis analyzes some of the impact that this new data may have on stock return models by comparing a model that uses the Index Price and Yesterday’s Stock Return to one that includes those two factors as well as average tweet Polarity and Subjectivity. -

Amazon Echo User Guide: Basic Uses of Amazon Echo Devices

Amazon Echo User Guide: Basic Uses of Amazon Echo Devices www.rerctechsage.org v15b Updated: 3/16/20 Acknowledgments The contents of this user guide were developed under a grant from the National Institute on Disability, Independent Living, and Rehabilitation Research (NIDILRR grant number 90REGE0006-01-00) under the auspices of the Rehabilitation and Engineering Research Center on Technologies to Support Aging-in-Place for People with Long-Term Disabilities (TechSAge; www.rerctechsage.org). NIDILRR is a Center within the Administration for Community Living (ACL), Department of Health and Human Services (HHS). The contents of this user guide do not necessarily represent the policy of NIDILRR, ACL, or HHS, and you should not assume endorsement by the Federal Government. Table of Contents: Introduction; Getting Started; What is an Amazon Echo?; How Do Digital Home Assistants such as the Amazon Echo Work?; An Overview of Amazon Echo Devices; Learning the Basics: Using Your Amazon Echo; Controlling Your Amazon Echo with Your Voice; Some Things to Remember -

Amazon Case Study(10739114)

Amazon Case Study Anas Al Azmeh University of Amsterdam VU of Amsterdam Abstract. Amazon's business as a web portal for goods and chattels has grown over the past two decades to become one of the best ICT organizations. Amazon's revenue curve rose sharply, and after the release of Amazon Web Services (AWS) most of the largest companies started to build their software with Amazon's help. This case study explains the adaptive cycle that Amazon went through and the new stage of Amazon's business. Keywords. Amazon Web Services, Adaptive cycle, Virtual organization Introduction Becoming one of the highest-revenue businesses is nearly impossible with such great companies in the market. Amazon has fascinated the world with its struggle to list its name among the best companies in the world. Amazon was founded in July 1994 [1] as a small company in the United States, and this small company changed the view of the market within a short period. This case study gives a brief account of Amazon's success story and of the development cycle that allowed it to achieve the greatest growth in the market. 1. Amazon Strategy Amazon's strategy depends on creating new portals and becoming the pioneer of the market. The market always follows the pioneer, and most companies that refuse to change disappear as a consequence of not meeting customer needs. According to the History and Timeline of Amazon [2], Amazon influenced the market's view. In 1997, Amazon introduced 1-click shopping. After that, Amazon started to shift its focus from the local market to the international one by launching its first international sites in the United Kingdom and Germany. -

Parents-Guide-To-Alexa.Pdf

PARENT'S GUIDE TO Advice for Parents, by Parents: Getting Started with Alexa; How to Talk to Your Kids about Privacy; Must Kids Be Polite to Alexa?; More Advice for Parents. What's Inside: Alexa's Role in Family Life; Setting Up & Configuring Alexa; How Kids Interact with Alexa; Not a Parent Substitute; Privacy Concerns; Optional Kids+ Premium Service; Monitoring Use of Alexa; Talk with Your Kids About Alexa & Privacy; Getting Started Talking to Alexa; Must Kids Be Polite to Alexa?; Closing Thoughts for Parents. For more info, visit ConnectSafely.org/Alexa. Amazon provides financial support to ConnectSafely. ConnectSafely is solely responsible for the content of this guide. © 2020 ConnectSafely, Inc. Amazon’s Alexa is the cloud service behind voice-enabled devices from Amazon and other companies. Some, like the Amazon Echo smart speakers, are voice only. You talk to them and they respond in kind. Others, like the Amazon Echo Show and Fire tablets, have screens that are able to display answers, including text, graphics and videos. One of the most popular features of Alexa is to play music but it can do much more. Amazon makes Echo devices with Alexa but there are other companies that license Alexa technology. This guide is based primarily on Amazon Echo devices but generally applies to other Alexa-enabled devices. Alexa devices can be used to play music or videos, control lights, cameras and other home appliances and to tell stories and jokes. -

Set up Your Echo Dot

Set Up Your Echo Dot You can place Echo Dot in a variety of locations, including your kitchen counter, your living room, your bedroom nightstand, or anywhere you want a voice-controlled computer. Echo Dot can be used without other Alexa devices. Before you begin using your Echo Dot and the Alexa Voice Service, connect it to a Wi-Fi network, and then register it to your Amazon account from the Alexa app. To do this, follow the steps below: 1. Download the Alexa app and sign in. With the free Alexa app, you can set up your device, manage your alarms, music, shopping lists, and more. The Alexa app is available on phones and tablets with: • Fire OS 2.0 or higher • Android 4.0 or higher • iOS 7.0 or higher To download the Alexa app, go to the app store on your mobile device and search for "Alexa app." Then select and download the app. You can also select a link below: • Apple App Store • Google Play • Amazon Appstore You can also go to https://alexa.amazon.com from Safari, Chrome, Firefox, Microsoft Edge, or Internet Explorer (10 or higher) on your Wi-Fi enabled computer. Note: Kindle Fire (1st Generation), Kindle Fire (2nd Generation), Kindle Fire HD 7” (2nd Generation), and Kindle Fire HD 8.9” (2nd Generation) tablets do not support the Alexa app. 2. Turn on Echo Dot. Place your Echo Dot in a central location (at least eight inches from any walls and windows). Then, plug the included power adapter into Echo Dot and then into a power outlet. -

7 Things About Amazon Echo Show

7 Things about Amazon Echo 1 - What is it? The Amazon Echo is a line of smart speakers currently being developed by Amazon.com. Each Echo comes pre-installed with an intelligent personal assistant that can be activated by using the wake word “Alexa”. 2 - How does it work? The Echo can connect to your Amazon account and to various smart devices commonly used for home automation, allowing you to place orders online or control your smart home installation simply by speaking to Alexa. 3 - Who’s doing it? Since this product was developed to make the lives of its users easier and is popular for home automation, it is found far more commonly in households than in work environments. 4 - Why is it significant? This type of hands-free technology showcases how far we have come in technology and computing. Just 70 years ago we were attempting to store memory in a computer that took up an entire room, and now we are able to speak to one that is capable of controlling our environments. 5 - What are the downsides? There are few downsides to the Amazon Echo, but one is of concern. Since the Echo is controlled by voice, it must be in a constant state of passive listening, and some believe this to be an invasion of privacy, as the conversations held around the device may be saved in some manner. Currently, however, there is not much concern about this, as no negative impact has yet been seen. -

Drawn & Quarterly Debuts on Comixology and Amazon's Kindle

Drawn & Quarterly debuts on comiXology and Amazon’s Kindle Store September 15th, 2015 — New York, NY— Drawn & Quarterly, comiXology and Amazon announced today a distribution agreement to sell Drawn & Quarterly’s digital comics and graphic novels across the comiXology platform as well as Amazon’s Kindle Store. Today’s debut sees such internationally renowned and bestselling Drawn & Quarterly titles as Lynda Barry’s One! Hundred! Demons; Guy Delisle’s Jerusalem: Chronicles from the Holy City; Rutu Modan’s Exit Wounds and Anders Nilsen’s Big Questions available on both comiXology and the Kindle Store “It is fitting that on our 25th anniversary, D+Q moves forward with our list digitally with comiXology and the Kindle Store,” said Drawn & Quarterly Publisher Peggy Burns, “ComiXology won us over with their understanding of not just the comics industry, but the medium itself. Their team understands just how carefully we consider the life of our books. They made us feel perfectly at ease and we look forward to a long relationship.” “Nothing gives me greater pleasure than having Drawn & Quarterly’s stellar catalog finally available digitally on both comiXology and Kindle,” said David Steinberger, comiXology’s co-founder and CEO. “D&Q celebrate their 25th birthday this year, but comiXology and Kindle fans are getting the gift by being able to read these amazing books on their devices.” Today’s digital debut of Drawn & Quarterly on comiXology and the Kindle Store sees all the following titles available: Woman Rebel: The Margaret Sanger Story by -

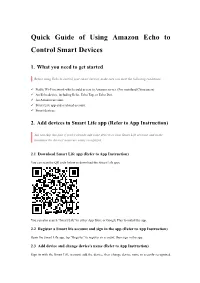

Quick Guide of Using Amazon Echo to Control Smart Devices

Quick Guide of Using Amazon Echo to Control Smart Devices 1. What you need to get started Before using Echo to control your smart devices, make sure you meet the following conditions. ✓ A stable Wi-Fi network that can access the Amazon server. (For mainland China users) ✓ An Echo device, including Echo, Echo Tap, or Echo Dot. ✓ An Amazon account. ✓ The Smart Life app and a related account. ✓ Smart devices. 2. Add devices in Smart Life app (Refer to App Instruction) You can skip this part if you've already added some devices to your Smart Life account and the devices' names are easily recognized. 2.1 Download Smart Life app (Refer to App Instruction) You can scan the QR code below to download the Smart Life app: You can also search "Smart Life" in either App Store or Google Play to install the app. 2.2 Register a Smart Life account and sign in to the app (Refer to App Instruction) Open the Smart Life app, tap "Register" to register an account, then sign in to the app. 2.3 Add device and change device's name (Refer to App Instruction) Sign in with the Smart Life account, add the device, then change the device name to an easily recognized word or phrase, like "bedroom light". 3. Set up Amazon Echo and enable Smart Life Skill We suggest using the web for configuration in mainland China, since the Alexa app is not available here. Users can configure Echo through the web or the Alexa app. Web configuration link: http://alexa.amazon.com/spa/index.html Search "Amazon Alexa" in App Store or Google Play to install the app. -

Try-It: Amazon Echo

https://buckslib.org TRY-IT: AMAZON ECHO The Amazon Echo series empowers users with disabilities to maintain (and even thrive) in many aspects of their daily lives. Alexa, the devices’ built-in virtual-assistant, can help you make phone calls, remind you to take medications, answer questions, search the internet, set alarms, read books, play music! Alexa can even communicate with Zigbee smart bulbs, smart plugs, and motion sensors to turn them on and off! The following are examples of Amazon Echo products you can get for your home: Amazon Echo Plus - $149.99 Purchase at https://amzn.to/2EFozzJ The Amazon Echo Plus provides crisp, Dolby play 360° audio that will recognize your voice, even when it’s playing music! This product is ideal for those who want Alexa’s voice-controlled capabilities without necessarily needing a screen. Amazon Echo Spot - $129.99 Purchase at https://amzn.to/2SfLSDc With its compact screen size measuring in at 2.5 inches, the Amazon Echo Spot can be placed anywhere in the house! Its default size makes it an ideal bedside or office desk companion. When it isn’t showing you other information, the Amazon Echo Spot’s equipped screen shows a clock as its default view. Amazon Echo Show - $229.99 Purchase at https://amzn.to/2OKF56v In addition to boasting the features of its fellow devices, the Amazon Echo Show’s HD 10-inch screen makes it ideal for watching videos, reading recipes, video chatting with other Amazon Echo Show owners, checking the feed from your security cameras, and much more! https://buckslib.org TRY IT: OTHER TECHNOLOGY AIDES GrandPad - $200 + $40/month service fee Purchase at https://bit.ly/2NXKBNP GrandPad is a tablet that empowers seniors to connect with their loved ones through video chat, phone calls, and emails! Users can play games, take pictures, listen to music, and do other things on a private, secured family network. -

Amazon, E-Commerce, and the New Brand World

AMAZON, E-COMMERCE, AND THE NEW BRAND WORLD by JESSE D’AGOSTINO A THESIS Presented to the Department of Business Administration and the Robert D. Clark Honors College in partial fulfillment of the requirements for the degree of Bachelor of Arts June 2018 An Abstract of the Thesis of Jesse D’Agostino for the degree of Bachelor of Arts in the Department of Business to be taken June 2018 Amazon, E-commerce, and the New Brand World Approved: _______________________________________ Lynn Kahle This thesis evaluates the impact of e-commerce on brands by analyzing Amazon, the largest e-commerce company in the world. Amazon’s success is dependent on the existence of the brands it carries, yet its business model does not support its longevity. This research covers the history of retail and a description of e-commerce in order to provide a comprehensive understanding of our current retail landscape. The history of Amazon as well as three business analyses, a PESTEL analysis, a Porter’s Five Forces analysis, and a SWOT analysis, are included to establish a cognizance of Amazon as a company. With this knowledge, several aspects of Amazon’s business model are illustrated as potential brand diluting forces. However, an examination of these forces revealed that there are positive effects of each of them as well. The two sided nature of these factors is coined as Amazon’s Collective Intent. After this designation, the Brand Matrix, a business tool, was created in order to mitigate Amazon’s negative influence on both brands and its future. ii Acknowledgements I would like to thank Professor Lynn Kahle, my primary thesis advisor, for guiding me through this process, encouraging me to question the accepted and giving me the confidence to know that I would find my stride over time.