Report Toleadspace T on Sabbatical

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Waikato Bay of Plenty

Waikato Bay of Plenty SECONDARY SCHOOLS ATHLETICS CHAMPIONSHIPS Wednesday 18th March 2020 9.00 am Start Tauranga Domain, Tauranga www.wsss.org.nz/track-field/ www.facebook.com/sportwaikatoseondaryschools Kayla Goodwin – Sacred Heart Girls College 2015 - 2019 2018 - Waibop SS Senior Girls 100m Hurdles, Long & Triple Jump Champion, 2nd High Jump 2019 - Youth Olympics 2019 - 9th Triple Jump 2019 - NZ Senior Women - 1st Long Jump & Triple Jump 2019 - NZ Women 20 1st Heptathlon, Triple & 100m Hurdles 2019 – NZSS championships – Triple Jump 1st & 2nd Long Jump 2020 – NZ Senior Women 1st Triple Jump & 3rd Long Jump 2020 – NZ Under20 Women 1st Heptathlon, 1st Triple, 1st long Jump & 2nd 100m Hurdles Current record holder for New Zealand Under 18, Under 19 and Under 20 Triple Jump Photo Acknowledgements Kayla Goodwin – courtesy Alan MacDonald Email: [email protected] WAIKATO BAY OF PLENTY SECONDARY SCHOOLS ATHLETICS ASSOCIATION 2018-2019 Chairman: Tony Rogers WSSSA Executive Sports Director Secretary: Angela Russek St Peters Schools Treasurer: Brad Smith Tauranga Boys’ College Auditor: Karen Hind Athletics Waikato BOP Delegate: Julz Marriner Tauranga Girls’ College North Island SSAA Delegates: Angela Russek St Peter’s School Brad Smith Tauranga Boys College WBOP Selectors and Team Managers for North Island SS Team: Ryan Overmayer Hillcrest High Angela Russek St Peters School Sonia Waddell St Peters School Delegate Tauranga Girls College Tony Rogers WSSSA North Island Secondary School Track & Field Championships Porritt Stadium, Hamilton - Saturday 4 – Sunday 5 April The first three competitors in each event are automatically selected for the Waikato Bay of Plenty Team to compete at the North Island Secondary School Championships April 4th - 5th at Porritt Stadium, Hamilton. -

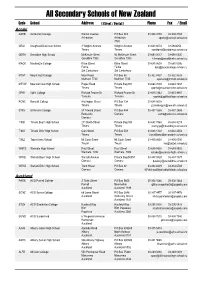

Secondary Schools of New Zealand

All Secondary Schools of New Zealand Code School Address ( Street / Postal ) Phone Fax / Email Aoraki ASHB Ashburton College Walnut Avenue PO Box 204 03-308 4193 03-308 2104 Ashburton Ashburton [email protected] 7740 CRAI Craighead Diocesan School 3 Wrights Avenue Wrights Avenue 03-688 6074 03 6842250 Timaru Timaru [email protected] GERA Geraldine High School McKenzie Street 93 McKenzie Street 03-693 0017 03-693 0020 Geraldine 7930 Geraldine 7930 [email protected] MACK Mackenzie College Kirke Street Kirke Street 03-685 8603 03 685 8296 Fairlie Fairlie [email protected] Sth Canterbury Sth Canterbury MTHT Mount Hutt College Main Road PO Box 58 03-302 8437 03-302 8328 Methven 7730 Methven 7745 [email protected] MTVW Mountainview High School Pages Road Private Bag 907 03-684 7039 03-684 7037 Timaru Timaru [email protected] OPHI Opihi College Richard Pearse Dr Richard Pearse Dr 03-615 7442 03-615 9987 Temuka Temuka [email protected] RONC Roncalli College Wellington Street PO Box 138 03-688 6003 Timaru Timaru [email protected] STKV St Kevin's College 57 Taward Street PO Box 444 03-437 1665 03-437 2469 Redcastle Oamaru [email protected] Oamaru TIMB Timaru Boys' High School 211 North Street Private Bag 903 03-687 7560 03-688 8219 Timaru Timaru [email protected] TIMG Timaru Girls' High School Cain Street PO Box 558 03-688 1122 03-688 4254 Timaru Timaru [email protected] TWIZ Twizel Area School Mt Cook Street Mt Cook Street -

Ngāti Hinerangi Deed of Settlement

Ngāti Hinerangi Deed of Settlement Our package to be ratified by you Crown Offer • Commercial Redress • $8.1 million • 5 commercial properties • 52 right of first refusals • Cultural Redress • 14 DOC and Council properties to be held as reserves or unencumbered • 1 overlay classification • 2 deeds of recognition • 11 statutory acknowledgements • Letters of introduction/recognition, protocols, advisory mechanisms and relationship agreements • 1 co-governance position for Waihou River. Commercial Redress • $8.1m Quantum (Cash) • Subject to any purchase of 5 Commercial Properties • Manawaru School Site and house (Land only), Manawaru • Part Waihou Crown Forest Lease (Southern portion) Manawaru • 9 Inaka Place, Matamata • 11 Arawa St, Matamata (Land only) • Matamata Police Station (Land only) • 52 Right of First Refusals • Te Poi School, Te Poi (MOE) • Matamata College (MOE) • Matamata Primary (MOE) • Omokoroa Point School (MOE) • Weraiti • 47 HNZC Properties Cultural Redress • Historical Account • Crown Apology • DoC Properties • Te Ara O Maurihoro Historical Reserves (East and West) (Thompsons Track) • Ngā Tamāhine e Rua Scenic Reserve (Pt Maurihoro Scenic Reserve) • Te Tuhi Track (East and West) (Kaimai Mamaku Conservation Park) • Te Taiaha a Tangata Historical Reserve (Whenua-a-Kura) • Waipapa Scenic Reserve (Part Waipapa River Scenic Reserve) • Te Hanga Scenic Reserve (Kaimai Mamaku Conservation Park) • Te Mimiha o Tuwhanga Scenic Reserve (Tuwhanga) • Te Wai o Ngati Hinerangi Scenic Reserve (Te Wai o Ngaumuwahine 2) • Ngati Hinerangi Recreational Reserve (Waihou R. -

June 24.] The New Zealand Gazette. 1687

JUNE 24.] THE NEW ZEALAND GAZETTE. 1687 MILITARY AREA No. 2 (PAEROA)--continued.

553336 McAra, James Bertie, gold-miner, Roycroft St., Waihi. 516003 McAra, John Leslie, electrician, Waikino. 584680 McCarthy, Winston, dairy-farmer, No. 1 Rural Mail Delivery, Te Puke. 582595 McClinchie, Robert Matthew, farmer, Omokoroa, Tauranga. 514922 McConnell, Alick James, boilermaker, Kopu, Thames. 514923 McConnell, Sinclair, shop-assistant, Waimana. 528705 McCulloch, Colin James, farmer, Motumaoho, Morrinsville. 576533 McCulloch, Norman Angus, engineer, Ruapehu St., Taupo. 580781 McCullough, Robert, carpenter, Katikati. 618893 McDonald, Alexander Donald, farmer, care of Mr. F. E. Hughes, Waharoa, Matamata. 574114 McDonald, Archibald Duncan, radio serviceman, Rewi St., Te Aroha. 627172 McDonnell, Thomas Clifford, farmer, Station Rd., Matamata. 502372 McDowall, Arnold Stuart, bank clerk, 7 Johnstone St., Te Aroha. 482039 McDuff, Lawrence George, road ganger, Harvey St., East ...

552856 Mapp, Clifford Lewis, dairy-farmer, Walton-Kiwitahi Rd., Walton. 586707 Margan, Cuthbert Dudley, master butcher, Wilson St., Waihi. 544118 Markland, George William, electrician, Ohope, Whakatane. 540100 Marshall, Eric Thomas, school-teacher, Main Rd., Katikati. 546820 Marshall, Walter George, bank clerk, 15 Seddon St., Rotorua. 512736 Marshall, William Leslie, farm-manager, Thames Rd. 626695 Martelli, Donald Ferguson, farm hand, Reporoa. 608206 Martin, Albert Walther, farmer, Otamarakau, Te Puke. 590352 Martin, Everard Garlick, farmer, Ngakuru Rural Delivery, Rotorua. 552227 Martin, Frederick James, farmer, Campbell Rd., Walton. 584415 Martin, Hugh, farmer, Broadlands, Reporoa, via Rotorua. 604358 Masters, Ernest Osborne, timber-worker, Post-office, Mourea, Rotorua. 494370 Mathers, William David, farmer, Trig Rd., Waihi. 541619 Mathews, Herbert Mostyn, farmer, No. 7 Rd., Springdale, Waitoa. -

452 Morgan Road Matamata

Matamata Horse Trial 20-21 Feb 2021 452 Morgan Road Matamata Event Secretary: Email: [email protected] Entries: online via Equestrian Entries at www.equestrianentries.co.nz Entries close: Tuesday 9 Feb 2021 Payment can be made by DC to 03 0363 0002486-00 Reference riders name & class Course Designers: Tich Massey CCI4*S Campbell Draper CCI3*S CCI2*S CCN2* Bing Allen CCN105/1* Denise McGiven CCN95 & CCN80 All horses and riders in Class 1-3 must be FEI registered. 1. Randlab CCI4*-S Dressage Test CCI4*A2021 PM $1000 Rug/Trophy $700 $600 $400 $300 EF $290 2. Dunstan Horse Feeds CCI3*-S Dressage Test CCI3*A2021 PM $500 Rug/Trophy $350 $250 $180 $180 EF $250 3. Maxlife Batteries CCI2*-S Dressage Test CCI2*A2021 PM $350 Rug/Trophy $250 $200 $150 $100 EF $200 4. OJZ Promotions Rosettes CCN2*-S Dressage Test B5 PM $300/Rug, $250 $170, $150 $100 EF $170 5. Harcourts Kevin Deane Real Estate CCN1*-S Dressage Test B5 (X/C1.05, S/J 1.10) PM $150/Rug $120 $110 $90 EF $130 6. Matamata-Piako District Council CCN105-S Open Dressage Test A5 PM $150/Rug/Trophy $120 $110, $90, $80 EF $110 7. Giltrap Agrizone CCN105-S Restricted to riders that have not competed in 2* (1* pre 2019) or above since Jan 2010 Dressage Test A5 PM $150 Rug $120 $110 $90 $80 EF $110 8. BNZ CCN95-S Open Dressage Test A3 Rug & Trophy 1st Rosettes 1-5 EF $90 9. NZ Bloodstock CCN95-S (restricted to Thoroughbred’s) Dressage Test A3 Rug 1st Rosettes 1-5 EF $90 10. -

Council Agenda - 26-08-20 Page 99

Council Agenda - 26-08-20 Page 99 Project Number: 2-69411.00 Hauraki Rail Trail Enhancement Strategy • Identify and develop local township recreational loop opportunities to encourage short trips and wider regional loop routes for longer excursions. • Promote facilities that will make the Trail more comfortable for a range of users (e.g. rest areas, lookout points able to accommodate stops without blocking the trail, shelters that provide protection from the elements, drinking water sources); • Develop rest area, picnic and other leisure facilities to help the Trail achieve its full potential in terms of environmental, economic, and public health benefits; • Promote the design of physical elements that give the network and each of the five Sections a distinct identity through context sensitive design; • Utilise sculptural art, digital platforms, interpretive signage and planting to reflect each section’s own specific visual identity; • Develop a design suite of coordinated physical elements, materials, finishes and colours that are compatible with the surrounding landscape context; • Ensure physical design elements and objects relate to one another and the scale of their setting; • Ensure amenity areas co-locate a set of facilities (such as toilets and seats and shelters), interpretive information, and signage; • Consider the placement of emergency collection points (e.g. by helicopter or vehicle) and identify these for users and emergency services; and • Ensure design elements are simple, timeless, easily replicated, and minimise visual clutter. The design of signage and furniture should be standardised and installed as a consistent design suite across the Trail network. Small design modifications and tweaks can be made to the suite for each Section using unique graphics on signage, different colours, patterns and motifs that identifies the unique character for individual Sections along the Trail. -

Next Top Engineering Scientist 2015 Judges Report

Next Top Engineering Scientist 2015 Judges report The seventh annual “Next Top Engineering Scientist” competition was held from 9am to 6pm on Saturday August 1st, 2015. The question posed was “If a New Zealand student uploads a video clip that goes viral, how long will it take before 1% of the world’s population has seen it?” Teams calculated answers that ranged from just a few minutes through to never. The quality of submissions was generally high, with many teams using innovative approaches to solving the problem, including an increasing number of teams making use of computer programming. As with previous years the competition problem was purposefully constructed to be open-ended in nature. To answer the problem required teams to make sensible assumptions around various aspects of the problem including (but not limited to): • The definition of a viral video • The characteristics of the video (e.g. language, length and genre) • The potential audience • The propagation channels (e.g. YouTube, Facebook, Twitter, etc.) Participation Statistics We had 179 teams from 68 schools participate this year (from Dargaville and Whangarei up in the north down to Oamaru and Dunedin in the south). 146 teams had four members and 33 teams had three members. The breakdown by year level was as follows: Year 11: 1; Mixed Year 11/12: 2; Mixed Year 11/13: 1; Year 12: 70; Mixed Year 12/13: 29; Year 13: 76. A total of 173 teams managed to get a report in by the 6pm deadline and we had many “Action shot” photos submitted during the course of the day. -

Kaimai Wind Farm Tourism and Recreation Impact Assessment

Kaimai Wind Farm Tourism and Recreation Impact Assessment REPORT TO VENTUS ENERGY LTD MAY 2018 Document Register (Version, Report date): V1 Final draft, 31 August 2017; V2 GS comments, 10 Oct 2017; V3 Visual assessment, cycle route, 30 Nov 2017; V4 Updated visual assessment, 16 February 2018; V5 Turbine update, 30 May 2018. Acknowledgements This report has been prepared by TRC Tourism Ltd. Disclaimer Any representation, statement, opinion or advice, expressed or implied in this document is made in good faith but on the basis that TRC Tourism is not liable to any person for any damage or loss whatsoever which has occurred or may occur in relation to that person taking or not taking action in respect of any representation, statement or advice referred to in this document. Contents: 1 Introduction and Background; 2 Existing Recreation and Tourism Setting; 3 Assessment of Potential Effects; 4 Recommendations and Mitigation; 5 Conclusion -

Middle Earth: Hobbit & Lord of the Rings Tour

16 DAY MIDDLE EARTH: HOBBIT & LORD OF THE RINGS TOUR. PRICE ON REQUEST.

Day 1 ARRIVE AUCKLAND Welcome to New Zealand! We are met on arrival at Auckland International Airport before being transferred to our hotel. Tonight, a welcome dinner is served at the hotel.

Day 2 AUCKLAND / WAITOMO CAVES / HOBBITON / ROTORUA We depart Auckland and travel south crossing the Bombay Hills through the dairy rich Waikato countryside to the famous Waitomo Caves. Here we take a guided tour through the amazing limestone caves and into the magical Glowworm Grotto – lit by millions of glow-worms. From Waitomo we travel to Matamata to experience the real Middle-Earth with a visit to the Hobbiton Movie Set. During the tour, our guides escort us through the ten-acre site recounting fascinating details of how the Hobbiton set was created.

Day 5 OHAKUNE / WELLINGTON This morning we drive to the Mangawhero Falls and the river bed where Smeagol chased and caught a fish, before heading south again across the Central Plateau and through the Manawatu Gorge to arrive at the garden of Fernside, the location of Lothlórien in Featherston. Continue south before arriving into New Zealand’s capital city Wellington, home to many of the LOTR actors and crew during production.

Day 6 WELLINGTON In central Wellington we walk to the summit of Mt Victoria (Outer Shire) and visit the Embassy Theatre – home to the Australasian premieres of ‘The Fellowship of the Ring’ and ‘The Two Towers’ and world premiere -

Matamata Piako District

Matamata-Piako District: Demographic Profile 1986-2031 Professor Natalie Jackson, Director, NIDEA with Shefali Pawar New Zealand Regional Demographic Profiles 1986-2031. No. 7 March 2013 Referencing information: Jackson, N.O. with Pawar, S. (2013). Matamata-Piako District: Demographic Profile 1986-2031. New Zealand Regional Demographic Profiles 1986-2031. No. 7. University of Waikato. National Institute of Demographic and Economic Analysis. ISSN 2324-5484 (Print) ISSN 2324-5492 (Online) Te Rūnanga Tātari Tatauranga | National Institute of Demographic and Economic Analysis Te Whare Wānanga o Waikato | The University of Waikato Private Bag 3105 | Hamilton 3240 | Waikato, New Zealand Email: [email protected] | visit us at: www.waikato.ac.nz/nidea/ Disclaimer While all reasonable care has been taken to ensure that information contained in this document is true and accurate at the time of publication/release, changed circumstances after publication may impact on the accuracy of that information. Table of Contents: Executive Summary; What you need to know about these data; Feature article – Population ageing in a nutshell; 1. Population Trends (1.1 Population Size and Growth; 1.2 Ethnic Composition and Growth); 2. Components of Change (2.1 Natural Increase and Net Migration; 2.2 Births, Deaths and Natural Increase); 3. Components of Change by Age (3.1 Expected versus Actual Population; 3.2 Expected versus Actual Change by Component); 4. Age Structure and Population Ageing (4.1 Numerical and Structural Ageing; 4.2 Labour Market Implications; 4.3 Ethnic Age Composition and Ageing); 5. -

Our Social Infrastructure

Our Social Infrastructure Social infrastructure is our schools, our hospitals and all the facilities and services that communities regard as essential to their future 6.1 Outside Agencies Meeting Our Community’s Needs Rural communities rely on many services being provided from a central, regional or national base, in particular health, support and social services. During consultation, residents said they felt that the current support was not ideal. Yet it was recognised that most agencies are doing their best. One of the difficulties is getting local people interested and involved in the planning processes of these agencies. As a community we could improve by encouraging people and agencies to work together better on issues that affect them. Community Outcomes: • Local people will be interested and getting involved in the planning processes of external agencies • As a community we will be encouraging people and agencies to work together Indicator State Trend 6.1a Participation rates with external agencies K ? This indicator is measuring the participation rates of Matamata-Piako residents/organisations with external agencies. An example would be submissions by Matamata-Piako residents towards Environment Waikato Plan Changes. There is currently no procedure in place to measure this indicator. Refer to the section ‘Additional Information’ to see what is being done regarding data gaps generally. Indicator State Trend 6.1b Number of programmes, protocols and partnerships in place with external agencies K ? Council has a number of programmes, protocols and partnerships in place with external agencies. There is currently no specific procedure in place to measure this indicator. -

Matamata College Seniorball See Page 11 for Highlights

Matamata College Senior Ball See page 11 for highlights

KEEP WARM AND COSY - COME CHECK OUT OUR GREAT RANGE OF HEATING OPTIONS 7 Waharoa Road East, Matamata. Phone 07 888 6362. mitre10.co.nz OPENING HOURS Monday - Friday 7.30am - 5.30pm, Saturday 8.30am - 4.00pm, Sunday 9.00am - 4.00pm

MATAMATA ISSUE 444 • 1 June 2021 • Phone 07 888 4489 • Email: [email protected] • www.sceneonline.co.nz • PRICELESS

Stuff with Caron... Hi there and welcome to June! I don’t know about you but I have to wonder where the last six months have gone. It seems like yesterday was the beginning of a new year and here we are already half way through it - it just doesn’t seem right! So anyway, with today being the official farmer’s change over day and new families arriving to the district, I’m sure there will be more than a few rural dwellers pretty thankful to have finally got the last load done, the beds made and some resemblance of dinner on.

... very first Scene hit the street (May 25, 2005) and goodness, when I look back, what an amazing journey it’s been. Milly, Michelle and I thank each and every one of you that continue to offer your support, your encouragement and your belief in what we produce each Tuesday and it’s incredibly appreciated. We love what we do and we hope our little five minute silence has become an anticipated part of your week too. For me personally, it’s hard to believe my boys were