Next Top Engineering Scientist 2015 Judges Report

Recommended publications

-

Our Students Farewell Mr Cole

college Our Students Farewell Mr Cole n an emotional week of ‘lasts,’ retiring Head of College, Mr Steve Cole was honoured at many gatherings from Bruce House and the Old I The students had worked out that during his eight year tenure, Mr Collegians Dinner to a very poignant assembly run by the students in Cole had read and signed around 2000 mid-year reports and 2000 his final few days. With the Massed Choir, Bruce House Haka group end-of year-reports every year, which add up to a whopping 32,000 and The Pipes and Drums of Saint Kentigern in attendance, the tables reports read and signed over the years! They felt it was fitting that he were turned as Head Girl, Izzy Denholm and Head Boy, Matthew should hear one more, his own report as written by the students of Stirling had the last word, reading a report card for Mr Cole as seen Saint Kentigern. through the eyes of the student body! NAME Mr Steve Cole College ENROLMENT DATE July 2009 Saint Kentigern GRADUATION DATE Report April 2017 YEAR ONE AND TWO: Mr Cole has arrived at Saint Kentigern as a keen Student comments: Mr Cole continues to display support, passion and and committed Headmaster from the outset and he has immediately immersed dedication, but this year the students have become particularly fond of Mr himself in all aspects of College life. Mr Cole is already known on campus by all Cole’s endearing sense of humour, his love for good jokes, his track suit pants, the students for his tremendous support and dedication. -

Are You Moving? from the President of the Old Collegians

OLD COLLEGIANS From The President of the Old Collegians he final weeks of the 2016 school calendars are fast Toshiba, ANZ, Lexel Systems, Jack’s Coffee, Camelot Group, Test Tapproaching with final exams, sports and graduation dinners & Tag and Spices Thai. The winning Four was the Bayleys No 2 sponsored by the Old Collegian’s Association held at each Team, led by OC Nick Bayley. Our thanks to OC’s John Gilbert campus, the annual Year 10 College Field Centre trip to Mt and Robert Johnstone for marshalling the hole-in-one to ensure Ruapehu, and the excitement of the summer vacation signalling fair play. Thanks also to Trust Board member, Rosemary Harris the end of another year of excellence at Saint Kentigern. for her involvement on the day and patrolling the course with refreshments with long standing committee member, Sandy The SKOCA vs College annual Netball event was held in October Campbell, and her forthright attempts to provide some golfing- and, with some high-scoring players joining the SKOCA team in etiquette directives to those few considered to lack such skills – all the last two years, a tight game was witnessed. It was pleasing to with variable success! see SKOCA regain the cup after the last couple of years’ losses, with a final score of 40-43. Players, parents and supporters The Auckland Recent Old Collegians (18-26 years) event enjoyed refreshments after the game, and clearly there will be was held in mid-November in Parnell to provide a fellowship some further top players opportunity for recent past students/members returning home joining the SKOCA ranks for for Christmas after university exams, OE, as well as recent College next year’s challenge. -

Auckland District Round Results

Auckland District Round Results Thursday 10 – Sunday 13 June 2021 BEST GROUP OVERALL GoodFellas, ACG Parnell College and Saint Kentigern College Sunny Le, Piano, ACG Parnell College Charles Sang, Piano, Saint Kentigern College Alex Bian, Percussion, Saint Kentigern College Jason Wong, Percussion, Saint Kentigern College Jenny McLeod | Music for Four HIGHLY COMMENDED Oddities, King’s College, St Kentigern College, Rangitoto College AHA, Cornwall Park District School, Parnell District School, Sunnyhills Primary School Trio Disordinata, King’s College, Tauranga Girls’ College, Westlake Boys High School Red Hedgehog, Macleans College, Glendowie College, Carmel College ADJUDICATOR AWARD Russian Empire, Westlake Girls High School, St Kenrigern College, Takapuna Grammar School, Westlake Boys High School KBB AWARDS Trio Burlesque, St Peter’s College, ACG Parnell College Petrus, Diocesan School for Girls, St Peter’s College, St Kentigern College Ornate , St Kentigern College, Avondale College, Auckland Grammar School, Diocesan School for Girls, St Cuthbert’s College, St Peter’s College Trio Anima, Rangitoto College The 2021 NZCT Chamber Music Contest is made possible with generous funding from: Auckland District Round Results Thursday 10 – Sunday 13 June 2021 BEST PERFORMANCE OF A NZ WORK GoodFellas, ACG Parnell College, St Kentigern College Jenny McLeod | Music for Four BEST PERFORMANCE OF AN ORIGINAL COMPOSITION WORK Moon Stars, Mt Albert Grammar School Mekal Covic | Two Worlds JUNIOR AWARD Quintet Brilliante, Auckland Grammar School, St Kentigern College, Macleans College NEWCOMERS AWARD Haydnseek, Diocesan School for Girls MOST ENGAGING PERFORMANCE AWARD Trio Élénk, Diocesan School for Girls The 2021 NZCT Chamber Music Contest is made possible with generous funding from: . -

Waikato Bay of Plenty

Waikato Bay of Plenty SECONDARY SCHOOLS ATHLETICS CHAMPIONSHIPS Wednesday 18th March 2020 9.00 am Start Tauranga Domain, Tauranga www.wsss.org.nz/track-field/ www.facebook.com/sportwaikatoseondaryschools Kayla Goodwin – Sacred Heart Girls College 2015 - 2019 2018 - Waibop SS Senior Girls 100m Hurdles, Long & Triple Jump Champion, 2nd High Jump 2019 - Youth Olympics 2019 - 9th Triple Jump 2019 - NZ Senior Women - 1st Long Jump & Triple Jump 2019 - NZ Women 20 1st Heptathlon, Triple & 100m Hurdles 2019 – NZSS championships – Triple Jump 1st & 2nd Long Jump 2020 – NZ Senior Women 1st Triple Jump & 3rd Long Jump 2020 – NZ Under20 Women 1st Heptathlon, 1st Triple, 1st long Jump & 2nd 100m Hurdles Current record holder for New Zealand Under 18, Under 19 and Under 20 Triple Jump Photo Acknowledgements Kayla Goodwin – courtesy Alan MacDonald Email: [email protected] WAIKATO BAY OF PLENTY SECONDARY SCHOOLS ATHLETICS ASSOCIATION 2018-2019 Chairman: Tony Rogers WSSSA Executive Sports Director Secretary: Angela Russek St Peters Schools Treasurer: Brad Smith Tauranga Boys’ College Auditor: Karen Hind Athletics Waikato BOP Delegate: Julz Marriner Tauranga Girls’ College North Island SSAA Delegates: Angela Russek St Peter’s School Brad Smith Tauranga Boys College WBOP Selectors and Team Managers for North Island SS Team: Ryan Overmayer Hillcrest High Angela Russek St Peters School Sonia Waddell St Peters School Delegate Tauranga Girls College Tony Rogers WSSSA North Island Secondary School Track & Field Championships Porritt Stadium, Hamilton - Saturday 4 – Sunday 5 April The first three competitors in each event are automatically selected for the Waikato Bay of Plenty Team to compete at the North Island Secondary School Championships April 4th - 5th at Porritt Stadium, Hamilton. -

CSA Championships Qualifiers 2021.Xlsx

College Sport Auckland Athletics Championships 30 March 2021 at Mt Smart Stadium Qualifiers by School Yellow = Qualifiers (automatic qualifiers from zone meets) Orange = Qualifiers (next best performances & dispensations) Red = Reserves Events with no athletes highlighted are open entry 4.15PM High Jump 1.40m Junior Boys Q James Liu Acg Parnell College 9:00AM Hammer 3kg Intermediate Girls Jasmine He Acg Parnell College 9:00AM Hammer 4kg Intermediate Boys Luca Hulena Acg Parnell College 2.08PM 100m Intermediate Girls Q Talia Van Rooyen Acg Parnell College 4.54PM 80m Hurdles 0.762 Intermediate Girls Q Talia Van Rooyen Acg Parnell College 11.50AM 4x100m Relay Intermediate Girls Q Acg Strathallan College Acg Strathallan College 1.45PM High Jump 1.25m Junior Girls Q Alexandra Hope Acg Strathallan College 4.15PM Long Jump Junior Girls Q Alexandra Hope Acg Strathallan College 1.45PM Triple Jump Intermediate Girls Q Annalie Malins Acg Strathallan College 2.03PM 100m Junior Boys Q Benjamin Saseve Acg Strathallan College 5.30PM Discus 1kg Junior Boys Q Callum Lawson Acg Strathallan College 10.10AM 3000m Junior Girls Q Georgia Lynch Acg Strathallan College 12.12PM 800m Junior Girls Q Georgia Lynch Acg Strathallan College 2.40PM 1500m Junior Girls Q Georgia Lynch Acg Strathallan College 12.30PM Triple Jump Junior Girls Q Georgia Lynch Acg Strathallan College 11:15AM High Jump 1.50m Intermediate Boys Q Harrison Agnew Acg Strathallan College 4.15PM Triple Jump Intermediate Boys Q Justin Deng Acg Strathallan College 12.30PM Triple Jump Junior Girls -

The Big Sing Auckland 2018 Timetable

The Big Sing Auckland 2018 Timetable Tuesday 12 June Wednesday 13 June Thursday 14 June 8.50 WELCOME (Session 1) 8.50 WELCOME (Session 3) 8.50 WELCOME (Session 5) 9.00 Saint Kentigern College - Massed Choir 9.00 Auckland Grammar School - Grammar Voices 9.00 . Epsom Girls' Grammar School - Canto Vivo 9.15 Westlake Girls' High School - Kotahi Voices 9.15 Westlake Girls' High School - Cantare 9.15 Diocesan School for Girls - Bella Cantoris McAuley High School - McAuley College Choir Te Kura Taumata o Panguru - Manawanui Westlake Boys' High School - Momentum Lynfield College - A Choir'd Taste St Mary's College - Marian Voices Sacred Heart College - Sacred Heart College Chorale Saint Kentigern College - Middle College Girls' Choir St Cuthbert's College - Black Watch Singers Selwyn College - Harmonic Fuse Westlake Girls' High School - Nota Bella Rangitoto College - SOS Sancta Maria College - Sanctus BREAK BREAK BREAK 11.00 Takapuna Grammar School - Leonessa 11.00 Massey High School - Sublimitas 11.00 Epsom Girls' Grammar School - Epsom Singers ACG Parnell College Choir Rangitoto College - The Minuettes Whangaparaoa College - Senior Choir St Paul's College - P.O.E.T. Albany Junior and Senior High School Choir Rosehill College - Rosehill Harmony Otahuhu College - Magnique Auckland Grammar School - Grammarphonics Ormiston Junior College - OJC Choir Orewa College - Four Octaves St Cuthbert's College - Saints Alive One Tree Hill College - Peka Totara Takapuna Grammar School - Sultans of Sing Rangitoto College - The Fundamentals Diocesan School for -

Honours Board

HONOURS BOARD 2018 Selwyn College Helm: Eli Liefting, Michelle Keenan, Craig Keenan, Harry Butler 2. Rosmini College Francesco Kayrouz, Cambell Stanton, Thomas Hyde, Roman Shipton 3. Westlake Boys High School A Robbie McCutcheon, Henry Wilson, Ryan Harden, Charlie Loughran 4. St Kentigern College Jack Frewin, Adam Mustill, Ryan Walker, Jack Floyd 5. Kristin School B Jack Rush, Ben Gentry, Claude Loomes, Henry Elworthy 6. Westlake Boys High School B Thomas Mulcahy, Brayden Hamilton, William Clough, Blake McGlashan 7. Auckland Grammar B Lewis Anderson, George Angus, Monty Alderson, Henry Angus 8. Kings College Hamish McLaren, Chester Duffett Oscar Little, Olivia Scantlebury 9. Takapuna Grammar Jono Weston, Danielle Schon, Josh Schon, Conrad Butler 10. Auckland Grammar A Sam Morgan, Pat Morgan, Will Shapland, Henry McLachlan 11. Kristin School A Josh Berry, James Baker, Carrington Brady, Thomas Crook 12. Whangarei Boys High School Oliver Murphy, Robbie Hopkins, Liam Holroyd, Josh Smith 13. Avondale College Gavin Ninnes, Jessica Ninnes, Sam Charles, Max Dowling 14. Cambridge High School Hamish Elliott, Callum Ewing, Sarah Eastwood, TeHaerenga Mulvey-Griggs 15. KeriKeri High School B Cleo Boyd, Sam Dobbs, Wilson Hookway, Emily Jones 16. KeriKeri High School A Kaya Tobin, Rodrigo Pinochet, Maeve White, Chris Geerkens 17. St Cuthberts College Madison Louwenberg, Ines Horton, Mia Horton, Elizabeth Hayman 2017 KeriKeri High School Helm: Frankie Dair, Alice Autet, Jack Dalbeth-Hudson, Jack Bunce 2. Marlborough College Ali Gifford, Iolo Adams, Toby Gregory, Nick Williams 3. Westlake Boys High School Tom Fyfe, Blake Nicholson, Harry Milne, Matt Jacobi 4. Selwyn College Harry Butler, Eli Liefting, Craig Keenan, Michelle Keenan 5. -

ACG Strathallan College Alfriston College Aorere College Auckland

ACG Strathallan College Alfriston College Aorere College Auckland Seventh Day Adventist High School De La Salle College Destiny School James Cook High School Kia Aroha College Mangere College Manurewa High School Mission Heights Junior College Ormiston Junior College Ormiston Senior College Otahuhu College Papakura High School Papatoetoe High School Pukekohe High School Rosehill College Sir Edmund Hillary Collegiate Southern Cross Campus Tangaroa College Week commencing 22nd June – week ending 26th September 2020 Pullman Park (TBC) • Junior: Year 9 or 10 only in the year of competition • U17: Players must be aged either 15, 16, or 17 years as at 31 December in the year in which the competition is held. • Open: Players must be aged at least 15 years on the first day that the competition commences, and must not be over 19 years as at 31 December in the year the competition is held • Entries must be submitted online via EnterNOW by 12.00pm (midday) Monday 8th June 2020. • All teams must supply 1 x referee for each of their scheduled games, see referee section below for more details • All grades are required to register their team players on EnterNOW before the entry closing date (12.00pm Monday 8th June 2020). • Each team shall consist of no more than 12 team members entitled to play. • Players are only allowed to be registered in one team, they cannot play down but once they have played up three times they must remain in that team. • The 3 game rule will apply for players in all grades . • Teams withdrawn after the entry closing date will still be liable for the entry fee. -

The New Zealand Economics Competition 2011

The New Zealand Economics Competition 2011 Awards presentation Wednesday 16 November 2011 The University of Auckland Business School Department of Economics 11075 Economics Competition Programme 2012.indd 1 11/10/2011 2:45:06 PM Programme MC Professor Tim Hazledine Department of Economics, The University of Auckland Business School Welcome Professor Basil Sharp Department of Economics, The University of Auckland Business School Presentation of awards High Distinction Guest speaker Hugh Fletcher MCom(Hons) Auckland, MBA Stanford, BSc, BCom Auckland Presentation of awards High Distinction Presentation of trophies and awards National winners Closing Please join us in the main foyer of the Owen G Glenn Building for discussion, photos and refreshments at the end of the awards presentations. Events at The University of Auckland Business School may be photographed or filmed for educational purposes. Where a photograph or film/video footage featuring an individual is to be used for promotional materials the consent of the individual will be obtained. Consents will not be sought in other circumstances. 3 11075 Economics Competition Programme 2012.indd 3 11/10/2011 2:45:07 PM 2011 New Zealand Economics Competition Welcome to the New Zealand Economics Competition Awards presentation evening. The Competition has been administered by the Department of Economics at The University of Auckland Business School since 2008. The 55 minute examination paper contains 40 multiple choice questions that test classroom theory and general knowledge of current economic issues. The New Zealand Economics Competition has grown in size and reputation since its inception in 1997, becoming the largest and most prestigious competition of its kind for secondary students. -

Nzchefs National Salon 2015 Medal Results - All Classes

NZChefs National Salon 2015 Medal Results - All Classes Class K001 Pasta and Mushroom Class Type Training Sponsor Cucina Foods and Meadow Mushrooms Medal Number Name Place of Work City Silver 955 Liu Xin Yang The Cornell Education Group Auckland 1010 Silver 953 Darryn Neville Chand The Cornell Education Group Auckland 1010 Silver 761 Bhargava Purna Teja Gadde NSIA Auckland Silver 952 Jonghwa Kim The Cornell Education Group Auckland 1010 Silver 954 Yanyan Ou The Cornell Education Group Auckland 1010 Silver 746 Woosub Kim NZMA Auckland 1060 Gold 756 Nikki Steenson Waikato Institute of Technology Class K002 Salmon Class Type Training Sponsor Akaroa Salmon Medal Number Name Place of Work City Merit 773 Patrick Richard Kilmister Stratford High School/Colonel Malones Stratford Taranaki Bronze 721 Evan Rockliffe Ibis Tainui Hamilton Hamilton Bronze 959 Manuel Jr. Navarro The Cornell Education Group Auckland 1010 Bronze 762 Mandeep Singh Whitireia Polyechnic Auckland Bronze 956 Moon Koo Kim The Cornell Education Group Auckland 1010 Bronze 716 Longi Ah-Lam Novotel Bronze 957 Trung Tran Chi Hoang The Cornell Education Group Auckland 1010 Silver 790 Blake John Haines Bracken Dunedin Dunedin Silver 936 John Bland EIT Hawkes Bay Silver 755 Rohan Katarya NZMA Auckland Silver 744 Zhuobin Wu NZMA Auckland Silver 958 Heekyoung Kim The Cornell Education Group Auckland 1010 Silver 896 Jiajun Feng Ribier Restaurant Auckland Silver 657 Joelle Snook Aoraki Polytechnic / Fusion Restaurant Timaru Silver 777 Dino Dino Rosies Auckland 1740 Class K004 Soup Class -

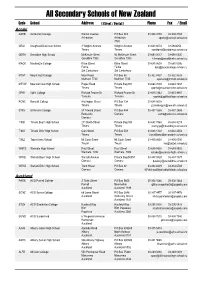

Secondary Schools of New Zealand

All Secondary Schools of New Zealand Code School Address ( Street / Postal ) Phone Fax / Email Aoraki ASHB Ashburton College Walnut Avenue PO Box 204 03-308 4193 03-308 2104 Ashburton Ashburton [email protected] 7740 CRAI Craighead Diocesan School 3 Wrights Avenue Wrights Avenue 03-688 6074 03 6842250 Timaru Timaru [email protected] GERA Geraldine High School McKenzie Street 93 McKenzie Street 03-693 0017 03-693 0020 Geraldine 7930 Geraldine 7930 [email protected] MACK Mackenzie College Kirke Street Kirke Street 03-685 8603 03 685 8296 Fairlie Fairlie [email protected] Sth Canterbury Sth Canterbury MTHT Mount Hutt College Main Road PO Box 58 03-302 8437 03-302 8328 Methven 7730 Methven 7745 [email protected] MTVW Mountainview High School Pages Road Private Bag 907 03-684 7039 03-684 7037 Timaru Timaru [email protected] OPHI Opihi College Richard Pearse Dr Richard Pearse Dr 03-615 7442 03-615 9987 Temuka Temuka [email protected] RONC Roncalli College Wellington Street PO Box 138 03-688 6003 Timaru Timaru [email protected] STKV St Kevin's College 57 Taward Street PO Box 444 03-437 1665 03-437 2469 Redcastle Oamaru [email protected] Oamaru TIMB Timaru Boys' High School 211 North Street Private Bag 903 03-687 7560 03-688 8219 Timaru Timaru [email protected] TIMG Timaru Girls' High School Cain Street PO Box 558 03-688 1122 03-688 4254 Timaru Timaru [email protected] TWIZ Twizel Area School Mt Cook Street Mt Cook Street -

A 40 Year History

New Zealand Secondary Schools Athletics Association National Secondary School Cross Country Championships A 40 Year History Introduction The New Zealand Secondary Schools Athletics Association is proud to publish a forty- year history of the New Zealand Secondary Schools Cross Country Championships. Participation in the event between 1974 and 2013 totals well over 10,000 athletes from all but a handful of schools from around the country. With an annual involvement of over 1000 students it has become one of the largest secondary school sporting events in New Zealand. The idea for this document was born during the 1995 NZSSCC Championships in Masterton. At this time (before the internet), results were published in a hard copy booklet. In this particular year the first three place getters in the individual, and three and six person team categories were published for the first twenty-one years of the events history. This accompanied the full set of 1995 results. After this event, the majority of results were published electronically. Unfortunately, many of these results were lost in the mid to late nineties because there was no dedicated NZSSAA website. Sincere thanks need to be given to Don Chadderton for providing the first twenty years’ of results. Without these early results a significant part of athletics New Zealand’s history would have eventually been forgotten. These include the 1974 performance of Alison Rowe, who would later go on to win both the 1981 Boston and New York marathons. As well as Burnside High School’s 1978 performance in the junior boys event where they completed the perfect three-man score of six points.