Proceedings in One File

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Human Interaction with Technology for Working, Communicating, and Learning: Advancements

An Excellent Addition to Your Library! Released: December 2011. Human Interaction with Technology for Working, Communicating, and Learning: Advancements. Anabela Mesquita (ISCAP/IPP and Algoritmi Centre, University of Minho, Portugal)

The way humans interact with technology is undergoing a tremendous change. It is hard to imagine the lives we live today without the benefits of technology that we take for granted. Applying research in computer science, engineering, and information systems to non-technical descriptions of technology, such as human interaction, has shaped and continues to shape our lives. Human Interaction with Technology for Working, Communicating, and Learning: Advancements provides a framework for conceptual, theoretical, and applied research in regards to the relationship between technology and humans. This book is unique in the sense that it does not only cover technology, but also science, research, and the relationship between these fields and individuals’ experience. This book is a must have for anyone interested in this research area, as it provides a voice for all users and a look into our future.

Topics Covered: • Anthropological Consequences • Philosophy of Technology • Experiential Learning • Responsibility of Artificial Agents • Influence of Gender on Technology • Technological Risks and Their Human Basis • Knowledge Management • Technology Assessments • Perceptions and Conceptualizations • Technology Ethics of Technology • Phenomenology of E-Government

ISBN: 9781613504659; © 2012; 421 pp. Print: US $175.00 | Perpetual: US $265.00 | Print + Perpetual: US $350.00

Market: This premier publication is essential for all academic and research library reference collections. It is a crucial tool for academicians, researchers, and practitioners and is ideal for classroom use.

Anabela Mesquita is a professor at the Institute of Administration and Accountancy (ISCAP)/Polytechnic School of Porto (IPP), Portugal.

Second Call for Papers

SECOND CALL FOR PAPERS 3rd FRENCH CONFERENCE ON SOCIAL AND ENVIRONMENTAL ACCOUNTING RESEARCH (3rd CSEAR France Conference) June 11-12, 2015 ESSEC Business School, France The Centre for Social and Environmental Accounting Research (CSEAR) has held an annual conference in the UK (often referred to as the Summer School) for more than two decades, as well as in other parts of the world for several years in Europe, Australia, New Zealand, and in both North and South Americas. Similar to other CSEAR conferences, the 3rd CSEAR France Conference will be a deliberately informal discussion forum gathering researchers, teachers, students and practitioners at all levels of experience to further enhance research in new instruments, policies and strategies related to social and environmental accounting in the very widest sense. The spirit of the conference is interdisciplinary and focuses on a high level of interaction, discussion and debate in a friendly and supportive atmosphere. As such, research papers from perspectives beyond accounting and management control such as organizational theory, marketing, finance, strategy, etc., are welcome. The conference will provide participants an opportunity to present their research projects, ranging from discussing preliminary findings to full papers. Submissions are welcome in either French or English. For this 3rd CSEAR France conference, we are pleased to welcome Professor Brendan O’Dwyer, Professor of Accounting at the University of Amsterdam, as our plenary speaker. For the practitioner forum, we are equally pleased to welcome Adam Koniuszewski, Chief Operating Officer at Green Cross International. There will also be a Special Issue of Advances in Environmental Accounting and Management on the “Current Developments in Social and Environmental Accounting” (a call for papers has been issued and is available on the conference website). -

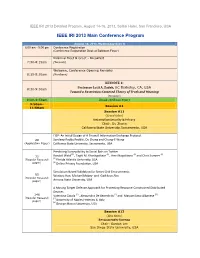

IEEE IRI 2013 Main Conference Program

IEEE IRI 2013 Detailed Program, August 14-16, 2013, Sofitel Hotel, San Francisco, USA

IEEE IRI 2013 Main Conference Program

August 14, 2013, Wednesday (Day 1)

8:00 am - 5:00 pm: Conference Registration (Conference Registration Desk at Ballroom Foyer)

7:30-8:15am: Informal Meet & Greet - Breakfast (Veranda)

8:15-8:30am: Welcome, Conference Opening Remarks (Bordeaux)

8:30-9:30am: KEYNOTE 1: Professor Lotfi A. Zadeh, UC Berkeley, CA, USA. Toward a Restriction-Centered Theory of Truth and Meaning (Bordeaux)

9:30–9:50am: Break (Ballroom Foyer)

9:50am-11:50am: Session A1

Session A11 (Grand Salon): Information Security & Privacy. Chair: Du Zhang, California State University, Sacramento, USA

FIEP: An Initial Design of A Firewall Information Exchange Protocol [20] (Application Paper). Sandeep Reddy Pedditi, Du Zhang and Chung-E Wang, California State University, Sacramento, USA

Predicting Susceptibility to Social Bots on Twitter [33] (Regular Research paper). Randall Wald (1), Taghi M. Khoshgoftaar (1), Amri Napolitano (1) and Chris Sumner (2). (1) Florida Atlantic University, USA; (2) Online Privacy Foundation, USA

Simulation-Based Validation for Smart Grid Environments [85] (Regular Research paper). Wonkyu Han, Michael Mabey and Gail-Joon Ahn, Arizona State University, USA

A Moving Target Defense Approach for Protecting Resource-Constrained Distributed Devices [146] (Regular Research paper). Valentina Casola (1), Alessandra De Benedictis (1) and Massimiliano Albanese (2). (1) University of Naples Federico II, Italy; (2) George Mason University, USA

Session A12 (Blue

Bibliometric Study in Support of Norway's Strategy for International Research Collaboration

Bibliometric Study in Support of Norway's Strategy for International Research Collaboration Final report Bibliometric Study in Support of Norway's Strategy for International Research Collaboration Final report © The Research Council of Norway 2014 The Research Council of Norway P.O.Box 2700 St. Hanshaugen N–0131 OSLO Telephone: +47 22 03 70 00 Telefax: +47 22 03 70 01 [email protected] www.rcn.no/english The report can be ordered at: www.forskningsradet.no/publikasjoner or green number telefax: +47 800 83 001 Design cover: Design et cetera AS Printing: 07 Gruppen/The Research Council of Norway Number of copies: 150 Oslo, March 2014 ISBN 978-82-12-03310-8 (print) ISBN 978-82-12-03311-5 (pdf) Bibliometric Study in Support of Norway’s Strategy for International Research Collaboration Final Report March 14, 2014 Authors Alexandre Beaudet David Campbell Grégoire Côté Stefanie Haustein Christian Lefebvre Guillaume Roberge Other contributors Philippe Deschamps Leroy Fife Isabelle Labrosse Rémi Lavoie Aurore Nicol Bastien St-Louis Lalonde Matthieu Voorons Contact information Grégoire Côté, Vice-President, Bibliometrics [email protected] Brussels | Montreal | Washington Bibliometric Study in Support of Norway’s Strategy for Final Report International Research Collaboration Contents Contents .................................................................................................................. i Tables ................................................................................................................... iii -

On the Necessity of Multiple University Rankings

COLLNET Journal of Scientometrics and Information Management. ISSN: 0973-7766 (Print), 2168-930X (Online). DOI: 10.1080/09737766.2018.1550043

On the necessity of multiple university rankings. Lefteris Angelis, Nick Bassiliades, Yannis Manolopoulos

Nowadays university rankings are ubiquitous commodities; a plethora of them is published every year by private enterprises, state authorities and universities. University rankings are very popular to governments, journalists, university administrations and families as well. At the same time, they are heavily criticized as being very subjective and contradictory to each other. University rankings have been studied with respect to political, educational and data management aspects. In this paper, we focus on a specific research question regarding the alignment of some well-known such rankings, ultimately targeting to investigate the usefulness of the variety of all these rankings. First, we describe in detail the methodology to collect and homogenize the data and, second, we statistically analyze these data to examine the correlation among the different rankings. The results show that despite their statistically significant correlation, there are many cases of high divergence and instability, which can be reduced by ordered categorization. Our conclusion is that if, in principle, someone accepts the reliability of university rankings, the necessity and the usefulness, of all of them is questionable since only few of them could be sufficient representatives of the whole set. The overabundance of university rankings is especially conspicuous for the top universities.

Lefteris Angelis*, School of Informatics, Aristotle University of Thessaloniki, Thessaloniki 54124, Greece, [email protected]

Nick Bassiliades, School of Informatics, Aristotle University of Thessaloniki, Thessaloniki 54124

Proposal Evaluation Panel (PEP) and Site Characterization Panel (SCP) Meeting: 19 – 21 June 2013 Santa Cruz, CA USA

Proposal Evaluation Panel (PEP) and Site Characterization Panel (SCP) Meeting: 19 – 21 June 2013 Santa Cruz, CA USA Proposal Evaluation Panel – PEP -------------------------------------------------------------------------------------------------------- Richard Arculus** Australian National University Jang-Jun Bahk Korea Institute of Geoscience & Mineral Resources (KIGAM) Jennifer Biddle University of Delaware Tim Bralower** Pennsylvania State University Beth Christensen Adelphi University Peter Clift Louisiana State University Adélie Delacour Université Jean Monnet Jörg Geldmacher GEOMAR-Helmholtz Centre for Ocean Research Marguerite Godarda Montpellier 2 University Verener Heuer University of Bremen Barbara John University of Wyoming Jun-ichi Kimurab JAMSTEC Dick Kroon* The University of Edinburgh Kathie Marsagliac California State University, Northridge Lisa McNeill University of Southampton Katsuyoshi Michibayashi Shizuoka University Tomoaki Morishita Kanazawa University Craig Moyerd Western Washington University Masafumi Murayama Kochi University Clive Neal University of Notre Dame Hiroshi Nishi Tohoku University Matt O'Regan Stockholm University Koichiro Obana Japan Agency for Marine-Earth Science and Technology (JAMSTEC) Stuart Robinson University College London Amelia Shevenell** University of South Florida Ashok Singhvi Physical Research Laboratory David Smith University of Rhode Island Michael Strasser** ETH Zurich Nabil Sultan IFREMER Yohey Suzuki The University of Tokyo Yoshinori Takano** Japan Agency for Marine-Earth Science -

NTS-Asia-Monograph-Coronavirus

Table of Contents Foreword 2 About the Authors 3 Acknowledgements 4 Introduction 5 Chapter One Pathogen Research Networks in China: Origins and Steady Development 8 Chapter Two Chinese Biosafety Level 4 Laboratories and Their Key International Linkages 21 Chapter Three Critical Assistance from Virology Networks Abroad 26 Chapter Four The Future of Chinese Virology Laboratories: China as “Number One”? 37 Appendix Graphs of Transnational Networks: China, the West and the Rest 42 Bibliography 51

NTS-Asia Monograph 1

Foreword

The importance of paying close attention to health security has become more urgent than ever as the world continues to deal with the devastating impact of the COVID-19 pandemic. Since its outbreak in 2020, the pandemic caused by a novel coronavirus, SARS-CoV-2, has already resulted in millions of lives lost and inflicted untold human misery and suffering on people globally. The Centre for Non-Traditional Security (NTS) Studies of the S. Rajaratnam School of International Studies (RSIS), Nanyang Technological University, is one of the leading centres in Asia that highlights the critical linkages between non-traditional security challenges, like climate change and health security, and national and global security. As we have seen, the COVID-19 pandemic is more than a public health emergency of international concern. Its severe impacts cut across economic security, food security, environmental security and personal security, among others. As the international community continues to grapple with the consequential impact of COVID-19, it is sobering to note that other pandemics are expected to emerge. Thus, the need to advance the global agenda of pandemic preparedness and response cannot be overstated given the evolving nature of emerging infectious diseases.

Ocean Science in Canada: Meeting the Challenge, Seizing the Opportunity

OCEAN SCIENCE IN CANADA: MEETING THE CHALLENGE, SEIZING THE OPPORTUNITY Appendices Science Advice in the Public Interest OCEAN SCIENCE IN CANADA: MEETING THE CHALLENGE, SEIZING THE OPPORTUNITY The Expert Panel on Canadian Ocean Science ii Ocean Science in Canada: Meeting the Challenge, Seizing the Opportunity Table of Contents Appendix A Additional Information on Canada’s Capacity in Ocean Science .......................................1 A1 Human Capacity............................................................................................................................................ 1 A2 Organizations and Networks .......................................................................................................................... 2 A3 Ocean Science Funding ................................................................................................................................. 3 Appendix B Bibliometric Comparisons of Canada to Other Leading Countries in Ocean Science .....5 B1 Bibliometric Entities ....................................................................................................................................... 5 B2 Canada’s Position in Ocean Science Output Relative to Other Leading Countries by Research Theme ...... 5 B3 Collaboration Between Canadian Organizations in Ocean Science .............................................................. 11 B4 Collaboration Between International Organizations in Ocean Science ......................................................... 13 B5 Canada’s International -

INSTITUTE of HUMAN GENETICS December 2013

INSTITUTE OF HUMAN GENETICS Scientific Report CNRS UPR 1142 - MONTPELLIER - FRANCE http://www.igh.cnrs.fr December 2013

LAYOUT & DESIGN Catherine Larose, Communication and Training Program, IGH Montpellier

PICTURES Office de tourisme Montpellier; Cyril Sarrauste de Menthière, IGH Montpellier

COVER ILLUSTRATION Stem Cell Reports 2013, Drosophila ovarioles showing the presence of germline stem cells (GSCs) at the anterior tip of the germarium in wild-type ovarioles (long structures) and the lack of GSCs in ovarioles mutant for the CCR4 deadenylase (short structures). Staining was with DAPI (blue), anti-Vasa as a marker of germ cells (red), and 1B1 to label the spherical spectrosome in GSCs (green). The CCR4 deadenylase is required for GSC self-renewal through its role in translational repression of differentiation mRNAs. For more information on how CCR4 acts in GSC self-renewal and on its interactors and mRNA target. Simonelig, M., IGH, Montpellier http://www.igh.cnrs.fr

CONTACT / DIRECTION Institut de Génétique Humaine Dr. Giacomo CAVALLI 141 Rue de la Cardonille 34396 MONTPELLIER cedex 5 FRANCE Phone : +33 (0)4 34 35 99 04 / + 33 (0)4 34 35 99 70 Fax : +33 (0)4 34 35 99 99 [email protected]

Contents Introduction 2 GENOME DYNAMICS Department 8 Giacomo Cavalli 9 Séverine Chambeyron 11 Jérôme Déjardin 13 Bernard de Massy 15 Reini Fernandez de Luco 17 Nicolas Gilbert 19 Rosemary Kiernan 21 Marcel Méchali 23 GENETICS & DEVELOPMENT Department 26 Brigitte Boizet 27 Jean-Maurice Dura 29 Anne Fernandez & Ned Lamb 31 Kzrysztof Rogowski

INSTITUTE of HUMAN GENETICS August 2011

INSTITUTE OF HUMAN GENETICS Scientific Report August 2011 CNRS UPR 1142 - MONTPELLIER - FRANCE http://www.igh.cnrs.fr Contents Introduction 2 GENOME DYNAMICS Department 8 Ounissa Aït Ahmed 9 Giacomo Cavalli 11 Séverine Chambeyron 13 Jérôme Déjardin 15 Bernard de Massy 17 Nicolas Gilbert 19 Rosemary Kiernan 21 Marcel Méchali 23 GENETICS & DEVELOPMENT Department 26 Brigitte Boizet 27 Jean-Maurice Dura 29 Anne Fernandez & Ned Lamb 31 Kzrysztof Rogowski 33 Martine Simonelig 35 MOLECULAR BASES OF HUMAN DISEASES Department 38 Monsef Benkirane 39 Angelos Constantinou 41 Pierre Corbeau 43 Dominique Giorgi & Sylvie Rouquier 45 Marie-Paule Lefranc 47 Sylvain Lehmann 49 Domanico Maiorano 51 Philippe Pasero 53 ADMINISTRATION AND OTHER SERVICES 56 SEMINAR SPEAKERS 66 PUBLICATIONS 73 Giacomo Cavalli Director Philippe Pasero Associate Director Brigitte Mangoni-Jory Administrator RESEARCH Administration Services « Genome Dynamics » Department Information technology Secretariat Assistant Director : B. De Massy department Anne-Pascale Botonnet Giacomo Cavalli : Chromatin and Cell Biology Guillaume Gielly - Jacques Faure Marcel Méchali : Réplication et Genome Dynamics Administrative Secretariat Web development & Jérôme Dejardin : Biology of Repetitive Silke Conquet Iconography Sequences Cyril Sarrauste de Menthière Séverine Chambeyron : RNA Silencing and Control of Transposition Financial Management Bernard De Massy : Meiosis and Sahondra Rakotondramasy Cell Imaging Facility Recombination Marie-Claire Merriot Julien Cau Ounissa Aït Ahmed : Meiosis and Chromosome -

Fostering of Advanced Female Scientists at Ochanomizu University - Be the Next Marie Curie!

【Name of Institution】 Division of Advanced Sciences, Graduate School of Humanities and Sciences, Ochanomizu University

【Project Title】 Fostering of Advanced Female Scientists at Ochanomizu University - Be the Next Marie Curie! -

【Project Outline】 In this program, students are sent to European research institutes where many female researchers have been raised to national prominence. The aim of the program corresponds to the Third Phase of Basic Program for Sciences and Technology, which promotes raising the ratio of newly hired female researchers in natural science institutes to 25%. This three-step program also aims to establish a successful model of human resource development that can be adopted within and beyond our institute. Three characteristics of the program are stated below. 1) This program is designed to broaden the academic horizons of the students. In this program, students majoring in physics or chemistry have to take subjects from fields related to their study. 2) The selected students will be enrolled in a support program in which they will take various courses such as language training, presentation skills, crisis management and cross-cultural understanding to acquire the basis of self-presentation and a global view. 3) In conjunction with the first step of this program, in which master’s students will participate in the one-semester (four-month) overseas training program, the second step, the overseas research program for doctoral students, will accomplish its overall goal of effective human resource development. From experience during the first step of the program, students will become aware of the importance of broadening their own views and may exert themselves in their studies.

Project Fact Sheet

ERASMUS MUNDUS EXTERNAL COOPERATION WINDOW (EM ECW) Call for Proposals: EACEA/35/08 - Lot 1 (Algeria, Morocco and Tunisia) Coordinating Institution: Montpellier 2 University Sciences and Technology, France Members of the partnership: University of Liege - Belgium University of Cadiz - Spain University of the Balearic Islands - Spain Aix-Marseille 2 University - France Montpellier SupAgro - France Montpellier 1 University - France Paul Valéry Montpellier 3 University - France University of Perpignan Via Domitia - France University of Genoa - Italy University of Nice Sophia Antipolis - France Abderrahmane Mira University of Bejaïa - Algeria Mentouri University of Constantine - Algeria University of Oran - Algeria Cadi Ayyad University of Marrakech - Morocco Mohamed V Agdal University - Morocco Abdelmalek Essaâdi University - Morocco University of Sfax - Tunisia University of Sousse - Tunisia University of 7th of November at Carthage – Tunisia Associates: Academic Agency of Francophonie (A.U.F.) - France European University center of Montpellier and Languedoc-Roussillon - France Natura Network - Czech Republic Euro-mediterrranean University Téthys - France Association CONTACT - France Provence-Alpes-Côte d’Azur Regional Council - France Languedoc-Roussillon Regional Council - France Montpellier Metropolitan Area Authority - France DERBI Cluster - France Technology Park of Sousse - Tunisia National Agronomic Institute of Tunisia (INAT) - Tunisia National High School of Forestry (ENFI) - Morocco National School of agriculture of Meknes (ENA)