On the Necessity of Multiple University Rankings

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Human Interaction with Technology for Working, Communicating, and Learning: Advancements

An Excellent Addition to Your Library! Released: December 2011 Human Interaction with Technology for Working, Communicating, and Learning: Advancements Anabela Mesquita (ISCAP/IPP and Algoritmi Centre, University of Minho, Portugal) The way humans interact with technology is undergoing a tremendous change. It is hard to imagine the lives we live today without the benefits of technology that we take for granted. Applying research in computer science, engineering, and information systems to non-technical descriptions of technology, such as human interaction, has shaped and continues to shape our lives. Human Interaction with Technology for Working, Communicating, and Learning: Advancements provides a framework for conceptual, theoretical, and applied research in regards to the relationship between technology and humans. This book is unique in the sense that it does not only cover technology, but also science, research, and the relationship between these fields and individuals’ experience. This book is a must have for anyone interested in this research area, as it provides a voice for all users and a look into our future. Topics Covered: • Anthropological Consequences • Philosophy of Technology • Experiential Learning • Responsibility of Artificial Agents • Influence of Gender on Technology • Technological Risks and Their Human Basis • Knowledge Management • Technology Assessments • Perceptions and Conceptualizations • Technology Ethics of Technology • Phenomenology of E-Government ISBN: 9781613504659; © 2012; 421 pp. Print: US $175.00 | Perpetual: US $265.00 | Print + Perpetual: US $350.00 Market: This premier publication is essential for all academic and research library reference collections. It is a crucial tool for academicians, researchers, and practitioners and is ideal for classroom use. Anabela Mesquita is a professor at the Institute of Administration and Accountancy (ISCAP)/Polytechnic School of Porto (IPP), Portugal.

IREG Inventory of International University Rankings

IREG Inventory of International University Rankings 2021 The purpose of IREG Observatory on Academic Ranking and Excellence is to strengthen public awareness and understanding of university rankings and their role in reflecting quality of higher education and academic excellence. from the IREG Observatory Statute IREG Observatory on Academic Ranking and Excellence (IREG stands for International Ranking Expert Group) www.ireg-observatory.org IREG Inventory of International University Rankings 2021 Brussels-Warsaw 2021 www.ireg-observatory.org/en/inventory-international-rankings The „IREG Inventory of International University Rankings” was prepared by the Perspektywy Education Foundation at the request of the IREG Observatory on Academic Ranking and Excellence whose aim is the improvement of the quality of academic rankings and quality of higher education. IREG Observatory on Academic Ranking and Excellence rue Washington 40 1050 Brussels, Belgium www.ireg-observatory.org PERSPEKTYWY Education Foundation 31 Nowogrodzka Str., 00-511 Warsaw, Poland www.perspektywy.org © IREG Observatory on Academic Ranking and Excellence This publication is based on information made available by ranking organizations. The publisher has made every effort to ensure the accuracy and completeness of the information contained in this publication, however, it takes no responsibility for any errors or omissions. The information listed is subject to change. Edited by Waldemar Siwinski, Richard Holmes, Justyna Kopanska DTP: Artur Zebrowski, Karolina Sitnicka This publication is available at www.ireg-observatory.org/en/inventory-international-rankings Warsaw 2021 ISBN: 978-83-61239-61-1 3 Executive summary IREG Observatory on Academic Ranking and Excellence initiated a project called “IREG Inventory of International University Rankings (Global and Regional)” as a part of its statutory mission. -

Second Call for Papers

SECOND CALL FOR PAPERS 3rd FRENCH CONFERENCE ON SOCIAL AND ENVIRONMENTAL ACCOUNTING RESEARCH (3rd CSEAR France Conference) June 11-12, 2015 ESSEC Business School, France The Centre for Social and Environmental Accounting Research (CSEAR) has held an annual conference in the UK (often referred to as the Summer School) for more than two decades, as well as in other parts of the world for several years in Europe, Australia, New Zealand, and in both North and South Americas. Similar to other CSEAR conferences, the 3rd CSEAR France Conference will be a deliberately informal discussion forum gathering researchers, teachers, students and practitioners at all levels of experience to further enhance research in new instruments, policies and strategies related to social and environmental accounting in the very widest sense. The spirit of the conference is interdisciplinary and focuses on a high level of interaction, discussion and debate in a friendly and supportive atmosphere. As such, research papers from perspectives beyond accounting and management control such as organizational theory, marketing, finance, strategy, etc., are welcome. The conference will provide participants an opportunity to present their research projects, ranging from discussing preliminary findings to full papers. Submissions are welcome in either French or English. For this 3rd CSEAR France conference, we are pleased to welcome Professor Brendan O’Dwyer, Professor of Accounting at the University of Amsterdam, as our plenary speaker. For the practitioner forum, we are equally pleased to welcome Adam Koniuszewski, Chief Operating Officer at Green Cross International. There will also be a Special Issue of Advances in Environmental Accounting and Management on the “Current Developments in Social and Environmental Accounting” (a call for papers has been issued and is available on the conference website). -

Performance Ranking of Scientific Papers for World Universities

Performance Ranking of Scientific Papers for World Universities Website: http://nturanking.lis.ntu.edu.tw/ Country: Taiwan. The ranking has been published by National Taiwan University Ranking since 2007. It is based on a set of bibliometric indicators (8, grouped into 3 areas) derived from the Clarivate Analytics database. The areas cover the Productivity, Impact, and Excellence of scientific research, weighted 25%, 35%, and 40% respectively. Seven of the eight indicators depend on the size of the university, so this ranking is strongly influenced by institutional size.

| Ranking | Year | Universities in ranking | Bari's position worldwide | Italian universities in ranking | Bari's position among Italian universities | Top university in Italy |
|---|---|---|---|---|---|---|
| Performance Ranking of Scientific Papers for World Universities | 2020 | 500 | 360 | 26 | 15 | Padova |
| | 2019 | 500 | 374 | 28 | 15 | Padova |
| | 2018 | 500 | 372 | 28 | 14 | Padova |

CWTS Leiden Ranking Website: https://www.leidenranking.com/ Country: Netherlands. The CWTS Leiden ranking is an international ranking based on bibliometric data and analyses, curated by the Centre for Science and Technology Studies (CWTS) of Leiden University since 2007. The database used to obtain the bibliometric data is that of Clarivate Analytics (Web of Science). Unlike other research rankings, such as the Taiwan ranking and URAP, the indicators produced by the Leiden ranking are not aggregated into a single summary value; for each indicator, a ranking is presented that does or does not take into account the degree of ownership (fraction counting vs full counting).

Direzione Generale – Staff Sviluppo Organizzativo, Programmazione, Controllo e Valutazione – U.O. Statistiche di Ateneo (General Directorate, Organizational Development, Planning, Control and Evaluation Staff, University Statistics Unit), Palazzo Ateneo, P.zza Umberto I, 70121 Bari (Italy) – tel. 080-5714001 - [email protected] - www.uniba.it

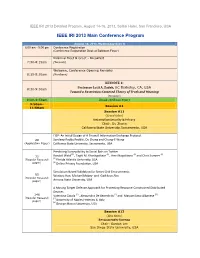

IEEE IRI 2013 Main Conference Program

IEEE IRI 2013 Detailed Program, August 14-16, 2013, Sofitel Hotel, San Francisco, USA IEEE IRI 2013 Main Conference Program August 14, 2013, Wednesday (Day 1) 8:00 am - 5:00 pm Conference Registration (Conference Registration Desk at Ballroom Foyer) Informal Meet & Greet - Breakfast 7:30-8:15am (Veranda) Welcome, Conference Opening Remarks 8:15-8:30am (Bordeaux) KEYNOTE 1: Professor Lotfi A. Zadeh, UC Berkeley, CA, USA 8:30-9:30am Toward a Restriction-Centered Theory of Truth and Meaning (Bordeaux) 9:30–9:50am Break (Ballroom Foyer) 9:50am- Session A1 11:50am Session A11 (Grand Salon) Information Security & Privacy Chair: Du Zhang California State University, Sacramento, USA FIEP: An Initial Design of A Firewall Information Exchange Protocol 20 Sandeep Reddy Pedditi, Du Zhang and Chung-E Wang (Application Paper) California State University, Sacramento, USA Predicting Susceptibility to Social Bots on Twitter (1) (1) (1) (2) 33 Randall Wald , Taghi M. Khoshgoftaar , Amri Napolitano and Chris Sumner (1) (Regular Research Florida Atlantic University, USA paper) (2) Online Privacy Foundation, USA Simulation-Based Validation for Smart Grid Environments 85 Wonkyu Han, Michael Mabey and Gail-Joon Ahn (Regular Research paper) Arizona State University, USA A Moving Target Defense Approach for Protecting Resource-Constrained Distributed Devices 146 Valentina Casola (1) , Alessandra De Benedictis (1) and Massimiliano Albanese (2) (Regular Research (1) paper) University of Naples Federico II, Italy (2) George Mason University, USA Session A12 (Blue -

Bibliometric Study in Support of Norway's Strategy for International Research Collaboration

Bibliometric Study in Support of Norway's Strategy for International Research Collaboration Final report Bibliometric Study in Support of Norway's Strategy for International Research Collaboration Final report © The Research Council of Norway 2014 The Research Council of Norway P.O.Box 2700 St. Hanshaugen N–0131 OSLO Telephone: +47 22 03 70 00 Telefax: +47 22 03 70 01 [email protected] www.rcn.no/english The report can be ordered at: www.forskningsradet.no/publikasjoner or green number telefax: +47 800 83 001 Design cover: Design et cetera AS Printing: 07 Gruppen/The Research Council of Norway Number of copies: 150 Oslo, March 2014 ISBN 978-82-12-03310-8 (print) ISBN 978-82-12-03311-5 (pdf) Bibliometric Study in Support of Norway’s Strategy for International Research Collaboration Final Report March 14, 2014 Authors Alexandre Beaudet David Campbell Grégoire Côté Stefanie Haustein Christian Lefebvre Guillaume Roberge Other contributors Philippe Deschamps Leroy Fife Isabelle Labrosse Rémi Lavoie Aurore Nicol Bastien St-Louis Lalonde Matthieu Voorons Contact information Grégoire Côté, Vice-President, Bibliometrics [email protected] Brussels | Montreal | Washington Bibliometric Study in Support of Norway’s Strategy for Final Report International Research Collaboration Contents Contents .................................................................................................................. i Tables ................................................................................................................... iii -

Analyzing the Activities of Visitors of the Leiden Ranking Website

STI 2018 Conference Proceedings Proceedings of the 23rd International Conference on Science and Technology Indicators All papers published in this conference proceedings have been peer reviewed through a peer review process administered by the proceedings Editors. Reviews were conducted by expert referees to the professional and scientific standards expected of a conference proceedings. Chair of the Conference Paul Wouters Scientific Editors Rodrigo Costas Thomas Franssen Alfredo Yegros-Yegros Layout Andrea Reyes Elizondo Suze van der Luijt-Jansen The articles of this collection can be accessed at https://hdl.handle.net/1887/64521 ISBN: 978-90-9031204-0 © of the text: the authors © 2018 Centre for Science and Technology Studies (CWTS), Leiden University, The Netherlands This ARTICLE is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. Analyzing the activities of visitors of the Leiden Ranking website Nees Jan van Eck and Ludo Waltman [email protected]; [email protected] Centre for Science and Technology Studies, Leiden University, PO box 905, 2300 AX Leiden (The Netherlands) Introduction In the scientometric literature, university rankings are discussed primarily from a methodological point of view (e.g., Waltman et al., 2012). In this paper, we take a different perspective. In our view, constructing a high-quality university ranking requires not only an advanced understanding of methodological issues but also a sufficient level of knowledge of the way in which university rankings are used. The use of university rankings has been studied using questionnaires and interviews (e.g., Hazelkorn, 2015). We take an alternative approach by analyzing the activities of visitors of a university ranking website.

Proposal Evaluation Panel (PEP) and Site Characterization Panel (SCP) Meeting: 19 – 21 June 2013 Santa Cruz, CA USA

Proposal Evaluation Panel (PEP) and Site Characterization Panel (SCP) Meeting: 19 – 21 June 2013 Santa Cruz, CA USA Proposal Evaluation Panel – PEP -------------------------------------------------------------------------------------------------------- Richard Arculus** Australian National University Jang-Jun Bahk Korea Institute of Geoscience & Mineral Resources (KIGAM) Jennifer Biddle University of Delaware Tim Bralower** Pennsylvania State University Beth Christensen Adelphi University Peter Clift Louisiana State University Adélie Delacour Université Jean Monnet Jörg Geldmacher GEOMAR-Helmholtz Centre for Ocean Research Marguerite Godarda Montpellier 2 University Verener Heuer University of Bremen Barbara John University of Wyoming Jun-ichi Kimurab JAMSTEC Dick Kroon* The University of Edinburgh Kathie Marsagliac California State University, Northridge Lisa McNeill University of Southampton Katsuyoshi Michibayashi Shizuoka University Tomoaki Morishita Kanazawa University Craig Moyerd Western Washington University Masafumi Murayama Kochi University Clive Neal University of Notre Dame Hiroshi Nishi Tohoku University Matt O'Regan Stockholm University Koichiro Obana Japan Agency for Marine-Earth Science and Technology (JAMSTEC) Stuart Robinson University College London Amelia Shevenell** University of South Florida Ashok Singhvi Physical Research Laboratory David Smith University of Rhode Island Michael Strasser** ETH Zurich Nabil Sultan IFREMER Yohey Suzuki The University of Tokyo Yoshinori Takano** Japan Agency for Marine-Earth Science -

NTS-Asia-Monograph-Coronavirus

Table of Contents Foreword 2 About the Authors 3 Acknowledgements 4 Introduction 5 Chapter One Pathogen Research Networks in China: Origins and Steady Development 8 Chapter Two Chinese Biosafety Level 4 Laboratories and Their Key International Linkages 21 Chapter Three Critical Assistance from Virology Networks Abroad 26 Chapter Four The Future of Chinese Virology Laboratories: China as “Number One”? 37 Appendix Graphs of Transnational Networks: China, the West and the Rest 42 Bibliography 51 NTS-Asia Monograph 1 Foreword The importance of paying close attention to health security has become more urgent than ever as the world continues to deal with the devastating impact of COVID-19 pandemic. Since its outbreak in 2020, the pandemic caused by a novel coronavirus SARS-Cov-2 has already resulted in millions of lives lost and inflicted untold human misery and suffering to people globally. The Centre for Non-Traditional Security (NTS) Studies of the S. Rajaratnam School of International Studies (RSIS), Nanyang Technological University, is one of the leading centres in Asia that highlights the critical linkages between non-traditional security challenges, like climate change and health security, to national and global security. As we have seen, the COVID-19 pandemic is more than a public health emergency of international concern. Its severe impacts cut across economic security, food security, environmental security and personal security, among others. As the international community continues to grapple with the consequential impact of COVID-19, it is sobering to note that other pandemics are expected to emerge. Thus, the need to advance the global agenda of pandemic preparedness and response cannot be overstated given the evolving nature of emerging infectious diseases. -

Proceedings in One File

1st Symposium on Information Management and Big Data 8th, 9th and 10th September 2014 Cusco - Peru PROCEEDINGS © SIMBig, Andina University of Cusco and the authors of individual articles. Proceeding Editors: J. A. Lossio-Ventura and H. Alatrista-Salas TABLE OF CONTENTS SIMBig 2014 Conference Organization Committee SIMBig 2014 Conference Reviewers SIMBig 2014 Paper Contents SIMBig 2014 Keynote Speaker Presentations Full and Short Papers The SIMBig 2014 Organization Committee confirms that full and concise papers accepted for this publication: • Meet the definition of research in relation to creativity, originality, and increasing humanity's stock of knowledge; • Are selected on the basis of a peer review process that is independent, qualified expert review; • Are published and presented at a conference having national and international significance as evidenced by registrations and participation; and • Are made available widely through the Conference web site. Disclaimer: The SIMBig 2014 Organization Committee accepts no responsibility for omissions and errors. SIMBig 2014 Organization Committee GENERAL CHAIR • Juan Antonio LOSSIO VENTURA, Montpellier 2 University, LIRMM, Montpellier, France • Hugo ALATRISTA SALAS, UMR TETIS, Irstea, France LOCAL CHAIR • Cristhian GANVINI VALCARCEL Andina University of Cusco, Peru • Armando FERMIN PEREZ, National University of San Marcos, Peru • Cesar A. BELTRAN CASTAÑON, GRPIAA, Pontifical Catholic University of Peru • Marco RIVAS PEÑA, National University of San Marcos, Peru SIMBig 2014 Conference Reviewers • Salah Ait-Mokhtar, Xerox Research Centre Europa, FRANCE • Jérôme Azé, LIRMM - University of Montpellier 2, FRANCE • Cesar A. Beltrán Castañón, GRPIAA - Pontifical Catholic University of Peru, PERU • Sandra Bringay, LIRMM - University of Montpellier 3, FRANCE • Oscar Corcho, Ontology Engineering Group - Polytechnic University of Madrid, SPAIN • Gabriela Csurka, Xerox Research Centre Europa, FRANCE • Mathieu d'Aquin, Knowledge Media institute (KMi) - Open University, UK • Ahmed A.

Ocean Science in Canada: Meeting the Challenge, Seizing the Opportunity

OCEAN SCIENCE IN CANADA: MEETING THE CHALLENGE, SEIZING THE OPPORTUNITY Appendices Science Advice in the Public Interest OCEAN SCIENCE IN CANADA: MEETING THE CHALLENGE, SEIZING THE OPPORTUNITY The Expert Panel on Canadian Ocean Science ii Ocean Science in Canada: Meeting the Challenge, Seizing the Opportunity Table of Contents Appendix A Additional Information on Canada’s Capacity in Ocean Science .......................................1 A1 Human Capacity............................................................................................................................................ 1 A2 Organizations and Networks .......................................................................................................................... 2 A3 Ocean Science Funding ................................................................................................................................. 3 Appendix B Bibliometric Comparisons of Canada to Other Leading Countries in Ocean Science .....5 B1 Bibliometric Entities ....................................................................................................................................... 5 B2 Canada’s Position in Ocean Science Output Relative to Other Leading Countries by Research Theme ...... 5 B3 Collaboration Between Canadian Organizations in Ocean Science .............................................................. 11 B4 Collaboration Between International Organizations in Ocean Science ......................................................... 13 B5 Canada’s International -

Strategically Engaging with International Rankings – Experiences from Three Universities

STRATEGICALLY ENGAGING WITH INTERNATIONAL RANKINGS – EXPERIENCES FROM THREE UNIVERSITIES The Illuminate Consulting Group 22 January 2019 ICG © 2019 AIEA Conference International Rankings – 22 February 2019 1 DISCLAIMER • This presentation was delivered by ICG, Auburn University, Case Western Reserve University, and the University of Rochester on 22 February 2019 at the AIEA Conference in San Francisco. • The presentation shall be considered incomplete without oral clarification. • The opinions expressed in this presentation are those of the authors alone. • ICG makes no warranty regarding any claim or data presented in this presentation, and does not take any responsibility for any third party acting upon information contained in this presentation. • Copyright ICG, 2019. All rights reserved. ICG © 2019 AIEA Conference International Rankings – 22 February 2019 2 CONTENTS Introduction and Housekeeping Overview of International Rankings Auburn, CWRU, and Rochester in Key International Rankings Auburn’s Rankings Journey Rochester's Rankings Journey CWRU's Rankings Journey Panelist Discussion Audience Discussion ICG © 2019 AIEA Conference International Rankings – 22 February 2019 3 PRESENTERS AND CHAIR: BIOS Bios • David Fleshler serves as the Vice Provost for International Affairs at Case Western Reserve University. He has been involved in a number of leadership roles in international education, including service with the AIEA. David received his Bachelor's degree from the University of Michigan, and a JD from Boston College Law School. • Andy Gillespie serves as the Assistant Provost for International Programs at Auburn University. He has been involved in a number of leadership roles in international education, including service with the AIEA. He holds a Bachelor’s degree in Natural Resource Management from the State University of New York, a Master’s degree in Forest Biology from the University of New Hampshire, and a Ph.D.