Using Machine Learning to Understand Text for Pharmacovigilance: a Systematic Review

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Drug Safety in Translational Paediatric Research: Practical Points to Consider for Paediatric Safety Profiling and Protocol Development: a Scoping Review

pharmaceutics Review Drug Safety in Translational Paediatric Research: Practical Points to Consider for Paediatric Safety Profiling and Protocol Development: A Scoping Review Beate Aurich 1 and Evelyne Jacqz-Aigrain 1,2,* 1 Department of Pharmacology, Saint-Louis Hospital, 75010 Paris, France; [email protected] 2 Paris University, 75010 Paris, France * Correspondence: [email protected] Abstract: Translational paediatric drug development includes the exchange between basic, clinical and population-based research to improve the health of children. This includes the assessment of treatment related risks and their management. The objectives of this scoping review were to search and summarise the literature for practical guidance on how to establish a paediatric safety specification and its integration into a paediatric protocol. PubMed, Embase, Web of Science, and websites of regulatory authorities and learned societies were searched (up to 31 December 2020). Retrieved citations were screened and full texts reviewed where applicable. A total of 3480 publications were retrieved. No article was identified providing practical guidance. An introduction to the practical aspects of paediatric safety profiling and protocol development is provided by combining health authority and learned society guidelines with the specifics of paediatric research. The paediatric safety specification informs paediatric protocol development by, for example, highlighting the need for a pharmacokinetic study prior to a paediatric trial. It also informs safety related protocol sections such as exclusion criteria, safety monitoring and risk management. In conclusion, safety related protocol sections require an understanding of the paediatric safety specification. Citation: Aurich, B.; Jacqz-Aigrain, E. Drug Safety in Translational Paediatric Research: Practical Points to Consider for Paediatric Safety -

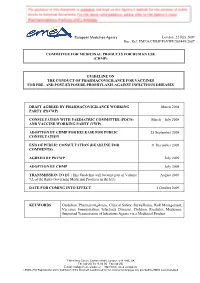

Guideline on the Conduct of Pharmacovigilance for Vaccines for Pre- and Post-Exposure Prophylaxis Against Infectious Diseases

European Medicines Agency London, 22 July 2009 Doc. Ref. EMEA/CHMP/PhVWP/503449/2007 COMMITTEE FOR MEDICINAL PRODUCTS FOR HUMAN USE (CHMP) GUIDELINE ON THE CONDUCT OF PHARMACOVIGILANCE FOR VACCINES FOR PRE- AND POST-EXPOSURE PROPHYLAXIS AGAINST INFECTIOUS DISEASES. Draft agreed by Pharmacovigilance Working Party (PhVWP): March 2008. Consultation with Paediatric Committee (PDCO) and Vaccine Working Party (VWP): March – July 2008. Adoption by CHMP for release for public consultation: 25 September 2008. End of public consultation (deadline for comments): 31 December 2008. Agreed by PhVWP: July 2009. Adoption by CHMP: July 2009. Transmission to EC: August 2009 (this Guideline will become part of Volume 9A of the Rules Governing Medicinal Products in the EU). Date for coming into effect: 1 October 2009. KEYWORDS Guideline, Pharmacovigilance, Clinical Safety, Surveillance, Risk Management, Vaccines, Immunisation, Infectious Diseases, Children, Paediatric Medicines, Suspected Transmission of Infectious Agents via a Medicinal Product 7 Westferry Circus, Canary Wharf, London, E14 4HB, UK Tel. (44-20) 74 18 84 00 Fax (44-20) E-mail: [email protected] http://www.emea.europa.eu ©EMEA 2009 Reproduction and/or distribution of this document is authorised for non commercial purposes only provided the EMEA is acknowledged TABLE OF CONTENTS EXECUTIVE SUMMARY 1. Introduction -

Guidance for Industry E2E Pharmacovigilance Planning

Guidance for Industry E2E Pharmacovigilance Planning U.S. Department of Health and Human Services Food and Drug Administration Center for Drug Evaluation and Research (CDER) Center for Biologics Evaluation and Research (CBER) April 2005 ICH Additional copies are available from: Office of Training and Communication Division of Drug Information, HFD-240 Center for Drug Evaluation and Research Food and Drug Administration 5600 Fishers Lane Rockville, MD 20857 (Tel) 301-827-4573 http://www.fda.gov/cder/guidance/index.htm or Office of Communication, Training and Manufacturers Assistance, HFM-40 Center for Biologics Evaluation and Research Food and Drug Administration 1401 Rockville Pike, Rockville, MD 20852-1448 http://www.fda.gov/cber/guidelines.htm (Tel) Voice Information System at 800-835-4709 or 301-827-1800 Contains Nonbinding Recommendations TABLE OF CONTENTS I. INTRODUCTION (1, 1.1) A. Background (1.2) B. Scope of the Guidance (1.3) II. SAFETY SPECIFICATION (2) -

Automatic Correction of Real-Word Errors in Spanish Clinical Texts

sensors Article Automatic Correction of Real-Word Errors in Spanish Clinical Texts Daniel Bravo-Candel 1, Jésica López-Hernández 1, José Antonio García-Díaz 1, Fernando Molina-Molina 2 and Francisco García-Sánchez 1,* 1 Department of Informatics and Systems, Faculty of Computer Science, Campus de Espinardo, University of Murcia, 30100 Murcia, Spain; [email protected] (D.B.-C.); [email protected] (J.L.-H.); [email protected] (J.A.G.-D.) 2 VÓCALI Sistemas Inteligentes S.L., 30100 Murcia, Spain; [email protected] * Correspondence: [email protected]; Tel.: +34-86888-8107 Abstract: Real-word errors are characterized by being actual terms in the dictionary. By providing context, real-word errors are detected. Traditional methods to detect and correct such errors are mostly based on counting the frequency of short word sequences in a corpus. Then, the probability of a word being a real-word error is computed. On the other hand, state-of-the-art approaches make use of deep learning models to learn context by extracting semantic features from text. In this work, a deep learning model was implemented for correcting real-word errors in clinical text. Specifically, a Seq2seq Neural Machine Translation Model mapped erroneous sentences to correct them. For that, different types of error were generated in correct sentences by using rules. Different Seq2seq models were trained and evaluated on two corpora: the Wikicorpus and a collection of three clinical datasets. The medicine corpus was much smaller than the Wikicorpus due to privacy issues when dealing with patient information. Citation: Bravo-Candel, D.; López-Hernández, J.; García-Díaz, -

Ecological Impacts of Veterinary Pharmaceuticals: More Transparency – Better Protection of the Environment

Ecological Impacts of Veterinary Pharmaceuticals: More Transparency – Better Protection of the Environment Avoiding Environmental Pollution from Veterinary Pharmaceuticals To reduce the contamination of air, soil, and bodies of water caused by veterinary medicinal products used in agriculture and livestock farming, effective measures must be taken throughout the entire life cycle of these products – from production and authorisation to application and disposal. All stakeholders – whether they are farmers, veterinarians, consumers, or political decision makers – are called upon to contribute to reducing contamination of the environment caused by pharmaceutical residues and to improving protection of the environment and human health. Appropriate measures include establishing “clean” production plants, developing pharmaceuticals with reduced environmental [...] pharmaceuticals more stringently, monitoring systematically their occurrence in the environment, converting animal husbandry practices to preserve animals’ health with a minimal use of antibiotics, and enforcing legal regulations to ensure implementation of all the measures outlined here. In the context of the revision of veterinary medicinal products legislation that is currently underway, this background paper focuses on three measures that would contribute to making information on the occurrence of veterinary drugs in the environment and their eco-toxicological effects more widely available and enhance protection of the environment from contamination with veterinary pharmaceuticals. -

Natural Language Toolkit Based Morphology Linguistics

Published by: International Journal of Engineering Research & Technology (IJERT) http://www.ijert.org ISSN: 2278-0181 Vol. 9 Issue 01, January-2020 Natural Language Toolkit based Morphology Linguistics Alifya Khan, Pratyusha Trivedi, Karthik Ashok, Prof. Kanchan Dhuri; Information Technology, Vidyalankar Institute of Technology, Mumbai, India Abstract— In the current scenario, there are different apps, websites, etc. to carry out different functionalities with respect to text such as grammar correction, translation of text, extraction of text from image or videos, etc. There is no app or a website where a user can get all these functions/features at one place and hence the user is forced to install different apps or visit different websites to carry out those functions. The proposed system identifies this problem and tries to overcome it by providing various text-based features at one place, so that the user will not have to hop from app to app or website to website to carry out various functions. [...] of text to different languages, grammar check, etc. Generally, all these functionalities are used in tandem with each other. For e.g., to read a sign board in a different language, the first step is to extract text from that image and translate it to any respective language as required. Hence, to do this one has to switch from application to application which can be time consuming. To overcome this problem, an integrated environment is built where all these functionalities are available. -

Practice with Python

CSI4108-01 ARTIFICIAL INTELLIGENCE Word Embedding / Text Processing Practice with Python 2018. 5. 11. Lee, Gyeongbok Contents • Word Embedding – Libraries: gensim, fastText – Embedding alignment (with two languages) • Text/Language Processing – POS Tagging with NLTK/koNLPy – Text similarity (jellyfish) Gensim • Open-source vector space modeling and topic modeling toolkit implemented in Python – designed to handle large text collections, using data streaming and efficient incremental algorithms – Usually used to make word vectors from a corpus • Tutorial is available here: – https://github.com/RaRe-Technologies/gensim/blob/develop/tutorials.md#tutorials – https://rare-technologies.com/word2vec-tutorial/ • Install – pip install gensim Gensim for Word Embedding • Logging • Input Data: a list of word lists – Example: I have a car , I like the cat → – For a list of sentences, you can make this by: • If your data is already preprocessed… – One sentence per line, separated by whitespace → LineSentence (just load the file) – Try with this: • http://an.yonsei.ac.kr/corpus/example_corpus.txt From https://radimrehurek.com/gensim/models/word2vec.html • If the input is in multiple files or file size is large: – Use custom iterator and yield From https://rare-technologies.com/word2vec-tutorial/ • gensim.models.Word2Vec Parameters – min_count: -
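The input formats described in the excerpt above (a list of word lists, and a custom iterator that uses `yield` for multiple or large files) can be sketched in plain Python. This is a minimal illustration and not the code from the slides: the names `tokenize_sentences` and `SentenceStream`, and the choice of whitespace tokenization, are assumptions made for this example.

```python
import os


def tokenize_sentences(raw_sentences):
    """Turn a list of sentence strings into a list of word lists.

    Whitespace splitting is an assumption; real corpora usually need a
    proper tokenizer.
    """
    return [sentence.split() for sentence in raw_sentences]


class SentenceStream:
    """Hypothetical helper: stream tokenized sentences from every file in a directory.

    Each file is expected to hold one sentence per line, with words
    separated by whitespace. Only one line is held in memory at a time.
    """

    def __init__(self, dirname):
        self.dirname = dirname

    def __iter__(self):
        # A fresh generator is created on every call, so the corpus can be
        # iterated several times (training typically makes multiple passes).
        for fname in sorted(os.listdir(self.dirname)):
            path = os.path.join(self.dirname, fname)
            if not os.path.isfile(path):
                continue
            with open(path, encoding="utf-8") as handle:
                for line in handle:
                    tokens = line.split()
                    if tokens:  # skip blank lines
                        yield tokens


# With gensim installed, either object could be passed as the `sentences`
# argument, for example: gensim.models.Word2Vec(sentences, min_count=1)
sentences = tokenize_sentences(["I have a car", "I like the cat"])
```

Defining `__iter__` on a class, rather than writing a bare generator function, is a deliberate choice here: a generator is exhausted after one pass, while an object with `__iter__` can be restarted each time the consumer loops over it.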

Question Answering by Bert

Running head: QUESTION AND ANSWERING USING BERT Question and Answering Using BERT Suman Karanjit, Computer Science Major, Minnesota State University Moorhead Link to GitHub: https://github.com/skaranjit/BertQA Table of Contents: Abstract; Introduction; SQuAD; BERT Explained; What is BERT?; Architecture; Input Processing; Getting Answer; Setting Up the Environment -

![iNLTK: Natural Language Toolkit for Indic Languages](https://docslib.b-cdn.net/cover/3654/arxiv-2009-12534v2-cs-cl-10-oct-2020-tasks-many-across-progress-exciting-seen-have-we-ilo-paes-akpetandde-language-deep-pre-trained-lack-speakers-billion-a-al-593654.webp)

iNLTK: Natural Language Toolkit for Indic Languages (arXiv:2009.12534v2 [cs.CL], 10 Oct 2020)

iNLTK: Natural Language Toolkit for Indic Languages Gaurav Arora Jio Haptik [email protected] Abstract We present iNLTK, an open-source NLP library consisting of pre-trained language models and out-of-the-box support for Data Augmentation, Textual Similarity, Sentence Embeddings, Word Embeddings, Tokenization and Text Generation in 13 Indic Languages. By using pre-trained models from iNLTK for text classification on publicly available datasets, we significantly outperform previously reported results. On these datasets, we also show that by using pre-trained models and data augmentation from iNLTK, we can achieve more than 95% of the previous best performance by using less than 10% of the training data. iNLTK is already being widely used by the community and has 40,000+ downloads, 600+ stars and 100+ forks on GitHub. [...] models, trained on a large corpus, which can provide a headstart for downstream tasks using transfer learning. Availability of such models is critical to build a system that can achieve good results in “low-resource” settings - where labeled data is scarce and computation is expensive, which is the biggest challenge for working on NLP in Indic Languages. Additionally, there’s lack of Indic languages support in NLP libraries like spacy, nltk - creating a barrier to entry for working with Indic languages. iNLTK, an open-source natural language toolkit for Indic languages, is designed to address these problems and to significantly lower barriers to doing NLP in Indic Languages by • sharing pre-trained deep language models, -

Unlocking the Power of Pharmacovigilance* an Adaptive Approach to an Evolving Drug Safety Environment

Unlocking the power of pharmacovigilance* An adaptive approach to an evolving drug safety environment PricewaterhouseCoopers’ Health Research Institute Contents: Executive summary; Background; The challenge to the pharmaceutical industry; Increased media scrutiny; Greater regulatory and legislative scrutiny; Investment in pharmacovigilance; The reactive nature of pharmacovigilance; Unlocking the power of pharmacovigilance; Organizational alignment; Operations management; Data management; Risk management; The three strategies of effective pharmacovigilance; Strategy 1: Align and clarify roles, responsibilities, and communications; Strategy 2: Standardize pharmacovigilance processes and data management; Strategy 3: Implement proactive risk minimization; Conclusion; Endnotes; Glossary. Executive summary The idea that controlled clinical trials can establish product safety and effectiveness is a core principle of the pharmaceutical industry. Neither the clinical trials process nor the approval procedures of the U.S. Food and Drug Administration [...] incapable of addressing shifts in public expectations and regulatory and media scrutiny. This reality has revealed issues in the four areas involved in patient safety operations: organizational alignment, operations management, data management, and [...] 2. Standardize pharmacovigilance processes and data management • Align operational activities across departments and across sites • Implement process-driven standard operating procedures, work instructions, and training -

Natural Language Processing Based Generator of Testing Instruments

California State University, San Bernardino CSUSB ScholarWorks Electronic Theses, Projects, and Dissertations Office of Graduate Studies 9-2017 NATURAL LANGUAGE PROCESSING BASED GENERATOR OF TESTING INSTRUMENTS Qianqian Wang Follow this and additional works at: https://scholarworks.lib.csusb.edu/etd Part of the Other Computer Engineering Commons Recommended Citation Wang, Qianqian, "NATURAL LANGUAGE PROCESSING BASED GENERATOR OF TESTING INSTRUMENTS" (2017). Electronic Theses, Projects, and Dissertations. 576. https://scholarworks.lib.csusb.edu/etd/576 This Project is brought to you for free and open access by the Office of Graduate Studies at CSUSB ScholarWorks. It has been accepted for inclusion in Electronic Theses, Projects, and Dissertations by an authorized administrator of CSUSB ScholarWorks. For more information, please contact [email protected]. A Project Presented to the Faculty of California State University, San Bernardino In Partial Fulfillment of the Requirements for the Degree Master of Science in Computer Science by Qianqian Wang September 2017 Approved by: Dr. Kerstin Voigt, Advisor, Computer Science and Engineering Dr. Tong Lai Yu, Committee Member Dr. George M. Georgiou, Committee Member © 2017 Qianqian Wang ABSTRACT Natural Language Processing (NLP) is the field of study that focuses on the interactions between human language and computers. By “natural language” we mean a language that is used for everyday communication by humans. Unlike programming languages, natural languages are hard to define with precise rules. NLP is developing rapidly and has been widely used in different industries. -

Causality in Pharmacoepidemiology and Pharmacovigilance: a Theoretical Excursion

DOI: 10.1590/1980-5497201700030010 ORIGINAL ARTICLE Causality in Pharmacoepidemiology and Pharmacovigilance: a theoretical excursion (Causalidade em farmacoepidemiologia e farmacovigilância: uma incursão teórica) Daniel Marques Mota I,II, Ricardo de Souza Kuchenbecker I ABSTRACT: This article offers some considerations on causality in pharmacoepidemiology and pharmacovigilance. We begin with a brief introduction on the importance of the topic, emphasizing that understanding causal relationships is regarded as one of the greatest achievements of science and has long been a continuous and central concern of philosophers and epidemiologists. We then describe the definitions and types of causes, showing their influence on pharmacoepidemiological thinking. Next, we present Rothman's multicausal model as one of the foundations for explaining multiple causality, and the topic of causality assessment. We conclude with some comments and reflections on causality from the perspective of health surveillance, particularly for regulatory actions in pharmacovigilance. Keywords: Applications of epidemiology. Causality. Drug-related side effects and adverse reactions. Pharmacoepidemiology. Pharmacovigilance. Health surveillance. I Graduate Program in Epidemiology, School of Medicine, Universidade Federal do Rio Grande do Sul – Porto Alegre (RS), Brazil. II Agência Nacional de Vigilância Sanitária – Brasília (DF), Brazil. Corresponding author: Daniel Marques Mota. Programa de Pós-Graduação em Epidemiologia da Faculdade de Medicina da Universidade Federal do Rio Grande do Sul. Rua Ramiro Barcelos, 2.400, 2º andar, CEP: 90035-003, Porto Alegre, RS, Brasil. E-mail: [email protected] Conflict of interests: nothing to declare – Financial support: none. 475 REV BRAS EPIDEMIOL JUL-SET 2017; 20(3): 475-486 MOTA, D.M., KUCHENBECKER, R.S.