A Graybeard's Worst Nightmare

Total Pages: 16

File Type: pdf, Size: 1020Kb

Recommended publications

Vpro-1085-R Course for RHV/Ovirt/OLVM Implementation & Administration Lab Exercises

vPro-1085-R - Storware vProtect - Implementation & Administration Lab Exercises - v7.md 2/24/2021

vPro-1085-R Course for RHV/oVirt/OLVM Implementation & Administration Lab Exercises

Credentials and access details

| Attribute | Value |
| --- | --- |
| Download URL | http://10.40.0.253/lab-materials/vprotect/vpro-1085 |
| vProtect host | 10.41.0.4 |
| vProtect username | root |
| vProtect password | St0rL@bs |
| vProtect Web UI | https://10.41.0.4 |
| vProtect Web UI username | admin |
| vProtect Web UI password | vPr0tect |
| RHV manager UI | https://rhv-m.storware.lab/ovirt-engine |
| RHV user | admin@internal in vProtect in UI, and admin in RHV manager UI |
| RHV password | St0rL@bs |

Lab 1 - Demo of all-in-one installation

In this section we'll show you how to install vProtect components quickly using all-in-one setup scripts. Before the installation steps, update the system and then reboot it: dnf -y update

Remote repository (option 1)

1. Export the VPROTECT_REPO variable to point to the repository URL: export VPROTECT_REPO=http://10.40.0.253/vprotect/current/el8
2. Execute the script: bash < <(curl -s http://repo.storware.eu/vprotect/vprotect-local-install.sh)

Lab 2 - Installation with RPMs

In this section you're going to install vProtect using RPMs, so that all necessary steps are done.

Prerequisites

1. Access vlab.vpro.proxy.v3
2. Open putty on your vlab.vpro.proxy.v3
3. Connect to the vProtect machine with root access
4. Use your CentOS 8 minimal
5. Make sure your OS is up to date: dnf -y update

If the kernel is updated, you need to reboot your operating system.

Red Hat Enterprise Linux 8 Installing, Managing, and Removing User-Space Components

Red Hat Enterprise Linux 8 Installing, managing, and removing user-space components

An introduction to AppStream and BaseOS in Red Hat Enterprise Linux 8

Last Updated: 2021-06-25

Legal Notice

Copyright © 2021 Red Hat, Inc. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/. In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law.

Red Hat, Red Hat Enterprise Linux, the Shadowman logo, the Red Hat logo, JBoss, OpenShift, Fedora, the Infinity logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries.

Release Notes for Fedora 22

Fedora 22 Release Notes

Release Notes for Fedora 22

Edited by The Fedora Docs Team

Copyright © 2015 Fedora Project Contributors. The text of and illustrations in this document are licensed by Red Hat under a Creative Commons Attribution–Share Alike 3.0 Unported license ("CC-BY-SA"). An explanation of CC-BY-SA is available at http://creativecommons.org/licenses/by-sa/3.0/. The original authors of this document, and Red Hat, designate the Fedora Project as the "Attribution Party" for purposes of CC-BY-SA. In accordance with CC-BY-SA, if you distribute this document or an adaptation of it, you must provide the URL for the original version. Red Hat, as the licensor of this document, waives the right to enforce, and agrees not to assert, Section 4d of CC-BY-SA to the fullest extent permitted by applicable law.

Red Hat, Red Hat Enterprise Linux, the Shadowman logo, JBoss, MetaMatrix, Fedora, the Infinity Logo, and RHCE are trademarks of Red Hat, Inc., registered in the United States and other countries. For guidelines on the permitted uses of the Fedora trademarks, refer to https://fedoraproject.org/wiki/Legal:Trademark_guidelines. Linux® is the registered trademark of Linus Torvalds in the United States and other countries. Java® is a registered trademark of Oracle and/or its affiliates. XFS® is a trademark of Silicon Graphics International Corp. or its subsidiaries in the United States and/or other countries. MySQL® is a registered trademark of MySQL AB in the United States, the European Union and other countries. All other trademarks are the property of their respective owners.

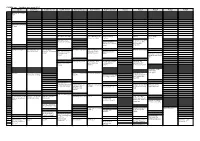

FOSDEM 2017 Schedule

FOSDEM 2017 - Saturday 2017-02-04 (1/9)

Rooms: Janson, K.1.105 (La Fontaine), H.2215 (Ferrer), H.1301 (Cornil), H.1302 (Depage), H.1308 (Rolin), H.1309 (Van Rijn), H.2111, H.2213, H.2214, H.3227, H.3228, …

09:30 Welcome to FOSDEM 2017 09:45 10:00 Kubernetes on the road to GIFEE 10:15 10:30 Welcome to the Legal Python Winding Itself MySQL & Friends Opening Intro to Graph … Around Datacubes Devroom databases Free/open source Portability of containers software and drones Optimizing MySQL across diverse HPC 10:45 without SQL or touching resources with my.cnf Singularity Welcome! 11:00 Software Heritage The Veripeditus AR Let's talk about The State of OpenJDK MSS - Software for The birth of HPC Cuba Game Framework hardware: The POWER Make your Corporate planning research Applying profilers to of open. CLA easy to use, aircraft missions MySQL Using graph databases please! 11:15 in popular open source CMSs 11:30 Jockeying the Jigsaw The power of duck Instrumenting plugins Optimized and Mixed License FOSS typing and linear for Performance reproducible HPC Projects algebra Schema Software deployment 11:45 Incremental Graph Queries with 12:00 CloudABI LoRaWAN for exploring Open J9 - The Next Free It's time for datetime Reproducible HPC openCypher the Internet of Things Java VM sysbench 1.0: teaching Software Installation on an old dog new tricks Cray Systems with EasyBuild 12:15 Making License 12:30 Compliance Easy: Step Diagnosing Issues in Webpush notifications Putting Your Jobs Under Twitter Streaming by Open Source Step. Java Apps using for Kinto Introducing gh-ost the Microscope using Graph with Gephi Thermostat and OGRT Byteman.

Using System Call Interposition to Automatically Create Portable Software Packages

CDE: Using System Call Interposition to Automatically Create Portable Software Packages

Philip J. Guo and Dawson Engler, Stanford University, April 5, 2011

(This technical report is an extended version of our 2011 USENIX ATC paper)

Abstract

It can be painfully difficult to take software that runs on one person's machine and get it to run on another machine. Online forums and mailing lists are filled with discussions of users' troubles with compiling, installing, and configuring software and their myriad of dependencies. To eliminate this dependency problem, we created a system called CDE that uses system call interposition to monitor the execution of x86-Linux programs and package up the Code, Data, and Environment required to run them on other x86-Linux machines. The main benefits of CDE are that creating a package is completely automatic, and that running programs within a package requires no installation, configuration, or root permissions.

… as dependency hell. Or the user might lack permissions to install software packages in the first place, a common occurrence on corporate machines and compute clusters that are administered by IT departments. Finally, the user (recalling bitter past experiences) may be reluctant to perturb a working environment by upgrading or downgrading library versions just to try out new software.

As a testament to the ubiquity of software deployment problems, consider the prevalence of online forums and mailing list discussions dedicated to troubleshooting installation and configuration issues. For example, almost half of the messages sent to the mailing lists of two mature research tools, the graph-tool mathematical graph analysis library [10] and the PADS system for processing …

Bigfix Patch Centos User Guide

BigFix Patch for CentOS User's Guide

Special notice: Before using this information and the product it supports, read the information in Notices (on page 91).

Edition notice: This edition applies to version 9.5 of BigFix and to all subsequent releases and modifications until otherwise indicated in new editions.

Contents

- Special notice
- Edition notice
- Chapter 1. Overview
  - What's new in this update release
  - Supported platforms and updates
  - Supported CentOS repositories
  - Site subscription
  - Patching method
- Chapter 2. Using the download plug-in
  - Manage Download Plug-ins dashboard overview

Oracle® Linux Virtualization Manager Getting Started Guide

Oracle® Linux Virtualization Manager Getting Started Guide F25124-11 September 2021 Oracle Legal Notices Copyright © 2019, 2021 Oracle and/or its affiliates. This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. Government end users are "commercial computer software" or "commercial computer software documentation" pursuant to the applicable Federal Acquisition Regulation and agency-specific supplemental regulations. 
As such, the use, reproduction, duplication, release, display, disclosure, modification, preparation of derivative works, and/or adaptation of i) Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs), ii) Oracle computer documentation and/or iii) other Oracle data, is subject to the rights and limitations specified in the license contained in the applicable contract.

Introduction to the Nix Package Manager

Introduction to the Nix Package Manager

Millian Poquet, 2021-05-12, Datamove (Inria) seminar

Sections: Introduction, Nix concepts, Usage examples, Conclusion

Why Nix?

Control your software environment! Programs/libraries/scripts/configurations + versions

Why is it important for us?

- Use/develop/test/distribute software
- Manually install many dependencies? No, just type nix-shell
- Shared env for whole team (tunable) and test machines
- Bug only on my machine? Means this is hardware or OS related
- Reproducible research
- Repeat experiment in exact same environment
- Introduce or test variation

What is Nix?

Nix: package manager

- Download and install packages
- Shell into well-defined environment (like virtualenv)
- Transactional (rollback works)
- Cross-platform: Linux, macOS, Windows (WSL)

Nix: programming language

- Define packages
- Define environments (set of packages)
- Functional, DSL

NixOS: Linux distribution

- Declarative system configuration
- Uses the Nix language
- Transactional (rollback still works)

Nix in numbers

- Started in 2003
- Nix [1]: 10k commits, 28k C++ LOC
- Nixpkgs [2]: 285k commits, 55k packages [3]

[1] https://github.com/NixOS/nix
[2] https://github.com/NixOS/nixpkgs
[3] https://repology.org/repositories/statistics

Presentation summary

- 2 Nix concepts
- 3 Usage examples
- 4 Conclusion

Traditional

Release 3.11.0

CEKit Release 3.11.0, May 17, 2021

Contents

- 1 About
- 2 Main features
- 3 I'm new, where to start?
- 4 Releases and changelog
- 5 Contact
- 6 Documentation
  - 6.1 Getting started guide
  - 6.2 Handbook
  - 6.3 Guidelines
  - 6.4 Descriptor documentation
  - 6.5 Contribution guide
- 7 Sponsor
- 8 License

Chapter 1. About

Container image creation tool. CEKit helps to build container images from image definition files with a strong focus on modularity and code reuse.

Chapter 2. Main features

- Building container images from YAML image definitions using many different builder engines
- Integration/unit testing of images

Chapter 3. I'm new, where to start?

We suggest looking at the getting started guide. It's probably the best place to start. Once you get through this tutorial, look at the handbook, which describes how things work. Later you may be interested in the guidelines sections.

Chapter 4. Releases and changelog

See the releases page for latest releases and changelogs.

Chapter 5. Contact

- Please join the #cekit IRC channel on Freenode
- You can always mail us at: cekit at cekit dot io

Chapter 7 Package Management

Chapter 7 Package Management

CERTIFICATION OBJECTIVES

- 7.01 The Red Hat Package Manager
- 7.02 More RPM Commands
- 7.03 Dependencies and the yum Command
- 7.04 More Package Management Tools
- ✓ Two-Minute Drill
- Q&A Self Test

After installation is complete, systems are secured, filesystems are configured, and other initial setup tasks are completed, you still have work to do. Almost certainly before your system is in the state you desire, you will be required to install or remove packages. To make sure the right updates are installed, you need to know how to get a system working with Red Hat Subscription Management (RHSM) or the repository associated with a rebuild distribution.

To accomplish these tasks, you need to understand how to use the rpm and yum commands in detail. Although these are "just" two commands, they are rich in detail. Entire books have been dedicated to the rpm command, such as the Red Hat RPM Guide by Eric Foster-Johnson. For many, that degree of in-depth knowledge of the rpm command is no longer necessary, given the capabilities of the yum command and the additional package management tools provided in RHEL 7.

CERTIFICATION OBJECTIVE 7.01

The Red Hat Package Manager

One of the major duties of a system administrator is software management. New applications are installed. Services are updated. Kernels are patched. Without the right tools, it can be difficult to figure out what software is on a system, what is the latest update, and what applications depend on other software.

Latest/Conf Ref.Html#files‘

dnf-plugins-extras Documentation, Release 4.0.15-1

Tim Lauridsen, Jul 19, 2021

Contents

- 1 DNF kickstart Plugin
- 2 Extras DNF Plugins Release Notes
- 3 DNF rpmconf Plugin
- 4 DNF showvars Plugin
- 5 DNF snapper Plugin
- 6 DNF system-upgrade Plugin
- 7 DNF torproxy Plugin
- 8 DNF tracer Plugin
- 9 See Also
- 10 Indices and tables

This documents extras plugins of DNF:

Chapter 1. DNF kickstart Plugin

Install packages according to Anaconda kickstart file.

1.1 Synopsis: dnf kickstart <ks-file>

1.2 Arguments: <ks-file> Path to the kickstart file.

1.3 Examples: dnf kickstart mykickstart.ks installs the packages defined in mykickstart.ks.

1.4 See Also

- Anaconda Kickstart file documentation

Chapter 2. Extras DNF Plugins Release Notes

Contents: release notes for versions 4.0.15, 4.0.14, 4.0.13, 4.0.12, 4.0.10, 4.0.9, 4.0.8, 4.0.7, 4.0.6, 4.0.5, 4.0.4, 4.0.2, 4.0.1, 4.0.0, 3.0.2, 3.0.1, 3.0.0, 2.0.5, 2.0.4, 2.0.3, 2.0.2, 2.0.1, 2.0.0, 0.10.0

Concurrent Programming Made Simple: the (R)Evolution of Transactional Memory

Concurrent Programming Made Simple: The (r)evolution of Transactional Memory

Nuno Diegues∗1 and Torvald Riegel†2

1 INESC-ID, Lisbon, Portugal
2 Red Hat

October 1, 2013

∗[email protected] [email protected]

1 Harnessing Concurrency

Today, it is commonplace for developers to deal with concurrency in their applications. This reality has been driven by two ongoing revolutions in terms of hardware deployments. On one hand, processors have evolved to a multi-core paradigm in which computational power increases by increasing the number of cores rather than by enhancing single-thread performance. On the other hand, cloud computing has democratized access to affordable large-scale distributed platforms. In both cases programmers are faced with a similar problem: if they want to scale out their applications, then they need to tackle the issue of how to synchronize access to data in the face of ever-growing concurrency levels.

For many decades, programmers have been taught to rely on locking mechanisms or centralized components to manage concurrent accesses to data. However, the ongoing architectural trends towards massively parallel/large-scale systems have unveiled the limitations of traditional synchronization schemes: not only can they significantly limit the feasible parallelism when there may exist tremendous untapped parallel potential, but they also force the use of intricate programming models that are prone to tricky concurrency bugs, which can be a conundrum to detect and fix. In fact, popular knowledge considers locking approaches simple to understand, but difficult to master.

2 Programming with Transactions

To tackle this fundamental problem in modern software development, during recent years both industry and academia have started to adopt Transactional Memory (TM).