NVIDIA GPU Computing: A Journey from PC Gaming to Deep Learning | Stuart Oberman | October 2017 | Gaming, Pro/Enterprise Visualization, Data Center, Auto

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Adaptive Bitrate Streaming in Cloud Gaming Lambert Wang Worcester Polytechnic Institute

Worcester Polytechnic Institute, Digital WPI, Major Qualifying Projects (All Years), March 2017. Provided by DigitalCommons@WPI via core.ac.uk.

Adaptive Bitrate Streaming in Cloud Gaming. Lambert Wang, Matthew James Suarez, and Robyn Angela Domanico, Worcester Polytechnic Institute. Follow this and additional works at: https://digitalcommons.wpi.edu/mqp-all

Repository Citation: Wang, L., Suarez, M. J., & Domanico, R. A. (2017). Adaptive Bitrate Streaming in Cloud Gaming. Retrieved from https://digitalcommons.wpi.edu/mqp-all/431

This Unrestricted is brought to you for free and open access by the Major Qualifying Projects at Digital WPI. It has been accepted for inclusion in Major Qualifying Projects (All Years) by an authorized administrator of Digital WPI.

A Major Qualifying Project submitted to the Faculty of Worcester Polytechnic Institute in partial fulfillment of the requirements for the Degree in Bachelor of Science. Robyn Domanico, Matt Suarez, Lambert Wang. Advised by Mark Claypool. March 19, 2017.

Abstract: Cloud gaming streams games as video from a server directly to a client device, making it susceptible to network congestion. Adaptive bitrate streaming estimates the bottleneck capacity of a network and sets appropriate encoding parameters to avoid exceeding the bandwidth of the connection. BBR is a congestion control algorithm proposed as an alternative to current loss-based congestion control. We designed and implemented a bitrate adaptation heuristic based on BBR into GamingAnywhere, an open source cloud gaming platform.

GPU Developments 2018

GPU Developments 2018. © Copyright Jon Peddie Research 2019. All rights reserved. Reproduction in whole or in part is prohibited without written permission from Jon Peddie Research. This report is the property of Jon Peddie Research (JPR) and made available to a restricted number of clients only upon these terms and conditions.

Agreement not to copy or disclose. This report and all future reports or other materials provided by JPR pursuant to this subscription (collectively, “Reports”) are protected by: (i) federal copyright, pursuant to the Copyright Act of 1976; and (ii) the nondisclosure provisions set forth immediately following.

License, exclusive use, and agreement not to disclose. Reports are the trade secret property exclusively of JPR and are made available to a restricted number of clients, for their exclusive use and only upon the following terms and conditions. JPR grants site-wide license to read and utilize the information in the Reports, exclusively to the initial subscriber to the Reports, its subsidiaries, divisions, and employees (collectively, “Subscriber”). The Reports shall, at all times, be treated by Subscriber as proprietary and confidential documents, for internal use only. Subscriber agrees that it will not reproduce for or share any of the material in the Reports (“Material”) with any entity or individual other than Subscriber (“Shared Third Party”) (collectively, “Share” or “Sharing”), without the advance written permission of JPR. Subscriber shall be liable for any breach of this agreement and shall be subject to cancellation of its subscription to Reports. Without limiting this liability, Subscriber shall be liable for any damages suffered by JPR as a result of any Sharing of any Material, without advance written permission of JPR.

20201130 Gcdv V1.0.Pdf

From research to industry. Architecture evolutions for HPC. 30 November 2020. Guillaume Colin de Verdière, Commissariat à l’énergie atomique et aux énergies alternatives (www.cea.fr), EUROfusion.

Evolution driver: technological constraints

- Power wall
- Scaling wall
- Memory wall
- Towards accelerated architectures
- SC20 main points

Power wall

$P = cV^2F$, where $P$ is power, $V$ is voltage, and $F$ is frequency.

- Reduce V
  - Reuse of embedded technologies
- Limit frequencies
- More cores
- More compute, less logic
  - Larger SIMD
  - GPU-like structure

[Figure © Karl Rupp. See also https://software.intel.com/en-us/blogs/2009/08/25/why-p-scales-as-cv2f-is-so-obvious-pt-2-2]

Scaling wall

- Moore’s law comes to an end
  - Probable limit around 3 - 5 nm
  - Need to design new structures for transistors [Figure labels: FinFET, carbon nanotube]
- Limit of circuit size
  - Yield decreases as surface increases
    - Chiplets will dominate [Figure © AMD]
  - Data movement will be the most expensive operation
    - 1 DFMA = 20 pJ, SRAM access = 50 pJ, DRAM access = 1 nJ (source: NVIDIA)
    - 1 nJ = 1000 pJ, Φ Si = 110 pm

Memory wall

- Better bandwidth with HBM
  - DDR5 @ 5200 MT/s, 8 channels = 0.33 TB/s
  - HBM2 @ 4 stacks = 1.64 TB/s

[Figure: Thread 1 to Thread 4. Skylake: SMT2]
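The figures quoted in these slides can be checked with a few lines of arithmetic. The sketch below is only an illustration under stated assumptions: the 8-byte (64-bit) width per DDR5 channel, the 1024-bit HBM2 stack interface, and the 3.2 GT/s HBM2 pin rate are not given in the slides and were chosen because they reproduce the quoted 0.33 TB/s and 1.64 TB/s. The function names are hypothetical.

```python
# Sketch only: bus widths and the HBM2 pin rate below are assumptions,
# not values taken from the slides.

def dynamic_power(c, v, f):
    """Dynamic power P = c * V^2 * F, in arbitrary units."""
    return c * v ** 2 * f


def peak_bandwidth_tb_s(transfers_per_s, bytes_per_transfer, units):
    """Peak bandwidth in TB/s for `units` channels or stacks."""
    return transfers_per_s * bytes_per_transfer * units / 1e12


# Power wall: lowering V by 10% at fixed c and F scales power by 0.9^2.
power_ratio = dynamic_power(1.0, 0.9, 1.0) / dynamic_power(1.0, 1.0, 1.0)

# Memory wall: DDR5 at 5200 MT/s, 8 channels, assumed 8 bytes per transfer.
ddr5_tb_s = peak_bandwidth_tb_s(5200e6, 8, 8)

# HBM2: 4 stacks, assumed 1024-bit (128-byte) interface at 3.2 GT/s.
hbm2_tb_s = peak_bandwidth_tb_s(3.2e9, 128, 4)

# Scaling wall: energy of one DRAM access (1 nJ = 1000 pJ) versus one DFMA (20 pJ).
dram_vs_dfma = 1000 / 20

print(f"power ratio at 0.9 V: {power_ratio:.2f}")
print(f"DDR5: {ddr5_tb_s:.2f} TB/s, HBM2: {hbm2_tb_s:.2f} TB/s")
print(f"DRAM access costs {dram_vs_dfma:.0f}x one DFMA")
```

Because power depends on the square of the voltage, a modest voltage reduction saves more power than the same relative reduction in frequency, which is consistent with the slides listing "Reduce V" first.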

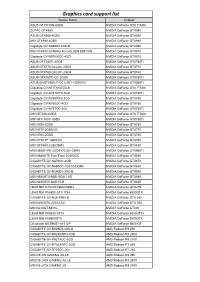

Graphics Card Support List

| Device Name | Chipset |
| --- | --- |
| ASUS GTXTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| ZOTAC GTX980 | NVIDIA GeForce GTX980 |
| ASUS GTX980-4GD5 | NVIDIA GeForce GTX980 |
| MSI GTX980-4GD5 | NVIDIA GeForce GTX980 |
| Gigabyte GV-N980D5-4GD-B | NVIDIA GeForce GTX980 |
| MSI GTX970 GAMING 4G GOLDEN EDITION | NVIDIA GeForce GTX970 |
| Gigabyte GV-N970IXOC-4GD | NVIDIA GeForce GTX970 |
| ASUS GTX780TI-3GD5 | NVIDIA GeForce GTX780Ti |
| ASUS GTX770-DC2OC-2GD5 | NVIDIA GeForce GTX770 |
| ASUS GTX760-DC2OC-2GD5 | NVIDIA GeForce GTX760 |
| ASUS GTX750TI-OC-2GD5 | NVIDIA GeForce GTX750Ti |
| ASUS ENGTX560-Ti-DCII/2D1-1GD5/1G | NVIDIA GeForce GTX560Ti |
| Gigabyte GV-NTITAN-6GD-B | NVIDIA GeForce GTX TITAN |
| Gigabyte GV-N78TWF3-3GD | NVIDIA GeForce GTX780Ti |
| Gigabyte GV-N780WF3-3GD | NVIDIA GeForce GTX780 |
| Gigabyte GV-N760OC-4GD | NVIDIA GeForce GTX760 |
| Gigabyte GV-N75TOC-2GI | NVIDIA GeForce GTX750Ti |
| MSI NTITAN-6GD5 | NVIDIA GeForce GTX TITAN |
| MSI GTX 780Ti 3GD5 | NVIDIA GeForce GTX780Ti |
| MSI N780-3GD5 | NVIDIA GeForce GTX780 |
| MSI N770-2GD5/OC | NVIDIA GeForce GTX770 |
| MSI N760-2GD5 | NVIDIA GeForce GTX760 |
| MSI N750 TF 1GD5/OC | NVIDIA GeForce GTX750 |
| MSI GTX680-2GB/DDR5 | NVIDIA GeForce GTX680 |
| MSI N660Ti-PE-2GD5-OC/2G-DDR5 | NVIDIA GeForce GTX660Ti |
| MSI N680GTX Twin Frozr 2GD5/OC | NVIDIA GeForce GTX680 |
| GIGABYTE GV-N670OC-2GD | NVIDIA GeForce GTX670 |
| GIGABYTE GV-N650OC-1GI/1G-DDR5 | NVIDIA GeForce GTX650 |
| GIGABYTE GV-N590D5-3GD-B | NVIDIA GeForce GTX590 |
| MSI N580GTX-M2D15D5/1.5G | NVIDIA GeForce GTX580 |
| MSI N465GTX-M2D1G-B | NVIDIA GeForce GTX465 |
| LEADTEK GTX275/896M-DDR3 | NVIDIA GeForce GTX275 |
| LEADTEK PX8800 GTX TDH | NVIDIA GeForce 8800GTX |
| GIGABYTE GV-N26-896H-B | |

NVIDIA Quadro RTX for V-Ray Next

NVIDIA Quadro RTX: V-Ray Next GPU

[Image courtesy of © Dabarti Studio, rendered with V-Ray GPU]

Quadro RTX Accelerates V-Ray Next GPU Rendering

V-Ray Next GPU taps into the power of NVIDIA® Quadro® RTX™ to speed up production rendering with dedicated RT Cores for ray tracing and Tensor Cores for AI-accelerated denoising.¹ With up to 18X faster rendering than CPU-based solutions and enhanced performance with NVIDIA NVLink™, V-Ray Next GPU with RTX support provides incredible performance improvements for your rendering workloads.

Solutions for V-Ray Next GPU

NVIDIA Quadro® provides a wide range of RTX-enabled solutions for desktop, mobile, server-based rendering, and virtual workstations with NVIDIA Quadro Virtual Data Center Workstation (Quadro vDWS) software.² With up to 96 gigabytes (GB) of GPU memory available,³ Quadro RTX provides the power you need for the largest professional graphics and rendering workloads.

“Accelerating artist productivity is always our top priority, so we’re quick to take advantage of the latest ray-tracing hardware breakthroughs. By supporting NVIDIA RTX™ in V-Ray GPU, we’re bringing our customers an exciting new boost in their GPU production rendering speeds.”
Phillip Miller, Vice President, Product Management, Chaos Group

Benchmark: V-Ray Next GPU Rendering Performance Increase on Quadro RTX GPUs

| Configuration | Relative Performance |
| --- | --- |
| Quadro RTX 6000 x2 | 18.85 |
| Quadro RTX 6000 | 10.4 |
| Quadro RTX 4000 | 7.83 |
| CPU | 1 |

Desktop performance. Tests run on 1x Xeon Gold 6154 3 GHz (3.7 GHz Turbo), 64 GB DDR4 RAM, Win10 x64, Driver version 441.28. Performance results may vary depending on the scene.

NVIDIA Quadro professional graphics solutions are verified and recommended for the most demanding projects by Chaos Group.

NVIDIA Launches Tegra X1 Mobile Super Chip

Maxwell GPU Architecture Delivers First Teraflops Mobile Processor, Powering Deep Learning and Computer Vision Applications

NVIDIA today unveiled Tegra® X1, its next-generation mobile super chip with over one teraflops of processing power – delivering capabilities that open the door to unprecedented graphics and sophisticated deep learning and computer vision applications. Tegra X1 is built on the same NVIDIA Maxwell™ GPU architecture rolled out only months ago for the world's top-performing gaming graphics card, the GeForce® GTX 980. The 256-core Tegra X1 provides twice the performance of its predecessor, the Tegra K1, which is based on the previous-generation Kepler™ architecture and debuted at last year's Consumer Electronics Show.

Tegra processors are built for embedded products, mobile devices, autonomous machines and automotive applications. Tegra X1 will begin appearing in the first half of the year. It will be featured in the newly announced NVIDIA DRIVE™ car computers. DRIVE PX is an auto-pilot computing platform that can process video from up to 12 onboard cameras to run capabilities providing Surround-Vision, for a seamless 360-degree view around the car, and Auto-Valet, for true self-parking. DRIVE CX is a complete cockpit platform designed to power the advanced graphics required across the increasing number of screens used for digital clusters, infotainment, head-up displays, virtual mirrors and rear-seat entertainment.

"We see a future of autonomous cars, robots and drones that see and learn, with seeming intelligence that is hard to imagine," said Jen-Hsun Huang, CEO and co-founder, NVIDIA.

GPU-Based Deep Learning Inference

Whitepaper. GPU-Based Deep Learning Inference: A Performance and Power Analysis. November 2015

Contents

- Abstract
- Introduction
- Inference versus Training
- GPUs Excel at Neural Network Inference
  - Inference Optimizations in Caffe and cuDNN 4
  - Experimental Setup and Testing Methodology
  - Inference on Small and Large GPUs
- Conclusion
- References

Abstract

Deep learning methods are revolutionizing various areas of machine perception. On a

NVIDIA CUDA Getting Started Guide for Linux

NVIDIA CUDA Getting Started Guide for Linux. DU-05347-001_v7.0 | March 2015. Installation and Verification on Linux Systems

Table of Contents

- Chapter 1. Introduction
  - 1.1. System Requirements
    - 1.1.1. x86 32-bit Support
  - 1.2. About This Document
- Chapter 2. Pre-installation Actions
  - 2.1. Verify You Have a CUDA-Capable GPU
  - 2.2. Verify You Have a Supported Version of Linux
  - 2.3. Verify the System Has gcc Installed
  - 2.4. Choose an Installation Method
  - 2.5. Download the NVIDIA CUDA Toolkit
  - 2.6. Handle Conflicting Installation Methods
- Chapter 3. Package Manager Installation
  - 3.1. Overview

MSI NVIDIA Drivers Download: Update MSI Graphics Card Driver on Windows 10 & 7

Update MSI Graphics Card Driver on Windows 10 & 7. Easily!

Updating MSI graphics card drivers provides you with high gaming performance, so it’s recommended that you keep your graphics card driver up to date. In this post, you’ll learn two ways to download and install the latest MSI graphics card driver:

1. Download the MSI graphics card driver manually
2. Download and install the MSI graphics card driver automatically

Way 1: Download the MSI graphics card driver manually. MSI provides graphics drivers on its website, for instance the NVIDIA graphics card driver and the AMD graphics card driver, so you can check for and download the latest driver for your graphics card there. The driver can always be downloaded from the SUPPORT section. Go to the MSI website, enter the name of your graphics card, and perform a quick search. Then follow the on-screen instructions to download the driver you need. MSI regularly uploads new drivers to its website, so it’s recommended that you check for driver releases often in order to get the latest driver in time. If you don’t have the time and patience to download the driver manually, Way 2 may be a better option for you.

Way 2: Download and install the MSI graphics card driver automatically. If you don’t have the time, patience or computer skills to update the MSI graphics driver manually, you can do it automatically with Driver Easy. Driver Easy will automatically recognize your system and find the correct drivers for it.

NVIDIA DGX Station: The First Personal AI Supercomputer

White Paper. NVIDIA DGX Station: The First Personal AI Supercomputer

- 1.0 Introduction
- 2.0 NVIDIA DGX Station Architecture
  - 2.1 NVIDIA Tesla V100
  - 2.2 Second-Generation NVIDIA NVLink™
  - 2.3 Water-Cooling System for the GPUs
  - 2.4 GPU and System Memory
  - 2.5 Other Workstation Components
- 3.0 Multi-GPU with NVLink
  - 3.1 DGX NVLink Network Topology for Efficient Application Scaling
  - 3.2 Scaling Deep Learning Training on NVLink
- 4.0 DGX Station Software Stack for Deep Learning
  - 4.1 NVIDIA CUDA Toolkit
  - 4.2 NVIDIA Deep Learning SDK
  - 4.3 Docker Engine Utility for NVIDIA GPUs
  - 4.4 NVIDIA Container

CUDA C Programming Guide

CUDA C Programming Guide. PG-02829-001_v10.0 | October 2018. Design Guide

Changes from Version 9.0

‣ Documented restriction that operator-overloads cannot be __global__ functions in Operator Function.
‣ Removed guidance to break 8-byte shuffles into two 4-byte instructions. 8-byte shuffle variants are provided since CUDA 9.0. See Warp Shuffle Functions.
‣ Passing __restrict__ references to __global__ functions is now supported. Updated comment in __global__ functions and function templates.
‣ Documented CUDA_ENABLE_CRC_CHECK in CUDA Environment Variables.
‣ Warp matrix functions now support matrix products with m=32, n=8, k=16 and m=8, n=32, k=16 in addition to m=n=k=16.

Table of Contents

- Chapter 1. Introduction
  - 1.1. From Graphics Processing to General Purpose Parallel Computing
  - 1.2. CUDA®: A General-Purpose Parallel Computing Platform and Programming Model
  - 1.3. A Scalable Programming Model
  - 1.4. Document Structure
- Chapter 2. Programming Model
  - 2.1. Kernels
  - 2.2. Thread Hierarchy

Defendant Apple Inc.'s Proposed Findings of Fact and Conclusions Of

Case 4:20-cv-05640-YGR Document 410 Filed 04/08/21 Page 1 of 325

THEODORE J. BOUTROUS JR., SBN 132099
RICHARD J. DOREN, SBN 124666
DANIEL G. SWANSON, SBN 116556
JAY P. SRINIVASAN, SBN 181471
GIBSON, DUNN & CRUTCHER LLP
333 South Grand Avenue
Los Angeles, CA 90071
Telephone: 213.229.7000
Facsimile: 213.229.7520

VERONICA S. MOYÉ (Texas Bar No. 24000092; pro hac vice)
GIBSON, DUNN & CRUTCHER LLP
2100 McKinney Avenue, Suite 1100
Dallas, TX 75201
Telephone: 214.698.3100
Facsimile: 214.571.2900

MARK A. PERRY, SBN 212532
CYNTHIA E. RICHMAN (D.C. Bar No. 492089; pro hac vice)
GIBSON, DUNN & CRUTCHER LLP
1050 Connecticut Avenue, N.W.
Washington, DC 20036
Telephone: 202.955.8500
Facsimile: 202.467.0539

ETHAN DETTMER, SBN 196046
ELI M. LAZARUS, SBN 284082
GIBSON, DUNN & CRUTCHER LLP
555 Mission Street
San Francisco, CA 94105
Telephone: 415.393.8200
Facsimile: 415.393.8306

Attorneys for Defendant APPLE INC.

UNITED STATES DISTRICT COURT
FOR THE NORTHERN DISTRICT OF CALIFORNIA
OAKLAND DIVISION

EPIC GAMES, INC., Plaintiff, Counter-defendant
v.
APPLE INC., Defendant, Counterclaimant.

Case No. 4:20-cv-05640-YGR

DEFENDANT APPLE INC.’S PROPOSED FINDINGS OF FACT AND CONCLUSIONS OF LAW

The Honorable Yvonne Gonzalez Rogers
Trial: May 3, 2021

Apple Inc.