Impact of Unreliable Content on Social Media Users During COVID-19 and Stance Detection System

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Report 2021, No. 6

News Agency on Conservative Europe Report 2021, No. 6. Report on conservative and right wing Europe 20th March, 2021 GERMANY 1. jungefreiheit.de (translated, original by jungefreiheit.de, 18.03.2021) "New German media makers" Migrant organization calls for more “diversity” among journalists media BERLIN. The migrant organization “New German Media Makers” (NdM) has reiterated its demand that editorial offices should become “more diverse”. To this end, the association presented a “Diversity Guide” on Wednesday under the title “How German Media Create More Diversity”. According to excerpts on the NdM website, it says, among other things: “German society has changed, it has become more colorful. That should be reflected in the reporting.” The manual explains which terms journalists should and should not use in which context. When reporting on criminal offenses, “the prejudice still prevails that refugees or people with an international history are more likely to commit criminal offenses than biographically Germans and that their origin is causally related to it”. Collect "diversity data" and introduce "soft quotas" Especially now, when the media are losing sales, there is a crisis of confidence and more competition, “diversity” is important. "More diversity brings new target groups, new customers and, above all, better, more successful journalism." The more “diverse” editorial offices are, the more it is possible “to take up issues of society without prejudice”, the published excerpts continue to say. “And just as we can no longer imagine a purely male editorial office today, we should also no longer be able to imagine white editorial offices. Precisely because of the special constitutional mandate of the media, the question of fair access and the representation of all population groups in journalism is also a question of democracy. -

So Many People Are Perplexed with the Covid-19 Lockdowns, Mask Requirements, Social Distancing, Loss of Jobs, Homes, and Their Very Sanity

So many people are perplexed with the Covid-19 lockdowns, mask requirements, social distancing, loss of jobs, homes, and their very sanity. What lies behind all of this? There have been so many conflicting reports that one has to ask the question, Is there even a pandemic at all? Was there ever one? Let’s have a look. ======================== Strange advance hints (predictive programming) – A 1993 Simpsons episode called “Marge in Chains” actually accurately predicted the coronavirus outbreak. One scene depicted a boardroom meeting between government officials and major media moguls. The chairman opened with these words: “I’d like to call to order this secret conclave of America’s media empires. We are here to come up with the next phony baloney crisis to put Americans back where they belong…” Suddenly a delegate from NBC chimed in: “Well I think…” Then the chairman interrupted him, saying: “NBC, you are here to listen and not speak!” He then said: “I think we should go with a good old fashioned health scare.” Everyone then nodded in approval. Next, a female delegate remarked: “A new disease–no one’s immune–it’s like The Summer of the Shark, except instead of the shark, it’s an epidemic.” – Another Simpsons episode called “A Totally Fun Thing that Bart will Never Do Again,” first aired on April 29, 2012, featured an emergency broadcast announcing the outbreak of the deadly “Pandora Virus,” and how it was “spreading rapidly.” The announcer then said: “This unprecedented threat requires a world-wide quarantine. All ships must remain at sea until further notice. -

Clash of Narratives: the US-China Propaganda War Amid the Global Pandemic

Clash of Narratives: The US-China Propaganda War Amid the Global Pandemic Preksha Shree Chhetri Research Scholar, Jawaharlal Nehru University, Delhi [email protected] Abstract In addition to traditional spheres of competition such as military and economic rivalry, the US-China rivalry is shifting into new areas. The outbreak of Covid-19 seems to have provided yet another domain for competition to both the US and China. Aside from news related to Covid-19, propaganda around Covid-19 has dominated global discussions in the last few months. Propaganda, in the most neutral sense, means to disseminate or promote particular ideas. During the ongoing pandemic, China and the US have been involved in a very intense war of words in order to influence the global narrative on Covid-19. Considering the number of narratives presented by two of the world’s strongest actors, the period since the outbreak of the pandemic can be truly regarded as the age of clashing narratives. This analysis attempts to take a close look at the narratives presented by the US and China during the pandemic, consider the impact of disinformation on the day to day lives of people around the world and discuss the criticism against China and the US during the pandemic. Keywords China, US, Covid-19, Propaganda, Disinformation Harold D Lasswell (1927) defines Propaganda as ‘management of collective attitudes by manipulation of significant symbols’. In simple [...] time in the US with the creation of Committee on Public Information (CPI). A similar body called the Office of War Information (OWI) was formed during World War II. -

Fake News in India

Countering Misinformation (Fake News) In India Solutions & Strategies Authors Tejeswi Pratima Dodda & Rakesh Dubbudu Factly Media & Research Research, Design & Editing Team Preeti Raghunath Bharath Guniganti Premila Manvi Mady Mantha Uday Kumar Erothu Jyothi Jeeru Shashi Kiran Deshetti Surya Kandukuri Questions or feedback on this report: [email protected] About this report This report is a collaborative effort by Factly Media & Research (Factly) and The Internet and Mobile Association of India (IAMAI). Factly works towards making public data & information more accessible to people through a variety of methods. IAMAI is a young and vibrant association with ambitions of representing the entire gamut of digital businesses in India. Factly IAMAI Rakesh Dubbudu, [email protected] Nilotpal Chakravarti, [email protected] Bharath Guniganti, [email protected] Dr Amitayu Sengupta, [email protected] ACKNOWLEDGEMENT We are grateful to all those with whom we had the pleasure of working for this report. To each member of our team who tirelessly worked to make this report possible. Our gratitude to all our interviewees and respondents who made time to participate, interact and share their opinions, thoughts and concerns about misinformation in India. Special thanks to Claire Wardle of First Draft News for her support, valuable suggestions and penning a foreword for this report. This report would not have seen the light of the day without the insights by the team at Google. We are also thankful to the Government of Telangana for inviting us to the round-table on ‘Fake News’ where we had the opportunity to interact with a variety of stakeholders. We would also like to thank Internet & Mobile Association of India (IAMAI) for being great partners and for all the support extended in the process. -

Coronajihad: COVID-19, Misinformation, and Anti-Muslim Violence in India

#CoronaJihad COVID-19, Misinformation, and Anti-Muslim Violence in India Shweta Desai and Amarnath Amarasingam Abstract On March 25th, India imposed one of the largest lockdowns in history, confining its 1.3 billion citizens for over a month to contain the spread of the novel coronavirus (COVID-19). By the end of the first week of the lockdown, starting March 29th reports started to emerge that there was a common link among a large number of the new cases detected in different parts of the country: many had attended a large religious gathering of Muslims in Delhi. In no time, Hindu nationalist groups began to see the virus not as an entity spreading organically throughout India, but as a sinister plot by Indian Muslims to purposefully infect the population. This report tracks anti-Muslim rhetoric and violence in India related to COVID-19, as well as the ongoing impact on social cohesion in the country. About the authors Shweta Desai is an independent researcher and journalist based between India and France. She is interested in terrorism, jihadism, religious extremism and armed conflicts. Amarnath Amarasingam is an Assistant Professor in the School of Religion at Queen’s University in Ontario, Canada. He is also a Senior Research Fellow at the Institute for Strategic Dialogue, an Associate Fellow at the International Centre for the Study of Radicalisation, and an Associate Fellow at the Global Network on Extremism and Technology. His research interests are in radicalization, terrorism, diaspora politics, post-war reconstruction, and the sociology of religion. He is the author of Pain, Pride, and Politics: Sri Lankan -

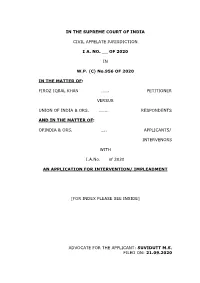

Impleadment Application

IN THE SUPREME COURT OF INDIA CIVIL APPELATE JURISDICTION I A. NO. __ OF 2020 IN W.P. (C) No.956 OF 2020 IN THE MATTER OF: FIROZ IQBAL KHAN ……. PETITIONER VERSUS UNION OF INDIA & ORS. …….. RESPONDENTS AND IN THE MATTER OF: OPINDIA & ORS. ….. APPLICANTS/ INTERVENORS WITH I.A.No. of 2020 AN APPLICATION FOR INTERVENTION/ IMPLEADMENT [FOR INDEX PLEASE SEE INSIDE] ADVOCATE FOR THE APPLICANT: SUVIDUTT M.S. FILED ON: 21.09.2020 INDEX S.NO PARTICULARS PAGES 1. Application for Intervention/ 1 — 21 Impleadment with Affidavit 2. Application for Exemption from filing 22 – 24 Notarized Affidavit with Affidavit 3. ANNEXURE – A 1 25 – 26 A true copy of the order of this Hon’ble Court in W.P. (C) No.956/ 2020 dated 18.09.2020 4. ANNEXURE – A 2 27 – 76 A true copy the Report titled “A Study on Contemporary Standards in Religious Reporting by Mass Media” 1 IN THE SUPREME COURT OF INDIA CIVIL ORIGINAL JURISDICTION I.A. No. OF 2020 IN WRIT PETITION (CIVIL) No. 956 OF 2020 IN THE MATTER OF: FIROZ IQBAL KHAN ……. PETITIONER VERSUS UNION OF INDIA & ORS. …….. RESPONDENTS AND IN THE MATTER OF: 1. OPINDIA THROUGH ITS AUTHORISED SIGNATORY, C/O AADHYAASI MEDIA & CONTENT SERVICES PVT LTD, DA 16, SFS FLATS, SHALIMAR BAGH, NEW DELHI – 110088 DELHI ….. APPLICANT NO.1 2. INDIC COLLECTIVE TRUST, THROUGH ITS AUTHORISED SIGNATORY, 2 5E, BHARAT GANGA APARTMENTS, MAHALAKSHMI NAGAR, 4TH CROSS STREET, ADAMBAKKAM, CHENNAI – 600 088 TAMIL NADU ….. APPLICANT NO.2 3. UPWORD FOUNDATION, THROUGH ITS AUTHORISED SIGNATORY, L-97/98, GROUND FLOOR, LAJPAT NAGAR-II, NEW DELHI- 110024 DELHI …. -

Authors' Pre-Publication Copy Dated March 7, 2021 This Article Has Been

Authors’ pre-publication copy dated March 7, 2021 This article has been accepted for publication in Volume 19, Issue 1 of the Northwestern Journal of Technology & Intellectual Property (Fall 2021) Artificial Intelligence as Evidence1 ABSTRACT This article explores issues that govern the admissibility of Artificial Intelligence (“AI”) applications in civil and criminal cases, from the perspective of a federal trial judge and two computer scientists, one of whom also is an experienced attorney. It provides a detailed yet intelligible discussion of what AI is and how it works, a history of its development, and a description of the wide variety of functions that it is designed to accomplish, stressing that AI applications are ubiquitous, both in the private and public sector. Applications today include: health care, education, employment-related decision-making, finance, law enforcement, and the legal profession. The article underscores the importance of determining the validity of an AI application (i.e., how accurately the AI measures, classifies, or predicts what it is designed to), as well as its reliability (i.e., the consistency with which the AI produces accurate results when applied to the same or substantially similar circumstances), in deciding whether it should be admitted into evidence in civil and criminal cases. The article further discusses factors that can affect the validity and reliability of AI evidence, including bias of various types, “function creep,” lack of transparency and explainability, and the sufficiency of the objective testing of the AI application before it is released for public use. The article next provides an in-depth discussion of the evidentiary principles that govern whether AI evidence should be admitted in court cases, a topic which, at present, is not the subject of comprehensive analysis in decisional law. -

“Shoot the Traitors”: Discrimination Against Muslims Under India’s New Citizenship Policy

HUMAN RIGHTS WATCH “Shoot the Traitors” Discrimination Against Muslims under India’s New Citizenship Policy Copyright © 2020 Human Rights Watch All rights reserved. Printed in the United States of America ISBN: 978-1-62313-8202 Cover design by Rafael Jimenez Human Rights Watch is dedicated to protecting the human rights of people around the world. We stand with victims and activists to prevent discrimination, to uphold political freedom, to protect people from inhumane conduct in wartime, and to bring offenders to justice. We investigate and expose human rights violations and hold abusers accountable. We challenge governments and those who hold power to end abusive practices and respect international human rights law. We enlist the public and the international community to support the cause of human rights for all. Human Rights Watch is an international organization with staff in more than 40 countries, and offices in Amsterdam, Beirut, Berlin, Brussels, Chicago, Geneva, Goma, Johannesburg, London, Los Angeles, Moscow, Nairobi, New York, Paris, San Francisco, Tokyo, Toronto, Tunis, Washington DC, and Zurich. For more information, please visit our website: http://www.hrw.org APRIL 2020 Summary An Inherently Discriminatory -

Rohingyas in India State of Rohingya Muslims in India in Absence of Refugee Law

© File photo, open source Rohingyas in India State of Rohingya Muslims in India in absence of Refugee Law ©Report by Stichting the London Story Kvk 77721306; The Netherlands [email protected] www.thelondonstory.org Twitter Foundation London Story is a registered NGO based in the Netherlands with a wide network of friends and colleagues working in India. Our focus lies in documenting hate speech and hate crimes against Indian minorities. This report on the Rohingya was prepared by Ms. Snehal Dote with inputs from Dr. Ritumbra Manuvie (corresponding author). The report was internally reviewed for editorial and content sanctity. The facts expressed in the report are sourced from reliable sources and checked for their authenticity. The opinions and interpretations are attributed to the authors alone and the Foundation cannot be held liable for the same. Foundation London Story retains all copyrights for this report under a CC license. For concerns and further information, you can reach us at [email protected] Contents Background; Data; India’s International Law Obligations; Timeline of Neglect and Discrimination on Grounds of Religion; Rationalizing exclusion, neglect, and dehumanizing of Rohingyas -

Deeply Disturbing

RNI No: TELENG/2017/72414 A Journal of Practitioners of Journalism Vol. 5 No. 2 Pages: 32 Price: 20 April 2021 Editorial Advisers: S N Sinha, K Sreenivas Reddy, Devendra Chintan, L S Herdenia Deeply Disturbing The Information Technology (Guidelines for Intermediaries and Digital Media Ethics Code) Rules, 2021 issued by the Union Government in the last week of February is deeply disturbing for their impact on the constitutionally guaranteed freedom of expression and the freedom of the press. The government is deceptively projecting them as a grievance redressal mechanism over the content in social media, OTT video streaming services, and digital news media outlets. It is nobody's case that there should be no regulatory mechanism for the explosively growing social media platforms, OTT services, and digital news media platforms and their impact on the people. The clubbing of digital news media outlets with the social media and OTT services are flawed as the news media whether digital, electronic, or print have the same characteristics as they are manned by working journalists who are trained to verify facts, contextualise, give a perspective to a news story unlike the social media platforms which are essentially messaging and communication platforms and use the content generated by the untrained and not fact-checked. Likewise, digital media websites have news and editorial desks to function as gatekeepers to filter unchecked, unverified, and motivated news before going into the public domain as in the case of print media. As pointed out in several judgments of the apex court, censoring, direct or covert is unconstitutional and undemocratic. -

Understanding Violence Against Women in Rural Haryana

INTERNATIONAL HUMAN RIGHTS INTERNSHIP PROGRAM | WORKING PAPER SERIES VOL. 9 | NO. 1 | SUMMER 2020 Consent, Creditability, and Coercion: Understanding Violence Against Women in Rural Haryana Mahima Kapur ABOUT CHRLP Established in September 2005, the Centre for Human Rights and Legal Pluralism (CHRLP) was formed to provide students, professors and the larger community with a locus of intellectual and physical resources for engaging critically with the ways in which law affects some of the most compelling social problems of our modern era, most notably human rights issues. Since then, the Centre has distinguished itself by its innovative legal and interdisciplinary approach, and its diverse and vibrant community of scholars, students and practitioners working at the intersection of human rights and legal pluralism. CHRLP is a focal point for innovative legal and interdisciplinary research, dialogue and outreach on issues of human rights and legal pluralism. The Centre’s mission is to provide students, professors and the wider community with a locus of intellectual and physical resources for engaging critically with how law impacts upon some of the compelling social problems of our modern era. A key objective of the Centre is to deepen transdisciplinary collaboration on the complex social, ethical, political and philosophical dimensions of human rights. The current Centre initiative builds upon the human rights legacy and enormous scholarly engagement found in the Universal Declaration of Human Rights. ABOUT THE SERIES The Centre for Human Rights and Legal Pluralism (CHRLP) Working Paper Series enables the dissemination of papers by students who have participated in the Centre’s International Human Rights Internship Program (IHRIP). -

The Contagion of Hate in India

THE CONTAGION OF HATE IN INDIA By Laxmi Murthy Laxmi Murthy is a journalist based in Bangalore, India. This paper attempts to understand the phenomenon of hate speech and its potential to legitimise discrimination and promote violence against its targets. It lays out the interconnections between Islamophobia, hate speech and acts of physical violence against Muslims. The role of social media, especially messaging platforms like WhatsApp and Facebook, in facilitating the easy and rapid spread of fake news and rumours and amplifying hate, is also examined. The complexities of regulating social media platforms, which have immense political and corporate backing, have been touched upon. This paper also looks at the contentious and contradictory interplay of hate speech with the constitutionally guaranteed freedom of speech and expression and recent jurisprudence on these matters. Introduction The COVID-19 pandemic that began its sweep across the globe in early 2020 has had a devastating effect not only on health, life and livelihoods, but on the fabric of society itself. As with all moments of social crisis, it has deepened existing cleavages, as well as dented and even demolished already fragile secular democratic structures. While social and economic marginalisation has sharpened, one of the starkest consequences has been the naturalisation of hatred towards India’s largest minority community. Along with the spread of COVID-19, Islamophobia spiked and spread rapidly into every sphere of life. From calls to marginalise, isolate, segregate and boycott Muslim communities, to sanctioning the use of force, hateful prejudice peaked and spilled over into violence. An already beleaguered Muslim