Phonological Primes and McGurk Fusion

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Vowel Quality and Phonological Projection

Vowel Quality and Phonological Projection. Marc van Oostendorp. PhD Thesis, Tilburg University, September. Acknowledgements: The following people have helped me prepare and write this dissertation: John Alderete, Elena Anagnostopoulou, Sjef Barbiers, Outi Bat-El, Dorothee Beermann, Clemens Bennink, Adams Bodomo, Geert Booij, Hans Broekhuis, Norbert Corver, Martine Dhondt, Ruud and Henny Dhondt, Joe Emonds, Dicky Gilbers, Janet Grijzenhout, Carlos Gussenhoven, Gertjan Hakkenberg, Marco Haverkort, Lars Hellan, Ben Hermans, Bart Hollebrandse, Hanneke van Hoof, Angeliek van Hout, Roeland van Hout, Harry van der Hulst, Riny Huybregts, Rene Kager, Hans-Peter Kolb, Emiel Krahmer, David Leblanc, Winnie Lechner, Klarien van der Linde, John McCarthy, Dominique Nouveau, Rolf Noyer, Jaap and Hanny van Oostendorp, Paola Monachesi, Krisztina Polgardi, Alan Prince, Curt Rice, Henk van Riemsdijk, Iggy Roca, Sam Rosenthall, Grazyna Rowicka, Lisa Selkirk, Chris Sijtsma, Craig Thiersch, Mieke Trommelen, Ruben van der Vijver, Janneke Visser, Riet Vos, Jeroen van de Weijer, Wim Zonneveld. I want to thank them all. They have made the past four years for what it was the most interesting and happiest period in my life until now. Contents: Introduction; The Headedness of Syllables; The Headedness Hypothesis (HH); Theoretical Background; Syllable Structure; Feature geometry; Specification and Underspecification; Skeletal tier; Model of the grammar; Optimality Theory; Data; Organisation of the thesis; Chapter Chapter -

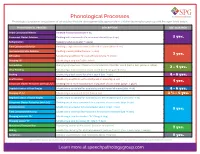

Phonological Processes

Phonological Processes Phonological processes are patterns of articulation that are developmentally appropriate in children learning to speak up until the ages listed below. PHONOLOGICAL PROCESS DESCRIPTION AGE ACQUIRED Initial Consonant Deletion Omitting first consonant (hat → at) Consonant Cluster Deletion Omitting both consonants of a consonant cluster (stop → op) 2 yrs. Reduplication Repeating syllables (water → wawa) Final Consonant Deletion Omitting a singleton consonant at the end of a word (nose → no) Unstressed Syllable Deletion Omitting a weak syllable (banana → nana) 3 yrs. Affrication Substituting an affricate for a nonaffricate (sheep → cheep) Stopping /f/ Substituting a stop for /f/ (fish → tish) Assimilation Changing a phoneme so it takes on a characteristic of another sound (bed → beb, yellow → lellow) 3 - 4 yrs. Velar Fronting Substituting a front sound for a back sound (cat → tat, gum → dum) Backing Substituting a back sound for a front sound (tap → cap) 4 - 5 yrs. Deaffrication Substituting an affricate with a continuant or stop (chip → sip) 4 yrs. Consonant Cluster Reduction (without /s/) Omitting one or more consonants in a sequence of consonants (grape → gape) Depalatalization of Final Singles Substituting a nonpalatal for a palatal sound at the end of a word (dish → dit) 4 - 6 yrs. Stopping of /s/ Substituting a stop sound for /s/ (sap → tap) 3 ½ - 5 yrs. Depalatalization of Initial Singles Substituting a nonpalatal for a palatal sound at the beginning of a word (shy → ty) Consonant Cluster Reduction (with /s/) Omitting one or more consonants in a sequence of consonants (step → tep) Alveolarization Substituting an alveolar for a nonalveolar sound (chew → too) 5 yrs. -

Nasal Assimilation in Quranic Recitation Table of Contents

Eman Quotah. Linguistics Senior Paper. Hadass Sheffer, Advisor. Swarthmore College, December 9, 1994. Nasal Assimilation in Quranic Recitation. Table of Contents: Introduction; The Quran; Recitation and Tajwi:d; Nasal Assimilation in Quranic Recitation; Arabic geminates; Nasal assimilation rules; Blocking of assimilation by pauses; Conclusion; Bibliography. Grateful acknowledgements to my father, my mother and my brothers, and to Hadass Sheffer and Donna Jo Napoli. Introduction This paper is concerned with the analysis of certain rules governing nasality and nasal assimilation during recitation of the holy Quran. These rules are a subset of tajwi:d, a set of rules governing the correct prescribed recitation and pronunciation of the Islamic scriptures. The first part of the paper will describe the historical and cultural importance of the Quran and tajwi:d, with the proposition that a tension or conflict between the necessity for clarity and enunciation and the desire for beautification of the divine words of God is the driving force behind tajwi:d's importance. Though the rules are functional rather than "natural," these prescriptive rules can be integrated into a study of lexical phonology and feature geometry, as discussed in the second section, since prescriptive rules must work within those rules set by the language's grammar. Muslims consider the Quran a divine and holy text, untampered with and unchangeable by humankind. Western scholars have attempted to identify it as the writings of the Prophet Muhammad, a humanly written text like any other. Viewing the holy Quran in this way ignores the religious, social and linguistic implications of its perceived unchangeability, and does disservice to the beliefs of many Muslims. -

Lecture 5 Sound Change

An articulatory theory of sound change Hypothesis: Most common initial motivation for sound change is the automation of production. Tokens reduced online, are perceived as reduced and represented in the exemplar cluster as reduced. Therefore we expect sound changes to reflect a decrease in gestural magnitude and an increase in gestural overlap. What are some ways to test the articulatory model? The theory makes predictions about what is a possible sound change. These predictions could be tested on a cross-linguistic database. Sound changes that take place in the languages of the world are very similar (Blevins 2004, Bateman 2000, Hajek 1997, Greenberg et al. 1978). We should consider both common and rare changes and try to explain both. Common and rare changes might have different characteristics. Among the properties we could look for are types of phonetic motivation, types of lexical diffusion, gradualness, conditioning environment and resulting segments. Common vs. rare sound change? We need a database that allows us to test hypotheses concerning what types of changes are common and what types are not. A database of sound changes? Most sound changes have occurred in undocumented periods so that we have no record of them. Even in cases with written records, the phonetic interpretation may be unclear. Only a small number of languages have historic records. So any sample of known sound changes would be biased towards those languages. A database of sound changes? Sound changes are known only for some languages of the world: Languages with written histories. Sound changes can be reconstructed by comparing related languages. -

Assimilation, Reduction and Elision Reflected in the Selected Song Lyrics of Avenged Sevenfold

Assimilation, Reduction and Elision Reflected in the Selected Song Lyrics of Avenged Sevenfold. Dwi Nita Febriyanti, English Language Studies, Sanata Dharma University. Abstract This paper discusses the phenomena of phonological rules, especially assimilation, reduction and elision processes. In this paper, the writer conducted phonological study which attempts to find the phenomena of those processes in song lyrics. In taking the data, the writer transcribed the lyrics of the songs, along with checking them to the internet source, then observed the lyrics to find the phenomena of assimilation, reduction, and elision. After that, she classified the observed phenomena in the lyrics based on the phonological processes. From the data analysis, the results showed that there were three processes found both in the first and second songs: assimilation, reduction and elision. The difference is that in the first song, it has four kinds of assimilation, while from the second song only has three kinds of assimilation. Keywords: assimilation, reduction, elision Introduction As English spoken by the native speakers, it sometimes undergoes simplification to ease the native speakers in expressing their feelings. That is why, it is common for them to speak English in high speed along with their emotions. As the result, they make a ‘shortcut’ to get ease of their pronunciation. brothers and sisters’ discussion or even in songs, for which songs are considered as the media for the composer to share his feelings. Assimilation usually happens in the double consonants. This is a phenomenon which shows the influence of one sound to another to become more similar. While for the reduction process, it can happen to the -

Sound Change, Priming, Salience

Sound change, priming, salience: Producing and perceiving variation in Liverpool English. Marten Juskan. Language Variation 3. Language Science Press. Language Variation Editors: John Nerbonne, Dirk Geeraerts In this series: 1. Côté, Marie-Hélène, Remco Knooihuizen and John Nerbonne (eds.). The future of dialects. 2. Schäfer, Lea. Sprachliche Imitation: Jiddisch in der deutschsprachigen Literatur (18.–20. Jahrhundert). 3. Juskan, Marten. Sound change, priming, salience: Producing and perceiving variation in Liverpool English. ISSN: 2366-7818 Marten Juskan. 2018. Sound change, priming, salience: Producing and perceiving variation in Liverpool English (Language Variation 3). Berlin: Language Science Press. This title can be downloaded at: http://langsci-press.org/catalog/book/210 © 2018, Marten Juskan Published under the Creative Commons Attribution 4.0 Licence (CC BY 4.0): http://creativecommons.org/licenses/by/4.0/ ISBN: 978-3-96110-119-1 (Digital) 978-3-96110-120-7 (Hardcover) ISSN: 2366-7818 DOI:10.5281/zenodo.1451308 Source code available from www.github.com/langsci/210 Collaborative reading: paperhive.org/documents/remote?type=langsci&id=210 Cover and concept of design: Ulrike Harbort Typesetting: Marten Juskan Proofreading: Alec Shaw, Amir Ghorbanpour, Andreas Hölzl, Felix Hoberg, Havenol Schrenk, Ivica Jeđud, Jeffrey Pheiff, Jeroen van de Weijer, Kate Bellamy Fonts: Linux Libertine, Libertinus Math, Arimo, DejaVu Sans Mono Typesetting software: XeLaTeX Language Science Press Unter den Linden 6 10099 Berlin, Germany langsci-press.org Storage and cataloguing done by FU Berlin To Daniela Contents 1 Introduction 1.1 Intentions – what this study is about -

Information to Users

INFORMATION TO USERS This manuscript has been reproduced from the microfilm master. UMI films the text directly from the original or copy submitted. Thus, some thesis and dissertation copies are in typewriter face, while others may be from any type of computer printer. The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleedthrough, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send UMI a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. Oversize materials (e.g., maps, drawings, charts) are reproduced by sectioning the original, beginning at the upper left-hand corner and continuing from left to right in equal sections with small overlaps. Each original is also photographed in one exposure and is included in reduced form at the back of the book. Photographs included in the original manuscript have been reproduced xerographically in this copy. Higher quality 6" x 9" black and white photographic prints are available for any photographs or illustrations appearing in this copy for an additional charge. Contact UMI directly to order. UMI University Microfilms International, A Bell & Howell Information Company, 300 North Zeeb Road, Ann Arbor, MI 48106-1346 USA 313/761-4700 800/521-0600 Order Number 9401204 Phonetics and phonology of Nantong Chinese Ao, Benjamin Xiaoping, Ph.D. The Ohio State University, 1993 Copyright ©1993 by Ao, Benjamin Xiaoping. -

‘Feet and Fusion: the Case of Malay,’ Appears in the Proceedings of the Southeast Asian Linguistics Society's 13th Annual Conference

Ann Delilkan. Head-dependent Asymmetry: Feet and Fusion in Malay. Abstract The variegated data relating to fusion in Malay have long escaped unified analysis. Past analyses assume that the conditioning environment is morphological. I suggest the question to ask is not why fusion occurs where it does but why it does not occur elsewhere. I show, too, that the answer to this question lies unquestionably in the consideration of prosody, not morphology. Crucially, I claim that the demand for feature recoverability interacts with the prosodic specifications of the language. While seeking independent support in the language for this claim, I find evidence that, far from demanding special treatment, fusion is more appropriately seen as a natural impulse in the language, one of many segmental changes that occur to effect greater prosodic unmarkedness. Within my description of the parameters of fusion, all data relevant to the process that have hitherto been cited as exceptions are, instead, predictable. Fusion in Malay is, in fact, learnable. 1. Introduction: Background and data The complex data relating to fusion (or ‘nasal substitution’) in Malay have long escaped unified analysis. Most past analyses assume that the crucial conditioning environment for the process is purely morphological. Thus, it is claimed that root-initial voiceless obstruents trigger fusion when preceded by nasal-final prefixes (Teoh (1994), Onn (1980), Zaharani (1998), and, on related data in Indonesian, Pater (1996)). All analyses, however, suffer from incomplete coverage of the relevant data, numerous instances set aside as ‘exceptions’, if mentioned at all. In (1), which derives from Delilkan (1999, 2000), ‘•’ denotes data previously considered exceptional. -

1 Phonetic Bias in Sound Change

1 Phonetic bias in sound change. Andrew Garrett and Keith Johnson. 1.1 Introduction Interest in the phonetics of sound change is as old as scientific linguistics (Osthoff and Brugman 1878).[1] The prevalent view is that a key component of sound change is what Hyman (1977) dubbed phonologization: the process or processes by which automatic phonetic patterns give rise to a language’s phonological patterns. Sound patterns have a variety of other sources, including analogical change, but we focus here on their phonetic grounding. In the study of phonologization and sound change, the three long-standing questions in (1) are especially important. (1) a. Typology: Why are some sound changes common while others are rare or nonexistent? b. Conditioning: What role do lexical and morphological factors play in sound change? c. Actuation: What triggers a particular sound change at a particular time and place? In this chapter we will address the typology and actuation questions in some detail; the conditioning question, though significant and controversial, will be discussed only briefly (in §1.5.3). [1] For helpful discussion we thank audiences at UC Berkeley and UC Davis, and seminar students in 2008 (Garrett) and 2010 (Johnson). We are also very grateful to Juliette Blevins, Joan Bybee, Larry Hyman, John Ohala, and Alan Yu, whose detailed comments on an earlier version of this chapter have saved us from many errors and injudicious choices, though we know they will not all agree with what they find here. -

Mayan Phonology Ryan Bennett∗ Yale University

Language and Linguistics Compass 10/10 (2016): 469–514, 10.1111/lnc3.12148 Mayan phonology Ryan Bennett∗ Yale University Abstract This paper presents an overview of the phonetics and phonology of Mayan languages. The focus is primarily descriptive, but the article also attempts to frame the sound patterns of Mayan languages within a larger typological and theoretical context. Special attention is given to areas which are ripe for further descriptive or theoretical investigation. 1. Introduction This article provides an overview of the phonology of Mayan languages. The principal aim is to describe the commonalities and differences between phonological systems in the Mayan family. I have also tried to highlight some phonological patterns which are interesting from a typological or theoretical perspective. There are many intriguing aspects of Mayan phonology which remain understudied; I’ve noted a handful of such phenomena here, with the hope that other researchers will take up these fascinating and difficult questions. Apart from phonology proper, this article also touches on the phonetics and morpho-syntax of Mayan languages (see also Coon this volume). Most work on Mayan phonology has focused on basic phonetic description and phonemic analysis. A much smaller portion of the literature deals directly with phonotactics, alternations, prosody, morpho-phonology, or quantitative phonetics. Since phonology connects with all of these topics, I have tried to be as thematically inclusive as possible given the current state of research on Mayan sound patterns. The phonemic systems of Mayan languages are comparatively well-studied, thanks to the efforts of numerous Mayan and non-Mayan scholars. This article has drawn heavily on grammatical descriptions published by the Proyecto Lingüístico Francisco Marroquín (PLFM), Oxlajuuj Keej Maya’ Ajtz’ib’ (OKMA), and the Academia de Lenguas Mayas de Guatemala (ALMG); many of these works were written by native-speaker linguists. -

Turkish Vowel Epenthesis

Turkish vowel epenthesis. Jorge Hankamer. Introduction One of the striking features of Turkish morphophonology is the system of C~0 and V~0 alternations at morpheme boundaries, which seem to conspire to prevent the occurrence of a stem-final V and a suffix-initial V next to each other. The classic analysis of this phenomenon was provided by Lees (1961), and has been generally accepted since. Zimmer (1965, 1970), however, noticed a flaw in the Lees analysis, and proposed a correction, which will be discussed in section 2. The present paper argues that there is a second flaw in the Lees analysis, and one that weakens the case for Zimmer’s proposed correction. Instead, I propose a more radical reanalysis of the phenomenon, in which most of the V~0 alternations are due not to vowel deletions, as in the Lees analysis, but to vowel epenthesis. This raises some theoretically interesting questions. 1 The V~0 alternation Turkish,[1] with certain exceptions,[2] does not permit VV sequences within words. Thus, while both V-initial and V-final syllables are permitted, VV sequences are avoided both [1] The variety of Turkish investigated here is “Modern Standard Turkish”, cf. Lees 1961, Lewis 1967. [2] There are four classes of exceptions: (i) some borrowed roots which originally had a glottal stop either between vowels or at the end of the root (the glottal stop being lost in modern Turkish) have VV root-internally or V+V with a V-initial suffix: saat ‘hour, clock’ < Arabic sa?at; şair ‘poet’ < Arabic şa?ir; camii ‘mosque-poss’ < cami? + (s)I (ii) Turkish words with syllable-initial ğ (“yumuşak ge”) have VV superficially due to the zero realization of the ğ: kağıt ‘paper’; dağı < dağ + (y)I ‘mountain-acc’; geleceği < gel + AcAg+I ‘come-fut-poss’ Commonly, the first vowel is just pronounced long. -

L2 Production of English Word-Final Consonants: the Role of Orthography and Learner Profile Variables

L2 PRODUCTION OF ENGLISH WORD-FINAL CONSONANTS: THE ROLE OF ORTHOGRAPHY AND LEARNER PROFILE VARIABLES (Portuguese title: PRODUÇÃO DE CONSOANTES FINAIS DO INGLÊS COMO L2: O PAPEL DA ORTOGRAFIA E DE VARIÁVEIS RELACIONADAS AO PERFIL DO APRENDIZ) Rosane Silveira* ABSTRACT The present study investigates some factors affecting the acquisition of second language (L2) phonology by learners with considerable exposure to the target language in an L2 context. More specifically, the purpose of the study is two-fold: (a) to investigate the extent to which participants resort to phonological processes resulting from the transfer of L1 sound-spelling correspondence into the L2 when pronouncing English word-final consonants; and (b) to examine the relationship between rate of transfer and learner profile factors, namely proficiency level, length of residence in the L2 country, age of arrival in the L2 country, education, chronological age, use of English with native speakers, attendance in EFL courses, and formal education. The investigation involved 31 Brazilian speakers living in the United States with diverse profiles. Data were collected using a questionnaire to elicit the participants’ profiles, a sentence-reading test (pronunciation measure), and an oral picture-description test (L2 proficiency measure). The results indicate that even in an L2 context, the transfer of L1 sound-spelling correspondence to the production of L2 word-final consonants is frequent. The findings also reveal that extensive exposure to rich L2 input leads to the development of proficiency and improves production of L2 word-final consonants. Keywords: L2 consonant production; grapho-phonological transfer; learner profile. RESUMO (Portuguese abstract, translated) The present study examines factors that affect the production of consonants in a second language (L2) by learners who have had considerable exposure to the target language in an L2 context.