Detection of Retinal Abnormalities in Fundus Image Using CNN Deep Learning Networks
Mohamed Akil, Yaroub Elloumi, Rostom Kachouri

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

12 Retina Gabriele K

12 Retina. Gabriele K. Lang and Gerhard K. Lang. 12.1 Basic Knowledge: The retina is the innermost of three successive layers of the globe. It comprises two parts: ❖ A photoreceptive part (pars optica retinae), comprising the first nine of the 10 layers listed below. ❖ A nonreceptive part (pars caeca retinae) forming the epithelium of the ciliary body and iris. The pars optica retinae merges with the pars caeca retinae at the ora serrata. Embryology: The retina develops from a diverticulum of the forebrain (prosencephalon). Optic vesicles develop which then invaginate to form a double-walled bowl, the optic cup. The outer wall becomes the pigment epithelium, and the inner wall later differentiates into the nine layers of the retina. The retina remains linked to the forebrain throughout life through a structure known as the retinohypothalamic tract. Thickness of the retina (Fig. 12.1). Layers of the retina: Moving inward along the path of incident light, the individual layers of the retina are as follows (Fig. 12.2): 1. Inner limiting membrane (glial cell fibers separating the retina from the vitreous body). 2. Layer of optic nerve fibers (axons of the third neuron). 3. Layer of ganglion cells (cell nuclei of the multipolar ganglion cells of the third neuron; “data acquisition system”). 4. Inner plexiform layer (synapses between the axons of the second neuron and dendrites of the third neuron). 5. Inner nuclear layer (cell nuclei of the bipolar nerve cells of the second neuron, horizontal cells, and amacrine cells). 6. Outer plexiform layer (synapses between the axons of the first neuron and dendrites of the second neuron). -

Morphological Aspect of Tapetum Lucidum at Some Domestic Animals

Bulletin UASVM, Veterinary Medicine 65(2)/2008 pISSN 1843-5270; eISSN 1843-5378 MORPHOLOGICAL ASPECT OF TAPETUM LUCIDUM AT SOME DOMESTIC ANIMALS Donisă Alina, A. Muste, F. Beteg, Roxana Briciu, University of Agronomical Sciences and Veterinary Medicine, Calea Mănăştur 3-5, Cluj-Napoca [email protected] Keywords: animal ophthalmology, eye fundus, tapetum lucidum. Abstract: The ocular microanatomy of a nocturnal and a diurnal eye are very different, with compromises needed in the arrhythmic eye. Anatomic differences in light gathering are found in the organization of the retina and the optical system. The presence of a tapetum lucidum influences the light. The tapetum lucidum represents a remarkable example of neural cell and tissue specialization as an adaptation to a dim light environment and, despite these differences, all tapetal variants act to increase retinal sensitivity by reflecting light back through the photoreceptor layer. This study proposes an eye fundus examination in animals of different species (cattle, sheep, pigs, dogs, cats and rabbits) to determine the presence or absence of the tapetum lucidum and its characteristics by species, age and even breed. Our observations were made between 2005 - 2007 at the surgery pathology clinic from FMV Cluj, on 31 subjects from different species like horses, dogs and cats (25 animals). MATERIAL AND METHOD Our observations were made between 2005 - 2007 at the surgery pathology clinic from FMV Cluj, on 30 subjects from different species like cattle, sheep, pigs, dogs, cats and rabbits. The animals were halted and the examination was made with minimal tranquilization. For this purpose we used the indirect ophthalmoscopy method with the indirect ophthalmoscope Heine Omega 2C. -

Characteristics of Structures and Lesions of the Eye in Laboratory Animals Used in Toxicity Studies

J Toxicol Pathol 2015; 28: 181–188 Concise Review Characteristics of structures and lesions of the eye in laboratory animals used in toxicity studies Kazumoto Shibuya1*, Masayuki Tomohiro2, Shoji Sasaki3, and Seiji Otake4 1 Testing Department, Nippon Institute for Biological Science, 9-2221-1 Shin-machi, Ome, Tokyo 198-0024, Japan 2 Clinical & Regulatory Affairs, Alcon Japan Ltd., Toranomon Hills Mori Tower, 1-23-1 Toranomon, Minato-ku, Tokyo 105-6333, Japan 3 Japan Development, AbbVie GK, 3-5-27 Mita, Minato-ku, Tokyo 108-6302, Japan 4 Safety Assessment Department, LSI Medience Corporation, 14-1 Sunayama, Kamisu-shi, Ibaraki 314-0255, Japan Abstract: Histopathology of the eye is an essential part of ocular toxicity evaluation. There are structural variations of the eye among several laboratory animals commonly used in toxicity studies, and many cases of ocular lesions in these animals are related to anatomical and physiological characteristics of the eye. Since albino rats have no melanin in the eye, findings of the fundus can be observed clearly by ophthalmoscopy. Retinal atrophy is observed as a hyper-reflective lesion in the fundus and is usually observed as degeneration of the retina in histopathology. Albino rats are sensitive to light, and light-induced retinal degeneration is commonly observed because there is no melanin in the eye. Therefore, it is important to differentiate the causes of retinal degeneration because the lesion occurs spontaneously and is induced by several drugs or by lighting. In dogs, the tapetum lucidum, a multilayered reflective tissue of the choroid, is one of the unique structures of the eye. -

Ophthalmology Abbreviations Alphabetical

COMMON OPHTHALMOLOGY ABBREVIATIONS. Illinois Eye and Ear Infirmary, UIC Department of Ophthalmology & Visual Sciences. Listed as one of America’s Best Hospitals for Ophthalmology by U.S.News & World Report. Commonly Used Ophthalmology Abbreviations, Alphabetical: A POCKET GUIDE FOR RESIDENTS. Compiled by: Bryan Kim, MD. Abbreviations (abbreviation: meaning [category]): A/C or AC: anterior chamber [Anterior chamber]. A1%: atropine 1% [Dilators (red top); Drops/Meds]. ACC: anterior cortical changes/cataract [Lens: Diagnoses/findings]. ACG: angle closure glaucoma [Glaucoma: Diagnoses/findings]. ACIOL: anterior chamber intraocular lens [Lens]. Add: amount of plus reading power (for bifocal/progressives) [Refraction]. AION: anterior ischemic optic neuropathy [Nerve/Neuro: Diagnoses/findings]. Education: The Department of Ophthalmology accepts six residents to its program each year, making it one of the nation’s largest programs. We are also one of the most competitive with well over 600 applicants annually, of whom 84 are granted interviews. Our selection standards are among the highest. Our incoming residents graduated from prestigious medical schools including Brown, Northwestern, MIT, Cornell, University of Michigan, and University of Southern California. GPA’s are typically 4.0 and board scores are rarely lower than the 95th percentile. Most applicants have research experience. In recent years our residents have gone on to prestigious fellowships at UC Davis, University of Chicago, Northwestern, University of Iowa, Oregon Health Sciences University, Bascom Palmer, Duke, UCSF, Emory, Wilmer Eye Institute, and UCLA. Our tradition of excellence in ophthalmologic education is reflected in the leadership positions held by our alumni, who serve as chairs of ophthalmology departments, the dean of a leading medical school, and the director of the National Eye Institute. -

Entoptic Phenomena and Reproducibility of Corneal Striae

Br J Ophthalmol: first published as 10.1136/bjo.71.10.737 on 1 October 1987. British Journal of Ophthalmology, 1987, 71, 737-741 Entoptic phenomena and reproducibility of corneal striae following contact lens wear MURRAY H JOHNSON, C MONTAGUE RUBEN, AND DAVID M PERRIGIN From the Institute for Contact Lens Research, University of Houston-University Park, 4901 Calhoun Road, Houston, Texas 77004, USA SUMMARY Vertical corneal striae distributed across the posterior cornea are one of the objective signs of clinically unacceptable corneal swelling (>6%) resulting from contact lens wear. This study reports that corneal striae are repeatable both in configuration and location with different levels of hypoxia. In most instances entoptic phenomena result from the presence of these lines. The results suggest that the healthy, avascular, transparent cornea has certain localised areas in its anatomical structure which may give rise to bundles of collagen fibres being made visible objectively and subjectively during conditions of corneal swelling. Corneal striae is a term used to describe lines seen in the corneal stroma of varied appearance, aetiology, and pathology. Deep striae have been seen as a result of trauma (penetrating wounds),' after intraocular operations (striate keratitis),' in degenerative [...] vidual was strikingly similar. Furthermore, there was intrasubject repeatability of striae with large intersubject variability of corneal striate lines. In this paper we describe the repeatability and entoptic phenomena of corneal -

Retinal Pigment Epithelial Lipofuscin and Melanin and Choroidal Melanin in Human Eyes

Retinal Pigment Epithelial Lipofuscin and Melanin and Choroidal Melanin in Human Eyes John J. Weiter,*† Francois C. Delori,*† Glenn L. Wing,† and Karbrra A. Fitch* Optical measurements of the pigments of the retinal pigment epithelium (RPE) and choroid were made on 38 human autopsy eyes of both blacks and whites, varying in age between 2 wk and 90 yr old. Lipofuscin in melanin-bleached RPE was measured as fluorescence at 470 nm following excitation at 365 nm and was found to be proportional to fluorescence measured at 560 nm in unbleached tissue. Transmission measurements of RPE and choroidal melanin were converted and expressed as optical density units. The choroidal melanin content increased from the periphery to the posterior pole. RPE melanin concentration decreased from the periphery to the posterior pole with an increase in the macula. Conversely, the amount of RPE lipofuscin increased from the periphery to the posterior pole with a consistent dip at the fovea. There was an inverse relationship between RPE lipofuscin concentration and RPE melanin concentration. The RPE melanin content was similar between whites and blacks. Lipofuscin concentration was significantly greater (P = 0.002) in the RPE of whites compared to blacks, whereas blacks had a significantly greater (P = 0.005) choroidal melanin content than whites. The amounts of both choroidal and RPE melanin showed a trend of decreasing content with aging, whereas the amount of RPE lipofuscin tended to increase (whites > blacks). Per fundus area, the amount of choroidal melanin was always greater than that in the RPE. There was a statistically significant (P = 0.001) increase in RPE height with age, most marked in eyes of whites after age 50 and correlated with the increase in lipofuscin concentration. -

Microsporidial Stromal Keratitis

CLINICOPATHOLOGIC REPORTS, CASE REPORTS, AND SMALL CASE SERIES SECTION EDITOR: W. RICHARD GREEN, MD Microsporidial Stromal Keratitis Microsporida are spore-forming, obligate eukaryotic protozoan parasites that belong to the phylum Microspora. The ophthalmic manifestations of ocular microsporidiosis exhibit characteristic clinical features depending on the genus involved. With the genus Encephalitozoon, infection is limited to the epithelial cells of the cornea and conjunctiva, producing a diffuse punctate epithelial keratoconjunctivitis. With the genera Nosema and Microsporidium, the infection typically involves the corneal stroma, including the keratocytes.1 To date, only 5 case reports of microsporidial stromal keratitis have been published.2-6 We report an additional case caused by Vittaforma corneae (formerly known as No- [...] and scrapings collected for bacterial, chlamydial, fungal, and herpesvirus cultures were all negative for microorganisms. Over the next 2 months, the visual acuity improved to 20/25 OD, with gradual resolution of the stromal edema. [...] wrinkled and folded. The endothelium was attenuated with a retrocorneal fibrovascular membrane, containing few neutrophils. The corneal stroma showed round to oval organisms measuring 2.0 to 4.0 µm. These organisms were mostly lo- [...] Figure 1. The clinical appearance of the right cornea at the time of the initial examination shows a central area of stromal infiltration and edema without suppuration. A 3% hypopyon is present inferiorly. Figure 2. The clinical appearance of the right cornea 9 months after the initial examination displays a central epithelial defect associated with a disciform opacity. Superficial peripheral corneal vascularization is observed superiorly. -

Funduscopic Interpretation Understanding the Fundus: Is That Normal?

Funduscopic Interpretation. Understanding the Fundus: is that normal? Gillian McLellan BVMS PhD DVOphthal DECVO DACVO MRCVS. With thanks to Christine Heinrich and all who contributed images. Fundus ≠ Retina: working knowledge of fundus anatomy; how to evaluate the fundus systematically; normal fundus variants; hallmarks of fundus pathology. Fundus anatomy: vitreous normally optically clear (almost); only retinal vessels / pigmented RPE +/- inner choroid (tapetum) make a major contribution to the typical appearance of a normal fundus. Retinal vascular patterns: holangiotic, merangiotic, paurangiotic, anangiotic. Retinal anatomy: light traverses 8 retinal layers to reach photoreceptor O.S.; photoreceptors largely responsible for translucent appearance of NSR; cone density highest in area centralis or macula; RPE = single cell layer (pigmented / non-tapetal; tapetal / non-pigmented). Retinal anatomy, tapetum: structure within the choroid; cellular in dog and cat; regular compacted collagen array in ruminants, horses; visible due to absence of pigment in overlying RPE; seen ophthalmoscopically as a bright, shiny sweep of color (or not). Tapetal fundus; non-tapetal fundus; RPE. Tapetal development: RPE completely pigments during fetal development; autophagy of melanin occurs in presumptive tapetal zone; RPE depigments; tapetal maturation is not complete until 12-14 weeks of age in dogs and cats. Connecting capillaries: in areas of thin tapetum choroidal vessels may segmentally shine through; in some cases the long posterior ciliary artery or vortex veins may also be seen. Tapetal variation in equine fundus. Feline tapetum: transient variant. Normal non-tapetal fundus: varies in color depending on amount of melanin in RPE and choroid; RPE contains melanin, tapetum absent. Normal non-tapetal fundus: non-tapetal fundus; (optic disc); (retinal blood vessels); RPE contains melanin where the tapetum is absent. -

The Horizontal Raphe of the Human Retina and Its Watershed Zones

vision Review The Horizontal Raphe of the Human Retina and its Watershed Zones Christian Albrecht May * and Paul Rutkowski Department of Anatomy, Medical Faculty Carl Gustav Carus, TU Dresden, 74, 01307 Dresden, Germany; [email protected] * Correspondence: [email protected] Received: 24 September 2019; Accepted: 6 November 2019; Published: 8 November 2019 Abstract: The horizontal raphe (HR) as a demarcation line dividing the retina and choroid into separate vascular hemispheres is well established, but its development has never been discussed in the context of new findings of the last decades. Although factors for axon guidance are established (e.g., slit-robo pathway, ephrin-protein-receptor pathway), they do not explain HR formation. Early morphological organization, too, fails to establish a HR. The development of the HR is most likely induced by the long posterior ciliary arteries, which form a horizontal line prior to retinal organization. The maintenance might then be supported by several biochemical factors. The circulation of the separate superior and inferior vascular hemispheres communicates across the HR only through their anastomosing capillary beds, resulting in watershed zones on either side of the HR. Visual field changes along the HR could clearly be demonstrated in vascular occlusive diseases affecting the optic nerve head, the retina or the choroid. The watershed zone of the HR is ideally protective for central visual acuity in vascular occlusive diseases but can lead to distinct pathological features. Keywords: anatomy; choroid; development; human; retina; vasculature 1. Introduction The horizontal raphe (HR) was first described in the early 1800s as a horizontal demarcation line that extends from the macula to the temporal ora, dividing the temporal retinal nerve fiber layer into a superior and inferior half [1]. -

Multimodal Imaging in a Patient with Prader–Willi Syndrome Mohamed A

Hamid et al. Int J Retin Vitr (2018) 4:45 https://doi.org/10.1186/s40942-018-0147-6 International Journal of Retina and Vitreous CASE REPORT Open Access Multimodal imaging in a patient with Prader–Willi syndrome Mohamed A. Hamid*, Mitul C. Mehta and Baruch D. Kuppermann Abstract Background: Prader–Willi syndrome (PWS) is a genetic disease caused by loss of expression of the paternally inherited copy of several genes on the long arm of chromosome 15. Ophthalmic manifestations of PWS include strabismus, amblyopia, nystagmus, hypopigmentation of the iris and choroid, diabetic retinopathy, cataract and congenital ectropion uvea. An overlap between PWS and oculocutaneous albinism (OCA) has long been recognized and attributed to deletion of OCA2 gene located in PWS critical region (PWCR). Case report: A 30-year-old male patient with PWS presented with vision loss in his left eye. His right eye had normal visual acuity. Multimodal imaging revealed absence of a foveal depression and extremely reduced diameter of the foveal avascular zone in the right eye and an inactive type 2 macular neovascular lesion in the left eye. Conclusions: We report a presumed association of fovea plana and choroidal neovascularization with PWS. The use of multimodal imaging revealed novel findings in a PWS patient that might enrich our current understanding of the overlap between PWS and OCA. Keywords: Prader–Willi syndrome, Fovea plana, Macular-foveal capillaries, Type 2 macular neovascularization Background Prader–Willi syndrome (PWS) is a complex genetic disorder caused by lack of expression of the paternally-inherited PWS critical region (PWCR) of chromosome [...] stereopsis, amblyopia and nystagmus [1]. Hypopigmentation of the iris and choroid, diabetic retinopathy, cataract, congenital ocular fibrosis syndrome and congenital ectropion uvea have also been reported [3]. -

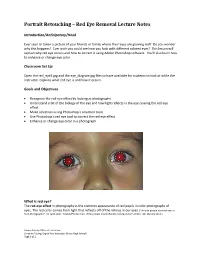

Portrait Retouching – Red Eye Removal Lecture Notes

Portrait Retouching – Red Eye Removal Lecture Notes. Introduction/Anticipatory/Hook: Ever seen or taken a picture of your friends or family where their eyes are glowing red? Do you wonder why this happens? Ever wish you could see how you look with different colored eyes? This lesson will explain why red eye occurs and how to correct it using Adobe Photoshop software. You’ll also learn how to enhance or change eye color. Classroom Set Up: Open the red_eye2.jpg and the eye_diagram.jpg files to have available for students to look at while the instructor explains what red eye is and how it occurs. Goals and Objectives: Recognize the red-eye effect by looking at photographs; understand a bit of the biology of the eye and how light reflects in the eye causing the red-eye effect; make selections using Photoshop’s selection tools; use Photoshop’s red eye tool to correct the red-eye effect; enhance or change eye color in a photograph. What is red eye? The red-eye effect in photography is the common appearance of red pupils in color photographs of eyes. The red color comes from light that reflects off of the retinas in our eyes. ("Why do people have red eyes in flash photographs?" 01 April 2000. HowStuffWorks.com. <http://www.howstuffworks.com/question51.htm> 08 February 2011.) Solano County Office of Education, Christine A Long, Digital Arts Instructor (Dixon High School). Why does red eye happen? The red-eye effect occurs when using a photographic flash very close to the camera lens (as with most compact cameras or cell phone cameras), in ambient low light. -

The Dark Choroid in Posterior Retinal Dystrophies

Br J Ophthalmol: first published as 10.1136/bjo.65.5.359 on 1 May 1981. British Journal of Ophthalmology, 1981, 65, 359-363 The dark choroid in posterior retinal dystrophies G. FISH, R. GREY, K. S. SEHMI, AND A. C. BIRD From the Professorial Unit, Moorfields Eye Hospital, City Road, London EC1V 2PD SUMMARY Many patients with heredomacular degeneration exhibit a peculiar fluorescein angiographic finding of absence of the normal background fluorescence (a dark choroid). The cause of this is unknown but may relate to the deposition of an abnormal material in the retinal pigment epithelial cells. The finding does not correlate with severity or duration of disease but is more frequent in patients with flecks. The finding may be useful in subdividing heredomacular degenerations into more specific disease groups. Inherited macular degeneration comprises several different diseases of unknown aetiology, some of which are well recognised and believed to be single neurological entities, for example, Best's disease, polymorphous dystrophy, pseudoinflammatory macular dystrophy, and central areolar choroidal sclerosis. In the remainder the individual disorders are unrecognised, and attempts have been made to subdivide and categorise them in terms of their functional, genetic, and morphological characteristics. [...] visual loss after 60 years of age, and those with degeneration due to toxic agents were excluded. Stereo colour fundus photographs and stereo angiography were accomplished on all patients, and as many as possible had electrodiagnostic studies and testing of visual fields and colour vision. Colour vision testing included HRR plates, Ishihara plates, panel D 15, and Farnsworth-Munsell 100 hue. Visual fields were recorded on a Goldmann perimeter, and electrodiagnostic tests included