No Belief Propagation Required

Recommended publications

Linear Algebraic Techniques for Spanning Tree Enumeration

LINEAR ALGEBRAIC TECHNIQUES FOR SPANNING TREE ENUMERATION. STEVEN KLEE AND MATTHEW T. STAMPS. Abstract. Kirchhoff's Matrix-Tree Theorem asserts that the number of spanning trees in a finite graph can be computed from the determinant of any of its reduced Laplacian matrices. In many cases, even for well-studied families of graphs, this can be computationally or algebraically taxing. We show how two well-known results from linear algebra, the Matrix Determinant Lemma and the Schur complement, can be used to elegantly count the spanning trees in several significant families of graphs. 1. Introduction. A graph G consists of a finite set of vertices and a set of edges that connect some pairs of vertices. For the purposes of this paper, we will assume that all graphs are simple, meaning they do not contain loops (an edge connecting a vertex to itself) or multiple edges between a given pair of vertices. We will use V(G) and E(G) to denote the vertex set and edge set of G respectively. For example, the graph G with V(G) = {1, 2, 3, 4} and E(G) = {{1, 2}, {2, 3}, {3, 4}, {1, 4}, {1, 3}} is shown in Figure 1. A spanning tree in a graph G is a subgraph T ⊆ G, meaning T is a graph with V(T) ⊆ V(G) and E(T) ⊆ E(G), that satisfies three conditions: (1) every vertex in G is a vertex in T, (2) T is connected, meaning it is possible to walk between any two vertices in G using only edges in T, and (3) T does not contain any cycles.
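As a rough illustration of the Matrix-Tree Theorem mentioned in this excerpt, the sketch below counts the spanning trees of the example graph with V(G) = {1, 2, 3, 4} by taking the determinant of a reduced Laplacian. The function names and the use of exact rational arithmetic are assumptions made for the example and are not taken from the paper.

```python
from fractions import Fraction


def determinant(matrix):
    """Exact determinant by Gaussian elimination over the rationals."""
    a = [[Fraction(x) for x in row] for row in matrix]
    n = len(a)
    det = Fraction(1)
    for col in range(n):
        # Find a row at or below the diagonal with a nonzero pivot.
        pivot = next((r for r in range(col, n) if a[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            a[col], a[pivot] = a[pivot], a[col]
            det = -det  # a row swap flips the sign of the determinant
        det *= a[col][col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n):
                a[r][c] -= factor * a[col][c]
    return det


def count_spanning_trees(vertices, edges):
    """Count spanning trees of a simple graph via a reduced Laplacian."""
    index = {v: i for i, v in enumerate(vertices)}
    n = len(vertices)
    laplacian = [[0] * n for _ in range(n)]
    for u, v in edges:
        i, j = index[u], index[v]
        laplacian[i][i] += 1  # degree terms on the diagonal
        laplacian[j][j] += 1
        laplacian[i][j] -= 1  # -1 for each adjacent pair
        laplacian[j][i] -= 1
    # Delete the last row and column to obtain one reduced Laplacian.
    reduced = [row[:-1] for row in laplacian[:-1]]
    return int(determinant(reduced))


example_vertices = [1, 2, 3, 4]
example_edges = [(1, 2), (2, 3), (3, 4), (1, 4), (1, 3)]
tree_count = count_spanning_trees(example_vertices, example_edges)
```

For this graph `tree_count` is 8: the graph is the complete graph on four vertices with one edge removed, and 8 of the 16 spanning trees of the complete graph avoid that edge.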

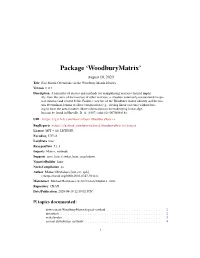

Package 'WoodburyMatrix'

Package ‘WoodburyMatrix’, August 10, 2020. Title: Fast Matrix Operations via the Woodbury Matrix Identity. Version: 0.0.1. Description: A hierarchy of classes and methods for manipulating matrices formed implicitly from the sums of the inverses of other matrices, a situation commonly encountered in spatial statistics and related fields. Enables easy use of the Woodbury matrix identity and the matrix determinant lemma to allow computation (e.g., solving linear systems) without having to form the actual matrix. More information on the underlying linear algebra can be found in Harville, D. A. (1997) <doi:10.1007/b98818>. URL: <https://github.com/mbertolacci/WoodburyMatrix>. BugReports: <https://github.com/mbertolacci/WoodburyMatrix/issues>. License: MIT + file LICENSE. Encoding: UTF-8. LazyData: true. RoxygenNote: 7.1.1. Imports: Matrix, methods. Suggests: covr, lintr, testthat, knitr, rmarkdown. VignetteBuilder: knitr. NeedsCompilation: no. Author: Michael Bertolacci [aut, cre, cph] (<https://orcid.org/0000-0003-0317-5941>). Maintainer: Michael Bertolacci <[email protected]>. Repository: CRAN. Date/Publication: 2020-08-10 12:30:02 UTC. R topics documented: determinant,WoodburyMatrix,logical-method; instantiate; mahalanobis; normal-distribution-methods; solve-methods; WoodburyMatrix; WoodburyMatrix-class; Index. determinant,WoodburyMatrix,logical-method: Calculate the determinant of a WoodburyMatrix object. Description: Calculates the (log) determinant of a WoodburyMatrix using the matrix determinant lemma. Usage: ## S4 method for signature 'WoodburyMatrix,logical' determinant(x, logarithm). Arguments: x, an object that is a subclass of WoodburyMatrix; logarithm, a logical indicating whether to return the logarithm of the matrix. Value: Same as base::determinant.
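The matrix determinant lemma that this package description refers to can be checked on a small case. The sketch below is in Python rather than R and does not use or mirror the WoodburyMatrix API; it only illustrates the rank-one form of the lemma, det(D + u vᵀ) = (1 + vᵀ D⁻¹ u) det(D), for an invertible diagonal D. All names and the sample numbers are hypothetical.

```python
from fractions import Fraction


def det(matrix):
    """Exact determinant by cofactor expansion (only sensible for tiny matrices)."""
    if not matrix:
        return Fraction(1)
    total = Fraction(0)
    for j, entry in enumerate(matrix[0]):
        minor = [row[:j] + row[j + 1:] for row in matrix[1:]]
        total += (-1) ** j * entry * det(minor)
    return total


def det_rank_one_update(diagonal, u, v):
    """det(D + u v^T) for an invertible diagonal D, via the determinant lemma.

    det(D + u v^T) = (1 + v^T D^{-1} u) * det(D)
    """
    det_d = Fraction(1)
    for d in diagonal:
        det_d *= d
    # v^T D^{-1} u is a plain sum because D is diagonal.
    correction = 1 + sum(vi * ui / d for d, ui, vi in zip(diagonal, u, v))
    return correction * det_d


diagonal = [Fraction(2), Fraction(3), Fraction(5)]
u = [Fraction(1), Fraction(2), Fraction(-1)]
v = [Fraction(4), Fraction(0), Fraction(1)]

# The dense matrix is formed here only to check the shortcut.
dense = [
    [(diagonal[i] if i == j else 0) + u[i] * v[j] for j in range(3)]
    for i in range(3)
]
lemma_value = det_rank_one_update(diagonal, u, v)
direct_value = det(dense)
```

Both `lemma_value` and `direct_value` equal 84 here. The point of the lemma is that the left-hand computation never needs the dense matrix, which is the kind of saving the package description alludes to.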

arXiv:1912.02762v2 [stat.ML] 8 Apr 2021

Journal of Machine Learning Research 22 (2021) 1-64. Submitted 12/19; Published 3/21. Normalizing Flows for Probabilistic Modeling and Inference. George Papamakarios∗ [email protected], Eric Nalisnick∗ [email protected], Danilo Jimenez Rezende [email protected], Shakir Mohamed [email protected], Balaji Lakshminarayanan [email protected]. DeepMind. Editor: Ryan P. Adams. Abstract. Normalizing flows provide a general mechanism for defining expressive probability distributions, only requiring the specification of a (usually simple) base distribution and a series of bijective transformations. There has been much recent work on normalizing flows, ranging from improving their expressive power to expanding their application. We believe the field has now matured and is in need of a unified perspective. In this review, we attempt to provide such a perspective by describing flows through the lens of probabilistic modeling and inference. We place special emphasis on the fundamental principles of flow design, and discuss foundational topics such as expressive power and computational trade-offs. We also broaden the conceptual framing of flows by relating them to more general probability transformations. Lastly, we summarize the use of flows for tasks such as generative modeling, approximate inference, and supervised learning. Keywords: normalizing flows, invertible neural networks, probabilistic modeling, probabilistic inference, generative models. 1. Introduction. The search for well-specified probabilistic models (models that correctly describe the processes that produce data) is one of the enduring ideals of the statistical sciences. Yet, in only the simplest of settings are we able to achieve this goal. A central need in all of statistics and machine learning is then to develop the tools and theories that allow ever-richer probabilistic descriptions to be made, and consequently, that make it possible to develop better-specified models.

Chapter 1 Introduction to Matrices

Chapter 1: Introduction to Matrices. Contents (final version): 1.0 Introduction; 1.1 Basics; 1.2 Matrix structures: Notation, Common matrix shapes and types, Matrix transpose and symmetry; 1.3 Multiplication: Vector-vector multiplication, Matrix-vector multiplication, Matrix-matrix multiplication, Matrix multiplication properties, Using matrix-vector operations in high-level computing languages, Invertibility; 1.4 Orthogonality; 1.5 Matrix determinant; 1.6 Eigenvalues: Properties of eigenvalues, Trace; 1.7 Appendix: Fields, Vector Spaces, Linear Transformations. © J. Lipor, January 5, 2021, 14:27 (final version). 1.0 Introduction. This chapter reviews vectors and matrices and basic properties like shape, orthogonality, determinant,

Fast and Accurate Camera Covariance Computation for Large 3D Reconstruction

Fast and Accurate Camera Covariance Computation for Large 3D Reconstruction. Michal Polic1, Wolfgang Förstner2, and Tomas Pajdla1 [0000−0003−3993−337X][0000−0003−1049−8140][0000−0001−6325−0072]. 1 CIIRC, Czech Technical University in Prague, Czech Republic, fmichal.polic,[email protected], www.ciirc.cvut.cz. 2 University of Bonn, Germany, [email protected], www.ipb.uni-bonn.de. Abstract. Estimating uncertainty of camera parameters computed in Structure from Motion (SfM) is an important tool for evaluating the quality of the reconstruction and guiding the reconstruction process. Yet, the quality of the estimated parameters of large reconstructions has been rarely evaluated due to the computational challenges. We present a new algorithm which employs the sparsity of the uncertainty propagation and speeds the computation up about ten times w.r.t. previous approaches. Our computation is accurate and does not use any approximations. We can compute uncertainties of thousands of cameras in tens of seconds on a standard PC. We also demonstrate that our approach can be effectively used for reconstructions of any size by applying it to smaller sub-reconstructions. Keywords: uncertainty, covariance propagation, Structure from Motion, 3D reconstruction. 1 Introduction. Three-dimensional reconstruction has a wide range of applications (e.g. virtual reality, robot navigation or self-driving cars), and therefore is an output of many algorithms such as Structure from Motion (SfM), Simultaneous localization and mapping (SLAM) or Multi-view Stereo (MVS). Recent work in SfM and SLAM has demonstrated that the geometry of a three-dimensional scene can be obtained from a large number of images [1],[14],[16].

Linear Algebraic Techniques for Weighted Spanning Tree Enumeration

LINEAR ALGEBRAIC TECHNIQUES FOR WEIGHTED SPANNING TREE ENUMERATION. STEVEN KLEE AND MATTHEW T. STAMPS. Abstract. The weighted spanning tree enumerator of a graph G with weighted edges is the sum of the products of edge weights over all the spanning trees in G. In the special case that all of the edge weights equal 1, the weighted spanning tree enumerator counts the number of spanning trees in G. The Weighted Matrix-Tree Theorem asserts that the weighted spanning tree enumerator can be calculated from the determinant of a reduced weighted Laplacian matrix of G. That determinant, however, is not always easy to compute. In this paper, we show how two well-known results from linear algebra, the Matrix Determinant Lemma and the method of Schur complements, can be used to elegantly compute the weighted spanning tree enumerator for several families of graphs. 1. Introduction. In this paper, we restrict our attention to finite simple graphs. We will assume each graph has a finite number of vertices and does not contain any loops or multiple edges. We will denote the vertex set and edge set of a graph G by V(G) and E(G), respectively. A function $\omega : V(G) \times V(G) \to \mathbb{R}$, whose values are denoted by $\omega_{i,j}$ for $v_i, v_j \in V(G)$, is an edge weighting on G if $\omega_{i,j} = \omega_{j,i}$ for all $v_i, v_j \in V(G)$ and if $\omega_{i,j} = 0$ for all $\{v_i, v_j\} \notin E(G)$. The weighted degree of a vertex $v_i \in V(G)$ is the value $\deg(v_i; \omega) = \sum_{v_j \in V(G)} \omega_{i,j} = \sum_{\{v_i, v_j\} \in E(G)} \omega_{i,j}$.

Exploring QR Factorization on GPU for Quantum Monte Carlo Simulation

Exploring QR factorization on GPU for Quantum Monte Carlo Simulation. Benjamin McDaniel, Ming Wong. August 7, 2015. Abstract. QMCPACK is open-source scientific software designed to perform Quantum Monte Carlo simulations, which are first principles methods for determining the properties of the electronic structure of atoms, molecules, and solids. One major objective is finding the ground state for a physical system. We will investigate possible alternatives to the existing method in QMCPACK such as QR factorization for evaluating single-particle updates to a system's electron configuration by improving the computational efficiency and numerical stability of the algorithms. 1 Introduction. Quantum Monte Carlo (QMC) simulations can be used to accurately describe physical systems (Ceperly/Chester 3081). These simulations use Slater-Jastrow trial wave functions to evaluate possible changes to the system, one particle at a time, for their potential effect on the overall energy of the system (Nukala/Kent 130). The Slater determinant factor of the trial wave function for the entire system of N electrons can be represented as

$$\Psi(x_1, x_2, \ldots, x_N) = \frac{1}{\sqrt{N!}} \begin{vmatrix} \chi_1(x_1) & \chi_2(x_1) & \cdots & \chi_N(x_1) \\ \chi_1(x_2) & \chi_2(x_2) & \cdots & \chi_N(x_2) \\ \vdots & \vdots & \ddots & \vdots \\ \chi_1(x_N) & \chi_2(x_N) & \cdots & \chi_N(x_N) \end{vmatrix}$$

where $\chi_{1,2,\ldots,N}$ represents the single-particle wave functions: $\chi(r_i) = \sum_{j=1}^{n} \phi_j c_j r_i$. A single particle's contribution to the overall energy of the system is therefore encoded in a single row of the above matrix, and the effect of a proposed single-particle position change on the overall energy of the system can be assessed by altering a single row of the matrix with the proposed change, and re-computing the determinant.
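The excerpt describes altering a single row of the matrix and re-computing the determinant. One standard shortcut, shown here only as a hedged sketch and not as the method used in QMCPACK or proposed in the paper, follows from the matrix determinant lemma: if row k of an invertible matrix A is replaced by a new row r, then det(A') / det(A) equals the dot product of r with column k of A⁻¹. The function names and the sample matrix are hypothetical.

```python
from fractions import Fraction


def inverse(matrix):
    """Exact inverse by Gauss-Jordan elimination; assumes the matrix is invertible."""
    n = len(matrix)
    aug = [
        [Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
        for i, row in enumerate(matrix)
    ]
    for col in range(n):
        pivot = next(r for r in range(col, n) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        scale = aug[col][col]
        aug[col] = [x / scale for x in aug[col]]
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def det(matrix):
    """Exact determinant by cofactor expansion, used here only as a cross-check."""
    if not matrix:
        return Fraction(1)
    total = Fraction(0)
    for j, entry in enumerate(matrix[0]):
        minor = [row[:j] + row[j + 1:] for row in matrix[1:]]
        total += (-1) ** j * entry * det(minor)
    return total


def determinant_ratio(inv, new_row, k):
    """det(A') / det(A) when row k of A is replaced by new_row, given inv = A^{-1}."""
    return sum(new_row[j] * inv[j][k] for j in range(len(new_row)))


a = [[2, 1, 0], [1, 3, 1], [0, 1, 4]]
proposed_row = [1, 0, 2]
row_index = 1

ratio = determinant_ratio(inverse(a), proposed_row, row_index)

# Cross-check against a full recomputation of both determinants.
a_updated = [list(row) for row in a]
a_updated[row_index] = proposed_row
recomputed_ratio = det(a_updated) / det(a)
```

Once the inverse is available, the ratio costs a single dot product of length N instead of a fresh determinant; keeping the inverse itself up to date after an accepted move is a separate step that this sketch does not cover.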

Ensemble Kalman Filter with Perturbed Observations in Weather Forecasting and Data Assimilation

Ensemble Kalman Filter with perturbed observations in weather forecasting and data assimilation. Yihua Yang, 28017913. Department of Mathematics and Statistics, University of Reading. April 10, 2020. Abstract. Data assimilation in Mathematics provides algorithms for widespread applications in various fields, especially in meteorology. It is of practical use for dealing with a large amount of information in several dimensions in a complex system that is hard to estimate. Weather forecasting is one of the applications, where the predictions of meteorological data are corrected given the observations. Data assimilation comprises numerous approaches. One specific sequential method is the Kalman Filter. Its core idea is to estimate unknown information from the new data that is measured and the prior data that is predicted. The main benefit of the Kalman Filter is that its prediction is more accurate than one based on single measurements. There are several improved variants of the Kalman Filter. In this project, the Ensemble Kalman Filter with perturbed observations is considered. It is achieved by Monte Carlo simulation. In this method, the ensemble is involved in the calculation instead of the state vectors. In addition, the measurement with perturbation is viewed as the suitable observation. Compared with the Linear Kalman Filter, these changes make it more advantageous in that applications are no longer restricted to linear systems and less time is taken when the data are calculated by computers. The greater flexibility and the lighter computational load allow this method to be used more frequently than other methods. This project seeks to develop the Ensemble Kalman Filter with perturbed observations gradually.

Efficient Belief Space Planning in High-Dimensional State Spaces By

Efficient Belief Space Planning in High-dimensional State Spaces by Exploiting Sparsity and Calculation Re-use. Dmitry Kopitkov. Research Thesis. Submitted in partial fulfillment of the requirements for the degree of Master of Science in Technion Autonomous Systems Program (TASP). Submitted to the Senate of the Technion – Israel Institute of Technology. Adar 5777, Haifa, March 2017. This research was carried out under the supervision of Assistant Prof. Vadim Indelman, in the Faculty of Aerospace. Acknowledgements. I would like to hugely thank my advisor Prof. Vadim Indelman for all his guidance, help, support and patience through the years of my Master's studies. My research skills got elevated significantly all thanks to his excellent schooling and high-standard demands. I would also like to thank the people from Technion Autonomous Systems Program, especially Sigalit Preger, for helping me get over the bureaucracy of the university and for being supportive through sometimes very uneasy moments during the last years. Finally, I thank all my friends for having my back and being there for me this entire time. Your help allowed me to keep standing on my legs and to continue and accomplish this research. The generous financial help of the Technion is gratefully acknowledged. Contents: List of Figures; List of Tables; Abstract; Abbreviations and Notations; 1 Introduction: 1.1 Related Work, 1.2 Contributions, 1.3 Organization; 2 Notations and Problem Formulation; 3 Approach: 3.1 BSP as Factor Graph

Doctoral Thesis Probabilistic Data Reconciliation in Material Flow

Doctoral Thesis. Probabilistic Data Reconciliation in Material Flow Analysis. Submitted in satisfaction of the requirements for the degree of Doctor of Science in Civil Engineering of the TU Wien, Faculty of Civil Engineering. Dissertation "Probabilistischer Datenausgleich in der Materialflussanalyse", carried out for the purpose of obtaining the academic degree of Doctor of Technical Science, submitted to the Technische Universität Wien, Faculty of Civil Engineering, by Dipl.-Ing. Oliver Cencic, Matr.Nr.: 09140006, Petronell-C., Austria. Supervisor: Univ.-Prof. Dipl.-Ing. Dr.techn. Helmut Rechberger, Institute for Water Quality and Resource Management, Technische Universität Wien, Austria. Supervisor: Univ.-Doz. Dipl.-Ing. Dr. Rudolf Frühwirth, Institute of High Energy Physics (HEPHY), Austrian Academy of Sciences, Austria. Assessor: Associate Prof. Reinout Heijungs, Department of Econometrics and Operations Research, Vrije Universiteit Amsterdam, The Netherlands. Assessor: Prof. Julian M. Allwood, Department of Engineering, University of Cambridge, United Kingdom. Vienna, February 2018. Acknowledgement. First of all, I would like to express my deepest gratitude to Dr. Rudolf Frühwirth for his patience and his unlimited support during the work on this thesis. I learned so much about statistics and programming in MATLAB through all the discussions we had. It was motivating, inspiring, and a great honor to work with him. I also want to thank Prof. Helmut Rechberger for supervising my work and giving me the freedom to work on mathematical methodologies deviating from the actual field of research at the department. I would also like to express my appreciation to Prof. Reinout Heijungs and Prof. Julian Allwood for their evaluation of my thesis. Special thanks to my colleagues Nada Dzubur, Oliver Schwab, David Laner and Manuel Hahn for giving me the chance to internally discuss uncertainty matters after many years of solitary work on this topic.

A New Algorithm for Improved Determinant Computation with a View Towards Resultants

A new algorithm for improved determinant computation with a view towards resultants, by Khalil Shivji. Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Honours Bachelor of Science in the Department of Mathematics, Faculty of Science. © Khalil Shivji 2019. SIMON FRASER UNIVERSITY, Fall 2019. Copyright in this work rests with the author. Please ensure that any reproduction or re-use is done in accordance with the relevant national copyright legislation. Approval. Name: Khalil Shivji. Degree: Honours Bachelor of Science (Mathematics). Title: A new algorithm for improved determinant computation with a view towards resultants. Supervisory Committee: Dr. Michael Monagan, Supervisor, Professor of Mathematics; Dr. Nathan Ilten, Course Instructor, Associate Professor of Mathematics. Date Approved: December 13, 2019. Abstract. Certain symbolic matrices can be highly structured, with the possibility of having a block triangular form. Correctly identifying the block triangular form of such a matrix can greatly increase the efficiency of computing its determinant. Current techniques to compute the block diagonal form of a matrix rely on existing algorithms from graph theory which do not take into consideration key properties of the matrix itself. We developed a simple algorithm that computes the block triangular form of a matrix using basic linear algebra and graph theory techniques. We designed and implemented an algorithm that uses the Matrix Determinant Lemma to exploit the nature of block triangular matrices. Using this new algorithm, the computation of any determinant that has a block triangular form can be expedited with negligible overhead. Keywords: Linear Algebra; Determinants; Matrix Determinant Lemma; Schwartz-Zippel Lemma; Algorithms; Dixon Resultants. Dedication. For my brothers, Kassim, Yaseen, and Hakeem.

The Sherman Morrison Iteration

The Sherman Morrison Iteration. Joseph T. Slagel. Thesis submitted to the Faculty of the Virginia Polytechnic Institute and State University in partial fulfillment of the requirements for the degree of Master of Science in Mathematics. Julianne Chung, Chair; Matthias Chung, Chair; Serkan Gugercin. May 6, 2015, Blacksburg, Virginia. Keywords: Sherman Morrison, regularized least squares problem, extremely underdetermined linear system. Copyright 2015, Joseph T. Slagel. Abstract. The Sherman Morrison iteration method is developed to solve regularized least squares problems. Notions of pivoting and splitting are deliberated on to make the method more robust. The Sherman Morrison iteration method is shown to be effective when dealing with an extremely underdetermined least squares problem. The performance of the Sherman Morrison iteration is compared to classic direct methods, as well as iterative methods, in a number of experiments. Specific Matlab implementation of the Sherman Morrison iteration is discussed, with Matlab codes for the method available in the appendix. Acknowledgments. I acknowledge Dr. Julianne Chung and Dr. Matthias Chung for their masterful patience and sage-like guidance during the composition of this thesis. I also acknowledge Dr. Serkan Gugercin for serving on my committee and being a transcendent teacher. Contents: 1 Introduction; 2 Preliminaries: 2.1 Ill-Posed Inverse Problems, 2.2 Least Squares Problems, 2.3 Tikhonov Regularization; 3 The Sherman Morrison Iteration: 3.1 Developing the General Case (3.1.1 Pivoting, 3.1.2 Splitting), 3.2 Application to Regularized Least Squares Problem, 3.3 Efficiencies