Wirespeed: Extending the AFF4 Forensic Container Format for Scalable Acquisition and Live Analysis

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

ZN1A-M4 M6DTFN3 Specifications

ganzsecurity.com 1080p & 5 MP IR IP Mini Domes w/ GXi Embedded Intelligence ZN1A-M4DTFN3 / M6DTFN3 Features: • 1080p & 5 Megapixel @ 30fps • VCA Technology Video Analytics Network Devices • 1/2.8” SONY STARVIS Exmor Sensor • Superior Low Light Performance • True Day / Night and WDR • SMART Bitrate Control & Region of Interest • 2.8mm Fixed Lens • 2 IR LEDs up to 59’ Range ganzsecurity.com ZN1A-M4/6DTFN3 1080p & 5MP IR IP Mini Domes w/ GXi Embedded Intelligence Specifications (Part 1) Model ZN1A-M4DTFN3 ZN1A-M6DTFN3 Image Sensor 1/2.8” SONY STARVIS Exmor 2.13MP CMOS 1/2.8” SONY STARVIS Exmor 5MP CMOS Effective Pixels 1920 x 1080 2592 x 1944 Scanning System Progressive scanning AGC Control Auto Iris Type Auto-Iris Minimum Illumination Color: 0.03 Lux (DSS on) / B/W : 0 Lux (IR on) Camera Lens 2.8mm, F2.0 Fixed Lens Angle of View Horizontal: Approx. 115° / Vertical Approx. 64° Shutter Speed Automatic / Manual: 1/30 ~ 1/32,000, Anti-Flicker, Slow Shutter (1/2, 1/3, 1/5, 1/6, 1/7.5, 1/10) Day/Night Performance True Day / Night (ICR) Wide Dynamic Range True WDR, 120dB S/N Ratio 50 dB Video Compression H.265, H.264 Baseline, Main, High profile, MJPEG (Motion JPEG) Multiple Profile 2592 x 1944 @30fps & 480 @30 fps 1080p @30fps & D1(704x480 or 704x576) @30fps Streaming Performance with H.264, H265 & MJPEG with H.264, H.265 & MJPEG Max Resolution 1920 x 1080 2592 x 1944 Video Bit Rate 100Kbps ~ 10Mbps Multi-rate for Preview and Recording Video Bit Rate Control Multi Streaming CBR/VBR @ H.264 (Controllable frame rate and Bandwidth) Digital Noise -

Abkürzungs-Liste ABKLEX

Abkürzungs-Liste ABKLEX (Informatik, Telekommunikation) W. Alex 1. Juli 2021 Karlsruhe Copyright W. Alex, Karlsruhe, 1994 – 2018. Die Liste darf unentgeltlich benutzt und weitergegeben werden. The list may be used or copied free of any charge. Original Point of Distribution: http://www.abklex.de/abklex/ An authorized Czechian version is published on: http://www.sochorek.cz/archiv/slovniky/abklex.htm Author’s Email address: [email protected] 2 Kapitel 1 Abkürzungen Gehen wir von 30 Zeichen aus, aus denen Abkürzungen gebildet werden, und nehmen wir eine größte Länge von 5 Zeichen an, so lassen sich 25.137.930 verschiedene Abkür- zungen bilden (Kombinationen mit Wiederholung und Berücksichtigung der Reihenfol- ge). Es folgt eine Auswahl von rund 16000 Abkürzungen aus den Bereichen Informatik und Telekommunikation. Die Abkürzungen werden hier durchgehend groß geschrieben, Akzente, Bindestriche und dergleichen wurden weggelassen. Einige Abkürzungen sind geschützte Namen; diese sind nicht gekennzeichnet. Die Liste beschreibt nur den Ge- brauch, sie legt nicht eine Definition fest. 100GE 100 GBit/s Ethernet 16CIF 16 times Common Intermediate Format (Picture Format) 16QAM 16-state Quadrature Amplitude Modulation 1GFC 1 Gigabaud Fiber Channel (2, 4, 8, 10, 20GFC) 1GL 1st Generation Language (Maschinencode) 1TBS One True Brace Style (C) 1TR6 (ISDN-Protokoll D-Kanal, national) 247 24/7: 24 hours per day, 7 days per week 2D 2-dimensional 2FA Zwei-Faktor-Authentifizierung 2GL 2nd Generation Language (Assembler) 2L8 Too Late (Slang) 2MS Strukturierte -

Ciena Acronyms Guide.Pdf

The Acronyms Guide edited by Erin Malone Chris Janson 1st edition Publisher Acknowledgement: This is the first edition of this book. Please notify us of any acronyms we may have missed by submitting them via the form at www.ciena.com/acronymsguide. We will be happy to include them in further editions of “The Acronyms Guide”. For additional information on Ciena’s Carrier Ethernet or Optical Communications Certification programs please contact [email protected]. Table of Contents Numerics ..........................1 A .......................................1 B .......................................2 C .......................................3 D .......................................5 E .......................................7 F........................................9 G .....................................10 H .....................................11 I .......................................12 J ......................................14 K .....................................14 L ......................................14 M ....................................15 N .....................................17 O.....................................18 P .....................................20 Q.....................................22 R .....................................22 S......................................23 T .....................................26 U .....................................28 V .....................................29 W ....................................29 X .....................................30 Z......................................30 -

" Who Controls the Vocabulary, Controls the Knowledge"

Acronyms from Future-Based Consultancy & Solutions "Translation" of some Business, Finance, ICDT acronyms (including several SAP ones), initialims, tech term oddities and techronyms, loaded words and buzzwords to ease the reading of courses, books, magazines and papers: see "anacronym", "ASS" and many others ... (third main version since 1997) ( www.fbc-e.com , updated & corrected twice a month. Release 02-10-2009) " Who controls the vocabulary , 6170+ controls the knowledge " George ORWELL in "1984" Instruction To ease your researches , we are inviting you to use the " search " function within the Menu " edit " Pour faciliter vos recherches, utilisez la fonction " rechercher " disponible dans le menu " Edition " Information Underligned names are identifying authors, editors and / or copyrighted applications ©, ®, ™ FBWPA Free Business White Page Available (www.fbc-e.com ) Acronym Rose salmon is related to acronyms and assimilated terms and concepts. IL / InLin Internet Lingo also called " PC talk" Intelligence Light green color is related to intelligence, business intelligence ( BI , CI ) FBC>s Yellow color is related to FBC>s concepts and methodologies (more on www.fbc-e.com ) Finance Deep blue color is related to Finance and Accounting ( FI ) Note: BOLD acronyms KM Deep green color is related to Knowledge Management ( KM ) and texts are "translated" HR & R Lemon green color is related to HR and recruitment in the list. Mobility Light blue color is related to mobile communication ( MoMo ) Security Red color is related to security and risks management ( RM ) Note : US spelling Virtual Pink color is related to virtual / virtuality ( VR ) & ampersand $$$ temporary files Feel free to copy and distribute this "computer-babble *.001 Hayes JT Fax translator" provided that it is distributed only in its 0 Day FTP server supposed to be moved within original and unmodified state with our name, address, the next 24 hours to another IP . -

Air Traffic Management Abbreviation Compendium

Air Traffic Management Abbreviation Compendium List of Aviation, Aerospace and Aeronautical Acronyms DLR-IB-FL-BS-2021-1 Institute of Air Traffic Management Abbreviation Compendium Flight Guidance Document properties Title Air Traffic Management Abbreviation Compendium Subject List of Aviation, Aerospace and Aeronautical Acronyms Institute Institute of Flight Guidance, Braunschweig, German Aerospace Center, Germany Authors Nikolai Rieck, Marco-Michael Temme IB-Number DLR-IB-FL-BS-2021-1 Date 2021-01-28 Version 1.0 Title: Air Traffic Management Abbreviation Compendium Date: 2021-01-28 Page: 2 Version: 1.0 Authors: N. Rieck & M.-M. Temme Institute of Air Traffic Management Abbreviation Compendium Flight Guidance Index of contents 2.1. Numbers and Punctuation Marks _______________________________________________________ 6 2.2. Letter - A ___________________________________________________________________________ 7 2.3. Letter - B ___________________________________________________________________________ 55 2.4. Letter - C __________________________________________________________________________ 64 2.5. Letter - D _________________________________________________________________________ 102 2.6. Letter - E __________________________________________________________________________ 128 2.7. Letter - F __________________________________________________________________________ 152 2.8. Letter - G _________________________________________________________________________ 170 2.9. Letter - H _________________________________________________________________________ -

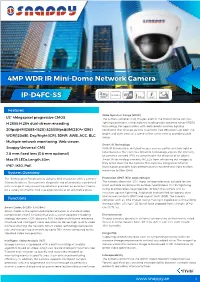

4MP WDR IR Mini-Dome Network Camera IP-D4FC-SS

4MP WDR IR Mini-Dome Network Camera 4Mp H.265+ IP-D4FC-SS 30m IR IP67 Vandal-proof Features Wide Dynamic Range (WDR) · 1/3” 4Megapixel progressive CMOS The camera achieves vivid images, even in the most intense contrast · H.265& H.264 dual-stream encoding lighting conditions, using industry-leading wide dynamic range (WDR) technology. For applications with both bright and low lighting · 20fps@4M(2688×1520) &25/30fps@3M(2304×1296) conditions that change quickly, true WDR (120 dB) optimizes both the bright and dark areas of a scene at the same time to provide usable · WDR(120dB), Day/Night(ICR), 3DNR, AWB, AGC, BLC video. · Multiple network monitoring: Web viewer, Smart IR Technology Snappy Universal CMS With IR illumination, detailed images can be captured in low light or · 2.8 mm fixed lens (3.6 mm optional) total darkness. The camera's Smart IR technology adjusts the intensity of camera's infrared LEDs to compensate the distance of an object. · Max IR LEDs Length 30m Smart IR technology prevents IR LEDs from whitening out images as they come closer to the camera. The camera's integrated infrared · IP67, IK10, PoE illumination provides high performance in extreme low-light environ- ments up to 30m (98ft). System Overview The IR Megapixel Fixed camera delivers 4MP resolution with a 2.8mm/ Protection (IP67, IK10, wide voltage) 3.6mm fixed lens. The camera's elegant blend of aesthetics combined The camera allows for ±25% input voltage tolerance, suitable for the with a range of easy mounting solutions provides an excellent choice most unstable conditions for outdoor applications. -

Download Book

® BOOKS FOR PROFESSIONALS BY PROFESSIONALS Akramullah Digital Video Concepts, Methods, and Metrics Digital Video Concepts, Methods, and Metrics: Quality, Compression, Performance, and Power Trade-off Analysis is a concise reference for professionals in a wide range of applications and vocations. It focuses on giving the reader mastery over the concepts, methods, and metrics of digital video coding, so that readers have sufficient understanding to choose and tune coding parameters for optimum results that would suit their particular needs for quality, compression, speed, and power. The practical aspects are many: Uploading video to the Internet is only the begin- ning of a trend where a consumer controls video quality and speed by trading off various other factors. Open source and proprietary applications such as video e-mail, private party content generation, editing and archiving, and cloud asset management would give further control to the end-user. What You’ll Learn: • Cost-benefit analysis of compression techniques • Video quality metrics evaluation • Performance and power optimization and measurement • Trade-off analysis among power, performance, and visual quality • Emerging uses and applications of video technologies ISBN 978-1-4302-6712-6 53999 Shelve in Graphics/Digital Photography User level: Beginning–Advanced 9781430 267126 For your convenience Apress has placed some of the front matter material after the index. Please use the Bookmarks and Contents at a Glance links to access them. Contents at a Glance About the Author ............................................................................ -

Zn1a-B4dmz52u / B6dmz52u

ganzsecurity.com 1080p & 5 MP IR IP Bullets w/ GXi Imbedded Intelligence ZN1A-B4DMZ52U / B6DMZ52U Features: Network Devices • 1080p & 5 Megapixel @ 30fps • Intelligent Video Analytics • 1/2.8” SONY STARVIS Exmor Sensor • Superior Low Light Performance • True Day / Night and WDR • ProSet Remote Zoom and Focus • 6~50mm P-Iris Lens • 6 IR LEDs up to 120’ Range ganzsecurity.com ZN1A-B4/6DMZ52U 1080p & 5MP IR IP Bullets w/ GXi Imbedded Intelligence Specifications (Part 1) Model ZN1A-B4DMZ52U ZN1A-B6DMZ52U Image Sensor 1/2.8” SONY STARVIS Exmor 2.13MP CMOS 1/2.8” SONY STARVIS Exmor 5MP CMOS Effective Pixels 1080p (1920 x 1080) 5MP (2592 x 1944) Scanning System Progressive scanning AGC Control Auto Iris Type P-Iris Minimum Illumination Color: 0.08 Lux (DSS on) / B/W : 0 Lux (IR on) Camera Lens 6mm ~ 50mm, F1.6 with ProSet Motorized Focus & Zoom Angle of View Horizontal: Approx. 40.8° (Wide) to 6.9° (Tele) Shutter Speed Automatic / Manual: 1/30 ~ 1/32,000, Anti-Flicker, Slow Shutter (1/2, 1/3, 1/5, 1/6, 1/7.5, 1/10) Day/Night Performance True Day / Night (ICR) Wide Dynamic Range True WDR, 120dB or above S/N Ratio 50 dB Video Compression H.265, H.264 Baseline, Main, High profile, MJPEG (Motion JPEG) Multiple Profile 1080p @30fps & D1(704x480 or 704x576) @30fps 2592 x 1944 @30fps & 480 @30 fps Streaming Performance with H.264, H265 & MJPEG with H.264, H.265 & MJPEG Max Resolution 1920 x 1080 2592 x 1944 Video Bit Rate 100Kbps ~ 10Mbps Multi-rate for Preview and Recording Bit Rate Control Multi Streaming CBR/VBR (Controllable frame rate and Bandwidth) -

IBM/Cisco Multiprotocol Routing: an Introduction and Implementation

Front cover IBM/Cisco Multiprotocol Routing: An Introduction and Implementation Learn about the IBM/Cisco multiprotocol products and solutions Understand how to implement FCIP, VSANs, and IVR Discover how to implement iSCSI Jon Tate Michael Engelbrecht Jacek Koman ibm.com/redbooks International Technical Support Organization IBM/Cisco Multiprotocol Routing: An Introduction and Implementation March 2009 SG24-7543-01 Note: Before using this information and the product it supports, read the information in “Notices” on page ix. Second Edition (March 2009) This edition applies to Version 4.1.n of the Cisco Fabric Manager and Device Manager, and NX-OS operating system. © Copyright International Business Machines Corporation 2009. All rights reserved. Note to U.S. Government Users Restricted Rights -- Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp. Contents Notices . ix Trademarks . x Preface . xi The team that wrote this book . xi Become a published author . xiii Comments welcome. xiv Summary of changes . xv December 2008, Second Edition . xv Chapter 1. SAN routing introduction . 1 1.1 SAN routing definitions . 3 1.1.1 Fibre Channel . 3 1.1.2 Fibre Channel switching . 4 1.1.3 Fibre Channel routing . 4 1.1.4 Tunneling . 4 1.1.5 Routers and gateways . 5 1.1.6 Fibre Channel routing between physical or virtual fabrics. 5 1.2 Routed gateway protocols. 5 1.2.1 FCIP . 5 1.2.2 iSCSI . 8 1.3 Routing issues. 10 1.3.1 Frame size . 10 1.3.2 TCP congestion control. 11 1.3.3 Round-trip delay . 11 1.3.4 Write acceleration . -

Cisco Prime Infrastructure 3.1 Reference Guide April 2016

Cisco Prime Infrastructure 3.1 Reference Guide April 2016 Americas Headquarters Cisco Systems, Inc. 170 West Tasman Drive San Jose, CA 95134-1706 USA http://www.cisco.com Tel: 408 526-4000 800 553-NETS (6387) Fax: 408 527-0883 Text Part Number: THE SPECIFICATIONS AND INFORMATION REGARDING THE PRODUCTS IN THIS MANUAL ARE SUBJECT TO CHANGE WITHOUT NOTICE. ALL STATEMENTS, INFORMATION, AND RECOMMENDATIONS IN THIS MANUAL ARE BELIEVED TO BE ACCURATE BUT ARE PRESENTED WITHOUT WARRANTY OF ANY KIND, EXPRESS OR IMPLIED. USERS MUST TAKE FULL RESPONSIBILITY FOR THEIR APPLICATION OF ANY PRODUCTS. THE SOFTWARE LICENSE AND LIMITED WARRANTY FOR THE ACCOMPANYING PRODUCT ARE SET FORTH IN THE INFORMATION PACKET THAT SHIPPED WITH THE PRODUCT AND ARE INCORPORATED HEREIN BY THIS REFERENCE. IF YOU ARE UNABLE TO LOCATE THE SOFTWARE LICENSE OR LIMITED WARRANTY, CONTACT YOUR CISCO REPRESENTATIVE FOR A COPY. The Cisco implementation of TCP header compression is an adaptation of a program developed by the University of California, Berkeley (UCB) as part of UCB’s public domain version of the UNIX operating system. All rights reserved. Copyright © 1981, Regents of the University of California. NOTWITHSTANDING ANY OTHER WARRANTY HEREIN, ALL DOCUMENT FILES AND SOFTWARE OF THESE SUPPLIERS ARE PROVIDED “AS IS” WITH ALL FAULTS. CISCO AND THE ABOVE-NAMED SUPPLIERS DISCLAIM ALL WARRANTIES, EXPRESSED OR IMPLIED, INCLUDING, WITHOUT LIMITATION, THOSE OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT OR ARISING FROM A COURSE OF DEALING, USAGE, OR TRADE PRACTICE. IN NO EVENT SHALL CISCO OR ITS SUPPLIERS BE LIABLE FOR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, OR INCIDENTAL DAMAGES, INCLUDING, WITHOUT LIMITATION, LOST PROFITS OR LOSS OR DAMAGE TO DATA ARISING OUT OF THE USE OR INABILITY TO USE THIS MANUAL, EVEN IF CISCO OR ITS SUPPLIERS HAVE BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGES. -

![[ENTRY ARTIFICIAL INTELLIGENCE] Authors: Oliver Knill: March 2000 Literature](https://docslib.b-cdn.net/cover/6487/entry-artificial-intelligence-authors-oliver-knill-march-2000-literature-11446487.webp)

[ENTRY ARTIFICIAL INTELLIGENCE] Authors: Oliver Knill: March 2000 Literature

ENTRY ARTIFICIAL INTELLIGENCE [ENTRY ARTIFICIAL INTELLIGENCE] Authors: Oliver Knill: March 2000 Literature: Peter Norvig, Paradigns of Artificial Intelligence Programming Daniel Juravsky and James Martin, Speech and Language Processing Adaptive Simulated Annealing [Adaptive Simulated Annealing] A language interface to a neural net simulator. artificial intelligence [artificial intelligence] (AI) is a field of computer science concerned with the concepts and methods of symbolic knowledge representation. AI attempts to model aspects of human thought on computers. Aspectrs of AI: • computer vision • language processing • pattern recognition • expert systems • problem solving • roboting • optical character recognition • artificial life • grammars • game theory Babelfish [Babelfish] Online translation system from Systran. Chomsky [Chomsky] Noam Chomsky is a pioneer in formal language theory. He is MIT Professor of Linguistics, Linguistic Theory, Syntax, Semantics and Philosophy of Language. Eliza [Eliza] One of the first programs to feature English output as well as input. It was developed by Joseph Weizenbaum at MIT. The paper appears in the January 1966 issue of the "Communications of the Association of Computing Machinery". Google [Google] A search engine emerging at the end of the 20'th century. It has AI features, allows not only to answer questions by pointing to relevant webpages but can also do simple tasks like doing arithmetic computations, convert units, read the news or find pictures with some content. GPS [GPS] General Problem Solver. A program developed in 1957 by Alan Newell and Herbert Simon. The aim was to write a single computer program which could solve any problem. One reason why GPS was destined to fail is now at the core of computer science. -

TAOS Glossary Release 9.2 Or Later

TAOS Glossary Part Number: 7820-0648-006 For software version 9.2 or later March 2002 Copyright © 1998-2002 Lucent Technologies Inc. All rights reserved. This material is protected by the copyright laws of the United States and other countries. It may not be reproduced, distributed, or altered in any fashion by any entity (either internal or external to Lucent Technologies), except in accordance with applicable agreements, contracts, or licensing, without the express written consent of Lucent Technologies. For permission to reproduce or distribute, please email your request to [email protected]. Notice Every effort was made to ensure that the information in this document was complete and accurate at the time of printing, but information is subject to change. European Community (EC) RTTE compliance Hereby, Lucent Technologies, declares that the equipment documented in this publication is in compliance with the essential require- ments and other relevant provisions of the Radio and Telecommunications Technical Equipment (RTTE) Directive 1999/5/EC. To view the official Declaration of Conformity certificate for this equipment, according to EN 45014, access the Lucent INS online documentation library at http://www.lucentdocs.com/ins. Safety, compliance, and warranty Information Before handling any Lucent Access Networks hardware product, read the Edge Access Safety and Compliance Guide included in your product package. See that guide also to determine how products comply with the electromagnetic interference (EMI) and network compatibility requirements of your country. See the warranty card included in your product package for the limited warranty that Lucent Technologies provides for its products. Security statement In rare instances, unauthorized individuals make connections to the telecommunications network through the use of access features.