VMware vSphere® Storage APIs – Array Integration (VAAI)

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

“Log” File in Stata

Updated July 2018

Creating a “Log” File in Stata

This set of notes describes how to create a log file within the computer program Stata. It assumes that you have set Stata up on your computer (see the “Getting Started with Stata” handout), and that you have read in the set of data that you want to analyze (see the “Reading in Stata Format (.dta) Data Files” handout). A log file records all your Stata commands and output in a given session, with the exception of graphs. It is usually wise to retain a copy of the work that you’ve done on a given project, to refer to while you are writing up your findings, or later on when you are revising a paper.

A log file is a separate file that has either a “.log” or “.smcl” extension. Saving the log as a .smcl file (“Stata Markup and Control Language file”) keeps the formatting from the Results window. It is recommended to save the log as a .log file. Although saving it as a .log file removes the formatting and saves the output in plain text format, it can be opened in most text editing programs. A .smcl file can only be opened in Stata.

To create a log file: You may create a log file by typing log using "filepath & filename" in the Stata Command box.

On a PC: If one wanted to save a log file (.log) for a set of analyses on hard disk C:, in the folder “LOGS”, one would type log using "C:\LOGS\analysis_log.log"

On a Mac: If one wanted to save a log file (.log) for a set of analyses in user1’s folder on the hard drive, in the folder “logs”, one would type log using "/Users/user1/logs/analysis_log.log"

If you would like to replace an existing log file with a newer version, add “replace” after the file name (note: PC file path): log using "C:\LOGS\analysis_log.log", replace

Alternatively, you can use the menu: click on File, then on Log, then on Begin. -

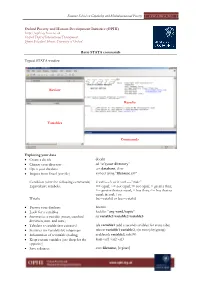

Basic STATA Commands

Summer School on Capability and Multidimensional Poverty, OPHI-HDCA, 2011. Oxford Poverty and Human Development Initiative (OPHI), http://ophi.qeh.ox.ac.uk, Oxford Dept of International Development, Queen Elizabeth House, University of Oxford

Basic STATA commands

Typical STATA window: Review, Results, Variables, Commands

Exploring your data

- Create a do file: doedit
- Change your directory: cd "c:\your directory"
- Open your database: use database, clear
- Import from Excel (csv file): insheet using "filename.csv"
- Condition (after the following commands): if var1==3 or if var1=="male"
- Equivalence symbols: == equal; ~= not equal; != not equal; > greater than; >= greater than or equal; < less than; <= less than or equal; & and; | or.
- Weight: [iw=weight] or [aw=weight]
- Browse your database: browse
- Look for a variable: lookfor "any word/topic"
- Summarize a variable (mean, standard deviation, min. and max.): su variable1 variable2 variable3
- Tabulate a variable (per category): tab variable1 (add a second variable for cross tabs)
- Statistics for variables by subgroups: tabstat variable1 variable2, s(n mean) by(group)
- Information of a variable (coding): codebook variable1, tab(99)
- Keep certain variables (use drop for the opposite): keep var1 var2 var3
- Save a dataset: save filename, [replace]

Creating Variables

- Generate a new variable (a number or a combination of other variables): gen new_variable = 1 or gen new_variable = variable1+ variable2
- Generate a new variable conditional: gen new_variable -

PC23 and PC43 Desktop Printer User Manual Document Change Record This Page Records Changes to This Document

PC23 | PC43 Desktop Printer: PC23d, PC43d, PC43t User Manual

Intermec by Honeywell, 6001 36th Ave. W., Everett, WA 98203, U.S.A. www.intermec.com

The information contained herein is provided solely for the purpose of allowing customers to operate and service Intermec-manufactured equipment and is not to be released, reproduced, or used for any other purpose without written permission of Intermec by Honeywell. Information and specifications contained in this document are subject to change without prior notice and do not represent a commitment on the part of Intermec by Honeywell. © 2012–2014 Intermec by Honeywell. All rights reserved.

The word Intermec, the Intermec logo, Fingerprint, Ready-to-Work, and SmartSystems are either trademarks or registered trademarks of Intermec by Honeywell. For patent information, please refer to www.hsmpats.com. Wi-Fi is a registered certification mark of the Wi-Fi Alliance. Microsoft, Windows, and the Windows logo are registered trademarks of Microsoft Corporation in the United States and/or other countries. Bluetooth is a trademark of Bluetooth SIG, Inc., U.S.A.

The products described herein comply with the requirements of the ENERGY STAR. As an ENERGY STAR partner, Intermec Technologies has determined that this product meets the ENERGY STAR guidelines for energy efficiency. For more information on the ENERGY STAR program, see www.energystar.gov. The ENERGY STAR does not represent EPA endorsement of any product or service.

Document Change Record: This page records changes to this document. The document was originally released as Revision 001.

Version Number: 005; Date: 12/2014; Description of Change: Revised to support MR7 firmware release. -

11 Creating New Variables Generate and Replace This Chapter Shows the Basics of Creating and Modifying Variables in Stata

11 Creating new variables: generate and replace

This chapter shows the basics of creating and modifying variables in Stata. We saw how to work with the Data Editor in [GSM] 6 Using the Data Editor. This chapter shows how we would do this from the Command window. The two primary commands used for this are

• generate for creating new variables. It has a minimum abbreviation of g.

• replace for replacing the values of an existing variable. It may not be abbreviated because it alters existing data and hence can be considered dangerous.

The most basic form for creating new variables is generate newvar = exp, where exp is any kind of expression. Of course, both generate and replace can be used with if and in qualifiers.

An expression is a formula made up of constants, existing variables, operators, and functions. Some examples of expressions (using variables from the auto dataset) would be 2 + price, weight^2 or sqrt(gear_ratio).

The operators defined in Stata are given in the table below:

Arithmetic: + addition; - subtraction; * multiplication; / division; ^ power; + string concatenation

Logical: ! not; | or; & and

Relational (numeric and string): > greater than; < less than; >= > or equal; <= < or equal; == equal; != not equal

Stata has many mathematical, statistical, string, date, time-series, and programming functions. See help functions for the basics, and see [D] functions for a complete list and full details of all the built-in functions.

You can use menus and dialogs to create new variables and modify existing variables by selecting menu items from the Data > Create or change data menu. This feature can be handy for finding functions quickly. -

Generating Define.Xml Using SAS® by Element-By-Element and Domain-By-Domian Mechanism Lina Qin, Beijing, China

PharmaSUG China 2015 - 30 Generating Define.xml Using SAS® By Element-by-Element And Domain-by-Domian Mechanism Lina Qin, Beijing, China ABSTRACT An element-by-element and domain-by-domain mechanism is introduced for generating define.xml using SAS®. Based on CDISC Define-XML Specification, each element in define.xml can be generated by applying a set of templates instead of writing a great deal of “put” statements. This will make programs more succinct and flexible. The define.xml file can be generated simply by combining, in proper order, all of the relevant elements. Moreover, as each element related to a certain domain can be separately created, it is possible to generate a single-domain define.xml by combining all relevant elements of that domain. This mechanism greatly facilitates generating and validating define.xml. Keywords: Define-XML, define.xml, CRT-DDS, SDTM, CDISC, SAS 1 INTRODUCTION Define.xml file is used to describe CDISC SDTM data sets for the purpose of submissions to FDA[1]. While the structure of define.xml is set by CDISC Define-XML Specification[1], its content is subject to specific clinical study. Many experienced SAS experts have explored different methods to generate define.xml using SAS[5][6][7][8][9]. This paper will introduce a new method to generate define.xml using SAS. The method has two features. First, each element in define.xml can be generated by applying a set of templates instead of writing a great deal of “put” statements. This will make programs more succinct and flexible. Second, the define.xml can be generated on a domain-by-domain basis, which means each domain can have its own separate define.xml file. -

Node and Edge Attributes

Cytoscape User Manual

Table of Contents

- Cytoscape User Manual
- Introduction
- Development
- License
- What’s New in 2.7
- Please Cite Cytoscape!
- Launching Cytoscape
- System requirements
- Getting Started
- Quick Tour of Cytoscape
- The Menus
- Network Management
- The -

Repair Your Computer in Windows Vista Or 7

Repair your computer in Windows Vista or 7

How to use System Recovery Options for repairing Windows Vista or 7 installations

When Windows is not able to start even in Safe Mode, then most probably there are some errors or missing files on your hard disk that prevent Windows Vista or 7 from starting correctly. Repair Your Computer is a set of tools for recovering Windows from such errors, and it is available on the Windows installation DVD. Windows 7 users can also create a System Repair Disc, or borrow one from friends, as long as the hardware architecture (32-bit/x86 or 64-bit/x64) matches.

Here are some troubleshooting steps to try before using Repair Your Computer: Last Known Good Configuration often solves booting and stability problems after installing software or drivers, or after messing with Registry entries. Always boot to Safe Mode at least once; this often repairs a corrupted file system and essential system files. If Windows is able to boot, use System File Checker and icacls.exe to repair corrupted system files. While Windows is running, use the free WhoCrashed tool to determine BSOD (Blue Screen Of Death) causes. Also, Reliability Monitor might reveal faulty drivers or software. System Restore can help revert to a state when your computer was running normally.

Windows 7 users might be able to launch Repair Your Computer or Startup Repair from a hidden system partition. The two options are described later in this article. -

Stacks, Queues September, 2012

COMP 250 Fall 2012, Exercises 2b: stacks, queues, September 2012

Questions

1. Consider the following sequence of stack operations: push(d), push(h), pop(), push(f), push(s), pop(), pop(), push(m). (a) Assuming the stack is initially empty, what is the sequence of popped values, and what is the final state of the stack? (Identify which end is the top of the stack.) (b) Suppose you were to replace the push and pop operations with enqueue and dequeue respectively. What would be the sequence of dequeued values, and what would be the final state of the queue? (Identify which end is the front of the queue.)

2. Use a stack to test for balanced parentheses when scanning the following expressions. Your solution should show the state of the stack each time it is modified. The "state of the stack" must indicate which is the top element. Only consider the parentheses [, ], (, ), {, }. Ignore the variables and operators. (a) [a+{b/(c-d)+e/(f+g)}-h] (b) [a{b+[c(d+e)-f]+g}

3. Suppose you have a stack in which the values 1 through 5 must be pushed on the stack in that order, but an item on the stack can be popped at any time. Give a sequence of push and pop operations such that the values are popped in the following order: (a) 2, 4, 5, 3, 1 (b) 1, 5, 4, 2, 3 (c) 1, 3, 5, 4, 2. It might not be possible in each case.

4. (a) Suppose you have three stacks s1, s2, s3 with starting configuration shown on the left, and finishing condition shown on the right. -
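The stack-based bracket test described in question 2 can be sketched in Python as follows. This is only an illustrative sketch, not the course's reference solution: the function name `is_balanced` and the use of a Python list as the stack (with the top at the end of the list) are assumptions made here.

```python
# Hypothetical helper illustrating a stack-based bracket check.
# The top of the stack is the END of the Python list.
PAIRS = {")": "(", "]": "[", "}": "{"}
OPENERS = set(PAIRS.values())


def is_balanced(expr):
    """Return True if every bracket in expr is closed in the right order."""
    stack = []
    for ch in expr:
        if ch in OPENERS:
            stack.append(ch)  # push an opening bracket
        elif ch in PAIRS:
            # A closer must match the most recently pushed opener.
            if not stack or stack.pop() != PAIRS[ch]:
                return False
        # Variables and operators are ignored.
    return not stack  # leftover openers mean the expression is unbalanced


if __name__ == "__main__":
    for expr in ("[a+{b/(c-d)+e/(f+g)}-h]", "[a{b+[c(d+e)-f]+g}"):
        print(expr, is_balanced(expr))
```

Printing `stack` after each push and pop inside the loop would show the state of the stack each time it is modified.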

Disk Management

IBM i Version 7.2 Systems management Disk management IBM

Note: Before using this information and the product it supports, read the information in “Notices” on page 141.

This edition applies to IBM i 7.2 (product number 5770-SS1) and to all subsequent releases and modifications until otherwise indicated in new editions. This version does not run on all reduced instruction set computer (RISC) models nor does it run on CISC models. This document may contain references to Licensed Internal Code. Licensed Internal Code is Machine Code and is licensed to you under the terms of the IBM License Agreement for Machine Code.

© Copyright International Business Machines Corporation 2004, 2013. US Government Users Restricted Rights – Use, duplication or disclosure restricted by GSA ADP Schedule Contract with IBM Corp.

Contents

- Disk management
- What's new for IBM i 7.2
- PDF file for Disk Management
- Getting started with disk management
- Components of disk storage
- Finding the logical address for a disk -

Ch 7 Using ATTRIB, SUBST, XCOPY, DOSKEY, and the Text Editor

Using ATTRIB, SUBST, XCOPY, DOSKEY, and the Text Editor (Ch 7)

Overview

- The purpose and function of file attributes will be explained.
- Utility commands and programs will be used to manipulate files and subdirectories to make tasks at the command line easier to do.
- This chapter will focus on the following commands and programs: ATTRIB, XCOPY, DOSKEY, EDIT.

File Attributes and the ATTRIB Command

- The root directory keeps track of information about every file on a disk.
- Each file in the directory has attributes.
- Attributes are represented by a single letter: S (System attribute), H (Hidden attribute), R (Read-only attribute), A (Archive attribute).
- The NTFS file system has other attributes. At the command line, the only attributes that can be changed with the ATTRIB command are S, H, R, and A.
- The ATTRIB command is used to manipulate file attributes.
- ATTRIB command syntax: ATTRIB [+R | -R] [+A | -A] [+S | -S] [+H | -H] [[drive:] [path] filename] [/S [/D]]
- Attributes most useful to set and unset: R (Read-only) and H (Hidden).
- The A attribute (archive bit) signals that a file has not been backed up.
- The XCOPY command can read the archive bit. -

Instruction Set Architectures: Talking to the Machine

Instruction Set Architectures: Talking to the Machine

The Next Two Weeks: Two Goals. Prepare you for 141L Project: Lab 2. Your own processor for Bitcoin mining! Understand what an ISA is and what it must do. Understand the design questions they raise. Think about what makes a good ISA vs a bad one. See an example of designing an ISA. Learn to “see past your code” to the ISA. Be able to look at a piece of C code and know what kinds of instructions it will produce. Understand (or begin to) the compiler’s role. Be able to roughly estimate the performance of code based on this understanding (we will refine this skill throughout the quarter).

In the beginning... The Difference Engine, ENIAC: physical configuration specifies the computation.

The Stored Program Computer: The program is data, i.e., it is a sequence of numbers that the machine interprets. A very elegant idea: the same technologies can store and manipulate programs and data. Programs can manipulate programs.

The Stored Program Computer: A very simple model (figure labels: Processor, IO, Memory, Data, Program). Several questions: How are programs represented? How do we get algorithms out of our brains and into that representation? How does the computer interpret a program?

Representing Programs: We need some basic building blocks; call them “instructions”. What does “execute a program” mean? What instructions do we need? What should instructions look like? What data will the instructions operate on? How complex should an instruction be? How do functions work?

Program Execution: This is the algorithm for a stored-program computer. The Program Counter (PC) is the key. Instruction Fetch Read instruction from program storage (mem[PC]) Instruction Decode Determine required actions and instruction size Operand Fetch Locate and obtain operand data Instruction Execute Compute result value Store Deposit results in storage for later use Result Compute Determine successor instruction (i.e. -
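The fetch, decode, execute, store, next-instruction loop described above can be illustrated with a toy stored-program machine in Python. Everything specific here is an assumption made for illustration: the four-instruction set (LOAD, ADD, STORE, HALT), the single accumulator, and the `(opcode, operand)` tuple encoding are invented and do not correspond to the ISA designed in the course.

```python
# Toy stored-program machine: program and data share one memory list.
# The instruction set and encoding are hypothetical.

def run(memory):
    """Execute the program in memory starting at address 0; return memory."""
    pc = 0    # program counter
    acc = 0   # single accumulator register
    while True:
        opcode, operand = memory[pc]   # instruction fetch: mem[PC]
        next_pc = pc + 1               # default successor instruction
        # Decode the opcode, fetch the operand, execute, store the result.
        if opcode == "LOAD":
            acc = memory[operand]
        elif opcode == "ADD":
            acc += memory[operand]
        elif opcode == "STORE":
            memory[operand] = acc
        elif opcode == "HALT":
            return memory
        else:
            raise ValueError(f"unknown opcode {opcode!r} at address {pc}")
        pc = next_pc


# Addresses 0-3 hold instructions, addresses 4-6 hold data.
program = [
    ("LOAD", 4),    # acc = mem[4]
    ("ADD", 5),     # acc += mem[5]
    ("STORE", 6),   # mem[6] = acc
    ("HALT", 0),
    2,              # mem[4]
    3,              # mem[5]
    0,              # mem[6], receives the sum
]

if __name__ == "__main__":
    print(run(list(program))[6])
```

Because instructions and data live in the same list, a STORE aimed at addresses 0 to 3 would overwrite the program itself, which is the sense in which programs can manipulate programs.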

Revised: Cloning System Disks

MARK 5 MEMO #84

MASSACHUSETTS INSTITUTE OF TECHNOLOGY, HAYSTACK OBSERVATORY, WESTFORD, MASSACHUSETTS 01886

11 August 2010

Telephone: 781-981-5400, Fax: 781-981-0590

To: Mark 5 Development Group

From: Dan L. Smythe

Subject: Cloning system disks

I describe here how I cloned a 250-GB system disk to a 128-GB solid state disk, by reducing the size of the original sda1 partition to fit on the new 128-GB disk, and copying the root partition (sda1) to the new disk. I am sure there are other, perhaps better, ways to do this; but this way worked for me. Note that in general it is not safe to use parted or dd on a mounted file system, so you will need to boot from a CD to make the clone.

Here is a general outline of the procedure:

1) Use fdisk or sfdisk to determine the size of the new disk.
2) Use parted to resize the partitions on the old disk to fit the new disk.
3) Use sfdisk to create identical partitions on the new disk.
4) Use dd to copy the root partition from the old disk to the new disk.
5) Use grub-install to install grub on the new disk.

It is a good idea to update the OS before making the clone. If the Field System is installed, you can use /etc/cron.weekly/apt-get or /usr2/fs/etc_cron.weekly_apt-get to download and install Debian security updates. Power down the system and install the new disk as SATA1, where the original OS disk is SATA0.