Impact Evaluation of Third Elementary Education Project in the Republic of the Philippines

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Check List Amphibians and Reptiles, Romblon Island

Check List 8(3): 443-462, 2012 © 2012 Check List and Authors ISSN 1809-127X (available at www.checklist.org.br) Journal of species lists and distribution. Lists of Species. Amphibians and Reptiles, Romblon Island Group, central Philippines: Comprehensive herpetofaunal inventory. Cameron D. Siler 1*, John C. Swab 1, Carl H. Oliveros 1, Arvin C. Diesmos 2, Leonardo Averia 3, Angel C. Alcala 3 and Rafe M. Brown 1 1 University of Kansas, Department of Ecology and Evolutionary Biology, Biodiversity Institute, Lawrence, KS 66045-7561, USA. 2 Philippine National Museum, Zoology Division, Herpetology Section. Rizal Park, Burgos St., Manila, Philippines. 3 Silliman University Angelo King Center for Research and Environmental Management, Dumaguete City, Negros Oriental, Philippines. * Corresponding author. E-mail: [email protected] Abstract: We present results from several recent herpetological surveys in the Romblon Island Group (RIG), Romblon Province, central Philippines. Together with a summary of historical museum records, our data document the occurrence of 55 species of amphibians and reptiles in this small island group. Until the present effort, and despite past studies, herpetological diversity of the RIG and their biogeographical affinities has remained poorly understood. We report on observations of evolutionarily distinct amphibian species, including conspicuous, previously known, endemics like the forest frogs Platymantis lawtoni and P. levigatus and two additional suspected undescribed species of Platymantis. Moderate levels of reptile endemism prevail on these islands, including taxa like the karst forest gecko species Gekko romblon and the newly discovered species G. coi. Although relatively small and less diverse than the surrounding landmasses, the islands of Romblon Province contain remarkable levels of endemism when considered as percentage of the total fauna or per unit landmass area. -

Binanog Dance

Gluck Classroom Fellow: Jemuel Jr. Barrera-Garcia Ph.D. Student in Critical Dance Studies: Designated Emphasis in Southeast Asian Studies Flying Without Wings: The Philippines’ Binanog Dance Binanog is an indigenous dance from the Philippines that features the movement of an eagle/hawk to the symbolic beating of bamboo and gong that synchronizes the pulsating movements of the feet and the hands of the lead and follow dancers. This specific type of Binanog dance comes from the Panay-Bukidnon indigenous community in Panay Island, Western Visayas, Philippines. The Panay Bukidnon, also known as Suludnon, Tumandok or Panayanon Sulud, is usually the identified indigenous group associated with the region and whose territory covers the mountains connecting the provinces of Iloilo, Capiz and Aklan in the island of Panay, one of the main Visayan islands of the Philippines. Aside from the Aetas living in Aklan and Capiz, this indigenous group is known to be the only ethnic Visayan language-speaking community in Western Visayas. [Photo caption: SMILE. A pair of Binanog dancers take a pose after a performance in a public space.] They were once associated culturally as speakers of the island’s languages, namely Kinaray-a, Akeanon and Hiligaynon, most speakers of which reside in the lowlands of Panay, and their geographical remoteness from Spanish conquest, the US invasion of the country, and the hairline exposure they had with the Japanese attacks resulted in a continuation of a pre-Hispanic culture and tradition. The Suludnon is believed to have descended from the migrating Indonesians coming from Mainland Asia. The women have developed a passion for beauty wearing jewelry made from Spanish coins strung together called biningkit, a waistband of coins called a wakus, and a headdress of coins known as a pundong. -

Understanding Health-Seeking Behavior for Inpatient Care in Antique and Guimaras

UNDERSTANDING HEALTH-SEEKING BEHAVIOR FOR INPATIENT CARE IN ANTIQUE AND GUIMARAS Presented at 6th Global Symposium on Health Systems Research Authors: Nuevo C, Co P, Alvior H, Samson C November 8-12, 2020 Contact details: [email protected] Introduction A highly decentralized healthcare system has caused great fragmentation in the financing and delivery of health services. • Provinces manage and finance public inpatient facilities • Municipalities / cities manage and finance public primary facilities This separation in management and governance of healthcare delivery created a disconnect in the continuum of care. Similarly, patients end up accessing care wherever they prefer, which are mostly inpatient facilities. The Philippine Health Insurance Corporation (PhilHealth) runs the state social health insurance and pays providers for services rendered for PhilHealth members. Data shows that payments for 2018 are also more towards inpatient care. 87.0% of PhilHealth benefit payouts for inpatient care Packages and payment systems for primary and inpatient care is separate, as PhilHealth can only pay local government units managing public facilities because of local autonomy. In effect, incentives also are fragmented Figure 1. Current Health Expenditures (CHE) Philippines, 2016 - 2018 Health care delivery and expenditures in the Philippines are heavily oriented towards inpatient care in the past years. In 2018: 43.9% of CHE spent on inpatient care The Universal Health Care (UHC) Act introduces a major reform in the health system: establishment of healthcare provider networks (HCPNs) within provincially-integrated local health systems. Antique and Guimaras in Western Visayas has committed to local health system integration and HCPN institutionalization as UHC Integration Sites. Figure 2. -

WHO IMC Antique Assessment(2).Pdf

Antique: A joint assessment was conducted between World Health Organisation and International Medical Corps. Please find enclosed the number of Dr Ric (Antique PHO) 09177176448 should you wish to respond to the gaps. Please do also coordinate with the health cluster so that we can ensure no duplication of work. Please see report below; Affected Municipalities: Libertad Pandan Tibiao Barbaza Laua-an Culasi Islands up north Electricity/Communications: • In the northern part of the province: Libertad, Pandan, Sebaste, Culasi- electricity and communications are intermittent. Health Facility Damage: (see attached document for complete list and names) • 3 BHS Totally Damaged in Libertad Municipality: Maramig BHS and Cubay BHS and Valderrama Municipality: Cansilayan BHS • 25 BHS Partially Damaged (still functional) • 8 RHU’s Partially Damaged (still functional) • 4 partially damaged hospitals- Pandan, Sebaste, Culasi, Barbaza (roofs, windows, doors) Cold Chain: • UNICEF conducted an assessment last week: Prelim results o No damage to vaccines due to the Typhoon (all RTC’s brought vaccines to hospitals or PHO- before or right after the Typhoon) o All RHC refrigerators for vaccines are home refrigerators and not vaccine refrigerators. o Need Vaccine carriers- especially in the municipalities in the north • Electricity out in many municipalities. No RHC has generators. Vaccines are currently being stored in areas with generators- Municipal Hall, Hospitals or private homes. • They would like generators for the RHU’s; no one is planning on helping with this at this time. • Routine EPI is on-going. No disruption Drugs/ Medical Supply Needs: (PHO will send a list of needs tonight) • ARI antibiotics, Cough Meds, ORS Morbidities/Surveillance: • ARI, Wounds are most common • Increase Diarrhea in Belison (due to destroyed water piping- see WASH Section Below). -

Ati, the Indigenous People of Panay: Their Journey, Ancestral Birthright and Loss

Hollins University Hollins Digital Commons Dance MFA Student Scholarship Dance 5-2020 Ati, the Indigenous People of Panay: Their Journey, Ancestral Birthright and Loss Annielille Gavino Follow this and additional works at: https://digitalcommons.hollins.edu/dancemfastudents Part of the South and Southeast Asian Languages and Societies Commons HOLLINS UNIVERSITY MASTER OF FINE ARTS DANCE Ati, the Indigenous People of Panay: Their Journey, Ancestral Birthright and Loss Monday, May 7, 2020 Annielille Gavino Low Residency Track- Two Summer ABSTRACT: This research investigates the Ati people, the indigenous people of Panay Island, Philippines: their origins, current economic status, ancestral rights, development issues, and challenges. This particular inquiry draws attention to the history of the Ati people (also known as Aetas, Aytas, Agtas, Batak, Mamanwa) as the first settlers of the islands. In contrast to this, a festive reenactment portraying Ati people dancing in the tourism sponsored Dinagyang and Ati-Atihan festival will be explored. This paper aims to compare the displacement of the Ati as marginalized minorities in contrast to how they are celebrated and portrayed in the dance festivals. Methodology My own field research was conducted through interviews of three Ati communities of Panay, two Dinagyang Festival choreographers, and a discussion with cultural anthropologist, Dr. Alicia P. Magos, and a visit to the Museo de Iloilo. Further data was collected through scholarly research, newspaper readings, articles, and video documentaries. Due to limited findings on the Ati, I also searched under the blanket term, Negrito (term used during colonial to post colonial times to describe Ati, Aeta, Agta, Ayta, Batak, Mamanwa) and the Austronesians and Austro-Melanesians (genetic ancestor of the Negrito indigenous group). -

Iloilo Antique Negros Occidental Capiz Aklan Guimaras

Region VI Shelter Activities by Municipality (Roxas): 3W map summary. Produced April 14, 2014. [Map: municipalities of Aklan, Capiz, Antique and northern Iloilo labelled with responding agencies, among them PRCS - IFRC, IOM Region VI, Don Bosco Network, CapizNED, Humanity First, PRAY, World Vision, CARE, Caritas Austria, HEKS - TFM, MSF-CH, Citizens’ Disaster Response Center, Handicap International, IRC, Solidar Suisse, Welt Hunger Hilfe, Concern Worldwide, Save the Children, ADRA, Ayala Land and DFID - HMS Illustrious.] This map depicts data gathered by the Shelter Cluster about agencies who are responding to Typhoon Yolanda. Any agency listed may have projects at different stages of completion (e.g. -

Onland Signatures of the Palawan Microcontinental Block and Philippine Mobile Belt Collision and Crustal Growth Process: a Review

Journal of Asian Earth Sciences 34 (2009) 610–623 Contents lists available at ScienceDirect Journal of Asian Earth Sciences journal homepage: www.elsevier.com/locate/jaes Onland signatures of the Palawan microcontinental block and Philippine mobile belt collision and crustal growth process: A review Graciano P. Yumul Jr. a,b,*, Carla B. Dimalanta a, Edanjarlo J. Marquez c, Karlo L. Queaño d,e a National Institute of Geological Sciences, College of Science, University of the Philippines, Diliman, Quezon City, Philippines b Department of Science and Technology, Bicutan, Taguig City, Metro Manila, Philippines c Department of Physical Science and Mathematics, University of the Philippines-Manila, Philippines d School of Earth and Materials Science and Engineering, Mapua Institute of Technology, Intramuros, Manila, Philippines e Lands Geological Survey Division, Mines and Geosciences Bureau, Department of Environment and Natural Resources, North Avenue, Quezon City, Philippines Article info Article history: Received 14 April 2008; Received in revised form 7 October 2008; Accepted 15 October 2008 Keywords: Collision Abstract The collision of the Palawan microcontinental block with the Philippine mobile belt had significantly influenced the geological evolution of the Philippines. Multiple collisions involving several fragments, through space and time, resulted into the collage of terranes of varying origin exposed in this part of central Philippines. Cusping of the overriding plate, volcanic arc gap, ophiolite emplacement, incipient back-arc rifting, island rotation and tilting, raised coastal terraces, metamorphism, intrusion of igneous rocks and steepened subducted slab as seen in focal mechanism solutions are some of the manifestations of this collision. A late Early Miocene to early Middle Miocene age (20–16 Ma) is proposed for the major collision between the Palawan indenter and the Philippine mobile belt. -

Iloilo Capiz Antique Aklan Negros Occidental

PHILIPPINES: Summary of Planned Cash Activities in REGION VI (Western Visayas) (as of 24 Feb 2014) [Map: planned cash activities by municipality in Aklan, Capiz, Antique, Iloilo and Negros Occidental; legend classes 0, 1 - 5, 6 - 10, 11 - 20, > 20; scale bar 0 3 6 12 Km] Data Source: OCHA 3W database, Humanitarian Cluster lead organizations, GADM Created 14 March 2014 Planned Cash Activities in Region VI by Province, Municipality and Type of Activity as of 24 February 2014 Cash Grant/ Cash Grant/ Cash for Work Province Municipality Cash Voucher Transfer TOTAL (CFW) (conditional) (unconditional) BALETE 0 5 0 0 5 IBAJAY 0 0 0 1 1 AKLAN LIBACAO 0 1 0 0 1 MALINAO 0 8 0 1 9 BARBAZA 0 0 0 1 1 CULASI 0 0 0 1 1 LAUA-AN 0 0 0 1 1 ANTIQUE SEBASTE 0 0 0 1 1 TIBIAO 0 0 0 1 1 not specified 0 1 0 0 1 CUARTERO 0 0 0 1 1 DAO 0 6 0 0 6 JAMINDAN -

309 BOOK REVIEWS Felix B. Regalado and Quintin B. Franco, History of Panay. Iloilo City, Philippines

BOOK REVIEWS 309 Felix B. Regalado and Quintin B. Franco, History of Panay. Iloilo City, Philippines: Central Philippine University, 1973, 593 pp., illustrations, oral authorities, appendices, bibliography, index. For the student of Philippine history there is no dearth of written source materials. On the contrary, there are substantial collections in libraries and archives in places such as Manila, Seville, Madrid, Valladolid, Mexico City, Rome, Chicago and Washington. Some materials have remained largely untouched. However, the authors of this book under review have only made use of what is left in the Filipiniana collections in the Philippine Islands after insects, war ravage and negligence have done their job of destruction. The writing of a survey text is a difficult art, especially the composition of a college textbook of regional history. It requires not only a sure grip of local details-to be set against the backdrop of more general, national developments-but needs also to place local events in a relevant, well-integrated, historical framework-a formidable task indeed. The title, History of Panay, suggests a comprehensive treatment of the historical evolution of prehistoric Panay, in the Western Visayan island group, up to its formation as a modern part of the Philippine Republic, but the book falls far short of expectation. For one thing, it is too loosely organized for smooth digestion, with an imbalance in material giving too much pre-eminence to recent war action. Furthermore, the book is interspersed with a number of careless, trifling assertions. Unfortunately, the authors also display a deplorable lack of command of the English language, which accounts for the rather numerous slips and errors in style, grammar and sentence patterns. -

Aklan Antique Capiz Guimaras Iloilo Masbate Negros Occidental Negros

[Map: grid from 121°50'E to 123°20'E and from 11°50'N to 12°0'N, with municipality and barangay labels for northern Panay and neighbouring islands, including Malay, Nabas, Buruanga, Libertad and Tangalan.] -

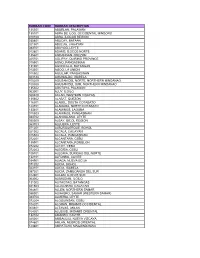

Rurban Code Rurban Description 135301 Aborlan

RURBAN CODE  RURBAN DESCRIPTION
135301  ABORLAN, PALAWAN
135101  ABRA DE ILOG, OCCIDENTAL MINDORO
010100  ABRA, ILOCOS REGION
030801  ABUCAY, BATAAN
021501  ABULUG, CAGAYAN
083701  ABUYOG, LEYTE
012801  ADAMS, ILOCOS NORTE
135601  AGDANGAN, QUEZON
025701  AGLIPAY, QUIRINO PROVINCE
015501  AGNO, PANGASINAN
131001  AGONCILLO, BATANGAS
013301  AGOO, LA UNION
015502  AGUILAR, PANGASINAN
023124  AGUINALDO, ISABELA
100200  AGUSAN DEL NORTE, NORTHERN MINDANAO
100300  AGUSAN DEL SUR, NORTHERN MINDANAO
135302  AGUTAYA, PALAWAN
063001  AJUY, ILOILO
060400  AKLAN, WESTERN VISAYAS
135602  ALABAT, QUEZON
116301  ALABEL, SOUTH COTABATO
124701  ALAMADA, NORTH COTABATO
133401  ALAMINOS, LAGUNA
015503  ALAMINOS, PANGASINAN
083702  ALANGALANG, LEYTE
050500  ALBAY, BICOL REGION
083703  ALBUERA, LEYTE
071201  ALBURQUERQUE, BOHOL
021502  ALCALA, CAGAYAN
015504  ALCALA, PANGASINAN
072201  ALCANTARA, CEBU
135901  ALCANTARA, ROMBLON
072202  ALCOY, CEBU
072203  ALEGRIA, CEBU
106701  ALEGRIA, SURIGAO DEL NORTE
132101  ALFONSO, CAVITE
034901  ALIAGA, NUEVA ECIJA
071202  ALICIA, BOHOL
023101  ALICIA, ISABELA
097301  ALICIA, ZAMBOANGA DEL SUR
012901  ALILEM, ILOCOS SUR
063002  ALIMODIAN, ILOILO
131002  ALITAGTAG, BATANGAS
021503  ALLACAPAN, CAGAYAN
084801  ALLEN, NORTHERN SAMAR
086001  ALMAGRO, SAMAR (WESTERN SAMAR)
083704  ALMERIA, LEYTE
072204  ALOGUINSAN, CEBU
104201  ALORAN, MISAMIS OCCIDENTAL
060401  ALTAVAS, AKLAN
104301  ALUBIJID, MISAMIS ORIENTAL
132102  AMADEO, CAVITE
025001  AMBAGUIO, NUEVA VIZCAYA
074601  AMLAN, NEGROS ORIENTAL
123801  AMPATUAN, MAGUINDANAO
021504  AMULUNG, CAGAYAN
086401  ANAHAWAN, SOUTHERN LEYTE -

Choice Analysis of Tourist Spots: the Case of Guimaras Province

Journal of the Eastern Asia Society for Transportation Studies, Vol.10, 2013 Choice Analysis of Tourist Spots: The Case of Guimaras Province Maria Katrina M. GANZON a, Alexis M. FILLONE b a Graduate Student, Civil Engineering Department, De La Salle University, Manila, 1004, Philippines b Associate Professor, Civil Engineering Department, De La Salle University, Manila, 1004, Philippines a E-mail: [email protected] b E-mail: [email protected] Abstract: This study determines the factors, especially the transport-related factors, affecting the choice of destination of Guimaras tourists. Logit models were developed to determine the utility of the agritourism tourist spots, and the variables affecting the preference of tourists. The inclusion of agritourism sites in the current tour package of Guimaras tourists was assessed using showcards through a stated preference survey. The socio-demographic and travel attributes of the tourists and characteristics of a destination are related to the choice made by the decision-makers in picking an agritourism site they would want to explore in Guimaras Province. Keywords: Tourism planning, Choice modeling, Stated preference survey 1. INTRODUCTION Tourism is considered one of the biggest industries in any country, for it caters not only to locals but more often to foreigners. Tourism brings a lot of money into a country; some of it goes to the national government as tax, while most of it benefits businesses that cater to tourists in the country. A thriving business can open opportunities for employment. On a global scale, the impact of the tourism industry in 2011 was 9% of global GDP, which had a value of US$6 trillion, and it created 255 million jobs.