Z:\TRANSCRIPTS\2018\0208DED NACIQI REV.Wpd

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications

-

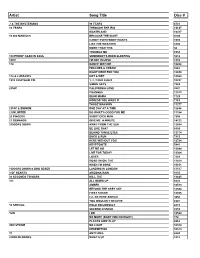

Copy UPDATED KAREOKE 2013

Artist Song Title Disc # ? & THE MYSTERIANS 96 TEARS 6781 10 YEARS THROUGH THE IRIS 13637 WASTELAND 13417 10,000 MANIACS BECAUSE THE NIGHT 9703 CANDY EVERYBODY WANTS 1693 LIKE THE WEATHER 6903 MORE THAN THIS 50 TROUBLE ME 6958 100 PROOF AGED IN SOUL SOMEBODY'S BEEN SLEEPING 5612 10CC I'M NOT IN LOVE 1910 112 DANCE WITH ME 10268 PEACHES & CREAM 9282 RIGHT HERE FOR YOU 12650 112 & LUDACRIS HOT & WET 12569 1910 FRUITGUM CO. 1, 2, 3 RED LIGHT 10237 SIMON SAYS 7083 2 PAC CALIFORNIA LOVE 3847 CHANGES 11513 DEAR MAMA 1729 HOW DO YOU WANT IT 7163 THUGZ MANSION 11277 2 PAC & EMINEM ONE DAY AT A TIME 12686 2 UNLIMITED DO WHAT'S GOOD FOR ME 11184 20 FINGERS SHORT DICK MAN 7505 21 DEMANDS GIVE ME A MINUTE 14122 3 DOORS DOWN AWAY FROM THE SUN 12664 BE LIKE THAT 8899 BEHIND THOSE EYES 13174 DUCK & RUN 7913 HERE WITHOUT YOU 12784 KRYPTONITE 5441 LET ME GO 13044 LIVE FOR TODAY 13364 LOSER 7609 ROAD I'M ON, THE 11419 WHEN I'M GONE 10651 3 DOORS DOWN & BOB SEGER LANDING IN LONDON 13517 3 OF HEARTS ARIZONA RAIN 9135 30 SECONDS TO MARS KILL, THE 13625 311 ALL MIXED UP 6641 AMBER 10513 BEYOND THE GREY SKY 12594 FIRST STRAW 12855 I'LL BE HERE AWHILE 9456 YOU WOULDN'T BELIEVE 8907 38 SPECIAL HOLD ON LOOSELY 2815 SECOND CHANCE 8559 3LW I DO 10524 NO MORE (BABY I'MA DO RIGHT) 178 PLAYAS GON' PLAY 8862 3RD STRIKE NO LIGHT 10310 REDEMPTION 10573 3T ANYTHING 6643 4 NON BLONDES WHAT'S UP 1412 4 P.M. -

Marygold Manor DJ List

Page 1 of 143 Marygold Manor 4974 songs, 12.9 days, 31.82 GB Name Artist Time Genre Take On Me A-ah 3:52 Pop (fast) Take On Me a-Ha 3:51 Rock Twenty Years Later Aaron Lines 4:46 Country Dancing Queen Abba 3:52 Disco Dancing Queen Abba 3:51 Disco Fernando ABBA 4:15 Rock/Pop Mamma Mia ABBA 3:29 Rock/Pop You Shook Me All Night Long AC/DC 3:30 Rock You Shook Me All Night Long AC/DC 3:30 Rock You Shook Me All Night Long AC/DC 3:31 Rock AC/DC Mix AC/DC 5:35 Dirty Deeds Done Dirt Cheap ACDC 3:51 Rock/Pop Thunderstruck ACDC 4:52 Rock Jailbreak ACDC 4:42 Rock/Pop New York Groove Ace Frehley 3:04 Rock/Pop All That She Wants (start @ :08) Ace Of Base 3:27 Dance (fast) Beautiful Life Ace Of Base 3:41 Dance (fast) The Sign Ace Of Base 3:09 Pop (fast) Wonderful Adam Ant 4:23 Rock Theme from Mission Impossible Adam Clayton/Larry Mull… 3:27 Soundtrack Ghost Town Adam Lambert 3:28 Pop (slow) Mad World Adam Lambert 3:04 Pop For Your Entertainment Adam Lambert 3:35 Dance (fast) Nirvana Adam Lambert 4:23 I Wanna Grow Old With You (edit) Adam Sandler 2:05 Pop (slow) I Wanna Grow Old With You (start @ 0:28) Adam Sandler 2:44 Pop (slow) Hello Adele 4:56 Pop Make You Feel My Love Adele 3:32 Pop (slow) Chasing Pavements Adele 3:34 Make You Feel My Love Adele 3:32 Pop Make You Feel My Love Adele 3:32 Pop Rolling in the Deep Adele 3:48 Blue-eyed soul Marygold Manor Page 2 of 143 Name Artist Time Genre Someone Like You Adele 4:45 Blue-eyed soul Rumour Has It Adele 3:44 Pop (fast) Sweet Emotion Aerosmith 5:09 Rock (slow) I Don't Want To Miss A Thing (Cold Start) -

A Doll's House

A DOLL'S HOUSE by Henrik Ibsen ACT I. ACT II. ACT III. DRAMATIS PERSONAE Torvald Helmer. Nora, his wife. Doctor Rank. Mrs Linde. Nils Krogstad. Helmer's three young children. Anne, their nurse. A Housemaid. A Porter. [The action takes place in Helmer's house.] A DOLL'S HOUSE ACT I [SCENE.--A room furnished comfortably and tastefully, but not extravagantly. At the back, a door to the right leads to the entrance-hall, another to the left leads to Helmer's study. Between the doors stands a piano. In the middle of the left-hand wall is a door, and beyond it a window. Near the window are a round table, arm-chairs and a small sofa. In the right-hand wall, at the farther end, another door; and on the same side, nearer the footlights, a stove, two easy chairs and a rocking-chair; between the stove and the door, a small table. Engravings on the walls; a cabinet with china and other small objects; a small book-case with well-bound books. The floors are carpeted, and a fire burns in the stove. It is winter. A bell rings in the hall; shortly afterwards the door is heard to open. Enter NORA, humming a tune and in high spirits. She is in outdoor dress and carries a number of parcels; these she lays on the table to the right. She leaves the outer door open after her, and through it is seen a PORTER who is carrying a Christmas Tree and a basket, which he gives to the MAID who has opened the door.] Nora. -

8123 Songs, 21 Days, 63.83 GB

Page 1 of 247 Music 8123 songs, 21 days, 63.83 GB Name Artist The A Team Ed Sheeran A-List (Radio Edit) XMIXR Sisqo feat. Waka Flocka Flame A.D.I.D.A.S. (Clean Edit) Killer Mike ft Big Boi Aaroma (Bonus Version) Pru About A Girl The Academy Is... About The Money (Radio Edit) XMIXR T.I. feat. Young Thug About The Money (Remix) (Radio Edit) XMIXR T.I. feat. Young Thug, Lil Wayne & Jeezy About Us [Pop Edit] Brooke Hogan ft. Paul Wall Absolute Zero (Radio Edit) XMIXR Stone Sour Absolutely (Story Of A Girl) Ninedays Absolution Calling (Radio Edit) XMIXR Incubus Acapella Karmin Acapella Kelis Acapella (Radio Edit) XMIXR Karmin Accidentally in Love Counting Crows According To You (Top 40 Edit) Orianthi Act Right (Promo Only Clean Edit) Yo Gotti Feat. Young Jeezy & YG Act Right (Radio Edit) XMIXR Yo Gotti ft Jeezy & YG Actin Crazy (Radio Edit) XMIXR Action Bronson Actin' Up (Clean) Wale & Meek Mill f./French Montana Actin' Up (Radio Edit) XMIXR Wale & Meek Mill ft French Montana Action Man Hafdís Huld Addicted Ace Young Addicted Enrique Iglsias Addicted Saving abel Addicted Simple Plan Addicted To Bass Puretone Addicted To Pain (Radio Edit) XMIXR Alter Bridge Addicted To You (Radio Edit) XMIXR Avicii Addiction Ryan Leslie Feat. Cassie & Fabolous Music Page 2 of 247 Name Artist Addresses (Radio Edit) XMIXR T.I. Adore You (Radio Edit) XMIXR Miley Cyrus Adorn Miguel Adorn Miguel Adorn (Radio Edit) XMIXR Miguel Adorn (Remix) Miguel f./Wiz Khalifa Adorn (Remix) (Radio Edit) XMIXR Miguel ft Wiz Khalifa Adrenaline (Radio Edit) XMIXR Shinedown Adrienne Calling, The Adult Swim (Radio Edit) XMIXR DJ Spinking feat. -

Kidtribe Fitness Par-Tay!

www.kidtribe.com KidTribe’s “Hooper-Size” CD “What Up? Warm Up!” / KidTribe Theme / Hoop Party / Hooper-Size Me / Hoop-Hop-Don’t-Stop / Hooper’s Delight / Peace Out! Warm-Up What Up? Warm Up – KidTribe / Start the Commotion – The Wiseguys / Put Your Hands Up In the Air (radio edit) – Danzel / Don’t Stop the Party, I Gotta Feeling, Let’s Get It Started – Black Eyed Peas / Right Here Right Now – Fatboy Slim / I Like to Move It – Chingy (Please get from “Madagascar” soundtrack, otherwise there’s a slight content issue – use of the word “sexy” in one part – easy to cheer over it though.) / Get the Party Started – Pink / Cooler Than Me – Mike Posner Free-Style / Skill Building (current hip-hop / pop music) – make sure ALL are CLEAN or RADIO EDITS All Grades (rated G / PG): o Anything & everything by Michael Jackson, Lady Gaga, Step Up Soundtracks o Gangnam Style – PSY o Party Rock Anthem – LMFAO o Chasing the Sun, Wish You Were Here – The Wanted o Scream & Shout (RADIO EDIT) – will.i.am o Don’t Stop the Party, I Gotta Feeling, Rock That Body, Boom Boom Pow (RADIO EDIT) – Black Eyed Peas o We Found Love, Where Have You Been, Only Girl, Disturbia, Umbrella, Pon de Replay, SOS – Rihanna o All Around the World, Beauty and a Beat – Justin Bieber o Firework, California Gurls, E.T. – Katy Perry o Good Time – Owl City o Moves Like Jagger, Payphone, One More Night – Maroon 5 o Good Feeling, Wild Ones, Where Them Girls At, Club Can’t Handle Me, Right Round, In the Ayer, Sugar – Flo Rida o Live While We’re Young – One Direction o Starships – Nicki Minaj -

Sean Paul Step up Give It up to Me Mp3 Download

Sean paul step up give it up to me mp3 download Sean Paul Give It Up To Me () - file type: mp3 - download - bitrate: kbps. Sean Paul Ft Keyshia Cole Give It Up To Me. Now Playing. When You Gonna (give It Up To Me) (ft. Keyshia Cole). Artist: Sean Paul. MB. Convert Youtube Sean Paul Step Up Give It Up To Me to MP3 instantly. Free song download Sean Paul Give It Up To Me Feat Keyshia Cole Mp3. To start this download Keyshia Cole) (Disney Version for the film Step Up).mp3. 3gp & mp4. List download link Lagu MP3 SEAN PAUL FT KEYSHIA COLE GIVE IT UP TO ME ( Sean Paul Give It Up To Me Step Up Movie Chan. Вы можете бесплатно скачать mp3 Sean Paul Feat. Keyshia Cole – Give It Up To Me в высоком качестве kbit используйте кнопку скачать mp3. Free Sean Paul Give It Up To Me Feat Keyshia Cole Disney Version For The Film Step 3. Play & Download Size MB ~ ~ kbps. Download and Convert sean paul give it up to me to MP3 or MP4 for free! Paul - Give It Up To Me (Feat. Keyshia Cole) (Disney Version for the film Step Up). Sean Paul ft Keyshia Cole "Give It Up To Me" Remade by MD Basseliner on Reason Genre: Instrumental Hip Hop, MD Basseliner. times, 0 Play. WMG Give It Up To Me (Disney Version) Feat. Keyshia Cole Subscribe to Sean Paul's Channel Here. Buy Give It Up To Me: Read 2 Digital Music Reviews - На этой странице вы можете слушать Sean Paul Give It Up To Me Feat Keyshia Cole и скачивать бесплатно в формате mp3. -

The Derailment of Feminism: a Qualitative Study of Girl Empowerment and the Popular Music Artist

THE DERAILMENT OF FEMINISM: A QUALITATIVE STUDY OF GIRL EMPOWERMENT AND THE POPULAR MUSIC ARTIST A Thesis by Jodie Christine Simon Master of Arts, Wichita State University, 2010 Bachelor of Arts, Wichita State University, 2006 Submitted to the Department of Liberal Studies and the faculty of the Graduate School of Wichita State University in partial fulfillment of the requirements for the degree of Master of Arts July 2012 © Copyright 2012 by Jodie Christine Simon All Rights Reserved THE DERAILMENT OF FEMINISM: A QUALITATIVE STUDY OF GIRL EMPOWERMENT AND THE POPULAR MUSIC ARTIST The following faculty members have examined the final copy of this thesis for form and content, and recommend that it be accepted in partial fulfillment of the requirement for the degree of Master of Arts with a major in Liberal Studies. Jodie Hertzog, Committee Chair Jeff Jarman, Committee Member Chuck Koeber, Committee Member DEDICATION To my husband, my mother, and my children ACKNOWLEDGMENTS I would like to thank my adviser, Dr. Jodie Hertzog, for her patient and insightful advice and support. A mentor in every sense of the word, Jodie Hertzog embodies the very nature of adviser; her counsel was very much appreciated through the course of my study. ABSTRACT “Girl Power!” is a message that parents raising young women in today’s media-saturated society should be able to turn to with a modicum of relief from the relentlessly harmful messages normally found within popular music. But what happens when we turn a critical eye toward the messages cloaked within this supposedly feminist missive? 
A close examination of popular music associated with girl empowerment reveals that many of the messages found within these lyrics are frighteningly just as damaging as the misogynistic, violent, and explicitly sexual ones found in the usual fare of top 100 Hits. -

Karaoke Catalog Updated On: 11/01/2019 Sing Online on in English Karaoke Songs

Karaoke catalog Updated on: 11/01/2019 Sing online on www.karafun.com In English Karaoke Songs 'Til Tuesday What Can I Say After I Say I'm Sorry The Old Lamplighter Voices Carry When You're Smiling (The Whole World Smiles With Someday You'll Want Me To Want You (H?D) Planet Earth 1930s Standards That Old Black Magic (Woman Voice) Blackout Heartaches That Old Black Magic (Man Voice) Other Side Cheek to Cheek I Know Why (And So Do You) DUET 10 Years My Romance Aren't You Glad You're You Through The Iris It's Time To Say Aloha (I've Got A Gal In) Kalamazoo 10,000 Maniacs We Gather Together No Love No Nothin' Because The Night Kumbaya Personality 10CC The Last Time I Saw Paris Sunday, Monday Or Always Dreadlock Holiday All The Things You Are This Heart Of Mine I'm Not In Love Smoke Gets In Your Eyes Mister Meadowlark The Things We Do For Love Begin The Beguine 1950s Standards Rubber Bullets I Love A Parade Get Me To The Church On Time Life Is A Minestrone I Love A Parade (short version) Fly Me To The Moon 112 I'm Gonna Sit Right Down And Write Myself A Letter It's Beginning To Look A Lot Like Christmas Cupid Body And Soul Crawdad Song Peaches And Cream Man On The Flying Trapeze Christmas In Killarney 12 Gauge Pennies From Heaven That's Amore Dunkie Butt When My Ship Comes In My Own True Love (Tara's Theme) 12 Stones Yes Sir, That's My Baby Organ Grinder's Swing Far Away About A Quarter To Nine Lullaby Of Birdland Crash Did You Ever See A Dream Walking? Rags To Riches 1800s Standards I Thought About You Something's Gotta Give Home Sweet Home -

90'S & 2K DANCE MUSIC ARTIST TITLE

90's & 2K DANCE MUSIC ARTIST TITLE 02 Maroon 5 ft. Christina Aguilera - Moves Like Jagger Bob Sinclaire - Rock This Party Bob Sinclaire - Rock This Party We Speak no Americano - Yolanda Be Cool & Dcup We Speak no Americano - Yolanda Be Cool & Dcup Dynamite - Taio Cruz Dynamite - Taio Cruz Enrique Iglesias – Tonight (Feat. Ludacris) Enrique Iglesias – Tonight (Feat. Ludacris) Black Eyed Peas - I Gotta Feeling(1) Black Eyed Peas - I Gotta Feeling(1) Alright - Machel Montano ft Pitbull Alright - Machel Montano ft Pitbull Usher ft. Will.I.Am.- OMG Usher ft. Will.I.Am.- OMG Sean Kingston - Fire Burning The Dancefloor - HotNewHipHop.com Sean Kingston - Fire Burning The Dancefloor - HotNewHipHop.com Rihanna - Please Don't Stop The Music Rihanna - Please Don't Stop The Music Flo-Rida ft. T-Pain - Get Low Flo-Rida ft. T-Pain - Get Low David Guetta ft. Akon - Sexy Bitch David Guetta ft. Akon - Sexy Bitch Chris Brown - Forever Chris Brown - Forever Beyonce - Single Ladies (Put a Ring on it) Beyonce - Single Ladies (Put a Ring on it) Pharrell Williams - Come Get It Bae ft. Miley Cyrus (Audio) Pharrell Williams - Come Get It Bae ft. Miley Cyrus Paloma Faith - Only Love Can Hurt Like This (Official Video) (Audio) Ariana Grande - Break Free feat. Zedd (Audio) Paloma Faith - Only Love Can Hurt Like This (Official Video) will.i.am, Cody Wise - It's My Birthday 27 Benny Benassi ft. Gary Go - Cinema Robin Thicke - Blurred Lines (Remix) 30 Beyonce - Best Thing I Never Had Robin Thicke feat. Pharrel - Blurred Lines (Armando Silvestre Remix) 39 Elena - Disco Romancing Black Eyed Peas Time of my Life New full version high quality LMFAO - Sexy and I Know It 256kbps (Single) feel this moment - pitbull ft. -

Songs by Artist

Sound Master Entertianment Songs by Artist smedenver.com Title Title Title .38 Special 2Pac 4 Him Caught Up In You California Love (Original Version) For Future Generations Hold On Loosely Changes 4 Non Blondes If I'd Been The One Dear Mama What's Up Rockin' Onto The Night Thugz Mansion 4 P.M. Second Chance Until The End Of Time Lay Down Your Love Wild Eyed Southern Boys 2Pac & Eminem Sukiyaki 10 Years One Day At A Time 4 Runner Beautiful 2Pac & Notorious B.I.G. Cain's Blood Through The Iris Runnin' Ripples 100 Proof Aged In Soul 3 Doors Down That Was Him (This Is Now) Somebody's Been Sleeping Away From The Sun 4 Seasons 10000 Maniacs Be Like That Rag Doll Because The Night Citizen Soldier 42nd Street Candy Everybody Wants Duck & Run 42nd Street More Than This Here Without You Lullaby Of Broadway These Are Days It's Not My Time We're In The Money Trouble Me Kryptonite 5 Stairsteps 10CC Landing In London Ooh Child Let Me Be Myself I'm Not In Love 50 Cent We Do For Love Let Me Go 21 Questions 112 Loser Disco Inferno Come See Me Road I'm On When I'm Gone In Da Club Dance With Me P.I.M.P. It's Over Now When You're Young 3 Of Hearts Wanksta Only You What Up Gangsta Arizona Rain Peaches & Cream Window Shopper Love Is Enough Right Here For You 50 Cent & Eminem 112 & Ludacris 30 Seconds To Mars Patiently Waiting Kill Hot & Wet 50 Cent & Nate Dogg 112 & Super Cat 311 21 Questions All Mixed Up Na Na Na 50 Cent & Olivia 12 Gauge Amber Beyond The Grey Sky Best Friend Dunkie Butt 5th Dimension 12 Stones Creatures (For A While) Down Aquarius (Let The Sun Shine In) Far Away First Straw AquariusLet The Sun Shine In 1910 Fruitgum Co. -

Swarthmore Folk Alumni Songbook 2019

Swarthmore College ALUMNI SONGBOOK 2019 Edition Swarthmore College ALUMNI SONGBOOK Being a nostalgic collection of songs designed to elicit joyful group singing whenever two or three are gathered together on the lawns or in the halls of Alma Mater. Nota Bene June, 1999: The 2014 edition celebrated the College’s Our Folk Festival Group, the folk who keep sesquicentennial. It also honored the life and the computer lines hot with their neverending legacy of Pete Seeger with 21 of his songs, plus conversation on the folkfestival listserv, the ones notes about his musical legacy. The total number who have staged Folk Things the last two Alumni of songs increased to 148. Weekends, decided that this year we’d like to In 2015, we observed several anniversaries. have some song books to facilitate and energize In honor of the 125th anniversary of the birth of singing. Lead Belly and the 50th anniversary of the Selma- The selection here is based on song sheets to-Montgomery march, Lead Belly’s “Bourgeois which Willa Freeman Grunes created for the War Blues” was added, as well as a new section of 11 Years Reunion in 1992 with additional selections Civil Rights songs suggested by three alumni. from the other participants in the listserv. Willa Freeman Grunes ’47 helped us celebrate There are quite a few songs here, but many the 70th anniversary of the first Swarthmore more could have been included. College Intercollegiate Folk Festival (and the We wish to say up front, that this book is 90th anniversary of her birth!) by telling us about intended for the use of Swarthmore College the origins of the Festivals and about her role Alumni on their Alumni Weekend and is neither in booking the first two featured folk singers, for sale nor available to the general public. -

Women in Reality: a Rhetorical Analysis of Three of Henrik Ibsen’S Plays in Order to Determine the Most Prevalent Feminist Themes

WOMEN IN REALITY: A RHETORICAL ANALYSIS OF THREE OF HENRIK IBSEN’S PLAYS IN ORDER TO DETERMINE THE MOST PREVALENT FEMINIST THEMES A Thesis by Lesa M. Bradford MA, Wichita State University, 2007 BS, Northwestern Oklahoma State University, 1992 Submitted to the department of Communication and the faculty of the Graduate School of Wichita State University in partial fulfillment of the requirements for the degree of Master of Arts May 2007 © Copyright 2007 by Lesa M. Bradford All Rights Reserved WOMEN IN REALITY: A RHETORICAL ANALYSIS OF THREE OF HENRIK IBSEN’S PLAYS IN ORDER TO DETERMINE THE MOST PREVALENT FEMINIST THEMES. I have examined the final copy of this Thesis for form and content and recommend that it be accepted in partial fulfillment of the requirement for the degree of Master of Arts in Communication, with a major in Theatre and Drama Steve Peters, Committee Chair We have read this Thesis and recommend its acceptance: Betty Monroe, Committee Member Royce Smith, Committee Member iii ACKNOWLEDGEMENTS I would like to thank my family for all the support and love that they have given me throughout this whole process. They have been selfless and I have put them through quite an ordeal trying to accomplish my dreams. I love you all dearly. I would also like to thank my two dearest friends who encouraged and helped motivate me when things got tough and life threw obstacles in my way. I couldn’t get through it all without your support. Lastly, I would like to thank the faculty, staff, and students at WSU who made my time there so cherished and wonderful.