Inglorious LSTMs: Generating Tarantino Movies with Neural Networks

Recommended publications

Appalling! Terrifying! Wonderful! Blaxploitation and the Cinematic Image of the South

Antoni Górny Appalling! Terrifying! Wonderful! Blaxploitation and the Cinematic Image of the South Abstract: The so-called blaxploitation genre – a brand of 1970s film-making designed to engage young Black urban viewers – has become synonymous with channeling the political energy of Black Power into larger-than-life Black characters beating “the [White] Man” in real-life urban settings. In spite of their urban focus, however, blaxploitation films repeatedly referenced an idea of the South whose origins lie in antebellum abolitionist propaganda. Developed across the history of American film, this idea became entangled in the post-war era with the Civil Rights struggle by way of the “race problem” film, which identified the South as “racist country,” the privileged site of “racial” injustice as social pathology. Recently revived in the widely acclaimed works of Quentin Tarantino (Django Unchained) and Steve McQueen (12 Years a Slave), the two modes of depicting the South put forth in blaxploitation and the “race problem” film continue to hold sway to this day. Yet, while the latter remains indelibly linked, even in this revised perspective, to the abolitionist vision of emancipation as the result of a struggle between idealized, plaintive Blacks and pathological, racist Whites, blaxploitation’s troping of the South as the fulfillment of grotesque White “racial” fantasies offers a more powerful and transformative means of addressing America’s “race problem.” Keywords: blaxploitation, American film, race and racism, slavery, abolitionism The year 2013 was a momentous one for “racial” imagery in Hollywood films. Around the turn of the year, Quentin Tarantino released Django Unchained, a sardonic action-film fantasy about an African slave winning back freedom – and his wife – from the hands of White slave-owners in the antebellum Deep South.

“No Reason to Be Seen”: Cinema, Exploitation, and the Political

“No Reason to Be Seen”: Cinema, Exploitation, and the Political by Gordon Sullivan B.A., University of Central Florida, 2004 M.A., North Carolina State University, 2007 Submitted to the Graduate Faculty of The Kenneth P. Dietrich School of Arts and Sciences in partial fulfillment of the requirements for the degree of Doctor of Philosophy University of Pittsburgh 2017 UNIVERSITY OF PITTSBURGH THE KENNETH P. DIETRICH SCHOOL OF ARTS AND SCIENCES This dissertation was presented by Gordon Sullivan It was defended on October 20, 2017 and approved by Marcia Landy, Distinguished Professor, Department of English Jennifer Waldron, Associate Professor, Department of English Daniel Morgan, Associate Professor, Department of Cinema and Media Studies, University of Chicago Dissertation Advisor: Adam Lowenstein, Professor, Department of English ii Copyright © by Gordon Sullivan 2017 iii “NO REASON TO BE SEEN”: CINEMA, EXPLOITATION, AND THE POLITICAL Gordon Sullivan, PhD University of Pittsburgh, 2017 This dissertation argues that we can best understand exploitation films as a mode of political cinema. Following the work of Peter Brooks on melodrama, the exploitation film is a mode concerned with spectacular violence and its relationship to the political, as defined by French philosopher Jacques Rancière. For Rancière, the political is an “intervention into the visible and sayable,” where members of a community who are otherwise uncounted come to be seen as part of the community through a “redistribution of the sensible.” This aesthetic rupture allows the demands of the formerly-invisible to be seen and considered. We can see this operation at work in the exploitation film, and by investigating a series of exploitation auteurs, we can augment our understanding of what Rancière means by the political. -

Birth, Django, and the Violence of Racial Redemption

religions Article Rescue US: Birth, Django, and the Violence of Racial Redemption Joseph Winters Religious Studies, Duke University, Durham, NC 27708, USA; [email protected] Received: 19 December 2017; Accepted: 8 January 2018; Published: 12 January 2018 Abstract: In this article, I show how the relationship between race, violence, and redemption is articulated and visualized through film. By juxtaposing DW Griffith’s The Birth of a Nation and Quentin Tarantino’s Django Unchained, I contend that the latter inverts the logic of the former. While Birth sacrifices black bodies and explains away anti-black violence for the sake of restoring white sovereignty (or rescuing the nation from threatening forms of blackness), Django adopts a rescue narrative in order to show the excessive violence that structured slavery and the emergence of the nation-state. As an immanent break within the rescue narrative, Tarantino’s film works to “rescue” images and sounds of anguish from forgetful versions of history. Keywords: race; redemption; violence; cinema; Tarantino; Griffith; Birth of a Nation; Django Unchained Race, violence, and the grammar of redemption form an all too familiar constellation, especially in the United States. Through various narratives and strategies, we are disciplined to imagine the future as a state of perpetual advancement that promises to transcend legacies of anti-black violence and cruelty. Similarly, we are told that certain figures or events (such as the election of Barack Obama) compensate for, and offset, the black suffering that -

Race & Gender in American Film (Special Topics in Film)

RACE & GENDER IN AMERICAN FILM (SPECIAL TOPICS IN FILM) Fall 2020 Synchronous Remote Course Mondays 6:00pm-9:00pm (Eastern Time Zone) Course Numbers: 21:014:255:02/21:350:363:01 Rutgers University-Newark Professor: Dr. Lyra D. Monteiro Email: [email protected] Google Hangouts: [email protected] Video Office Hours: Tuesdays, 4:00-5:00pm (Eastern Time Zone), Wednesdays 5:30-6:30pm (Eastern Time Zone), and by appointment COURSE DESCRIPTION This summer’s uprisings were sparked by a number of widely circulating videos in which a white man or woman kills (or in some cases, nearly kills) a Black man or woman. We will not be watching these horrific videos in this course. What we will be doing is studying the ways in which movies that may seem like frivolous fun are actually part of the story as to how these events came to occur. Movies teach every one of us how to “be” the race and gender assigned to us by this society—and how to think about, feel towards, and treat those who are assigned to other races and genders. We will also be looking at how filmmakers who hold marginalized identities have, in recent years, made movies that actively seek to address the disastrous racial violence in the United States. During the first part of the term, we will use films to learn and practice tools for analyzing how race, gender, and sexual orientation function in film—and how this shapes privilege and oppression in the real world. The remainder of the course looks at films from the past few years, seeking to understand and compare the different choices that their (mostly Black) creators made—in terms of different genres, settings, styles, etc.—in order to engage with present-day issues of anti-Blackness. -

A Look Into the South According to Quentin Tarantino Justin Tyler Necaise University of Mississippi

University of Mississippi eGrove Honors College (Sally McDonnell Barksdale Honors Theses Honors College) 2018 The Cinematic South: A Look into the South According to Quentin Tarantino Justin Tyler Necaise University of Mississippi. Sally McDonnell Barksdale Honors College Follow this and additional works at: https://egrove.olemiss.edu/hon_thesis Part of the American Studies Commons Recommended Citation Necaise, Justin Tyler, "The Cinematic South: A Look into the South According to Quentin Tarantino" (2018). Honors Theses. 493. https://egrove.olemiss.edu/hon_thesis/493 This Undergraduate Thesis is brought to you for free and open access by the Honors College (Sally McDonnell Barksdale Honors College) at eGrove. It has been accepted for inclusion in Honors Theses by an authorized administrator of eGrove. For more information, please contact [email protected]. THE CINEMATIC SOUTH: A LOOK INTO THE SOUTH ACCORDING TO QUENTIN TARANTINO by Justin Tyler Necaise A thesis submitted to the faculty of The University of Mississippi in partial fulfillment of the requirements of the Sally McDonnell Barksdale Honors College. Oxford May 2018 Approved by Advisor: Dr. Andy Harper Reader: Dr. Kathryn McKee Reader: Dr. Debra Young © 2018 Justin Tyler Necaise ALL RIGHTS RESERVED To Laney The most pure-hearted person I have ever met. ACKNOWLEDGEMENTS Thank you to my family for being the most supportive group of people I could have ever asked for. Thank you to my father, Heath, who instilled my love for cinema and popular culture and for shaping the man I am today. Thank you to my mother, Angie, who taught me compassion and a knack for looking past the surface to see the truth that I will carry with me through life.

Django Unchained (Rated R) Under the Tree on Dec

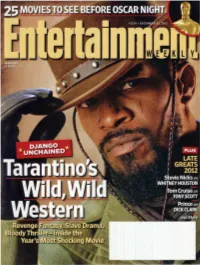

QUENTIN TARANTINO knows the secret of yuletide gift giving: Get them something they wouldn't get themselves. After all, who but Tarantino, the man who machine-gunned Hitler's face off in his WWII remix Inglourious Basterds, would think of laying a hyper-violent all-star slave-revenge-Western fairy tale like Django Unchained (rated R) under the tree on Dec. 25? Like so many other presents, Django was wrapped just in time. After seven months of production, additional reshoots, weather complications, a revolving-door cast list, a sudden death, and a sprint to the finish in the editing room, Tarantino has managed to deliver the film for its festive release date. With that long, dusty trail now behind him, the filmmaker slumps beneath a foreign-language Pulp Fiction poster in a corner of Do Hwa, the Korean restaurant he co-owns in Manhattan's West Village, and admits he's tired. "It's been a long journey, man." 26 | EW.COM Dec. 21, 2012 (Clockwise from far left) Foxx; Foxx, Leonardo DiCaprio, and Waltz; Kerry Washington and Samuel L. Jackson; Washington Keep in mind: An exhausted Quentin Tarantino, even at 49 and a long way from his wunderkind years, is still as energetic as a regular person would be after two strong coffees. And he's characteristically enthusiastic about his new film: a spaghetti Western transposed to the antebellum South that follows a slave (Jamie [...] silent. To a degree, Tarantino understands the response. "There is no setup for Django, for what we're trying to do.

The South According to Quentin Tarantino

University of Mississippi eGrove Electronic Theses and Dissertations Graduate School 2015 The South According To Quentin Tarantino Michael Henley University of Mississippi Follow this and additional works at: https://egrove.olemiss.edu/etd Part of the American Studies Commons Recommended Citation Henley, Michael, "The South According To Quentin Tarantino" (2015). Electronic Theses and Dissertations. 887. https://egrove.olemiss.edu/etd/887 This Thesis is brought to you for free and open access by the Graduate School at eGrove. It has been accepted for inclusion in Electronic Theses and Dissertations by an authorized administrator of eGrove. For more information, please contact [email protected]. THE SOUTH ACCORDING TO QUENTIN TARANTINO A Thesis presented in partial fulfillment of requirements for the degree of Master of Arts in the Department of the Center for the Study of Southern Culture The University of Mississippi by MICHAEL LEE HENLEY August 2015 Copyright Michael Lee Henley 2015 ALL RIGHTS RESERVED ABSTRACT This thesis explores the filmmaker Quentin Tarantino’s portrayal of the South and southerners in his films Pulp Fiction (1994), Death Proof (2007), and Django Unchained (2012). In order to do so, it explores and explains Tarantino’s mixture of genres, influences, and filmmaking styles in which he places the South and its inhabitants into current trends in southern studies which aim to examine the South as a place that is defined by cultural reproductions, lacking authenticity, and cultural distinctiveness. Like Godard before him, Tarantino’s movies are commentaries on film history itself. In short, Tarantino’s films actively reimagine the South and southerners in a way that is not nostalgic for a “southern way of life,” nor meant to exploit lower class whites. -

Genre, Justice & Quentin Tarantino

University of South Florida Scholar Commons Graduate Theses and Dissertations Graduate School 11-6-2015 Genre, Justice & Quentin Tarantino Eric Michael Blake University of South Florida, [email protected] Follow this and additional works at: http://scholarcommons.usf.edu/etd Part of the Film and Media Studies Commons, and the Theory and Criticism Commons Scholar Commons Citation Blake, Eric Michael, "Genre, Justice & Quentin Tarantino" (2015). Graduate Theses and Dissertations. http://scholarcommons.usf.edu/etd/5911 This Thesis is brought to you for free and open access by the Graduate School at Scholar Commons. It has been accepted for inclusion in Graduate Theses and Dissertations by an authorized administrator of Scholar Commons. For more information, please contact [email protected]. Genre, Justice, & Quentin Tarantino by Eric M. Blake A thesis submitted in partial fulfillment of the requirements for the degree of Master of Arts Department of Humanities and Cultural Studies With a concentration in Film Studies College of Arts & Sciences University of South Florida Major Professor: Andrew Berish, Ph.D. Amy Rust, Ph.D. Daniel Belgrad, Ph.D. Date of Approval: October 23, 2015 Keywords: Cinema, Film, Film Studies, Realism, Postmodernism, Crime Film Copyright © 2015, Eric M. Blake TABLE OF CONTENTS Abstract; Introduction

How to Be a Film Critic in Five Easy Lessons

How to Be a Film Critic in Five Easy Lessons By Christopher K. Brooks This book first published 2020 Cambridge Scholars Publishing Lady Stephenson Library, Newcastle upon Tyne, NE6 2PA, UK British Library Cataloguing in Publication Data A catalogue record for this book is available from the British Library Copyright © 2020 by Christopher K. Brooks All rights for this book reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording or otherwise, without the prior permission of the copyright owner. ISBN (10): 1-5275-4322-6 ISBN (13): 978-1-5275-4322-5 Lou Lopez and his Emerson Biggins Staff The disenfranchised of the English Department: Rebeccah Bree, Fran Connor, Margaret Dawe, Darren Defrain, Albert Goldbarth, Jean Griffith, and Sam Taylor Brian Evans, Mary Sherman, Kerry Branine, Mythili Menon, and T.J. Boynton. Peter Zoller, William Woods, Kim Hamilton, and Susan Wilcox Mary Waters and Ron Matson, forgiven . This book is for all of you TABLE OF CONTENTS Introduction: A Background to Film Criticism and Millennial Viewers; How to Be a Film Critic in Five Easy Lessons: A Primer for Film Students and Critic Wannabes; Chapter One ...

Get out of La La Land! Naturalizing the Colour Line with “Colour-Blind" Cinema

Get Out of La La Land! Naturalizing the Colour Line with “Colour-Blind" Cinema by Gavin Ward A thesis submitted to the Faculty of Graduate and Postdoctoral Affairs in partial fulfillment of the requirements for the degree of Master of Arts in Sociology Carleton University Ottawa, Ontario © 2019 Gavin Ward Abstract This thesis is grounded in semiotics, discourse, and critical race theory to identify and analyze contemporary racial representations in Hollywood cinema during the “post-race” era. “Post- race” has been used sporadically since 1971 when the concept was first published in James Wooton’s New York Times article Compact Set Up for ‘Post-Racial’ South, however I appropriate it here to more accurately reflect the time period from 2008 to 2019. During this time, the United States of America had elected its first African-American president to the White House. For many, this act had symbolically legitimized America as a “post-race” nation in which contemporary racial inequalities could be explained by the principles of universal liberalism and meritocracy, thus “naturalizing” contemporary racial inequalities with nonracial dynamics. This ideology minimizes the impact of historical racisms and uses racial “colour-blindness” to construct a false sense of racial harmony. Antonio Gramsci had proposed that mass media, such as films, have been used to reinforce dominant ideology through cultural hegemony. In the United States, Hollywood has been an important vehicle of discursive formation and narrative control and contributes to the cementing of America’s “post-race” la la land. In this thesis, I conclude that Hollywood films continue to placate concerns of race relations for the dominant ingroup through cinematic escapism, glamour and romance. -

Tarantino Unchained

Student: Pablo Nogueras Tutor: Montserrat Gispert Tarantino unchained Presentation I have always loved cinema. When I was little, my father would take me to watch any Disney animated movie, and I really enjoyed them. As the years passed, my tastes evolved, and movies such as the Star Wars and Indiana Jones sagas had a great impact on me. Then I discovered Christopher Nolan, Ridley Scott, Guy Ritchie, Scorsese… and a new world of cinema appeared in my life and made me realize it was what I really wanted to do in the future. Then Reservoir Dogs appeared and changed my way of seeing things. I ended up watching all of Tarantino’s movies, and I would never be the same again. The way the director sees things, and then represents them in his movies, is completely different from typical Hollywood cinema. He had a huge impact on me, and I wanted to know how he did it. That is how the idea of making the research project about Quentin Tarantino came about. Objectives When one sees a film by Quentin Tarantino, one unconsciously knows that it is one of his works. But what makes Tarantino so Tarantino? This project aims to identify and analyze the most relevant traits of Quentin Tarantino’s films. Other objectives are: —Find the connections between the movies. —Relate the semblance of his productions to the action movies and spaghetti westerns from the 70s, find the movies by which Tarantino was inspired, and examine how accurately he uses those references in his films. —Count the number of deaths and bullets shot in each film, and which weapons were used.