Interpreters Machine Interpreters

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Using the UniBasic Debugger

UniData: Using the UniBasic Debugger (UDT-720-UDEB-1)
Notices
Edition: Publication date: July 2008. Book number: UDT-720-UDEB-1. Product version: UniData 7.2.
Copyright © Rocket Software, Inc. 1988-2008. All Rights Reserved.
Trademarks: The following trademarks appear in this publication:
Rocket Software™, Dynamic Connect®, RedBack®, SystemBuilder™, UniData®, UniVerse™, U2™, U2.NET™, U2 Web Development Environment™, wIntegrate®: Rocket Software, Inc.
Microsoft® .NET, Microsoft® Office Excel®, Outlook®, Word, Windows®, Windows® 7, Windows Vista®: Microsoft Corporation
Java™ and all Java-based trademarks and logos: Sun Microsystems, Inc.
UNIX®: X/Open Company Limited
The above trademarks are property of the specified companies in the United States, other countries, or both. All other products or services mentioned in this document may be covered by the trademarks, service marks, or product names as designated by the companies who own or market them.
License agreement: This software and the associated documentation are proprietary and confidential to Rocket Software, Inc., are furnished under license, and may be used and copied only in accordance with the terms of such license and with the inclusion of the copyright notice.

Kednos PL/I for OpenVMS Systems User Manual

Kednos PL/I for OpenVMS Systems User Manual. Order Number: AA-H951E-TM. November 2003. This manual provides an overview of the PL/I programming language. It explains programming with Kednos PL/I on OpenVMS VAX Systems and OpenVMS Alpha Systems. It also describes the operation of the Kednos PL/I compilers and the features of the operating systems that are important to the PL/I programmer.
Revision/Update Information: This revised manual supersedes the PL/I User’s Manual for VAX VMS, Order Number AA-H951D-TL.
Operating System and Version: For Kednos PL/I for OpenVMS VAX: OpenVMS VAX Version 5.5 or higher. For Kednos PL/I for OpenVMS Alpha: OpenVMS Alpha Version 6.2 or higher.
Software Version: Kednos PL/I Version 3.8 for OpenVMS VAX; Kednos PL/I Version 4.4 for OpenVMS Alpha.
Published by: Kednos Corporation, Pebble Beach, CA, www.Kednos.com
First Printing, August 1980. Revised, November 1983. Updated, April 1985. Revised, April 1987. Revised, January 1992. Revised, May 1992. Revised, November 1993. Revised, April 1995. Revised, October 1995. Revised, November 2003.
Kednos Corporation makes no representations that the use of its products in the manner described in this publication will not infringe on existing or future patent rights, nor do the descriptions contained in this publication imply the granting of licenses to make, use, or sell equipment or software in accordance with the description. Possession, use, or copying of the software described in this publication is authorized only pursuant to a valid written license from Kednos Corporation or an authorized sublicensor.

Data General Extended Algol 60 Compiler

DATA GENERAL EXTENDED ALGOL 60 COMPILER

Data General's Extended ALGOL is a powerful language which allows systems programmers to develop programs on mini computers that would otherwise require the use of much larger, more expensive computers. No other mini computer offers a language with the programming features and general applicability of Data General's Extended ALGOL.

ALGOL 60 is the most widely used language for describing programming algorithms. It has a flexible, generalized, arithmetic organization and a modular, "building block" structure that provides clear, easily readable documentation. The language is powerful and concise, allowing the systems programmer to state algorithms without resorting to "tricks" to bypass the language.

These characteristics of ALGOL are especially important in the development of working prototype systems. The clear documentation makes it easy for the programmer to [...]

[...]tial I/O with optional formatting. These extensions complement the basic structure of ALGOL and significantly increase the convenience of ALGOL programming without making the language unwieldy.

FEATURES OF DATA GENERAL'S EXTENDED ALGOL

Character strings are implemented as an extended data type to allow easy manipulation of character data. The program may, for example, read in character strings, search for substrings, replace characters, and maintain character string tables efficiently.

Multi-precision arithmetic allows up to 60 decimal digits of precision in integer or floating point calculations.

Device-independent I/O provides for reading and writing in line mode, sequential mode, or random mode. Free form reading and writing is permitted for all data types, or output can be formatted according to a "picture" of the output line or lines.

Supplementary Materials

Contents
2 Programming Language Syntax: 2.3.5 Syntax Errors; 2.4 Theoretical Foundations (2.4.1 Finite Automata, 2.4.2 Push-Down Automata, 2.4.3 Grammar and Language Classes); 2.6 Exercises; 2.7 Explorations
3 Names, Scopes, and Bindings: 3.4 Implementing Scope (3.4.1 Symbol Tables, 3.4.2 Association Lists and Central Reference Tables); 3.8 Separate Compilation (3.8.1 Separate Compilation in C, 3.8.2 Packages and Automatic Header Inference, 3.8.3 Module Hierarchies); 3.10 Exercises; 3.11 Explorations
4 Semantic Analysis: 4.5 Space Management for Attributes (4.5.1 Bottom-Up Evaluation, 4.5.2 Top-Down Evaluation); 4.8 Exercises; 4.9 Explorations
5 Target Machine Architecture: 5.1 The Memory Hierarchy; 5.2 Data Representation (5.2.1 Integer Arithmetic, 5.2.2 Floating-Point Arithmetic); 5.3 Instruction Set Architecture (ISA) (5.3.1 Addressing Modes, 5.3.2 Conditions and Branches); 5.4 Architecture and Implementation (5.4.1 Microprogramming, 5.4.2 Microprocessors, 5.4.3 RISC, 5.4.4 Multithreading and Multicore, 5.4.5 Two Example Architectures: The x86 and ARM); 5.5 Compiling for Modern Processors (5.5.1 Keeping the Pipeline Full, 5.5.2 Register Allocation); 5.6 Summary and Concluding Remarks; 5.7 Exercises; 5.8 Explorations; 5.9 Bibliographic Notes
6 Control Flow: 6.5.4 Generators in Icon; 6.7 Nondeterminacy; 6.9 Exercises; 6.10 Explorations

CS412/CS413 Introduction to Compilers Tim Teitelbaum Lecture 12

CS412/CS413 Introduction to Compilers, Tim Teitelbaum. Lecture 12: Symbol Tables. February 15, 2008. CS 412/413 Spring 2008.

Where We Are
Source code (character stream), e.g. if (b == 0) a = b; -> Lexical Analysis -> token stream -> Syntax Analysis (Parsing) -> abstract syntax tree (AST) -> Semantic Analysis -> decorated AST, or errors (incorrect program).

Non-Context-Free Syntax
• Programs that are correct with respect to the language’s lexical and context-free syntactic rules may still contain other syntactic errors
• Lexical analysis and context-free syntax analysis are not powerful enough to ensure the correct usage of variables, objects, functions, statements, etc.
• Non-context-free syntactic analysis is known as semantic analysis

Incorrect Programs
• Example 1: lexical analysis does not distinguish between different variable or function identifiers (it returns the same token for all identifiers): int a; a = 1; versus int a; b = 1;
• Example 2: syntax analysis does not correlate the declarations with the uses of variables in the program: int a; a = 1; versus a = 1;
• Example 3: syntax analysis does not correlate the types from the declarations with the uses of variables: int a; a = 1; versus int a; a = 1.0;

Goals of Semantic Analysis
• Semantic analysis ensures that the program satisfies a set of additional rules regarding the

Design and Implementation of the GNU Prolog System

Design and Implementation of the GNU Prolog System
Daniel Diaz, University of Paris 1, CRI, bureau C1407, 90, rue de Tolbiac, 75013 Paris, FRANCE and INRIA-Rocquencourt
Philippe Codognet, University of Paris 6, LIP6, case 169, 8, rue du Capitaine Scott, 75015 Paris, FRANCE and INRIA-Rocquencourt

Abstract
In this paper we describe the design and the implementation of the GNU Prolog system. This system draws on our previous experience of compiling Prolog to C in the wamcc system and of compiling finite domain constraints in the clp(FD) system. The compilation scheme has however been redesigned in order to overcome the drawbacks of compiling to C. In particular, GNU-Prolog is based on a low-level mini-assembly platform-independent language that makes it possible to avoid compiling C code, and thus drastically reduces compilation time. It also makes it possible to produce small stand-alone executable files as the result of the compilation process. Interestingly, GNU Prolog is now compliant to the ISO standard, includes several extensions (OS interface, sockets, global variables, etc) and integrates a powerful constraint solver over finite domains. The system is efficient and in terms of performance is comparable with commercial systems for both the Prolog and constraint aspects.

1 Introduction
GNU Prolog is a free Prolog compiler supported by the GNU organization (http://www.gnu.org/software/prolog). It is a complete system which includes: floating point numbers, streams, dynamic code, DCG, operating system interface, sockets, a Prolog debugger, a low-level WAM debugger, line editing facilities with completion on atoms, etc.

Design and Implementation of the Symbol Table for Object-Oriented Programming Language

International Journal of Database Theory and Application, Vol.10, No.7 (2017), pp.27-40, http://dx.doi.org/10.14257/ijdta.2017.10.7.03
Design and Implementation of the Symbol Table for Object-Oriented Programming Language
Yangsun Lee, Dept. of Computer Engineering, Seokyeong University, 16-1 Jungneung-Dong, Sungbuk-Ku, Seoul 136-704, KOREA

Abstract
The symbol table used in the existing compiler stores the information for one symbol in a plurality of sub tables, and the abstract syntax tree necessary for generating symbols has a binary tree structure composed of a single data structure node. This structure increases the source code complexity of modules that generate symbols and modules that reference symbol tables, and when designing a compiler for a new language, it is necessary to newly design an abstract syntax tree and a symbol table structure considering the characteristics of the language. In this paper, we apply the object-oriented principle and visitor pattern to improve the abstract syntax tree structure and design and implement the symbol table for the object-oriented language. The design of AST (abstract syntax trees) with object-oriented principles and Visitor patterns reduces the time and cost of redesign because it makes it easy to add features of the language without the need to redesign the AST (abstract syntax tree) for the new object-oriented language. In addition, it is easy to create a symbol through the Visitor pattern. Symbol tables using the open-close principle and the dependency inversion principle can improve the code reusability of the source code that creates and refers to the table and improve the readability of the code.

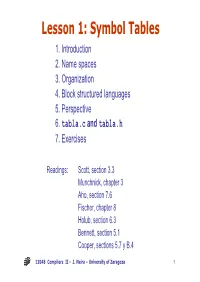

Lesson 1: Symbol Tables

Lesson 1: Symbol Tables
1. Introduction 2. Name spaces 3. Organization 4. Block structured languages 5. Perspective 6. tabla.c and tabla.h 7. Exercises
Readings: Scott, section 3.3; Muchnick, chapter 3; Aho, section 7.6; Fischer, chapter 8; Holub, section 6.3; Bennett, section 5.1; Cooper, sections 5.7 and B.4
12048 Compilers II - J. Neira - University of Zaragoza

The Symbol Table
• Why is it important?
– Lexical analysis? (int a, 1t;)
– Syntactic analysis?
– Semantic analysis?
– Code generation?
– Code optimization?
– Execution? (while a then ...)
• Why is it particular and complex?
– What information does it contain?
– How/when is information included?
– How/when is it accessed?
– How/when is it deleted?
• Do interpreters need one? (c := i > 1;)
• Do debuggers?
• Unassemblers?

1. Introduction
Symbol table: structure used by the compiler to store information (in the form of attributes) associated to symbols declared in the program.
It can additionally include:
– temporary symbols
– labels
– predefined symbols
• Conceptually it is a set of records
• Its organization is strongly influenced by syntactic as well as semantic aspects of the language at hand.
• Program dictionary:
– name: lexical analysis
– type: syntactic analysis
– scope: semantic analysis
– address: code generation
• Types available in the language: determine the CONTENTS of the table.
• Scope rules: determine the visibility of the symbols, i.e., the table’s ACCESS MECHANISM.

Table contents
• Reserved words: they have a special meaning; they CANNOT be redefined. (program begin end type var array if ..)
• Literals, constants that denote a certain value
• Symbols generated by the compiler

Implementation of a Subset of Modes in an Algol 68 Compiler

IMPLEMENTATION OF A SUBSET OF MODES IN AN ALGOL 68 COMPILER
By WALTER MICHAEL SEAY, Bachelor of Science, Troy State University, Troy, Alabama, 1974
Submitted to the Faculty of the Graduate College of the Oklahoma State University in partial fulfillment of the requirements for the Degree of MASTER OF SCIENCE, July, 1976

PREFACE
This thesis is a description of the mode facilities which have been added to the Oklahoma State University ALGOL 68 Compiler. Also included is a description of the changes that were required to update the language accepted by the compiler in accordance with the newest definition. I would like to thank the faculty of the Computing and Information Sciences Department for their assistance and their desire to teach. A special thanks is in order to my advisor, Dr. G. E. Hedrick, for his invaluable assistance and understanding during my stay at Oklahoma State University. I would also like to thank my two sons, Bobby and Johnny, who often were required to be quieter than little boys should ever have to be. It is impossible for me to express properly my thanks to my wife, Kathy, who did so much more than type.

TABLE OF CONTENTS
I. INTRODUCTION: Objectives; History of the Oklahoma State University ALGOL 68 Compiler; Literature Review
II. ALGOL 68 MODES: Introduction; Tools for Building New Modes; The Subset of Modes Chosen for Implementation

Compiler Construction: The Art of Niklaus Wirth

Compiler Construction: The Art of Niklaus Wirth
Hanspeter Mössenböck, University of Linz

Abstract
Niklaus Wirth is not only a master of language design but also a pioneer of compiler construction. For four decades he has refined his techniques for building simple, efficient and reliable compilers. This paper tries to collect some general principles behind his work. It is not a paper about new compilation techniques but a reflection about Wirth's way to write compilers.

1 Introduction
Among the numerous research topics that Niklaus Wirth approached during his professional career, programming languages and their compilers clearly was the dominating theme. There is hardly anybody in Computer Science who has such a deep insight into language design as Niklaus Wirth. Since the early sixties he has been active in this field and has contributed such seminal languages as Algol-W, Pascal, Modula-2 and Oberon. And being an engineer, he was always as much concerned about the simplicity and efficiency of his compilers as about the elegance of his languages. In this paper I will try to summarize some principles of Wirth's art of compiler construction. Although I have no personal recollections from Wirth's early projects I have studied his compilers from the early eighties on and was lucky to have the chance to work with him for several years during the Oberon project. So this paper is more about what I learned from him and what I appreciate about his work. Wirth himself gave a much more thorough description of his compiler technology in his recent compiler book [22], in his Turing Award lecture [2] and in his recollections at the second conference on the History of Programming Languages [21].

Debugging of a Local, Or Remote, Java Virtual Machine (JVM)

CONTENTS

Introduction - Module #1: The Basics
To inspect a program more easily, we need to have the symbol table available for the program we intend to debug; this is accomplished using the -g flag of the compiler we are going to use (we could also debug it without the -g flag, but that is sometimes really cumbersome). In our case we will use FreeBSD 10 as the platform, and the clang compiler that comes with it. After a program is compiled using the -g flag we are able to peek inside it using the gdb debugger.

Module #2: Advanced inspection of data structures and variables
Now that we have used the display command or the print command, it is getting pretty tedious to manually inspect a variable or data structure by typing p, or display, every time we hit a breakpoint we have set. There is a command called “commands” to save us from all this typing. First, we set a breakpoint where we want to automatically inspect the data.

Module #3: Introduction to the jdb debugger
The Java Debugger (JDB) is a simple command-line debugger for Java classes.

[...] if we are lucky, and the program that dumped the core was compiled with debug options, the core file will have information about the source code and lines.

Module #5: Introduction to Dtrace
Finally, we will approach both situations using Dtrace, which is available in FreeBSD, OS X, Solaris and OpenSolaris, and will check if this tool is beneficial and a time saver in the process.

Symbol Table: Introduction

Subject: Compiler Design
Symbol Table: Introduction
1) It is a data structure of the compiler.
2) Source code -> compiler -> target code.
3) Source code enters the front end, also called analysis (lexical, syntax and semantic analysis) -> intermediate code generation -> back end (synthesis).

Compiler role: the compiler accumulates in the symbol table all the data about the source program (variables with their names, types, attributes, scopes and states), and the table conveys that information about the source code to the later phases. Symbol table creation is done at the beginning of the front end.

How does the symbol table help us?
• It gives the names of all the identifiers in the source code.
• It holds the associated information for each name and variable.
• It defines how we can access that information.
• Attributes related to an identifier that the symbol table provides: procedure call, type, record, etc.

Who creates this table?
1) The symbol table is created in the analysis part with the help of the scanner. The scanner collects information and enters the data into the symbol table.
Note: It enters only data that is not already present in the symbol table.

What is the use?
1) Used by both the front end and the back end
2) To check the identifiers present in the source
3) To check the assignment and expression statements
4) To generate intermediate and target code

Implementation of the symbol table
Operations used: insert() -> to insert a value in the table; lookup() -> to search for a value in the symbol table.
Data structures: 1) Hash table 2) Binary search tree 3) Linked list
Int a LB1 UB1

Symbol table scope: whenever we declare an element in a program, that element's scope determines the areas of the program in which it applies.
[Figure: blocks A, C and B]
Block Global: Real, integer, A, B
Block A: Real, integer
Block B: Real, integer
Blocks A and B are nested in the global block.
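
The insert() and lookup() operations and the nesting of blocks A and B inside the global block can be illustrated with a small sketch. This is only one possible arrangement, assuming a hash table (a Python dict) per scope with a link to the enclosing scope; the class name SymbolTable, the parent field and the identifiers x and i are hypothetical and do not come from the notes above.

```python
class SymbolTable:
    """One scope: a hash table (dict) from identifier name to its attributes."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent      # enclosing scope, or None for the global scope
        self.symbols = {}

    def insert(self, ident, **attributes):
        """Add ident to this scope; return False if it is already present."""
        if ident in self.symbols:
            return False
        self.symbols[ident] = attributes
        return True

    def lookup(self, ident):
        """Search this scope, then each enclosing scope; None if not found."""
        scope = self
        while scope is not None:
            if ident in scope.symbols:
                return scope.symbols[ident]
            scope = scope.parent
        return None


# Blocks A and B are both nested directly in the global block.
global_scope = SymbolTable("global")
block_a = SymbolTable("A", parent=global_scope)
block_b = SymbolTable("B", parent=global_scope)

global_scope.insert("x", type="real")
block_a.insert("x", type="integer")   # shadows the global x inside block A
block_a.insert("i", type="integer")

print(block_a.lookup("x"))   # {'type': 'integer'}
print(block_b.lookup("x"))   # {'type': 'real'}
print(block_b.lookup("i"))   # None
```

Because insert() refuses a name that is already present in the same scope, it matches the note that only new data is entered; lookup() walks outward through the parent links, so a name declared in block A is not visible from block B.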