Computer Vision Using SimpleCV and the Raspberry Pi 2

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

Premium 275+ Strong Colors

PREMIUM 275+ STRONG COLORS Die weltweit erste Street Art optimierte Sprühdose wurde The worldwide first street art optimized spray can was von Künstlern für Künstler entwickelt. Der hochdeckende developed with artists for artists. The highly opaque classic Klassiker, mit 4-fach gemahlenen Auto-K™ Pigmenten, ist with its 4 times ground Auto-K™ pigments, is the most die zuverlässigste Graffiti-Sprühdose seit 1999. re liable graffiti spray can since 1999. Mit über 275 Farbtönen verfügt das Sortiment der Moreover, with more than 275 color shades MOLOTOW™ MOLOTOW™ PREMIUM außerdem über eine der PREMIUM has one of the biggest spray paint color range. umfangreichsten Sprühdosen-Farbpaletten. PREMIUM PREMIUM Transparent 400 ml | 327300 PREMIUM Transparent PREMIUM Neon 400 ml | 327499 GRAFFITI PREMIUM 400 ml | 327000 SPRAY PAINT SEMI GLOSS MATT MATT HIGHLY HIGHEST UV AND GOOD UV AND GOOD UV RESISTANCE THE ORIGINAL WEATHER RESISTANCE WEATHER RESISTANCE WITH SEALING SINCE 1999 OPAQUE 252 COLOR SHADES TRANSPARENT 15 COLOR SHADES + 2 CLEAR COATINGS NEON 8 COLOR SHADES #181- #001 jasmin yellow 327001 #032 MAD C cherry red 327188 #063 purple violet 327138 #094 shock blue 327032 #122 riviera pastel 327216 #153 grasshopper 327064 nature green middle 327252 #203 cocoa middle 327234 #228 grey blue light 327177 2 zinc yellow 327006 signal red 327014 crocus 327199 tulip blue light 327213 riviera light 327072 cream green 327058 mustard 327049 cocoa 327126 pebble grey 327238 Händlerstempel ∙ dealer stamp Issue 12/20 #002 #033 #064 #095 #123 #154 #182 #204 #229 #182- #204- #003 cadmium yellow 327082 #034 apricot 327116 #065 lavender 327200 #096 tulip blue middle 327214 #124 TASTE riviera middle 327217 #155 hippie green 327063 mustard yellow 327262 caramel light 327253 #230 marble 327239 1 1 #182- #004 signal yellow 327165 #035 salmon orange 327134 #066 lilac 327201 #097 tulip blue 327033 #125 riviera dark 327073 #156 BACON wasabi 327222 khaki green 327263 #205 caramel 327091 #231 signal 
white 327160 2 4 Item-No. -

18 Free Ways to Download Any Video Off the Internet Posted on October 2, 2007 by Aseem Kishore Ads by Google

http://www.makeuseof.com/tag/18-free-ways-to-download-any-video-off-the-internet/ 18 Free Ways To Download Any Video off the Internet posted on October 2, 2007 by Aseem Kishore Ads by Google Download Videos Now download.cnet.com Get RealPlayer® & Download Videos from the web. 100% Secure Download. Full Movies For Free www.YouTube.com/BoxOffice Watch Full Length Movies on YouTube Box Office. Absolutely Free! HD Video Players from US www.20north.com/ Coby, TV, WD live, TiVo and more. Shipped from US to India Video Downloading www.VideoScavenger.com 100s of Video Clips with 1 Toolbar. Download Video Scavenger Today! It seems like everyone these days is downloading, watching, and sharing videos from video-sharing sites like YouTube, Google Video, MetaCafe, DailyMotion, Veoh, Break, and a ton of other similar sites. Whether you want to watch the video on your iPod while working out, insert it into a PowerPoint presentation to add some spice, or simply download a video before it’s removed, it’s quite essential to know how to download, convert, and play these videos. There are basically two ways to download videos off the Internet and that’s how I’ll split up this post: either via a web app or via a desktop application. Personally, I like the web applications better simply because you don’t have to clutter up and slow down your computer with all kinds of software! UPDATE: MakeUseOf put together an excellent list of the best websites for watching movies, TV shows, documentaries and standups online. -

2020 Global Color Trend Report

Global Color Trend Report Lip colors that define 2020 for Millennials and Gen Z by 0. Overview 03 1. Introduction 05 2. Method 06 Content 3. Country Color Analysis for Millennials and Gen Z 3.1 Millennial Lip Color Analysis by Country 08 3.2 Gen Z Lip Color Analysis by Country 09 4. 2020 Lip Color Trend Forecast 4.1 2020 Lip Color Trend Forecast for Millennials 12 4.2 2020 Lip Color Trend Forecast for Gen Z 12 5. Country Texture Analysis for Millennials and Gen Z 5.1 Millennial Lip Texture Analysis by Country 14 5.2 Gen Z Lip Texture Analysis by Country 15 6. Conclusion 17 02 Overview Millennial and Generation Z consumers hold enormous influence and spending power in today's market, and it will only increase in the years to come. Hence, it is crucial for brands to keep up with trends within these cohorts. Industry leading AR makeup app, YouCam Makeup, analyzed big data of 611,382 Millennial and Gen Z users over the course of six months. Based on our findings, we developed a lip color trend forecast for the upcoming year that will allow cosmetics The analysis is based on brands to best tailor their marketing strategy. According to the results, pink will remain the most popular color across all countries and age groups throughout 2020. The cranberry pink shade is the top favorite among Millennials and Gen Z across all countries. Gen Z generally prefers darker 611,382 shades of pink, while millennial consumers lean toward brighter shades. The second favorite shade of pink among Gen Z in Brazil, China, Japan, and the US is Ripe Raspberry. -

Primaria Digital. Aulas Digitales Móviles. Manual General Introductorio 1

ARGENTINA Primaria Digital. Aulas Digitales Móviles. Manual General Introductorio 1 Dirección de Gestión Educativa; Dirección de Educación Primaria Presenta los lineamientos del Plan Primaria Digital y cuenta con tres partes. En ellas se detallan la política de integración del país, sus objetivos, y una propuesta pedagógica para llevarla a cabo. También se trata la importancia de las Aulas Digitales móviles en la escuela primaria, sus ventajas e interacción, y se brinda una orientación para su uso. 01/08/2018 AULAS DIGITALES MÓVILES Instructivo técnico Equipo Técnico Jurisccional - Dirección Provincial de Tecnologías Educativas Ministerio de Educación Autoridades Presidente de la Nación Ing. Mauricio Macri Ministro de Educación y Deportes Lic. Esteban Bullrich Jefe de Gabinete Dr. Diego Sebastián Marías Secretario de Gestión Educativa Lic. Maximiliano Gulmanelli Secretaria de Innovación y Calidad Educativa Lic. María de las Mercedes Miguel Subsecretario de Coordinación Administrativa Sr. Félix Lacroze Gerente general Educ.ar S.E. Lic. Guillermo Fretes Directora de Educación Digital y Contenidos Multiplataforma Lic. María Florencia Ripani Director en Gestión de programas Ing. Mauro Iván Nunes Equipo Técnico Jurisccional - Dirección Provincial de Tecnologías Educativas Ministerio de Educación Argentina. Ministerio de Educación de la Nación Manual de primaria digital : instructivo técnico. - 1.a ed. - Ciudad Autónoma de Buenos Aires : Ministerio de Educación de la Nación, 2016. 39 p. : il. ; 28x20 cm. ISBN 978-950-00-1120-4 1. Formación -

How to Install and Configure Webcam Trust WB 3320X Live on Ubuntu /Debian Linux

Walking in Light with Christ - Faith, Computing, Diary Articles & tips and tricks on GNU/Linux, FreeBSD, Windows, mobile phone articles, religious related texts http://www.pc-freak.net/blog How to Install and configure webcam trust WB 3320X Live on Ubuntu /Debian Linux Author : admin I had to install WebCAM TRUST WB 3320X on one Xubuntu Linux install. Unfortunately by default the camera did not get detected (the Webcam vendor did not provide driver or specifications for Linux either). Thus I researched on the internet if and how this camera can be made work on Ubuntu Linux. I found some threads discussing the same issues as mine in Ubuntu Forums here . The threads even suggested a possible fix, which when followed literally did not work on this particular 32-bit Xubuntu 12.04.1 installation. I did 20 minutes research more but couldn't find much on how to make the Webcam working. I used Cheese and Skype to test if the webcamera can capture video, but in both of them all I see was just black screen. he camera was detected in lsusb displayed info as: # lsusb | grep -i webcam Bus 002 Device 002: ID 093a:2621 Pixart Imaging, Inc. PAC731x Trust Webcam After reading further a bit I found out some people online suggesting loading the gspca kernel module. I searched what kind of gspca*.kokernel modules are available using: 1 / 8 Walking in Light with Christ - Faith, Computing, Diary Articles & tips and tricks on GNU/Linux, FreeBSD, Windows, mobile phone articles, religious related texts http://www.pc-freak.net/blog locate gspca |grep -i .ko 1. -

MX-19.2 Users Manual

MX-19.2 Users Manual v. 20200801 manual AT mxlinux DOT org Ctrl-F = Search this Manual Ctrl+Home = Return to top Table of Contents 1 Introduction...................................................................................................................................4 1.1 About MX Linux................................................................................................................4 1.2 About this Manual..............................................................................................................4 1.3 System requirements..........................................................................................................5 1.4 Support and EOL................................................................................................................6 1.5 Bugs, issues and requests...................................................................................................6 1.6 Migration............................................................................................................................7 1.7 Our positions......................................................................................................................8 1.8 Notes for Translators.............................................................................................................8 2 Installation...................................................................................................................................10 2.1 Introduction......................................................................................................................10 -

Pipenightdreams Osgcal-Doc Mumudvb Mpg123-Alsa Tbb

pipenightdreams osgcal-doc mumudvb mpg123-alsa tbb-examples libgammu4-dbg gcc-4.1-doc snort-rules-default davical cutmp3 libevolution5.0-cil aspell-am python-gobject-doc openoffice.org-l10n-mn libc6-xen xserver-xorg trophy-data t38modem pioneers-console libnb-platform10-java libgtkglext1-ruby libboost-wave1.39-dev drgenius bfbtester libchromexvmcpro1 isdnutils-xtools ubuntuone-client openoffice.org2-math openoffice.org-l10n-lt lsb-cxx-ia32 kdeartwork-emoticons-kde4 wmpuzzle trafshow python-plplot lx-gdb link-monitor-applet libscm-dev liblog-agent-logger-perl libccrtp-doc libclass-throwable-perl kde-i18n-csb jack-jconv hamradio-menus coinor-libvol-doc msx-emulator bitbake nabi language-pack-gnome-zh libpaperg popularity-contest xracer-tools xfont-nexus opendrim-lmp-baseserver libvorbisfile-ruby liblinebreak-doc libgfcui-2.0-0c2a-dbg libblacs-mpi-dev dict-freedict-spa-eng blender-ogrexml aspell-da x11-apps openoffice.org-l10n-lv openoffice.org-l10n-nl pnmtopng libodbcinstq1 libhsqldb-java-doc libmono-addins-gui0.2-cil sg3-utils linux-backports-modules-alsa-2.6.31-19-generic yorick-yeti-gsl python-pymssql plasma-widget-cpuload mcpp gpsim-lcd cl-csv libhtml-clean-perl asterisk-dbg apt-dater-dbg libgnome-mag1-dev language-pack-gnome-yo python-crypto svn-autoreleasedeb sugar-terminal-activity mii-diag maria-doc libplexus-component-api-java-doc libhugs-hgl-bundled libchipcard-libgwenhywfar47-plugins libghc6-random-dev freefem3d ezmlm cakephp-scripts aspell-ar ara-byte not+sparc openoffice.org-l10n-nn linux-backports-modules-karmic-generic-pae -

Crushed Fruits and Syrups William Fenton Robertson University of Massachusetts Amherst

University of Massachusetts Amherst ScholarWorks@UMass Amherst Masters Theses 1911 - February 2014 1936 Crushed fruits and syrups William Fenton Robertson University of Massachusetts Amherst Follow this and additional works at: https://scholarworks.umass.edu/theses Robertson, William Fenton, "Crushed fruits and syrups" (1936). Masters Theses 1911 - February 2014. 1914. Retrieved from https://scholarworks.umass.edu/theses/1914 This thesis is brought to you for free and open access by ScholarWorks@UMass Amherst. It has been accepted for inclusion in Masters Theses 1911 - February 2014 by an authorized administrator of ScholarWorks@UMass Amherst. For more information, please contact [email protected]. MASSACHUSETTS STATE COLLEGE Us LIBRARY PHYS F SCI LD 3234 M268 1936 R652 CRUSHED FRUITS AND SYRUPS William Fenton Robertson Thesis submitted for the degree of Master of Science Massachusetts State College, Amherst June 1, 1936 8 TABLE OF CONTENTS I. INTRODUCTION Page 1 II. GENERAL DISCUSSION OF PRODUCTS, TYPES AND TERMS AS USED IN THE TRADE 2 Pure Product Pure Flavored Products Imitation Products Types of Products Terms used in fountain supply trade and their explanation III. DISCUSSION OF MATERIALS AND SOURCE OF SUPPLY 9 Fruits Syrups and Juices Colors Flavors Other Ingredients IV. MANUFACTURING PROCEDURE AND GENERAL AND SPECIFIC FORMULAE 15 Fruits - General Formula Syrups - General Formula Flavored Syrups - General Formula Specific Formulae Banana Extract, Imitation Banana Syrup, Imitation Birch Beer Extract, Imitation Birch Beer Syrup, Imitation Cherry Syrup, Imitation Chocolate Flavored Syrup Chooolate Flavored Syrup, Double Strength Coffee Syrup Ginger Syrup Grape Syrup Lemon Syrup, No. I Lemon Syrup, No. 
II Lemon and Lime Syrup Orange Syrup Orangeade cx> Pineapple Syrup Raspberry Syrup ~~~ Root Beer Syrup ^ Strawberry Syrup ui TABLE OF CONTENTS (Continued) Vanilla Syrup Butterscotch Caramel Fudge Cherries Frozen Pudding Chocolate Fudge Ginger Glace Marshmallow Crushed Raspberries Walnuts in Syrup Pectin Emulsion CRUSHED FRUIT V. -

2021 FALL PLUG PROGRAM Tray Sizes Item Tray Sizes Item 144 288 Cabbage Flowering Osaka 144 288 Kale Flowering Nagoya

2021 FALL PLUG PROGRAM Tray Sizes Item Tray Sizes Item 144 288 Cabbage Flowering Osaka 144 288 Kale Flowering Nagoya . Height: 6-12” Spread: 12-18” . Height: 10-12 Spread: 15-18” Mix Red Mix Rose Pink White Red White 144 288 Cabbage Flowering Pigeon 144 288 Kale Flowering Peacock . Height: 6-10” Spread: 12-14” . Height: 8-12” Spread: 12-14” Purple Victoria Red White Red White 144 288 Kale Flowering Redbor 288 Calendula Bon Bon . Height: 8-24” (can grow up to 3ft) Spread: 26” . Height: 10-12” Spread: 10-12” Apricot Orange 144 288 Kale Flowering Winterbor Light Yellow Yellow . Height: 24-30” Spread: 8” Mix 288 Kale Flowering Yokohama 144 288 Celosia Dragons’ Breath . Height: 5-7” Spread: 8” . Height: 24” Spread: 16” Mix White Red 288 Dianthus Coronet . Height: 8-10” Spread: 8-10” 144 288 Marigold Antigua Cherry Red Strawberry . Height: 10-12” Spread: 10-12” Flower Size: 3” Mix White Gold Primrose Rose Mix Yellow Orange 288 Dianthus Ideal Select . Height: 8-10” Spread: 8” 144 288 Marigold Durango Formula Mix Violet . Height: 10-12” Spread: 6-8” Flower Size: 2-2.5” Raspberry White Bee Orange Red White Fire Bolero Outback Mix Rose Flame Red Gold Tangerine 288 Dianthus Super Parfait Mix Yellow . Height: 6-8” Spread: 8-10” Mix Red Peppermint 288 Mustard Ornamental Miz America Raspberry Strawberry . Height: 4-10” Spread: 4-8” 288 Dianthus Telstar 144 288 Mustard Ornamental Red Giant . Height: 8-10” Spread: 9” . Height: 24” Spread: 24” Burgundy Purple 144 Ornamental Pepper Chilly Chili Carmine Rose Purple Picotee . -

SPRING PLANT SALE List of Perennials

Do you have extra unused GALLON SPRING PLANT SALE SIZE ONLY plant pots? We will reuse gallon-sized round plant pots ONLY ! List of Perennials We do not use square or small pots, or plant trays. Please return to Roots and Branches. Leave at end of the driveway-290 Green Valley Place Friday, May 14th (One block east of Main & Vine, then right on Eder to Green Valley place, West Bend.) Noon to 7:00pm Saturday, May 15th N 8:00am to 1:00pm Corner Of Main & Vine West Bend Plant Pricing Perennials: $6 gallon pots Annual 4-pack flats $15 4 1/2” Annual Pots- $4 each (No Flat Discount) LOCALLY GROWN, WINTER-HARDY PERENNIALS LOCALLY GROWN ANNUALS Questions call 335-5083 or visit rootsbranches.org Shade Tolerant Perennials Part Shade to Sun Perennials Hosta: Excellent foliage plants for shade. Incredible range of leaf color and shape. Some varieties available are: Big Root Geranium: Sun to part shade– Fragrant, hardy groundcover chokes out weeds & looks nice spring thru fall, pink flowers late spring, drought & heat tolerant, ht 12-18” Island Breeze: Bright yellow leaves with red stems, ht 12” Bleeding Heart: Part shade – two varieties available Pink or White! Heart shaped Honeybells: Large apple green leaves, ht 21-23” flowers late spring, ht 31” Big Leaf Tricolor: Large heart shaped leaf with blue/green center and Dwarf Iris ‘Pixie Princess’: Part shade– White with sharp blue border bearded variety, light green and cream edges & streaks, ht 24-28” early bloom, ht 11” Guacamole: Huge glossy apple green leaves with streaked dark green margins, -

Rose Primary Colors and Hues

Rose Primary Colors and Hues for roses available at Alden Lane Nursery Some roses will exhibit multiple colors and/or shades Most roses will exhibit these colors when opening. Continued sun exposure will induce some color fading. ORANGE – Includes Salmon, Apricot, Melon, Preach, Copper Abbaye De Cluny Hollywood Star Playboy Abraham Darby Hot Cocoa Polka Adobe Sunrise Hot n Spicy Port Sunlight All aTwitter Jacobs Robe Pumpkin Patch America Josephs Coat Rainbows End Apricot Candy Jubilee Celebration Remember Me Austrian Copper Judy Garland Rio Samba Brandy Just Joey Royal Sunset Bronze Star Lady of Shalott Salmon Sunblaze Carding Mill Lasting Peace Smoke Rings Cary Grant Livin Easy Spanish Sunset Charisma Livin Easy-Easy Going Strike It Rich Chiluly Mulitigraft Sundowner Chris Evert Mandarin Sunblaze Sunset Celebration Colorific Mardi Gras Sunstruck Day Breaker Marilyn Monroe Tahitian Sunset Drift – Coral Marmalade Skies Tangerine Streams Drift - Peach New Year Tequila Supreme Easy Does It Orange Crush Tiddly Winks Eyeconic Melon Lemonade Oranges n Lemons Trumpeter Eyeconic Pink Lemonade Over The Moon Valencia Fragrant Cloud Passionate Kisses Vavoom French Lace Pink Flamingo We Salute You Garden Sun Westerland Gingersnap Alden Lane Nursery 981 Alden Lane Livermore, CA 94550 (925)447-0280 www.aldenlane.com Rose Primary Colors and Hues for roses available at Alden Lane Nursery Some roses will exhibit multiple colors and/or shades Most roses will exhibit these colors when opening. Continued sun exposure will induce some color fading. PINK -

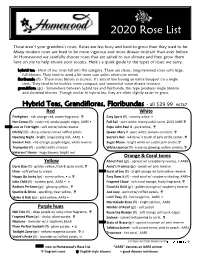

2020 Rose List

2020 Rose List These aren’t your grandma’s roses. Roses are less fussy and hard-to-grow than they used to be. Many modern roses are bred to be more vigorous and more disease resistant than ever before. At Homewood we carefully choose roses that are suited to our climate and then grow them here on site to help ensure your success. Here’s a quick guide to the types of roses we carry. hybrid tea - Most of our roses fall into this category. These are classic, long-stemmed roses with large, full blooms. They tend to need a bit more care unless otherwise noted. floribunda (fl) - These roses bloom in clusters. It’s almost like having an entire bouquet on a single stem. They tend to be bushier, more compact, and somewhat more disease resistant. grandiflora (gr) - Somewhere between hybrid tea and floribunda, this type produces single blooms and clustered blooms. Though similar to hybrid tea, they are often slightly easier to grow. Hybrid Teas, Grandifloras, Floribundas - all $29.99 #6767 Red White Firefighter - rich orange-red, sweet fragrance, ♕ Easy Spirit (fl) - creamy white, •♕ Hot Cocoa (fl) - rusty red, smoky purple edges, AARS •♕ Full Sail - pure white, honeysuckle scent, 2013 AARS ♕ Love at First Sight - soft red w/ white reverse♕♕♕ Pope John Paul II - pure white, ♕ Oh My! (fl) - deep, velvety red w/ ruffled petals♕♕ Queen Mary 2 - pure white, banana-scented, ♕ Opening Night - bright, long-lasting red, AARS •♕ Secret’s Out - white w/ a touch of pink at the center, ♕ Smokin’ Hot - red-orange, purple edges, white reverse♕ Sugar Moon -