AP2009-05Report.Pdf

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Operating Instructions Present Weather Sensor Parsivel

Operating instructions: Present Weather Sensor Parsivel (English). We reserve the right to make technical changes! Table of contents: 1 Scope of delivery; 2 Part numbers; 3 Parsivel Factory Settings; 4 Safety instructions; 5 Introduction; 5.1 Functional principle; 5.2 Connection Options for the Parsivel; 6 Installing the Parsivel; 6.1 Cable Selection; 6.2 Wiring the Parsivel; 6.3 Grounding the Parsivel; 6.4 Installing the Parsivel; 7 Connecting the Parsivel to a data logger; 7.1 Connecting the Parsivel to the LogoSens Station Manager via RS-485 interface; 7.2 Connecting the Parsivel to a Data logger via the SDI-12 Interface; 7.3 Connecting the Parsivel to a Data Logger with Impulse/Status Input; 8 Connecting the Parsivel to a PC; 8.1 Connecting the Parsivel to Interface Converter RS-485/RS-232 (Accessories); 8.2 Connecting the Parsivel to the ADAM-4520 Converter RS-485/RS-232 (Accessories); 8.3 Connecting the Parsivel to Interface Converter RS-485/USB (Accessories); 8.4 Connecting the Parsivel to any RS-485 Interface Converter; 8.5 Connecting the Parsivel for configuration via the Service-Tool to a PC; 9 Connecting the Parsivel to a Power Supply (Accessory); 10 Heating the Parsivel sensor heads; 11 Operating Parsivel with a Terminal software; 11.1 Set up communications between the Parsivel and the terminal program; 11.2 Measured value numbers; 11.3 Defining the formatting string; 11.4 OTT telegram; 11.5 Updating Parsivel Firmware; 12 Maintenance; 12.1 Cleaning the laser’s protective glass -

How to Get Weather and Pest Data?

How to get weather and pest data? François Brun (ACTA) with contributions of the other lecturers IPM CC, October 2016 Which data? • Weather and Climate – Weather: conditions of the atmosphere over a short period of time – Climate: atmosphere behavior over relatively long periods of time • Pest and Disease data – Effects of conditions: experiments – Epidemiology: observation / monitoring networks Weather and Climate data • Past Weather – Ground weather station – Satellite, … • Historical Climate Data – Average and variability – Real long time series – Reconstituted long series – Simulated long series (1961-1990: reference) • Forecast Weather – Prediction with model – Short term: 1h, 3h, 12h, 24, 3 day, 15 day – Seasonal prediction: 1 to 6 months (~ El Niño) • Climate projections – Prediction with model – IPCC report – 2021-2050: middle of century period – 2071-2100: end of century period Past Weather data Standard weather station Standard: at 2 m height • Frequent Useful for us – Thermometer: temperature – Anemometer: wind speed – Wind vane: wind direction – Hygrometer: humidity – Barometer: atmospheric pressure • Less frequent – Ceilometer: cloud height – Present weather sensor – Visibility sensor – Rain gauge: liquid-equivalent precipitation – Ultrasonic snow depth sensor for measuring depth of snow – Pyranometer: solar radiation Past Weather data In field / micro weather observations Wetness duration Temperature and humidity in canopy Water in soil Past Weather data Where to retrieve them? • Your own weather -

ICICLE Program Updates (Stephanie DiVito, FAA)

In-Cloud ICing and Large-drop Experiment Stephanie DiVito, FAA October 13, 2020 New FAA Flight program: ICICLE In-Cloud ICing and Large-drop Experiment Other Participants: Desert Research Institute (DRI), National Oceanic and Atmospheric Administration (NOAA) Earth System Research Laboratory (ESRL), National Aeronautics and Space Administration (NASA) Langley Research Center, Meteo-France, UK Met Office, Deutscher Wetterdienst (German Meteorological Office), Northern Illinois University, Iowa State University, University of Illinois at Urbana-Champaign, and Valparaiso University 10/13/2020 FPAW: ICICLE 2 Flight Program Overview • January 27 – March 8, 2019 • Operations Base: Rockford, Illinois – Domain: 200 nmi radius • NRC Convair-580 aircraft – Owned and operated by NRC Flight Research Laboratory – Jointly instrumented by NRC and ECCC – Extensively used in icing research for over 25 years • 120 flight hours (110 for research) • 26 research flights (30 total) Scientific & Technical Objectives • Observe, document, and further characterize a variety of in-flight and surface-level icing conditions – Environmental parameters and particle size distribution for: small-drop icing, FZDZ and FZRA – Transitions between those environments & non-icing environments – Synoptic, mesoscale & local effects • Assess ability of operational data, icing tools and products to diagnose and forecast those features – Satellite – GOES-16 – Radar – Individual NEXRADs, MRMS – Surface based – ASOS, AWOS, etc. – Numerical Weather Prediction (NWP) models – Microphysical parameterizations, TLE, etc. – Icing Products – CIP, FIP, other icing tools Sampling Objectives (1/2) • Collect data in a wide variety of icing and non-icing conditions – Small-drop and large-drop, including those with (& without) FZDZ and FZRA – Null icing environments -

Ott Parsivel - Enhanced Precipitation Identifier for Present Weather, Drop Size Distribution and Radar Reflectivity - Ott Messtechnik, Germany

OTT PARSIVEL® - ENHANCED PRECIPITATION IDENTIFIER FOR PRESENT WEATHER, DROP SIZE DISTRIBUTION AND RADAR REFLECTIVITY - OTT MESSTECHNIK, GERMANY Kurt Nemeth (OTT Messtechnik GmbH & Co. KG, Kempten, Germany), Martin Löffler-Mang (HTW, Saarbrücken, Germany) 1. Introduction OTT Parsivel®: Laser based optical Disdrometer for simultaneous measurement of PARticle SIze and VELocity of all liquid and solid precipitation. This state of the art instrument, designed to operate under all weather conditions, is capable of fulfilling multiple meteorological applications: present weather sensing, optical precipitation gauging, enhanced precipitation … as a laser-optic enhanced precipitation identifier and present weather sensor. The patented extinction method for simultaneous measurements of particle size and velocity of all liquid and solid precipitation employs a direct physical measurement principle and classification of hydrometeors. The instrument provides a full picture of precipitation events during any kind of weather phenomenon and provides accurate reporting of precipitation types, accumulation and intensities without degradation of performance in severe outdoor environments. Parsivel® operates in any climate regime and the built-in heating device minimizes the negative effect of freezing and frozen precipitation accreting critical surfaces on the instrument. Parsivel® can be integrated into an Automated Surface/Weather Observing System (ASOS/AWOS) as part of the sensor suite. The derived data can be processed and included into transmitted weather observation reports and messages (WMO, SYNOP, METAR and NWS codes). 1.2. Performance, accuracy and calibration procedure The new generation of Parsivel® disdrometer provides the latest state of the art optical laser technology. Each hydrometeor, which falls through the measuring area is measured simultaneously for size and velocity with an acquisition cycle of 50 kHz. -

Launching of New RS90-AG Radiosonde Valuable

Vaisala News 155/2001 After Extensive Field Testing: Launching of New RS90-AG Radiosonde Customer Satisfaction Survey for WOBS Customers: Valuable Customer Feedback Using Product Platforms: Next Generation of Surface Weather Software Products New ROSA Weather Station Generation: Evolution Rather Than Revolution Contents: President’s Column; Serving Better Our Customers; Customer Satisfaction Survey for WOBS Customers: Valuable Customer Feedback; Next Generation of Surface Weather Software Products; Meteorological Data Management System: MetMan for Multi-purpose Data Collection; Compact MAWS301 Automatic Weather Station; Demanding Tactical Military Needs; Vaisala Technology for the U.S. Air Force; Military Exhibition EUROSATORY 2000 in France; Climatological Conditions on the My Thuan Bridge; Major Contract from the U.S. National Weather Service; Using L and S-Band Boundary Layer Radars and a Millimeter-wave Doppler Radar with Vaisala MAWS: Weather Observations; Royal Botanic Gardens Melbourne: Fostering Plant Conservation; Fire RAWS Unit on the Bircher Burned Over; Launching of RS90-AG Radiosonde; Global Positioning System; Significant Radiosonde Order from Met Service Canada; Vaisala’s Next Generation Aurora’s payload system was developed for high altitude dropsonde missions, for use on low speed platforms such as Pathfinder, Altus and Perseus B. Due to funding cuts, the dropsonde payload was not permitted to be deployed and operated from Pathfinder. Nevertheless, Aurora tested and qualified the payload for flight, using its high altitude test chambers. Vaisala’s dropsondes were an integral part of this scientific experiment. Researchers are using dropsondes to get a more accurate picture of hurricanes. Vaisala’s MAWS301 Automatic Weather Station is a new generation weather station especially designed for applications where no commercial power or communication networks are available or economically installed. -

Observation of Present and Past Weather; State of the Ground

CHAPTER CONTENTS CHAPTER 14. OBSERVATION OF PRESENT AND PAST WEATHER; STATE OF THE GROUND: 14.1 General; 14.1.1 Definitions; 14.1.2 Units and scales; 14.1.3 Meteorological requirements; 14.1.4 Observation methods; 14.2 Observation of present and past weather; 14.2.1 Precipitation; 14.2.1.1 Objects of observation; 14.2.1.2 Instruments and measuring devices: precipitation type; 14.2.1.3 Instruments and measuring devices: precipitation intensity and character; 14.2.1.4 Instruments and measuring devices: multi-sensor approach; 14.2.2 Atmospheric obscurity and suspensoids; 14.2.2.1 Objects of observation; 14.2.2.2 Instruments and measuring devices for obscurity and suspensoid characteristics; 14.2.3 Other weather events; 14.2.3.1 Objects of observation; 14.2.3.2 Instruments and measuring devices; 14.2.4 State of the sky; 14.2.4.1 Objects of observation -

The Front November-December, 2002 National Weather Service Central Region Volume 1 Number 6

The Front November-December, 2002 National Weather Service Central Region Volume 1 Number 6 Technology at work for your safety Conceived and deployed as stand alone systems for airports, weather sensors and radar systems now share information to enhance safety and efficiency in the National Airspace System. Jim Roets, Lead Forecaster, Aviation Weather Center The National Airspace System (NAS) is a complex integration of many technologies. Besides the aircraft that fly you and your family to vacation resorts, or business meetings, many other technologies are at work - unseen, but critical to aviation safety. The Federal Aviation Administration (FAA) is undertaking a modernization of the NAS. One of the modernization efforts is seeking to blend many weather and aircraft sensors, surveillance radar, and computer model weather output into presentations that will help the flow of air traffic and promote air safety. One of those modernization components is the Automated Surface Observing System (ASOS). There are two direct uses for ASOS, and the FAA’s Automated Weather Observing System (AWOS). They are: Integrated Terminal Weather System (ITWS), and the Medium Intensity Airport Weather System (MIAWS). The technologies that make up ITWS, shown in Figure 1, expand the reach of the observing site from the terminal to the en route environment. Their primary focus is to reduce delays caused by weather, … In this issue: ITWS - Integrated Terminal Weather System; MIAWS - Medium Intensity Airport Weather System; Gust fronts - Evolution and Detection; Weather radar displays: NWS - Doppler, FAA - ITWS; ASOS - It’s not just for airport observations anymore Mission Statement: To enhance aviation safety by increasing the pilots’ knowledge of weather systems and processes Source: MIT Lincoln Labs Figure 1. -

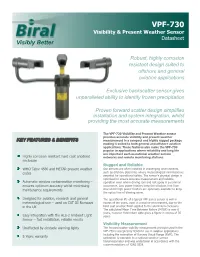

VPF-730 Visibility & Present Weather Sensor Datasheet Visibly Better

VPF-730 Visibility & Present Weather Sensor Datasheet Visibly Better Robust, highly corrosion resistant design suited to offshore and general aviation applications Exclusive backscatter sensor gives unparalleled ability to identify frozen precipitation Proven forward scatter design simplifies installation and system integration, whilst providing the most accurate measurements The VPF-730 Visibility and Present Weather sensor provides accurate visibility and present weather measurement in a compact and highly rugged package making it suited to both general and offshore aviation applications. These features also make the VPF-730 popular in applications where reliability and long life are important such as national weather service networks and remote monitoring stations. Rugged and Reliable Our sensors are often installed in challenging environments, such as offshore platforms, where meteorological information is essential for operational safety. The sensor's physical design is optimised to ensure accurate measurement and reliable operation even where driving rain and salt spray is a common occurrence. Low power heaters keep the windows free from dew whilst high power heaters are optionally available to keep the optics free of blowing snow. The operational life of a typical VPF series sensor is well in excess of ten years, even in a marine environment, due to the hard coat anodise finish applied to the aluminium enclosure. The calculated Mean Time Between Failure (MTBF) is over 6 years, however field return data gives a figure in excess of 35 years. KEY FEATURES & BENEFITS: Highly corrosion resistant hard coat anodised enclosure; WMO Table 4680 and METAR present weather codes; Automatic window contamination monitoring – ensures optimum accuracy whilst minimising maintenance requirements; Designed for aviation, research and general meteorological use – used on CAT III Runways in the UK; Easy integration with the ALS-2 Ambient Light -

Open Tobin Dissertation V2.Pdf

The Pennsylvania State University The Graduate School A MULTI-FACETED VIEW OF WINTER PRECIPITATION: SOCIETAL IMPACTS, POLARIMETRIC RADAR DETECTION, AND MICROPHYSICAL MODELING OF TRANSITIONAL WINTER PRECIPITATION A Dissertation in Meteorology and Atmospheric Science by Dana Marie Tobin 2020 Dana Marie Tobin Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy December 2020 ii The dissertation of Dana Marie Tobin was reviewed and approved by the following: Matthew R. Kumjian Associate Professor of Meteorology Dissertation Advisor Chair of Committee Eugene E. Clothiaux Professor of Meteorology Jerry Y. Harrington Professor of Meteorology Vikash V. Gayah Associate Professor of Civil and Environmental Engineering David J. Stensrud Professor of Meteorology Head of the Department iii ABSTRACT There remain several unanswered questions related to transitional winter precipitation, ranging from the impacts that it has on society to what microphysical processes are involved with its formation. With an improved understanding of the formation and impacts of transitional winter precipitation types, it is possible to reduce or minimize their adverse societal impacts in the future by improving their detection and forecasting. Precipitation is known to have an adverse effect on motor vehicle transportation, but no study has quantified the effects of ice pellets or freezing precipitation. An investigation of the number of vehicle-related fatalities during each precipitation type reveals a bias in the number of transitional-winter-precipitation type categories such that the fatality data cannot be used as-is to quantify the impacts of precipitation on vehicle fatalities with certainty. Matching traffic crash data to nearby precipitation-type reports provides an avenue to identify periods of precipitation during which a crash occurred. -

SWS-250 Visibility & Present Weather Sensor Datasheet Visibly Better

SWS-250 Visibility & Present Weather Sensor Datasheet Visibly Better Most advanced sensor in the SWS range reporting both WMO Table 4680 and METAR codes Exclusive backscatter sensor gives unparalleled ability to identify frozen precipitation Compact forward scatter design simplifies installation and integration with aviation Runway Visual Range (RVR) and METAR systems The SWS-250 Visibility and Present Weather sensor is the most advanced of the SWS series with many of the present weather reporting capabilities of Biral's flagship VPF-750 present weather sensor. The use of a backscatter receiver, exclusive to Biral sensors, significantly improves the accuracy of present weather reporting and allows a wider range of precipitation types to be identified with confidence. The ability to accurately distinguish frozen from non-frozen precipitation can be of significant importance in aviation applications and national weather service monitoring networks. Visibility Measurement The measurement of visibility by forward scatter as used by the SWS-250 is now widely accepted and seen as having significant advantages over more traditional techniques such as the use of transmissometers. Whilst transmissometers have the advantage of direct visibility measurement they are expensive to both acquire and maintain, whilst the limited measurement range restricts their use in some applications. Forward scatter sensors by contrast are compact, considerably less expensive and require less maintenance. KEY FEATURES & BENEFITS: WMO Table 4680 and METAR present and past weather codes; Automatic window contamination monitoring – ensures optimum accuracy whilst minimising maintenance requirements; Wide range of visibility reporting formats including Meteorological Optical Range (MOR) and atmospheric extinction coefficient (EXCO); Easy integration with the ALS-2 Ambient Light Sensor – fast installation, reliable results; 10m to 99.99km measurement range -

JO 7900.5D Chg.1

U.S. DEPARTMENT OF TRANSPORTATION FEDERAL AVIATION ADMINISTRATION CHANGE JO 7900.5D CHG 1 National Policy Effective Date: 11/29/2017 SUBJ: JO 7900.5D Surface Weather Observing 1. Purpose. This change amends practices and procedures in Surface Weather Observing and also defines the FAA Weather Observation Quality Control Program. 2. Audience. This order applies to all FAA and FAA-contract personnel, Limited Aviation Weather Reporting Stations (LAWRS) personnel, Non-Federal Observation (NF-OBS) Program personnel, as well as United States Coast Guard (USCG) personnel, as a component of the Department of Homeland Security and engaged in taking and reporting aviation surface observations. 3. Where I can find this order. This order is available on the FAA Web site at http://faa.gov/air traffic/publications and on the MyFAA employee website at http://employees.faa.gov/tools resources/orders notices/. 4. Explanation of Changes. This change adds references to the new JO 7210.77, Non Federal Weather Observation Program Operation and Administration order and removes the old NF-OBS program from Appendix B. Backup procedures for manual and digital ATIS locations are prescribed. The FAA is now the certification authority for all FAA sponsored aviation weather observers. Notification procedures for the National Enterprise Management Center (NEMC) are added. Appendix B, Continuity of Service is added. Appendix L, Aviation Weather Observation Quality Control Program is also added. PAGE CHANGE CONTROL CHART Remove Pages Dated Insert Pages Dated ii thru xi 12/20/16 ii thru xi 11/15/17 2 12/20/16 2 11/15/17 5 12/20/17 5 11/15/17 7 12/20/16 7 11/15/17 12 12/20/16 12 11/15/17 15 12/20/16 15 11/15/17 19 12/20/16 19 11/15/17 34 12/20/16 34 11/15/17 43 thru 45 12/20/16 43 thru 45 11/15/17 138 12/20/16 138 11/15/17 148 12/20/16 148 11/15/17 152 thru 153 12/20/16 152 thru 153 11/15/17 Appendix L 11/15/17 Distribution: Electronic Initiated By: AJT-2 11/29/2017 JO 7900.5D Chg.1 5. -

HIDROMETEOROLOGIA.Pdf

BI-DIRECTIONAL TELEMETRY for Hydrometeorological Applications Now you choose how and when to receive the data WEATHER STATION HYDROLOGICAL STATION COMMERCIAL SATELLITES (BI-DIRECTIONAL): ORBCOMM (low orbit), INMARSAT C (geostationary), AUTOTRAC (geostationary) ENVIRONMENTAL SATELLITES (NOT BI-DIRECTIONAL): GOES (geostationary), ARGOS (polar), SCD/INPE (equatorial) CELLULAR / RADIO: GSM (GPRS / SMS / DATACALL), RADIO (UHF + SPREAD SPECTRUM), REPEATER CELLS PHYSICAL LINE: PSTN (dial-up line), TCP-IP (LAN), POINT-TO-POINT LINE In addition to the telemetry options, VAISALA technology offers: extensive library of calculations; evapotranspiration calculation; extensive list of sensors; water quality sensors; clock set by GPS; database; commitment to the solution and to reliability; technical support in the country LA ABR-11 © Vaisala Oyj HOBECO CONTENTS: VAISALA remote station technology; VAISALA sensor technology; VAISALA communication technology; central station technology (VAISALA); contact HOBECO Mission: to provide solutions in hydrometeorological and environmental observation systems. Solution and Commitment: • Understanding of the observation problem • Commitment to satisfaction