Comparison Between Touch Gesture and Pupil Reaction to Find Occurrences of Interested Item in Mobile Web Browsing

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Divibib Gmbh

Compatible eBook readers, smartphones and tablet PCs. As of: 13.10.2014. This compilation is based on the data provided by the manufacturers. It is neither a purchase recommendation nor a guarantee that a device provides all desired functions. This also applies to the required DRM functionality. We expressly recommend clarifying the requirements with the retailer before buying a device. The ePub format can be used with Windows and Mac OS X operating systems. eBook readers: Acer LumiRead 600*, Iconia Tab B1 (*direct borrowing and opening in the eBook reader possible via WiFi); BeBook BeBook "Mini"; Bookeen Cybook Opus, Cybook Gen3, Cybook Odyssey*, Cybook Odyssey HD Frontlight* (*direct borrowing and opening in the eBook reader possible via WiFi); Icarus Icarus Illumnia* (*open Android operating system, apps can be installed); Imcosys imcoV6L* (*open Android operating system, apps can be installed); IRiver iRiver Story; Kobo Kobo eReader Touch N905, Kobo glo, Kobo mini, Kobo Aura HD, Kobo Aura, Kobo Aura H2O; eLyricon EBX-700.Touch; Onyx Boox T68* (*open Android operating system, apps can be installed); Pocketbook Pocketbook A10, Pocketbook 360°, Pocketbook 602, Pocketbook 603, Pocketbook Touch 622, Pocketbook Basic, Pocketbook Touch Lux, Pocketbook Touch Lux 2, Pocketbook Aqua, Pocketbook Ultra, Pocketbook Sense; Sony Sony PRS-350, Sony PRS-650, Reader™ Wi-Fi® (WLAN) (PRS-T1)*, Reader™ Wi-Fi® (WLAN) (PRS-T2)*, Reader™ Wi-Fi® (WLAN) (PRS-T3 / T3S)* (only in conjunction with the additional software "Sony Reader for PC/MAC"; *direct borrowing and opening in the eBook reader possible via WiFi); Thalia OYO Reader TouchME; Tolino Tolino Shine*/**, Tolino Vision*/**, Tolino Tab (*direct borrowing and opening in the eBook reader possible via WiFi; **a warning appears when opening titles in ePub3 format). -

Electronic 3D Models Catalogue (On July 26, 2019)

Electronic 3D models Catalogue (on July 26, 2019) Acer 001 Acer Iconia Tab A510 002 Acer Liquid Z5 003 Acer Liquid S2 Red 004 Acer Liquid S2 Black 005 Acer Iconia Tab A3 White 006 Acer Iconia Tab A1-810 White 007 Acer Iconia W4 008 Acer Liquid E3 Black 009 Acer Liquid E3 Silver 010 Acer Iconia B1-720 Iron Gray 011 Acer Iconia B1-720 Red 012 Acer Iconia B1-720 White 013 Acer Liquid Z3 Rock Black 014 Acer Liquid Z3 Classic White 015 Acer Iconia One 7 B1-730 Black 016 Acer Iconia One 7 B1-730 Red 017 Acer Iconia One 7 B1-730 Yellow 018 Acer Iconia One 7 B1-730 Green 019 Acer Iconia One 7 B1-730 Pink 020 Acer Iconia One 7 B1-730 Orange 021 Acer Iconia One 7 B1-730 Purple 022 Acer Iconia One 7 B1-730 White 023 Acer Iconia One 7 B1-730 Blue 024 Acer Iconia One 7 B1-730 Cyan 025 Acer Aspire Switch 10 026 Acer Iconia Tab A1-810 Red 027 Acer Iconia Tab A1-810 Black 028 Acer Iconia A1-830 White 029 Acer Liquid Z4 White 030 Acer Liquid Z4 Black 031 Acer Liquid Z200 Essential White 032 Acer Liquid Z200 Titanium Black 033 Acer Liquid Z200 Fragrant Pink 034 Acer Liquid Z200 Sky Blue 035 Acer Liquid Z200 Sunshine Yellow 036 Acer Liquid Jade Black 037 Acer Liquid Jade Green 038 Acer Liquid Jade White 039 Acer Liquid Z500 Sandy Silver 040 Acer Liquid Z500 Aquamarine Green 041 Acer Liquid Z500 Titanium Black 042 Acer Iconia Tab 7 (A1-713) 043 Acer Iconia Tab 7 (A1-713HD) 044 Acer Liquid E700 Burgundy Red 045 Acer Liquid E700 Titan Black 046 Acer Iconia Tab 8 047 Acer Liquid X1 Graphite Black 048 Acer Liquid X1 Wine Red 049 Acer Iconia Tab 8 W 050 Acer -

7-Inch and 8-Inch Tablet Comparison with Benchmarks

7-INCH AND 8-INCH TABLET COMPARISON WITH BENCHMARKS Benchmarks for tablets give a representative view of device performance. When purchasing a tablet, consumers can use benchmark results that measure battery life, graphics performance, and processor power to better understand these important and varied capabilities. In the Principled Technologies labs, we measured the performance of ten Android™ tablets, five 7-inch and five 8-inch models from multiple brands, using an assortment of benchmarks. Two 7-inch and two 8-inch tablets featured Intel processors while the rest were ARM-based devices. TABLETS WE TESTED We tested the following 7-inch tablets: Amazon® Kindle Fire HD Intel® processor-powered Dell™ Venue™ 7 Intel processor-powered ECS TA70CA2 Lenovo® IdeaTab™ A3000 Samsung® Galaxy Tab® 3 In addition, we tested the following 8-inch tablets: Acer® Iconia A1-810-L615 Intel processor-powered Acer Iconia A1-830-1633 Asus® MeMO Pad™ 8 ME180A-A1-WH Intel processor-powered Dell Venue 8 HP 8 1401 BENCHMARKS WE USED We ran the following benchmarks to test the tablets: BatteryXPRT 2014, divided into Battery Life Network and Network Performance categories Futuremark® 3DMark® GeekBench 3, Single-core and Multi-core MobileXPRT 2013, divided into Performance and User Experience categories Passmark® PerformanceTest™ Mobile WebXPRT 2013 We ran each test three times and report the median of the runs. For detailed information about the tablets we tested, see Appendix A. For detailed testing steps, see Appendix B. APRIL 2014 A PRINCIPLED TECHNOLOGIES TEST REPORT Commissioned by Intel Corp. BATTERY LIFE COMPARISON BatteryXPRT 2014 Many consumers consider battery life to be a crucial feature when purchasing a tablet. -
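The reporting rule stated in the excerpt above (run each test three times and report the median) can be sketched in a few lines of Python. This is only an illustration: the function name `report_score` and the example scores are hypothetical and do not come from the Principled Technologies report.

```python
from statistics import median


def report_score(runs):
    """Return the median of the scores from repeated runs of one benchmark."""
    if not runs:
        raise ValueError("at least one run is required")
    return median(runs)


# Hypothetical scores from three runs of one benchmark on one tablet.
example_runs = [1510.0, 1475.0, 1532.0]
reported = report_score(example_runs)
print(reported)
```

Reporting the median rather than the mean keeps a single unusually slow or fast run from shifting the published figure.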

Getting Started Ubuntu

Getting Started with Ubuntu 16.04 Copyright © 2010–2016 by The Ubuntu Manual Team. Some rights reserved. This work is licensed under the Creative Commons Attribution–Share Alike 3.0 License. To view a copy of this license, see Appendix A, visit http://creativecommons.org/licenses/by-sa/3.0/, or send a letter to Creative Commons, 171 Second Street, Suite 300, San Francisco, California, 94105, USA. Getting Started with Ubuntu 16.04 can be downloaded for free from http://ubuntu-manual.org/ or purchased from http://ubuntu-manual.org/buy/gswu1604/en_US. A printed copy of this book can be ordered for the price of printing and delivery. We permit and even encourage you to distribute a copy of this book to colleagues, friends, family, and anyone else who might be interested. http://ubuntu-manual.org Revision number: 125 Revision date: 2016-05-03 22:38:45 +0200 Contents Prologue 5 Welcome 5 Ubuntu Philosophy 5 A brief history of Ubuntu 6 Is Ubuntu right for you? 7 Contact details 8 About the team 8 Conventions used in this book 8 1 Installation 9 Getting Ubuntu 9 Trying out Ubuntu 10 Installing Ubuntu—Getting started 11 Finishing Installation 16 2 The Ubuntu Desktop 19 Understanding the Ubuntu desktop 19 Unity 19 The Launcher 21 The Dash 21 Workspaces 24 Managing windows 24 Unity’s keyboard shortcuts 26 Browsing files on your computer 26 Files file manager 27 Searching for files and folders on your computer 29 Customizing your desktop 30 Accessibility 32 Session options 33 Getting help 34 3 Working with Ubuntu 37 All the applications you -

ESTTA800156 02/08/2017 in the UNITED STATES PATENT and TRADEMARK OFFICE BEFORE the TRADEMARK TRIAL and APPEAL BOARD Proceeding 9

Trademark Trial and Appeal Board Electronic Filing System. http://estta.uspto.gov ESTTA Tracking number: ESTTA800156 Filing date: 02/08/2017 IN THE UNITED STATES PATENT AND TRADEMARK OFFICE BEFORE THE TRADEMARK TRIAL AND APPEAL BOARD Proceeding 91220591 Party Plaintiff TCT Mobile International Limited Correspondence SUSAN M NATLAND Address KNOBBE MARTENS OLSON & BEAR LLP 2040 MAIN STREET , 14TH FLOOR IRVINE, CA 92614 UNITED STATES [email protected], [email protected] Submission Motion to Amend Pleading/Amended Pleading Filer's Name Jonathan A. Hyman Filer's e-mail [email protected], [email protected] Signature /jhh/ Date 02/08/2017 Attachments TCLC.004M-Opposer's Motion for Leave to Amend Notice of Opp and Motion to Suspend.pdf(1563803 bytes ) TCLC.004M-AmendNoticeofOpposition.pdf(1599537 bytes ) TCLC.004M-NoticeofOppositionExhibits.pdf(2003482 bytes ) EXHIBIT A 2/11/2015 Moving Definition and More from the Free MerriamWebster Dictionary An Encyclopædia Britannica Company Join Us On Dictionary Thesaurus Medical Scrabble Spanish Central moving Games Word of the Day Video Blog: Words at Play My Faves Test Your Dictionary SAVE POPULARITY Vocabulary! move Save this word to your Favorites. If you're logged into Facebook, you're ready to go. 13 ENTRIES FOUND: moving move moving average moving cluster movingcoil movingiron meter moving pictureSponsored Links Advertise Here moving sidewalkKnow Where You Stand moving staircaseMonitor your credit. Manage your future. Equifax Complete™ Premier. fastmovingwww.equifax.com -

In the United States District Court for the District of Delaware

Case 1:15-cv-01125-GMS Document 76 Filed 11/23/16 Page 1 of 83 PageID #: 1338 IN THE UNITED STATES DISTRICT COURT FOR THE DISTRICT OF DELAWARE KONINKLIJKE PHILIPS N.V., U.S. PHILIPS CORPORATION, Plaintiffs, Case No.: 15-1125-GMS v. JURY TRIAL DEMANDED ASUSTEK COMPUTER INC., ASUS COMPUTER INTERNATIONAL, Defendants. MICROSOFT CORPORATION, Intervenor-Plaintiff, v. KONINKLIJKE PHILIPS N.V., U.S. PHILIPS CORPORATION, Intervenor-Defendants. SECOND AMENDED COMPLAINT FOR PATENT INFRINGEMENT Plaintiffs Koninklijke Philips N.V. and U.S. Philips Corporation (collectively, “Plaintiffs” or “Philips”), bring this Second Amended Complaint for patent infringement against Defendants ASUSTeK Computer Inc. and ASUS Computer International (collectively, “Defendants” or “ASUS”), and hereby allege as follows: Nature of the Action 1. This is an action for patent infringement under 35 U.S.C. § 271, et seq., by Philips ME1 23763547v.1 Case 1:15-cv-01125-GMS Document 76 Filed 11/23/16 Page 2 of 83 PageID #: 1339 against ASUS for infringement of United States Patent Nos. RE 44,913 (“the ’913 patent”), 6,690,387 (“the ’387 patent”), 7,184,064 (“the ’064 patent”), 7,529,806 (“the ’806 patent”), 5,910,797 (“the ’797 patent”), 6,522,695 (“the ’695 patent”), RE 44,006 (“the ’006 patent”), 8,543,819 (“the ’819 patent”), 9,436,809 (“the ’809 patent”), 6,772,114 (“the ’114 patent”), and RE 43,564 (“the ’564 patent”) (collectively, the “patents-in-suit”). The Parties 2. Plaintiff Koninklijke Philips N.V., formerly known as Koninklijke Philips Electronics N.V., is a corporation duly organized and existing under the laws of the Netherlands. -

Using Tablet Computers to Implement Surveys in Challenging Environments Lindsay J Benstead*, Kristen Kao†, Pierre F Landry‡, Ellen M Lust**, Dhafer Malouche††

Using Tablet Computers to Implement Surveys in Challenging Environments Lindsay J Benstead*, Kristen Kao†, Pierre F Landry‡, Ellen M Lust**, Dhafer Malouche†† Tags: local governance, sub-saharan africa, middle east and north africa, tablet computers, challenging survey environments, computer-assisted personal interviewing (capi) DOI: 10.29115/SP-2017-0009 Survey Practice Vol. 10, Issue 2, 2017 Computer-assisted personal interviewing (CAPI) has increasingly been used in developing countries, but literature and training on best practices have not kept pace. Drawing on our experiences using CAPI to implement the Local Governance Performance Index (LGPI) in Tunisia and Malawi and an election study in Jordan, this paper makes practical recommendations for mitigating challenges and leveraging CAPI’s benefits to obtain high quality data. CAPI offers several advantages. Tablets facilitate complex skip patterns and randomization of long question batteries and survey experiments, which helps to reduce measurement error. Tablets’ global positioning system (GPS) technology reduces sampling error by locating sampling units and facilitating analysis of neighborhood effects. Immediate data uploading, time-stamps for individual questions, and interview duration capture allowed real time data quality checks and interviewer monitoring. Yet, CAPI entails challenges, including costs of learning new software; questionnaire programming; and piloting to resolve coding bugs; and ethical and logistical considerations, such as electricity and Internet connectivity. Introduction Computer-assisted personal interviewing (CAPI) using smartphones, laptops, and tablets has long been standard in western countries. Since the 1990s, CAPI has also been used in developing countries (Bethlehem 2009, 156-160), and its use there is increasing. 
Unlike standard paper and pencil interviewing, where the interviewer records responses on paper and manually codes them into a computer, CAPI allows the interviewer to record answers directly onto a digital device. -

Libreoffice Pt13 P.12 My Opinion P.31 Columns

Full Circle THE INDEPENDENT MAGAZINE FOR THE UBUNTU LINUX COMMUNITY ISSUE #59 - March 2012 REVIEW: BODHI LINUX WITH E17 DESKTOP FOREMOST DATA RECOVERY HOW TO RECOVER DELETED FILES full circle magazine #59 full circle magazine is neither affiliated with, nor endorsed by, Canonical Ltd. HowTo Full Circle Opinions THE INDEPENDENT MAGAZINE FOR THE UBUNTU LINUX COMMUNITY Python - Part 31 p.07 My Story p.28 Linux News p.04 My Desktop p.52 LibreOffice Pt13 p.12 My Opinion p.31 Columns Portable Linux p.15 Command & Conquer p.05 Ubuntu Games p.48 I Think... p.33 Adjust Virtual Disk Size p.17 Linux Labs p.22 Q&A p.45 Review p.35 BACK NEXT MONTH Create Greeting Cards p.18 Ubuntu Women p.46 Closing Windows p.24 Letters p.40 The articles contained in this magazine are released under the Creative Commons Attribution-Share Alike 3.0 Unported license. This means you can adapt, copy, distribute and transmit the articles but only under the following conditions: you must attribute the work to the original author in some way (at least a name, email or URL) and to this magazine by name ('Full Circle Magazine') and the URL www.fullcirclemagazine.org (but not attribute the article(s) in any way that suggests that they endorse you or your use of the work). If you alter, transform, or build upon this work, you must distribute the resulting work under the same, similar or a compatible license. Full Circle magazine is entirely independent of Canonical, the sponsor of the Ubuntu projects, and the views and opinions in the magazine should in no way be assumed to have Canonical endorsement. -

Library Compatible Ebook Devices

Current as of 3/1/2013. For the most up-to-date list, visit overdrive.com/drc. Library Compatible eBook Devices eBooks from your library’s ‘Virtual Branch’ website powered by OverDrive® are currently compatible with a variety of readers, computers and devices. eBook readers: Amazon® Kindle (U.S. libraries only): Kindle, 2, 3, DX, Touch, Keyboard, Paperwhite. Sony®: Daily Edition, Pocket Edition, PRS-505, PRS-700, Touch Edition, Wi-Fi PRS-T1, T2. Barnes & Noble® NOOK™: 3G+Wi-Fi, Wi-Fi, Simple Touch. Kobo™: Kobo eReader, Touch, Glo. Other devices: • Aluratek LIBRE Air/Color/Touch • En Tourage Pocket eDGe™ • iRiver Story HD • Literati™ Reader • Pandigital® Novel • PocketBook Pro 602 • Skytex Primer. The process to download or transfer eBooks to these devices may vary by device, most require Adobe Digital Editions. Mobile devices: Get the FREE OverDrive Media Console app or read in your browser using OverDrive Read: iPad®, iPad® Mini, iPhone® & iPod touch®; Android™; BlackBerry®; Windows® Phone 7 & 8; Windows® Tablet. Available in Mobihand™ & AppWorld™, the App Store℠, Google Play and the Windows Store. Other devices: • Acer Iconia • Agasio Dropad • Archos Arnova Child Pad • Archos™ Tablets • ASUS Transformer • Coby Kyros • Cruz™ Tablet • Dell Streak • Fuhu Nabi Tablet • Kindle Fire, Fire HD • Kobo Vox • MEEP! Tablet • Motorola Xoom • Nextbook Next 2 • Nexus 7 & 10 Tablets • NOOK Color, Tablet, HD, HD+ • Pandigital Nova • Samsung® Galaxy Tab™ • Sony Tablet S • Sylvania Mini Tablet • Toshiba Thrive™ • ViewSonic gTablet ...or use the FREE Kindle reading app on many of these devices. Computers: Install the FREE Adobe Digital Editions software to download and read eBooks on your computer and transfer to eBook readers. -

What's in The

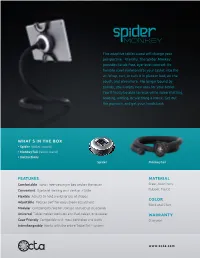

This adaptive tablet stand will change your perspective, literally. The Spider Monkey provides hands-free, eye-level comfort. Its flexible steel skeleton lifts your tablet into the air. Wrap, curl, or tuck it in place in bed, on the couch, and elsewhere. No longer bound by gravity, you’ll enjoy new uses for your tablet. You’ll finally be able to relax while video chatting, reading, writing, or watching a movie. Get out the popcorn, and get your hands back. WHAT’S IN THE BOX • Spider (tablet mount) • MonkeyTail (tablet stand) • Instructions FEATURES Comfortable: Hands-free viewing in bed and on the couch. Convenient: Eye-level viewing on a desk or a table. Flexible: Adjusts to hold a wild variety of shapes. Adjustable: Rotates 360° for easy screen adjustment. Modular: Components fold for storage and set up in seconds. Universal: Tablet holder works on any iPad, tablet, or e-reader. Case-Friendly: Compatible with most portfolios and shells. Interchangeable: Works with the entire TabletTail™ system. MATERIAL Steel, Aluminum, Rubber, Plastic COLOR Black and Silver WARRANTY One-year www.octa.com COMPATIBILITY ASUS MeMO Pad: MeMo Pad, 10, S6000, HD 7, FHD 10, FHD 10 LTE, Eee Pad MeMO 171, Smart 10”; Transformer Pad: Infinity TF700T, TF300T, TF300TL; Transformer Book: T100; VivoTab: VivoTab, Smart, RT, RT 3G, RT LTE. ThinkPad: Helix, Tablet 2; Lenovo Mix 10. Microsoft Surface: 2, Pro, Pro 3, RT. Samsung ATIV: Smart PC 500T, Tab 5, Tab 7; Galaxy Note: 10.1, Pro 12.2; Galaxy Tab: 10.1, 2-10.1, 2-7.0, 3-7.0, 3-7.0 Lite, 3-10.1, 4-10.1, 7.0. Compatible Cases: The Spider can hold cases between 5.80in (14.73cm) and 7.90in (20.07cm) in length or width that have a max thickness of 12mm (.47in). -

A Note from the Chairman

A Note from the Chairman Dear Friends and Partners, The past two months have been fruitful for ASUS. We hosted press events in Berlin, Moscow, San Francisco, Tokyo and Taipei in order to announce a series of incredible products that transform expectations for mobile computing. Please allow me to share some of our news with you. One of our most exciting new products is the ASUS Transformer Book T100, the next evolution of the Eee PC. The T100 has the functionality of a notebook, a detachable touch screen, multiple entertainment modes, and enough battery life for all-day computing. All of this with zero compromise. It is a game-changer for our mobile lifestyle. Next, meet the ASUS VivoPC, a traditional desktop PC housed in a chic and stylishly designed compact case. The ASUS VivoPC is equipped with the latest in-built 802.11ac Wi-Fi technology and provides users with a fast and stable connection for web surfing, media streaming and online gaming. The VivoPC’s extraordinary ASUS Sonic Master speakers and audio tuning software allow users to sit back and enjoy deep immersive sounds. Our latest release is the new ASUS PadFone, an original innovation that, when docked in the pad station, transforms a HD 5-inch smartphone into a full HD 10.1-inch tablet. It offers the best of both worlds with one data plan. “We should not be afraid to challenge convention as we try to replace the tried and tested ways of doing things. Success stems from the generation of new ideas to create a world of -

Electronic Environments for Reading: an Annotated Bibliography of Pertinent Hardware and Software (2011)

Electronic Environments for Reading: An Annotated Bibliography of Pertinent Hardware and Software (2011) Corina Koolen (Leiden University), Alex Garnett (University of British Columbia), Ray Siemens (University of Victoria), and the INKE, ETCL, and PKP Research Groups List of keywords: reading environments, knowledge environments, social reading, social media, digital reading, e-reading, e-readers, hardware, software, INKE Corina Koolen is a PhD student and lecturer at Leiden University, the Netherlands and an ETCL research assistant. P.N. van Eyckhof 3, 2311 BV, Leiden, the Netherlands. Email: [email protected] . Alex Garnett is a PhD student at the University of British Columbia, Vancouver and an ETCL research assistant. Irving K. Barber Learning Centre, Suite 470- 1961 East Mall, Vancouver, BC V6T 1Z1. Email: [email protected] Dr. Raymond Siemens is Canada Research Chair in Humanities Computing and Distinguished Professor in the Faculty of Humanities at the University of Victoria, in English with cross appointment in Computer Science. Faculty of Humanities, University of Victoria, PO Box 3070 STN CSC, Victoria, BC, Canada V8W 3W1. Email: [email protected] . Introduction In researching and implementing new research environments, hardware is an important feature that up until recently was not a central concern: it was implied to be a personal computer. Larger (such as tabletop settings) as well as smaller digital devices (as the PDA) have existed for quite some time, but especially recent hardware such as dedicated e-reading devices (the Kindle, 2007), smartphones (the iPhone, 2007) and tablet computers (the iPad, 2010) have widened access to information, by extending reader control of digital texts.