Play Your Song 29

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Adobe Trademark Database for General Distribution

Adobe Trademark List for General Distribution As of May 17, 2021 Please refer to the Permissions and trademark guidelines on our company web site and to the publication Adobe Trademark Guidelines for third parties who license, use or refer to Adobe trademarks for specific information on proper trademark usage. Along with this database (and future updates), they are available from our company web site at: https://www.adobe.com/legal/permissions/trademarks.html Unless you are licensed by Adobe under a specific licensing program agreement or equivalent authorization, use of Adobe logos, such as the Adobe corporate logo or an Adobe product logo, is not allowed. You may qualify for use of certain logos under the programs offered through Partnering with Adobe. Please contact your Adobe representative for applicable guidelines, or learn more about logo usage on our website: https://www.adobe.com/legal/permissions.html Referring to Adobe products Use the full name of the product at its first and most prominent mention (for example, “Adobe Photoshop” in first reference, not “Photoshop”). See the “Preferred use” column below to see how each product should be referenced. Unless specifically noted, abbreviations and acronyms should not be used to refer to Adobe products or trademarks. Attribution statements Marking trademarks with ® or TM symbols is not required, but please include an attribution statement, which may appear in small, but still legible, print, when using any Adobe trademarks in any published materials—typically with other legal lines such as a copyright notice at the end of a document, on the copyright page of a book or manual, or on the legal information page of a website. -

Adobe Creative Cloud for Enterprise Overview

Creative Cloud for enterprise: Always have access to the latest Adobe creative apps, services, IT tools and enterprise support.
Column headings: Apps, Services, and Features; What it’s used for; Single App; All Apps; New Since CS6; Requires Services.
Design
Adobe Photoshop: Edit and composite images, use 3D tools, edit video, and perform advanced image analysis. • •
Adobe Illustrator: Create vector-based graphics for print, web, video, and mobile. • •
Adobe InDesign: Design professional layouts for print and digital publishing. • •
Adobe Bridge: Browse, organize and search your photos and design files in one central place. •
Adobe Acrobat Pro: Create, protect, sign, collaborate on, and print PDF documents. •
Web
Adobe Dreamweaver: Design, develop, and maintain standards-based websites and applications. • •
Adobe Animate: Create interactive animations for multiple platforms. • • •
Video and audio
Adobe Premiere Pro: Edit video with high-performance, industry-leading editing suite. • •
Adobe After Effects: Create industry-standard motion graphics and visual effects. • •
Adobe Audition: Create, edit, and enhance audio for broadcast, video, and film. • •
Adobe Prelude: Streamline the import and logging of video, from any video format. • • •
Adobe Media Encoder: Automate the process of encoding video and audio to virtually any video or device format. •
Exclusive Creative Cloud Apps (not available in Adobe Creative Suite)
Adobe XD: Design and prototype user experiences for websites, mobile apps and more. • • • •
Adobe Dimension: Composite high-quality, photorealistic images with 2D and 3D assets. • • • •
Adobe Character Animator: Animate your 2D characters in real time. • •
Adobe InCopy: Professional writing and editing solution that tightly integrates with Adobe InDesign. • •
Adobe Lightroom Classic: Organize, edit, and publish digital photographs. -

Adobe Apps for Education Images and Pictures

Adobe Apps for Education: Empowering students, educators, and administrators to express their creativity. Categories: Images and pictures; Figures and illustrations; Documents; Portfolios and presentations; Productivity and collaboration; Apps; Websites; Video and audio; Games. See page 11 for a glossary of Adobe apps.
Images and pictures, sample projects. Beginner: Retouch photos on the fly; Create an expressive drawing; Make quick enhancements to photos; Learn five simple ways to enhance a photo; Make a photo slide show. Intermediate: Make non-destructive edits in Camera Raw; Edit and combine images to make creative compositions; Shoot and edit a professional headshot; Comp, preview, and build a mobile app design. Expert: Create a 3D composition.
Portfolio and presentations, sample projects. Beginner: Convert a PowerPoint presentation into an interactive online presentation; Create an oral history presentation; Create a digital science fair report; Create a digital portfolio of course work. Intermediate: Create a self-paced interactive tutorial; Create a slide presentation. Expert: Turn a publication into an ePub.
Websites: Sample -

The Starling Manual (Korean)

Table of Contents: Starling Manual. 1. Getting Started: 1.1. Introduction; 1.2. What is Adobe AIR?; 1.3. What is Starling?; 1.4. Choosing an IDE; 1.5. Resources; 1.6. Hello World; 1.7. Summary. 2. Basic Concepts: 2.1. Configuring Starling; 2.2. Display Programming; 2.3. Textures & Images; 2.4. Dynamic Text; 2.5. Event Handling; 2.6. Animations; 2.7. Asset Management; 2.8. Fragment Filters; 2.9. Meshes; 2.10. Masks; 2.11. Sprite3D; 2.12. Utilities; 2.13. Summary. 3. Advanced Topics: 3.1. ATF Textures; 3.2. Context Loss; 3.3. Memory Management; 3.4. Performance Optimization; 3.5. Custom Filters; 3.6. Custom Styles; 3.7. Distance Field Rendering; 3.8. Summary. 4. Mobile Development: 4.1. Multi-Resolution Development; 4.2. Device Rotation; 4.3. Summary. 5. Final Words. Starling Manual: The purpose of this manual is to introduce the extensive Starling Framework in as much detail as possible. Starling is a cross-platform engine for ActionScript 3 that can be used for all kinds of applications, with a focus on 2D games. -

New Adobe Creative Cloud Licensing Comparison Chart

New Adobe Creative Cloud Licensing Comparison Chart
Column headings (marked with ‡ and † footnote symbols in the chart): What it’s used for; Creative Cloud for education, named-user license; Creative Cloud for education, device license; Creative Cloud for enterprise.
Adobe Photoshop CC: Edit and composite images, use 3D tools, edit video, and perform advanced image analysis • • •
Adobe Illustrator CC: Create vector-based graphics for print, web, video, and mobile • • •
Adobe InDesign CC: Design professional layouts for print and digital publishing • • •
Adobe Bridge CC: Browse, organize, and search your photos • • •
Adobe Extension Manager CC: Install and manage extensions for many creative apps that are part of Creative Cloud • • •
Adobe InCopy CC: Use with InDesign for a professional writing and editing solution • • •
Adobe Acrobat XI Pro: Create, protect, sign, collaborate on, and print PDF documents • • •
Adobe Dreamweaver CC: Design, develop, and maintain standards-based websites and applications • • •
Adobe Flash Professional CC: Create rich interactive content across varied platforms and devices • • •
Adobe Flash Builder Premium Edition: Build exceptional applications for iOS, Android, and BlackBerry using a single codebase • • •
Adobe Fireworks: Rapidly prototype websites and applications, and optimize web graphics • • •
Adobe Premiere Pro CC: Edit video with high-performance, industry-leading tools • • •
Create industry-standard motion graphics and • • •
Adobe Audition CC: Create, edit, and enhance audio for broadcast, • • •
Adobe SpeedGrade CC: Manipulate light and color -

The Indie Game Developer Handbook

THE INDIE GAME DEVELOPER HANDBOOK Richard Hill-Whittall First published 2015 by Focal Press 70 Blanchard Road, Suite 402, Burlington, MA 01803 and by Focal Press 2 Park Square, Milton Park, Abingdon, Oxon OX14 4RN Focal Press is an imprint of the Taylor & Francis Group, an informa business © 2015 Taylor & Francis The right of Richard Hill-Whittall to be identified as the author of this work has been asserted by him in accordance with sections 77 and 78 of the Copyright, Designs and Patents Act 1988. All rights reserved. No part of this book may be reprinted or reproduced or utilised in any form or by any electronic, mechanical, or other means, now known or hereafter invented, including photocopying and recording, or in any information storage or retrieval system, without permission in writing from the publishers. Notices Knowledge and best practice in this field are constantly changing. As new research and experience broaden our understanding, changes in research methods, professional practices, or medical treatment may become necessary. Practitioners and researchers must always rely on their own experience and knowledge in evaluating and using any information, methods, compounds, or experiments described herein. In using such information or methods they should be mindful of their own safety and the safety of others, including parties for whom they have a professional responsibility. Product or corporate names may be trademarks or registered trademarks, and are used only for identification and explanation without intent to infringe. Library of Congress Cataloging-in-Publication Data Hill-Whittall, Richard. -

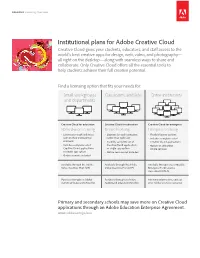

Institutional Plans for Adobe Creative Cloud

Education Licensing Overview: Institutional plans for Adobe Creative Cloud
Creative Cloud gives your students, educators, and staff access to the world’s best creative apps for design, web, video, and photography, all right on the desktop, along with seamless ways to share and collaborate. Only Creative Cloud offers all the essential tools to help students achieve their full creative potential.
Find a licensing option that fits your needs for:
Small workgroups: Creative Cloud for education, named-user licensing. • Licenses for each individual user on their institutional computer • Includes complete set of Creative Cloud applications or single-app option • Online services included. Available through the Adobe Value Incentive Plan (VIP). Purchase through an Adobe Authorized Education Reseller.
Classrooms and labs: Creative Cloud for education, device licensing. • Licenses for each computer, rather than each user • Includes complete set of Creative Cloud applications or single-app option • Online services not included. Available through the Adobe Value Incentive Plan (VIP). Purchase through an Adobe Authorized Education Reseller.
Entire institutions and departments: Creative Cloud for enterprise, enterprise licensing. • Flexible license options • Includes complete set of Creative Cloud applications • Option to add select online services. Available through a customizable Enterprise Term License Agreement (ETLA). For more information, contact your Adobe account executive.
Primary and secondary schools may save more on Creative Cloud applications through -

Adobe Creative Cloud for Teams

Adobe Creative Cloud for teams World-class creative apps. Hassle-free administration. Built to accelerate your team’s creativity, Adobe Creative Cloud for teams gives you access to the world’s best creative apps and updates as soon as they’re released. Explore new creative frontiers in 3D design, voice prototyping, and 360/VR video. Get updates to your favorite apps. And try the newest apps, like Adobe Premiere Rush CC, Adobe Photoshop Lightroom CC, and Adobe XD CC. Collaborate securely Share files and folders across desktops, devices, and the web. Adobe Creative Cloud Libraries make it easy to maintain version control, stay on top of changes, and work together more productively. And you can edit video projects as a team with Adobe Team Projects inside your Creative Cloud video apps. Deploy and manage without hassles Deploy apps and updates your way—either handle them centrally or let users install as needed. Use the web-based Admin Console to add or reassign seats anytime with just a few clicks, and billing catches up at your next payment cycle. Get expert help when you need it Have questions about deployment or license management? Creative Cloud for teams offers an onboarding webinar as well as advanced 24x7 technical support. And if you need training on a desktop app, count on 1:1 Expert Services sessions and thousands of tutorials to help you make the most of your software. Jump-start the design process with Adobe Stock Access millions of high-quality, royalty-free creative assets, including images, graphics, videos, templates, and 3D. -

Procedures to Uninstall Adobe CC Apps in Windows

Procedures to Uninstall Adobe CC Apps in Windows Towson employees, including student employees, that are registered to access Adobe software should ALWAYS use the Adobe Creative Cloud Desktop app installed on their university office computer to uninstall Adobe apps. The procedures below should only be used for two Adobe apps -- Adobe Scout and Gaming SDK. These apps can’t be uninstalled on an office computer using the desktop app. Classrooms and computer labs will also need to use the procedures below to uninstall Adobe apps on lab images because they don’t use the Adobe Creative Cloud Desktop app. Requirements Computer must be on campus and connected to the wired university network Administrator rights on the computer (instructions below). Quit all running applications and don’t work on the computer until the procedures below are completed. Procedures 1. Grant Yourself Administrator Rights First step is to grant yourself temporary administrator rights on the computer so you can uninstall the Adobe CC app. You can grant yourself rights by selecting the Temporary Computer Administrator Rights Tool at https://webapps.towson.edu/TCAR/ A video that demonstrates how to use the Temporary Computer Administrator Rights Tool is available at https://www.youtube.com/watch?v=RYB0pZlydHw&index=22&list=PLxqZTEhnn8ubsBgTKbhNC1RHqHCW9yDCl After logging on to the Temporary Computer Administrator Rights Tool: Check the box in the red rectangle to comply with the terms of the tool. Select This Computer to grant temporary administrator rights to. Select Yourself to grant the rights to. Select 30 min. as the time needed to grant the rights. -

Animating Cryptographic Primitives

Animating Cryptographic Primitives. A dissertation submitted to The University of Manchester for the degree of Master of Science in the Faculty of Engineering and Physical Sciences, 2017. Richard Lenin Leiva Atiaga, School of Computer Science. Contents: List of Tables; List of Figures; Glossary; Abbreviations; Abstract; Declaration; Copyright; Chapter 1: Introduction; 1.1 Chapter Overview; 1.2 Introduction; 1.3 Project -

Adobe Creative Cloud for Education Licensing for Classrooms, Labs, Small Workgroups, and Departments

Higher Education Licensing Overview Adobe Creative Cloud for education Licensing for classrooms, labs, small workgroups, and departments Only Adobe brings together an essential and comprehensive set of integrated apps and services to further collaboration and creativity in education. Adobe Creative Cloud for education gives your students, faculty, and staff access to the latest industry-leading creative software for design, web, video, and photography—on the desktop and mobile devices—plus seamless ways to share and collaborate through access to online services. With Creative Cloud for education, your school or university gets: • Software that’s always up to date—Licenses include updates to apps and services as soon as they’re released, so you’ll always have access to the latest creative tools and features. • Flexible licensing options—You can license the complete set of creative apps or just a single one, with or without services. • Collaboration that’s built in—With an option to add online services, small workgroups and teams can collaborate and share with ease.* • Easy deployment and management—A web-based Admin Console makes it easy to purchase additional seats, add users, and manage software updates.* Now more than ever, the world needs creative ideas. The Adobe Education Exchange helps educators ignite creativity with professional development opportunities, learning resources, and peer-to-peer collaboration. Visit the Adobe Education Exchange: http://edex.adobe.com Find a licensing option that fits your needs: Small workgroups Classrooms -

Building Adobe AIR Applications

Building ADOBE® AIR® Applications
Legal notices: For legal notices, see http://help.adobe.com/en_US/legalnotices/index.html. Last updated 5/16/2017.
Contents
Chapter 1: About Adobe AIR
Chapter 2: Adobe AIR installation. Installing Adobe AIR; Removing Adobe AIR; Installing and running the AIR sample applications; Adobe AIR updates
Chapter 3: Working with the AIR APIs. AIR-specific ActionScript 3.0 classes; Flash Player classes with AIR-specific functionality; AIR-specific Flex components
Chapter 4: Adobe Flash Platform tools for AIR development. Installing the AIR SDK; Setting up the Flex SDK; Setting up external SDKs
Chapter 5: Creating your first AIR application. Creating your first desktop Flex AIR application in Flash Builder; Creating your first desktop AIR application using Flash Professional; Create your first AIR application for Android in Flash Professional; Creating your first AIR application for iOS; Create your first HTML-based AIR application with Dreamweaver; Creating your first HTML-based AIR application with the AIR SDK; Creating your first desktop AIR application with the Flex SDK; Creating your first AIR application for Android with the Flex SDK
Chapter 6: Developing AIR applications for the desktop. Workflow for developing a desktop AIR application; Setting desktop application properties; Debugging a desktop AIR application; Packaging a desktop AIR installation file; Packaging a desktop native installer; Packaging a captive runtime bundle for desktop computers; Distributing AIR packages for desktop computers
Chapter 7: Developing AIR applications for mobile devices. Setting up your development environment