Strategic Identity Signaling in Heterogeneous Networks

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications

-

1616580050.Pdf

Contents: Introduction; Social Media in Elections: The Start of a Long Road (The Rise of Political Internet Technologies, 1996–2016; 2016: The Internet Beats Television. The Donald Trump Phenomenon); The State of Social Media at the Start of the 2020 Campaign; The Use of New Tools During the Presidential Race (The Democrats' Digital Strategy in the 2020 Election. "Barometer"; Trump's Digital Strategy in the 2020 Election. The Robotization of Targeting; Field Work During an Epidemic; The Growing Popularity of Mobile Apps; Fundraising; Campaign Discourse and Censorship; TikTok. The New Social Media Factor in Political Campaigning); Key Findings; List of Sources. Introduction: The 2020 US elections took place under the most unusual conditions imaginable: against the backdrop of a global pandemic, the most severe economic crisis in more than 80 years, and the largest street protests in the past 50 years. Political strategists have to adjust on the fly to these unexpected circumstances, adapting to a new reality. The long-running digitalization of modern politics has only accelerated. Amid the crisis of traditional media and social networks, alternative platforms are emerging and rapidly gaining popularity. Campaign activity is moving online, where it is easier and cheaper to hold virtual rallies and supporter meetings and to mobilize voters. This report does not aim to trace the chronology of the US presidential race, nor does it offer value judgments about the results of the election. It is devoted to an analysis of the tools used during the campaign. In the very near future these technologies will be actively used around the world. The digital trends in planning and running election campaigns, campaigning, field work, and street activity that have proven themselves in practice in the United States deserve careful study. -

The New Frontier of Activism - a Study of the Conditioning Factors for the Role of Modern Day Activists

The new frontier of activism - a study of the conditioning factors for the role of modern day activists. Master’s Thesis. Andreas Holbak Espersen | MSocSc Political Communication & Management. Søren Walther Bjerregård | MSc International Business & Politics. Supervisor: Erik Caparros Højbjerg | Department of Management, Politics and Philosophy. Submitted 7th November 2016 | STUs: 251.792. Table of Contents: Abstract; Chapter 0 - Research question and literature review; 0.1 Preface; 0.2 Introduction; 0.2.1 Research area; 0.3 Literature review; 0.3.1 Activism in International -

“No Left, No Right – Only the Game”

“No Left, No Right – Only the Game”: A Netnographic Study of the Online Community r/KotakuInAction. Master of Arts: Media and Communication Studies – Culture, Collaborative Media, and Creative Industries. Master’s Thesis (Two-year) | 15 credits. Student: Oskar Larsson. Supervisor: Maria Brock. Year: 2021. Word Count: 15,937. Abstract: This thesis examines how 'othering' discourse can be used to construct and negotiate boundaries and shape collective identities within online spaces. Through a mixed-method approach of thematic analysis and a netnographic study, and by drawing on theoretical concepts of online othering and identity formation, this thesis explores how the Gamergate community r/KotakuInAction can be understood in relation to Gamergate, the Alt-Right and society at large. The results show that the community perceives and constructs the SJW as a common adversary – a monstrous representation of feminism, progressiveness and political correctness. The analysis also revealed how racist rhetoric and white male anxieties characterize the community's othering discourse. Through an in-depth study of user-submitted comments, this thesis argues that r/KotakuInAction's collective identity is fluid and reactionary in nature, characterized by a discourse that is indicative of Alt-Right ideology and white male supremacy. Future research should further explore the network of communities that r/KotakuInAction is part of, as well as examine how the community transforms over time. Keywords: Gamergate, Reddit, Alt-Right, Online Othering, Collective Identity, -

Online Media and the 2016 US Presidential Election

Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election. The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters. Citation: Faris, Robert M., Hal Roberts, Bruce Etling, Nikki Bourassa, Ethan Zuckerman, and Yochai Benkler. 2017. Partisanship, Propaganda, and Disinformation: Online Media and the 2016 U.S. Presidential Election. Berkman Klein Center for Internet & Society Research Paper. Citable link: http://nrs.harvard.edu/urn-3:HUL.InstRepos:33759251. Terms of Use: This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http://nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of-use#LAA. August 2017. Partisanship, Propaganda, & Disinformation: Online Media & the 2016 U.S. Presidential Election. Robert Faris, Hal Roberts, Bruce Etling, Nikki Bourassa, Ethan Zuckerman, Yochai Benkler. Acknowledgments: This paper is the result of months of effort and has only come to be as a result of the generous input of many people from the Berkman Klein Center and beyond. Jonas Kaiser and Paola Villarreal expanded our thinking around methods and interpretation. Brendan Roach provided excellent research assistance. Rebekah Heacock Jones helped get this research off the ground, and Justin Clark helped bring it home. We are grateful to Gretchen Weber, David Talbot, and Daniel Dennis Jones for their assistance in the production and publication of this study. This paper has also benefited from contributions of many outside the Berkman Klein community. The entire Media Cloud team at the Center for Civic Media at MIT’s Media Lab has been essential to this research. -

Entire Issue (PDF)

E PLURIBUS UNUM. Congressional Record, United States of America. Proceedings and Debates of the 114th Congress, Second Session. Vol. 162, Washington, Thursday, July 7, 2016, No. 109. House of Representatives. The House met at 10 a.m. and was called to order by the Speaker pro tempore (Mr. WEBSTER of Florida). DESIGNATION OF SPEAKER PRO TEMPORE. The SPEAKER pro tempore laid before the House the following communication from the Speaker: Washington, DC, July 7, 2016. I hereby appoint the Honorable DANIEL WEBSTER to act as Speaker pro tempore on this day. [...]ing but cause greater harm for our planet and future generations. Each day that passes without action on climate change is another day we are wreaking havoc on our world. I think President Obama said it best when he stated: “If anybody still wants to dispute the science around climate change, have at it. You’ll be pretty lonely, because you’ll be debating our military, most of America’s business leaders, the majority of the American people, almost the entire scientific community, and 200 nations around the [...] watershed. Researchers expect that for every degree of Celsius of global warming, the amount of water that gets evaporated and sucked up by plants from the Colorado River could increase 2 or 3 percent. With 4.5 million acres of farmland irrigated using the Colorado River water and with nearly 40 million residents depending on it, the incremental losses that are predicted will have a devastating impact. As the West continues to experience less rain and an increase in the severity and length of droughts, greater im- -

(Pdf) Download

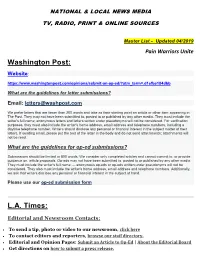

NATIONAL & LOCAL NEWS MEDIA TV, RADIO, PRINT & ONLINE SOURCES Master List - Updated 04/2019 Pain Warriors Unite Washington Post: Website: https://www.washingtonpost.com/opinions/submit-an-op-ed/?utm_term=.d1efbe184dbb What are the guidelines for letter submissions? Email: [email protected] We prefer letters that are fewer than 200 words and take as their starting point an article or other item appearing in The Post. They may not have been submitted to, posted to or published by any other media. They must include the writer's full name; anonymous letters and letters written under pseudonyms will not be considered. For verification purposes, they must also include the writer's home address, email address and telephone numbers, including a daytime telephone number. Writers should disclose any personal or financial interest in the subject matter of their letters. If sending email, please put the text of the letter in the body and do not send attachments; attachments will not be read. What are the guidelines for op-ed submissions? Submissions should be limited to 800 words. We consider only completed articles and cannot commit to, or provide guidance on, article proposals. Op-eds may not have been submitted to, posted to or published by any other media. They must include the writer's full name — anonymous op-eds or op-eds written under pseudonyms will not be considered. They also must include the writer's home address, email address and telephone numbers. Additionally, we ask that writers disclose any personal or financial interest in the subject at hand. Please use our op-ed submission form L.A. -


Download the Full What Happened Collection [PDF]

American Compass, December 2020. What Happened: The Trump Presidency in Review. American Compass is a 501(c)(3) non-profit organization, launched in May 2020 with a mission to restore an economic consensus that emphasizes the importance of family, community, and industry to the nation’s liberty and prosperity: reorienting political focus from growth for its own sake to widely shared economic development that sustains vital social institutions; setting a course for a country in which families can achieve self-sufficiency, contribute productively to their communities, and prepare the next generation for the same; and helping policymakers navigate the limitations that markets and government each face in promoting the general welfare and the nation’s security. www.americancompass.org [email protected] Table of Contents: Foreword: The Work Remains, by Daniel McCarthy (President Trump told many important truths, but one also has to act); Introduction; Too Few of the President’s Men, by Rachel Bovard (An iconoclast’s administration will struggle to find personnel both experienced and aligned); A Populism Deferred, by Julius Krein (Trump’s transitional presidency lacked the vision and agenda necessary to let go of GOP orthodoxy); The Potpourri Presidency, by Wells King (A decentralized and conflicted administration was uniquely inconsistent in its policy actions); Some Like It Hot, by Oren Cass (Unsustainable economic stimulus at an expansion’s peak, not tax cuts or tariffs, fueled the Trump boom). Copyright © 2020 by American Compass, Inc. Electronic versions of these articles with hyperlinked references are available at www.americancompass.org. -

The 2020 Election 2 Contents

Covering the Coverage: The 2020 Election. Contents: Foreword, Kyle Pope; Why did Matt Drudge turn on Donald Trump?, Bob Norman, January 29, 2020; One America News was desperate for Trump’s approval. Here’s how it got it., Andrew McCormick, May 27, 2020; The story has gotten away from us, Betsy Morais and Alexandria Neason, June 3, 2020; For Facebook, a boycott and a long, drawn-out reckoning, Emily Bell, July 9, 2020; As election looms, a network of mysterious ‘pink slime’ local news outlets nearly triples in size, Priyanjana Bengani, August 4, 2020; Us versus him, Betsy Morais and Alexandria Neason, August 10, 2020; The campaign begins (again), Kyle Pope, August 12, 2020; When the pundits paused, Simon van Zuylen–Wood, Summer 2020; Tuned out, Adam Piore, Summer 2020; ‘This is a moment for imagination’, Mychal Denzel Smith, Josie Duffy Rice, and Alex Vitale, Summer 2020; How to deal with friends who have become obsessed with conspiracy theories, Mathew Ingram, August 25, 2020; The only question in news is ‘Will it rate?’, Ariana Pekary, September 2, 2020; Last night was the logical end point of debates in America, Jon Allsop, September 30, 2020; How the media has abetted the Republican assault on mail-in voting, Yochai Benkler, October 2, 2020; Catching on to Q, Sam Thielman, October 9, 2020; We won’t know what will happen on November 3 until November 3, Kyle Paoletta, October 15, 2020; E. ...; The Doociness of America, Mark Oppenheimer, October 29, 2020; How careful local reporting undermined Trump’s claims of voter fraud, Ian W. Karbal, November 3, 2020; Retire the election needles, Gabriel Snyder, November 4, 2020; What the polls show, and the press missed, again, Kyle Pope, November 4, 2020; How conservative media ... -

Oregon's Anti-Immigrant Movement

Oregon’s Anti-Immigrant Movement. A resource from the Center for New Community. The recent wave of nativist ballot measures proposed in Oregon did not come out of nowhere. The statewide nativist group Oregonians for Immigration Reform (OFIR) has long been fostering relationships with state legislators and building a grassroots base, helping the national anti-immigrant movement gain a strong foothold in Oregon. Reporters and outlets planning to cover OFIR and their latest efforts should provide important context, including: evidence of OFIR’s ideological extremism and fringe rhetoric; OFIR’s links to national organizations that have hate group designations; and OFIR’s dependence on financial assistance from a notorious white nationalist. Who Is OFIR? OFIR is by far one of the strongest state contact groups of the Federation for American Immigration Reform (FAIR), the flagship national anti-immigrant organization. FAIR is considered a hate group because of its roots in white nationalism and eugenics and its current-day virulent and false attacks on immigrants. FAIR, and other anti-immigrant organizations connected to FAIR, have shown an increasing interest in using Oregon as a testing ground for statewide anti-immigrant policies because of Oregon’s low barrier to qualify a ballot measure. This, coupled with OFIR’s strong relationships and coordination with legislators and grassroots activists, creates a unique situation for the anti-immigrant movement to advance far-right nativist measures in a conventionally blue state. Nativist victories in Oregon can be used to build momentum for the anti-immigrant movement around the country. For instance, politicians and nativist leaders used the 2014 failure of Measure 88, which would have allowed undocumented Oregon residents to obtain driver licenses, to support legislation in Georgia that would block access to driver licenses to anyone with DACA or DAPA. -

Canadian Muslim Voting Guide: Federal Election 2019

Wilfrid Laurier University, Scholars Commons @ Laurier. Sociology Faculty Publications, Sociology, Fall 2019. Canadian Muslim Voting Guide: Federal Election 2019. Jasmin Zine, Wilfrid Laurier University, [email protected]; Fatima Chakroun, Wilfrid Laurier University; Shifa Abbas, Wilfrid Laurier University. Follow this and additional works at: https://scholars.wlu.ca/soci_faculty. Part of the Islamic Studies Commons, and the Sociology Commons. Recommended Citation: Zine, Jasmin; Chakroun, Fatima; and Abbas, Shifa, "Canadian Muslim Voting Guide: Federal Election 2019" (2019). Sociology Faculty Publications. 12. https://scholars.wlu.ca/soci_faculty/12 This Report is brought to you for free and open access by the Sociology at Scholars Commons @ Laurier. It has been accepted for inclusion in Sociology Faculty Publications by an authorized administrator of Scholars Commons @ Laurier. For more information, please contact [email protected]. Prepared by the Canadian Islamophobia Industry Research Project. Table of Contents: Summary of Federal Party Grades; How to Use this Guide; Introduction; Muslim Canadian Voters; Key Issues (Alt-Right Groups & Islamophobia; Motion 103; Religious Freedom and Dress in Quebec (Bill 21 & Bill 62); Immigration/Refugees; Boycott, Divestment, Sanctions (BDS) Movement; Foreign Policy); Conclusion; References by Section. The Canadian Muslim Voting Guide is led by Dr. Jasmin Zine, Professor, Wilfrid Laurier University. This guide was made possible with support from Hassina Alizai, Ryan Anningson, Sahver Kuzucuoglu, Doaa Shalabi, Ryan Hopkins and Phillip Oddi. The guide was designed by Fatima Chakroun and Shifa Abbas. Summary of Federal Party Grades. Grades: P – Pass; N – Needs Improvement; F – Fail. -

Free Speech Group Fights Lawsuits Vs. News Sharers 30 September 2010, by CRISTINA SILVA , Associated Press Writer

Free speech group fights lawsuits vs. news sharers. 30 September 2010, by Cristina Silva, Associated Press Writer. (AP) -- A San Francisco group that defends online free speech is taking on a Las Vegas company it says is shaking down news-sharing Internet users through more than 140 copyright infringement lawsuits filed this year. The Electronic Frontier Foundation's counterclaim represents the first significant challenge to Righthaven LLC's unprecedented campaign to police the sharing of news content on blogs, political sites and personal Web pages. At stake is what constitutes fair use - when and how it is appropriate to share content in an age where newsmakers increasingly encourage readers to share stories on Facebook, Twitter, Digg and other social networking sites. The EFF argues that the lawsuits limit free speech and bully defendants into costly settlements by threatening $150,000 in damages and the transfer of domain names. [...] "That's just ridiculous, and the reason it is ridiculous is that I don't think there has been any defendant that we've called to shake them down for a settlement," he said. Dozens of the lawsuits have been settled privately, and Righthaven and Stephens Media declined to disclose the terms. The EFF says the settlements have averaged about $5,000. Movie and music producers have previously targeted individuals who illegally share copyright content. What makes Righthaven's business model unique is that it buys copyrights from news companies like Stephens Media and then aggressively files lawsuits without first giving defendants a chance to remove the content in question. Allen Lichtenstein, a lawyer for the Nevada American Civil Liberties Union, said the lawsuits -

Numbersusa Factsheet

NUMBERSUSA IMPACT: NumbersUSA is a nonprofit grassroots organization of nearly 1.1 million members that describes itself as a group of “moderates, conservatives, and liberals working for immigration numbers that serve America’s finest goals.” Founded in 1996, NumbersUSA advocates numerical restrictions on legal immigration, an elimination of undocumented immigration, an elimination of the visa lottery, reform of birthright citizenship, and an end to “chain migration.” The organization was founded by author and journalist Roy Beck, and has ties to anti-immigration activist John Tanton’s network of anti-immigration organizations. The organization has expressed support for individuals with anti-Muslim and anti-immigrant views, such as former U.S. Senator and Attorney General Jeff Sessions, Senator Tom Cotton, and anti-Muslim activist Frank Gaffney. • NumbersUSA is a nonprofit, nonpartisan grassroots organization that advocates numerical restrictions on legal immigration and an elimination of undocumented immigration. It favors “removing jobs, public benefits and other incentives that encourage people to become illegal aliens and remain in the U.S.” The organization promotes the influx of immigrants who are part of the nuclear family of an American citizen, refugees with “no long-term prospects of returning home,” and immigrants with “truly extraordinary skills in the national interest.” • The organization was founded in 1996 by author and journalist Roy Beck. Prior to the establishment of NumbersUSA, Beck worked for ten years at U.S. Incorporated, an organization founded by anti-immigration activist John Tanton. Beck also served as an editor for the Social Contract Press, a Tanton publication notorious for its promotion of white nationalist and anti-immigration views.