IBM Research Report Docker and Container Security White Paper

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Storage Administration Guide Storage Administration Guide SUSE Linux Enterprise Server 12 SP4

SUSE Linux Enterprise Server 12 SP4 Storage Administration Guide Storage Administration Guide SUSE Linux Enterprise Server 12 SP4 Provides information about how to manage storage devices on a SUSE Linux Enterprise Server. Publication Date: September 24, 2021 SUSE LLC 1800 South Novell Place Provo, UT 84606 USA https://documentation.suse.com Copyright © 2006– 2021 SUSE LLC and contributors. All rights reserved. Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”. For SUSE trademarks, see https://www.suse.com/company/legal/ . All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its aliates. Asterisks (*) denote third-party trademarks. All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its aliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof. Contents About This Guide xii 1 Available Documentation xii 2 Giving Feedback xiv 3 Documentation Conventions xiv 4 Product Life Cycle and Support xvi Support Statement for SUSE Linux Enterprise Server xvii • Technology Previews xviii I FILE SYSTEMS AND MOUNTING 1 1 Overview -

Security Assurance Requirements for Linux Application Container Deployments

NISTIR 8176 Security Assurance Requirements for Linux Application Container Deployments Ramaswamy Chandramouli This publication is available free of charge from: https://doi.org/10.6028/NIST.IR.8176 NISTIR 8176 Security Assurance Requirements for Linux Application Container Deployments Ramaswamy Chandramouli Computer Security Division Information Technology Laboratory This publication is available free of charge from: https://doi.org/10.6028/NIST.IR.8176 October 2017 U.S. Department of Commerce Wilbur L. Ross, Jr., Secretary National Institute of Standards and Technology Walter Copan, NIST Director and Under Secretary of Commerce for Standards and Technology NISTIR 8176 SECURITY ASSURANCE FOR LINUX CONTAINERS National Institute of Standards and Technology Internal Report 8176 37 pages (October 2017) This publication is available free of charge from: https://doi.org/10.6028/NIST.IR.8176 Certain commercial entities, equipment, or materials may be identified in this document in order to describe an experimental procedure or concept adequately. Such identification is not intended to imply recommendation or endorsement by NIST, nor is it intended to imply that the entities, materials, or equipment are necessarily the best available for the purpose. This p There may be references in this publication to other publications currently under development by NIST in accordance with its assigned statutory responsibilities. The information in this publication, including concepts and methodologies, may be used by federal agencies even before the completion of such companion publications. Thus, until each ublication is available free of charge from: http publication is completed, current requirements, guidelines, and procedures, where they exist, remain operative. For planning and transition purposes, federal agencies may wish to closely follow the development of these new publications by NIST. -

Course Outline & Schedule

Course Outline & Schedule Call US 408-759-5074 or UK +44 20 7620 0033 Suse Linux Advanced System Administration Curriculum Linux Course Code SLASA Duration 5 Day Course Price $2,425 Course Description This instructor led SUSE Linux Advanced System Administration training course is designed to teach the advanced administration, security, networking and performance tasks required on a SUSE Linux Enterprise system. Targeted to closely follow the official LPI curriculum (generic Linux), this course together with the SUSE Linux System Administration course will enable the delegate to work towards achieving the LPIC-2 qualification. Exercises and examples are used throughout the course to give practical hands-on experience with the techniques covered. Objectives The delegate will learn and acquire skills as follows: Perform administrative tasks with supplied tools such as YaST Advanced network configuration Network troubleshooting and analysing packets Creating Apache virtual hosts and hosting user web content Sharing Windows and Linux resources with SAMBA Configuring a DNS server and configuring DNS logging Configuring a DHCP server and client Sharing Linux network resources with NFS Creating Unit Files Configuring AutoFS direct and indirect maps Configuring a secure FTP server Configuring a SQUID proxy server Creating Btrfs subvolumes and snapshots Backing-up and restoring XFS filesystems Configuring LVM and managing Logical Volumes Managing software RAID Centralised storage with iSCSI Monitoring disk status and reliability with SMART Perpetual -

How SUSE Helps Pave the Road to S/4HANA

White Paper How SUSE Helps Pave the Road to S/4HANA Sponsored by: SUSE and SAP Peter Rutten Henry D. Morris August 2017 IDC OPINION According to SAP, there are now 6,800 SAP customers that have chosen S/4HANA. In addition, many more of the software company's customers still have to make the decision to move to S/4HANA. These customers have to determine whether they are ready to migrate their database for mission-critical workloads to a new database (SAP HANA) and a new application suite (S/4HANA). Furthermore, SAP HANA runs on Linux only, an operating environment that SAP customers may not have used extensively before. This white paper discusses the choices SAP customers have for migrating to S/4HANA on Linux and looks at how SUSE helps customers along the way. SITUATION OVERVIEW Among SAP customers, migration from traditional databases to the SAP HANA in-memory platform is continuing steadily. IDC's Data Analytics Infrastructure (SAP) Survey, November 2016 (n = 300) found that 49.3% of SAP customers in North America are running SAP HANA today. Among those that are not on SAP HANA, 29.9% will be in two to four years and 27.1% in four to six years. The slower adopters say that they don't feel rushed, stating that there's time until 2025 to make the decision, and that their current databases are performing adequately. While there is no specific order that SAP customers need to follow to get to S/4HANA, historically businesses have often migrated to SAP Business Warehouse (BW) on SAP HANA first as BW is a rewarding starting point for SAP HANA for two reasons: there is an instant performance improvement and migration is relatively easy since BW typically does not contain core enterprise data that is business critical. -

Securing Server Applications Using Apparmor TUT-1179

Securing Server Applications Using AppArmor TUT-1179 Brian Six Sales Engineer – SUSE [email protected] 1 Agenda AppArmor Introduction How to interact and configure Demo with simple script Can AppArmor help with containers Final thoughts 2 Intro to AppArmor 3 What is AppArmor? Bad software does what it shouldn’t do Good software does what it should do Secure software does what it should do, and nothing else AppArmor secures the Good and the Bad software 4 What is AppArmor? Mandatory Access Control (MAC) security system Applies policies to Applications Access to files and paths, network functions, system functions 5 What is AppArmor? Dynamic for existing profiled applications Regardless of the “user” the process is running as, the policy can be enforced. Root is no exception. 6 What is AppArmor? 7 Architecture Logging and OS Component Application Alerting AppArmor AppArmor Interface Application Profile AppArmor Linux Kernel 2.6 and later LSM Interface 8 How To Interact And Configure 9 How To Interact With AppArmor Apparmor-Utils First Install package 10 How To Interact With AppArmor Used more often 11 What Do Some Of These Do aa-status: Display aa-genprof : Create aa-logprof: Update aa-complain: Set a aa-enforce: Set a status of profile a profile an existing profile profile in complain profile in enforce enforcement mode mode 12 Common Permissions r – read w – write k – lock ux - unconstrained execute Ux - unconstrained execute – scrub px – discrete profile execute Px - discrete profile execute – scrub ix - inherit execute cx - local security profile l – link a – append m - mmap 13 Profile At-A-Glance https://doc.opensuse.org/documentation/leap/archive/42.3/security/html/book.security/cha.apparmor.profiles.html # a comment about foo's local (children) profile for #include <tunables/global> /usr/bin/foobar. -

In Search of the Ideal Storage Configuration for Docker Containers

In Search of the Ideal Storage Configuration for Docker Containers Vasily Tarasov1, Lukas Rupprecht1, Dimitris Skourtis1, Amit Warke1, Dean Hildebrand1 Mohamed Mohamed1, Nagapramod Mandagere1, Wenji Li2, Raju Rangaswami3, Ming Zhao2 1IBM Research—Almaden 2Arizona State University 3Florida International University Abstract—Containers are a widely successful technology today every running container. This would cause a great burden on popularized by Docker. Containers improve system utilization by the I/O subsystem and make container start time unacceptably increasing workload density. Docker containers enable seamless high for many workloads. As a result, copy-on-write (CoW) deployment of workloads across development, test, and produc- tion environments. Docker’s unique approach to data manage- storage and storage snapshots are popularly used and images ment, which involves frequent snapshot creation and removal, are structured in layers. A layer consists of a set of files and presents a new set of exciting challenges for storage systems. At layers with the same content can be shared across images, the same time, storage management for Docker containers has reducing the amount of storage required to run containers. remained largely unexplored with a dizzying array of solution With Docker, one can choose Aufs [6], Overlay2 [7], choices and configuration options. In this paper we unravel the multi-faceted nature of Docker storage and demonstrate its Btrfs [8], or device-mapper (dm) [9] as storage drivers which impact on system and workload performance. As we uncover provide the required snapshotting and CoW capabilities for new properties of the popular Docker storage drivers, this is a images. None of these solutions, however, were designed with sobering reminder that widespread use of new technologies can Docker in mind and their effectiveness for Docker has not been often precede their careful evaluation. -

Have You Driven an Selinux Lately? an Update on the Security Enhanced Linux Project

Have You Driven an SELinux Lately? An Update on the Security Enhanced Linux Project James Morris Red Hat Asia Pacific Pte Ltd [email protected] Abstract All security-relevant accesses between subjects and ob- jects are controlled according to a dynamically loaded Security Enhanced Linux (SELinux) [18] has evolved mandatory security policy. Clean separation of mecha- rapidly over the last few years, with many enhancements nism and policy provides considerable flexibility in the made to both its core technology and higher-level tools. implementation of security goals for the system, while fine granularity of control ensures complete mediation. Following integration into several Linux distributions, SELinux has become the first widely used Mandatory An arbitrary number of different security models may be Access Control (MAC) scheme. It has helped Linux to composed (or “stacked”) by SELinux, with their com- receive the highest security certification likely possible bined effect being fully analyzable under a unified pol- for a mainstream off the shelf operating system. icy scheme. SELinux has also proven its worth for general purpose Currently, the default SELinux implementation com- use in mitigating several serious security flaws. poses the following security models: Type Enforcement (TE) [7], Role Based Access Control (RBAC) [12], While SELinux has a reputation for being difficult to Muilti-level Security (MLS) [29], and Identity Based use, recent developments have helped significantly in Access Control (IBAC). These complement the standard this area, and user adoption is advancing rapidly. Linux Discretionary Access Control (DAC) scheme. This paper provides an informal update on the project, With these models, SELinux provides comprehensive discussing key developments and challenges, with the mandatory enforcement of least privilege, confidential- aim of helping people to better understand current ity, and integrity. -

Addressing Challenges in Automotive Connectivity: Mobile Devices, Technologies, and the Connected Car

2015-01-0224 Published 04/14/2015 Copyright © 2015 SAE International doi:10.4271/2015-01-0224 saepcelec.saejournals.org Addressing Challenges in Automotive Connectivity: Mobile Devices, Technologies, and the Connected Car Patrick Shelly Mentor Graphics Corp. ABSTRACT With the dramatic mismatch between handheld consumer devices and automobiles, both in terms of product lifespan and the speed at which new features (or versions) are released, vehicle OEMs are faced with a perplexing dilemma. If the connected car is to succeed there has to be a secure and accessible method to update the software in a vehicle's infotainment system - as well as a real or perceived way to graft in new software content. The challenge has become even more evident as the industry transitions from simple analog audio systems which have traditionally served up broadcast content to a new world in which configurable and interactive Internet- based content rules the day. This paper explores the options available for updating and extending the software capability of a vehicle's infotainment system while addressing the lifecycle mismatch between automobiles and consumer mobile devices. Implications to the design and cost of factory installed equipment will be discussed, as will expectations around the appeal of these various strategies to specific target demographics. CITATION: Shelly, P., "Addressing Challenges in Automotive Connectivity: Mobile Devices, Technologies, and the Connected Car," SAE Int. J. Passeng. Cars – Electron. Electr. Syst. 8(1):2015, doi:10.4271/2015-01-0224. INTRODUCTION be carefully taken into account. The use of app stores is expected to grow significantly in the coming years as automotive OEMs begin to Contemporary vehicle infotainment systems face an interesting explore apps not only on IVI systems, but on other components of the challenge. -

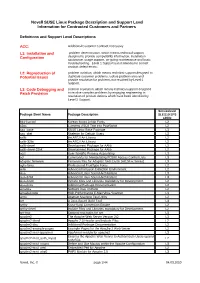

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners

Novell SUSE Linux Package Description and Support Level Information for Contracted Customers and Partners Definitions and Support Level Descriptions ACC: Additional Customer Contract necessary L1: Installation and problem determination, which means technical support Configuration designed to provide compatibility information, installation assistance, usage support, on-going maintenance and basic troubleshooting. Level 1 Support is not intended to correct product defect errors. L2: Reproduction of problem isolation, which means technical support designed to Potential Issues duplicate customer problems, isolate problem area and provide resolution for problems not resolved by Level 1 Support. L3: Code Debugging and problem resolution, which means technical support designed Patch Provision to resolve complex problems by engaging engineering in resolution of product defects which have been identified by Level 2 Support. Servicelevel Package Short Name Package Description SLES10 SP3 s390x 844-ksc-pcf Korean 8x4x4 Johab Fonts L2 a2ps Converts ASCII Text into PostScript L2 aaa_base SUSE Linux Base Package L3 aaa_skel Skeleton for Default Users L3 aalib An ASCII Art Library L2 aalib-32bit An ASCII Art Library L2 aalib-devel Development Package for AAlib L2 aalib-devel-32bit Development Package for AAlib L2 acct User-Specific Process Accounting L3 acl Commands for Manipulating POSIX Access Control Lists L3 adaptec-firmware Firmware files for Adaptec SAS Cards (AIC94xx Series) L3 agfa-fonts Professional TrueType Fonts L2 aide Advanced Intrusion Detection Environment L2 alsa Advanced Linux Sound Architecture L3 alsa-32bit Advanced Linux Sound Architecture L3 alsa-devel Include Files and Libraries mandatory for Development. L2 alsa-docs Additional Package Documentation. L2 amanda Network Disk Archiver L2 amavisd-new High-Performance E-Mail Virus Scanner L3 amtu Abstract Machine Test Utility L2 ant A Java-Based Build Tool L3 anthy Kana-Kanji Conversion Engine L2 anthy-devel Include Files and Libraries mandatory for Development. -

High Velocity Kernel File Systems with Bento

High Velocity Kernel File Systems with Bento Samantha Miller, Kaiyuan Zhang, Mengqi Chen, and Ryan Jennings, University of Washington; Ang Chen, Rice University; Danyang Zhuo, Duke University; Thomas Anderson, University of Washington https://www.usenix.org/conference/fast21/presentation/miller This paper is included in the Proceedings of the 19th USENIX Conference on File and Storage Technologies. February 23–25, 2021 978-1-939133-20-5 Open access to the Proceedings of the 19th USENIX Conference on File and Storage Technologies is sponsored by USENIX. High Velocity Kernel File Systems with Bento Samantha Miller Kaiyuan Zhang Mengqi Chen Ryan Jennings Ang Chen‡ Danyang Zhuo† Thomas Anderson University of Washington †Duke University ‡Rice University Abstract kernel-level debuggers and kernel testing frameworks makes this worse. The restricted and different kernel programming High development velocity is critical for modern systems. environment also limits the number of trained developers. This is especially true for Linux file systems which are seeing Finally, upgrading a kernel module requires either rebooting increased pressure from new storage devices and new demands the machine or restarting the relevant module, either way on storage systems. However, high velocity Linux kernel rendering the machine unavailable during the upgrade. In the development is challenging due to the ease of introducing cloud setting, this forces kernel upgrades to be batched to meet bugs, the difficulty of testing and debugging, and the lack of cloud-level availability goals. support for redeployment without service disruption. Existing Slow development cycles are a particular problem for file approaches to high-velocity development of file systems for systems. -

Resource Management: Linux Kernel Namespaces and Cgroups

Resource management: Linux kernel Namespaces and cgroups Rami Rosen [email protected] Haifux, May 2013 www.haifux.org 1/121 http://ramirose.wix.com/ramirosen TOC Network Namespace PID namespaces UTS namespace Mount namespace user namespaces cgroups Mounting cgroups links Note: All code examples are from for_3_10 branch of cgroup git tree (3.9.0-rc1, April 2013) 2/121 http://ramirose.wix.com/ramirosen General The presentation deals with two Linux process resource management solutions: namespaces and cgroups. We will look at: ● Kernel Implementation details. ●what was added/changed in brief. ● User space interface. ● Some working examples. ● Usage of namespaces and cgroups in other projects. ● Is process virtualization indeed lightweight comparing to Os virtualization ? ●Comparing to VMWare/qemu/scaleMP or even to Xen/KVM. 3/121 http://ramirose.wix.com/ramirosen Namespaces ● Namespaces - lightweight process virtualization. – Isolation: Enable a process (or several processes) to have different views of the system than other processes. – 1992: “The Use of Name Spaces in Plan 9” – http://www.cs.bell-labs.com/sys/doc/names.html ● Rob Pike et al, ACM SIGOPS European Workshop 1992. – Much like Zones in Solaris. – No hypervisor layer (as in OS virtualization like KVM, Xen) – Only one system call was added (setns()) – Used in Checkpoint/Restart ● Developers: Eric W. biederman, Pavel Emelyanov, Al Viro, Cyrill Gorcunov, more. – 4/121 http://ramirose.wix.com/ramirosen Namespaces - contd There are currently 6 namespaces: ● mnt (mount points, filesystems) ● pid (processes) ● net (network stack) ● ipc (System V IPC) ● uts (hostname) ● user (UIDs) 5/121 http://ramirose.wix.com/ramirosen Namespaces - contd It was intended that there will be 10 namespaces: the following 4 namespaces are not implemented (yet): ● security namespace ● security keys namespace ● device namespace ● time namespace. -

Demystifying Internet of Things Security Successful Iot Device/Edge and Platform Security Deployment — Sunil Cheruvu Anil Kumar Ned Smith David M

Demystifying Internet of Things Security Successful IoT Device/Edge and Platform Security Deployment — Sunil Cheruvu Anil Kumar Ned Smith David M. Wheeler Demystifying Internet of Things Security Successful IoT Device/Edge and Platform Security Deployment Sunil Cheruvu Anil Kumar Ned Smith David M. Wheeler Demystifying Internet of Things Security: Successful IoT Device/Edge and Platform Security Deployment Sunil Cheruvu Anil Kumar Chandler, AZ, USA Chandler, AZ, USA Ned Smith David M. Wheeler Beaverton, OR, USA Gilbert, AZ, USA ISBN-13 (pbk): 978-1-4842-2895-1 ISBN-13 (electronic): 978-1-4842-2896-8 https://doi.org/10.1007/978-1-4842-2896-8 Copyright © 2020 by The Editor(s) (if applicable) and The Author(s) This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. Open Access This book is licensed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license and indicate if changes were made. The images or other third party material in this book are included in the book’s Creative Commons license, unless indicated otherwise in a credit line to the material.