NVIDIA A100 Tensor Core GPU Architecture: Unprecedented Acceleration at Every Scale

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Supermicro GPU Solutions Optimized for NVIDIA NVLink

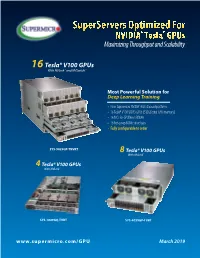

SuperServers Optimized For NVIDIA® Tesla® GPUs: Maximizing Throughput and Scalability (www.supermicro.com/GPU, March 2019)

16 Tesla® V100 GPUs With NVLink™ and NVSwitch™ (SYS-9029GP-TNVRT): Most Powerful Solution for Deep Learning Training
• New Supermicro NVIDIA® HGX-2 based platform
• 16 Tesla® V100 SXM3 GPUs (512GB total GPU memory)
• 16 NICs for GPUDirect RDMA
• 16 hot-swap NVMe drive bays
• Fully configurable to order

Also shown: 8 Tesla® V100 GPUs With NVLink™ (SYS-4029GP-TVRT) and 4 Tesla® V100 GPUs With NVLink™ (SYS-1029GQ-TVRT)

Maximum Acceleration for AI/DL Training Workloads
PERFORMANCE: Highest parallel peak performance with NVIDIA Tesla V100 GPUs
THROUGHPUT: Best in class GPU-to-GPU bandwidth with a maximum speed of 300GB/s
SCALABILITY: Designed for direct interconnections between multiple GPU nodes
FLEXIBILITY: PCI-E 3.0 x16 for low latency I/O expansion capacity & GPU Direct RDMA support
DESIGN: Optimized GPU cooling for highest sustained parallel computing performance
EFFICIENCY: Redundant Titanium Level power supplies & intelligent cooling control

Models: SYS-1029GQ-TVRT and SYS-4029GP-TVRT
CPU Support (both models):
• Dual Intel® Xeon® Scalable processors with 3 UPI up to 10.4GT/s
• Supports up to 205W TDP CPU
GPU Support:
• 4 NVIDIA Tesla V100 GPUs (SYS-1029GQ-TVRT) or 8 NVIDIA® Tesla® V100 GPUs (SYS-4029GP-TVRT)
• NVIDIA® NVLink™ GPU Interconnect up to 300GB/s
• Optimized for GPUDirect RDMA
• Independent CPU and GPU thermal zones

20201130 Gcdv V1.0.Pdf

From research to industry. Architecture evolutions for HPC, 30 November 2020, Guillaume Colin de Verdière, Commissariat à l’énergie atomique et aux énergies alternatives (www.cea.fr), EUROfusion.

EVOLUTION DRIVER: TECHNOLOGICAL CONSTRAINTS
- Power wall
- Scaling wall
- Memory wall
- Towards accelerated architectures
- SC20 main points

POWER WALL: $P = cV^2F$ (P: power, V: voltage, F: frequency)
- Reduce V
  - Reuse of embedded technologies
- Limit frequencies
- More cores
- More compute, less logic
  - SIMD larger
  - GPU like structure

Figure credits: © Karl Rupp; https://software.intel.com/en-us/blogs/2009/08/25/why-p-scales-as-cv2f-is-so-obvious-pt-2-2

SCALING WALL
- Moore’s law comes to an end
  - Probable limit around 3 - 5 nm
  - Need to design new structure for transistors (figure labels: FinFET, Carbon nanotube)
- Limit of circuit size
  - Yield decrease with the increase of surface
    - Chiplets will dominate (figure © AMD)
  - Data movement will be the most expensive operation
    - 1 DFMA = 20 pJ, SRAM access = 50 pJ, DRAM access = 1 nJ (source NVIDIA)

Notes: 1 nJ = 1000 pJ, Φ Si = 110 pm

MEMORY WALL
- Better bandwidth with HBM
  - DDR5 @ 5200 MT/s 8ch = 0.33 TB/s
  - HBM2 @ 4 stacks = 1.64 TB/s

[Figure: Skylake, SMT2, threads 1 to 4]
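The memory-wall and data-movement figures quoted in the excerpt above can be checked with a few lines of arithmetic. The sketch below is illustrative only: the 64-bit DDR5 channel width, the 1024-bit HBM stack width, and the 3.2 GT/s per-pin rate are assumptions chosen because they reproduce the quoted totals; the slides themselves do not state them. The function name `peak_bandwidth_tbs` is likewise hypothetical.

```python
def peak_bandwidth_tbs(transfers_per_s: float, bus_width_bits: int, channels: int) -> float:
    """Peak bandwidth in TB/s (1 TB = 1e12 bytes) for `channels` identical interfaces."""
    bytes_per_transfer = bus_width_bits / 8
    return transfers_per_s * bytes_per_transfer * channels / 1e12


# DDR5 @ 5200 MT/s, 8 channels (assumed 64 data bits per channel)
ddr5 = peak_bandwidth_tbs(5200e6, 64, 8)

# HBM2, 4 stacks (assumed 1024-bit interface per stack at 3.2 GT/s per pin)
hbm2 = peak_bandwidth_tbs(3.2e9, 1024, 4)

# Energy figures quoted on the scaling-wall slide, in picojoules
DFMA_PJ, SRAM_PJ, DRAM_PJ = 20, 50, 1000
dram_vs_dfma = DRAM_PJ / DFMA_PJ  # how many DFMAs cost as much as one DRAM access

print(f"DDR5: {ddr5:.2f} TB/s, HBM2: {hbm2:.2f} TB/s, ratio: {hbm2 / ddr5:.1f}x")
print(f"One DRAM access costs as much energy as {dram_vs_dfma:.0f} DFMAs")
```

Under these assumptions the results round to the 0.33 TB/s and 1.64 TB/s quoted on the slide, and one DRAM access costs about as much energy as 50 double-precision fused multiply-adds, which is the sense in which data movement becomes the most expensive operation.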

NVIDIA Video Codec SDK - Encoder

NVIDIA VIDEO CODEC SDK - ENCODER Application Note vNVENC_DA-6209-001_v14 | July 2021

Table of Contents
Chapter 1. NVIDIA Hardware Video Encoder
1.1. Introduction
1.2. NVENC Capabilities
1.3. NVENC Licensing Policy
1.4. NVENC Performance
1.5. Programming NVENC
1.6. FFmpeg Support

Chapter 1. NVIDIA Hardware Video Encoder

1.1. Introduction

NVIDIA GPUs - beginning with the Kepler generation - contain a hardware-based encoder (referred to as NVENC in this document) which provides fully accelerated hardware-based video encoding and is independent of graphics/CUDA cores. With end-to-end encoding offloaded to NVENC, the graphics/CUDA cores and the CPU cores are free for other operations. For example, in a game recording scenario,

Parallel Rendering on Hybrid Multi-GPU Clusters

Eurographics Symposium on Parallel Graphics and Visualization (2012), H. Childs and T. Kuhlen (Editors)

Parallel Rendering on Hybrid Multi-GPU Clusters

S. Eilemann†1, A. Bilgili1, M. Abdellah1, J. Hernando2, M. Makhinya3, R. Pajarola3, F. Schürmann1
1Blue Brain Project, EPFL; 2CeSViMa, UPM; 3Visualization and MultiMedia Lab, University of Zürich

Abstract

Achieving efficient scalable parallel rendering for interactive visualization applications on medium-sized graphics clusters remains a challenging problem. Framerates of up to 60 Hz require a carefully designed and fine-tuned parallel rendering implementation that fits all required operations into the 16 ms time budget available for each rendered frame. Furthermore, modern commodity hardware embraces more and more a NUMA architecture, where multiple processor sockets each have their locally attached memory and where auxiliary devices such as GPUs and network interfaces are directly attached to one of the processors. Such so-called fat NUMA processing and graphics nodes are increasingly used to build cost-effective hybrid shared/distributed memory visualization clusters. In this paper we present a thorough analysis of the asynchronous parallelization of the rendering stages and we derive and implement important optimizations to achieve highly interactive framerates on such hybrid multi-GPU clusters. We use both a benchmark program and a real-world scientific application used to visualize, navigate and interact with simulations of cortical neuron circuit models.

Categories and Subject Descriptors (according to ACM CCS): I.3.2 [Computer Graphics]: Graphics Systems - Distributed Graphics; I.3.m [Computer Graphics]: Miscellaneous - Parallel Rendering

1. Introduction

The decomposition of parallel rendering systems across mul- ...

Hybrid GPU clusters are often built from so-called fat render nodes using a NUMA architecture with multiple CPUs and GPUs per machine.

High Performance Computing and AI Solutions Portfolio

Brochure: High Performance Computing and AI Solutions Portfolio. Technology and expertise to help you accelerate discovery and innovation.

Go ahead. Dream big.

Discovery and innovation have always started with great minds dreaming big. As artificial intelligence (AI), high performance computing (HPC) and data analytics continue to converge and evolve, they are fueling the next industrial revolution and the next quantum leap in human progress. And with the help of increasingly powerful technology, you can dream even bigger. Dell Technologies will be there every step of the way with the technology you need to power tomorrow’s discoveries and the expertise to bring it all together, today.

The convergence of HPC and AI is driven by data. The data-driven age is dramatically reshaping industries and reinventing the future. As vast amounts of data pour in from increasingly diverse sources, leveraging that data is both critical and transformational. Whether you’re working to save lives, understand the universe, build better machines, neutralize financial risks or anticipate customer sentiment, data informs and drives decisions that impact the success of your organization, and shapes the future of our world.

AI, HPC and data analytics are technologies designed to unlock the value of your data. While they have long been treated as separate, the three technologies are converging as it becomes clear that analytics and AI are both big-data problems that require the power, scalable compute, networking and storage provided by HPC.

Key figures:
• 463 exabytes of data will be created each day by 2025 [1]
• 44X ROI: average return on investment (ROI) for HPC [2]
• 83% of CIOs say they are investing in AI

Science-Driven Development of Supercomputer Fugaku

Special Contribution: Science-driven Development of Supercomputer Fugaku. Hirofumi Tomita, Flagship 2020 Project, Team Leader, RIKEN Center for Computational Science.

In this article, I would like to take a historical look at activities on the application side during supercomputer Fugaku’s climb to the top of supercomputer rankings as seen from a vantage point in early July 2020. I would like to describe, in particular, the change in mindset that took place on the application side along the path toward Fugaku’s top ranking in four benchmarks in 2020 that all began with the Application Working Group and Computer Architecture/Compiler/System Software Working Group in 2011. Somewhere along this path, the application side joined forces with the architecture/system side based on the keyword “co-design.” During this journey, there were times when our opinions clashed and when efforts to solve problems came to a halt, but there were also times when we took bold steps in a unified manner to surmount difficult obstacles. I will leave the description of specific technical debates to other articles, but here, I would like to describe the flow of Fugaku development from the application side based heavily on a “science-driven” approach along with some of my personal opinions. Actually, we are only halfway along this path. We look forward to the many scientific achievements and solutions to social problems that Fugaku is expected to bring about.

1. Introduction

On June 22, 2020, the supercomputer Fugaku became the first supercomputer in history to simultaneously rank No. ...

In this article, I would like to take a look back at the flow along the path toward Fugaku’s top ranking as seen from the application side from a vantage point

BRKIOT-2394.Pdf

Unlocking the Mystery of Machine Learning and Big Data Analytics (BRKIOT-2394)

Robert Barton, Distinguished Architect, @MrRobbarto, CCIE #6660, CCDE #2013::6
Jerome Henry, Principal Engineer, Office of the CTAO, @wirelessccie, CCIE #24750, CWNE #45

Cisco Webex Teams: Questions? Use Cisco Webex Teams to chat with the speaker after the session. How:
1. Find this session in the Cisco Events Mobile App
2. Click “Join the Discussion”
3. Install Webex Teams or go directly to the team space
4. Enter messages/questions in the team space

© 2020 Cisco and/or its affiliates. All rights reserved. Cisco Public

[Slide: Cisco Live IoT track session schedule, Monday, Jan. 27th through Thursday; #CLEMEA, www.ciscolive.com/emea/learn/technology-tracks.html]

GPU-Based Deep Learning Inference

Whitepaper: GPU-Based Deep Learning Inference: A Performance and Power Analysis, November 2015

Contents
- Abstract
- Introduction
- Inference versus Training
- GPUs Excel at Neural Network Inference
  - Inference Optimizations in Caffe and cuDNN 4
  - Experimental Setup and Testing Methodology
  - Inference on Small and Large GPUs
- Conclusion
- References

Abstract

Deep learning methods are revolutionizing various areas of machine perception. On a

Parallel Texture-Based Vector Field Visualization on Curved Surfaces Using GPU Cluster Computers

Eurographics Symposium on Parallel Graphics and Visualization (2006), Alan Heirich, Bruno Raffin, and Luis Paulo dos Santos (Editors)

Parallel Texture-Based Vector Field Visualization on Curved Surfaces Using GPU Cluster Computers

S. Bachthaler1, M. Strengert1, D. Weiskopf2, and T. Ertl1
1Institute of Visualization and Interactive Systems, University of Stuttgart, Germany; 2School of Computing Science, Simon Fraser University, Canada

Abstract

We adopt a technique for texture-based visualization of flow fields on curved surfaces for parallel computation on a GPU cluster. The underlying LIC method relies on image-space calculations and allows the user to visualize a full 3D vector field on arbitrary and changing hypersurfaces. By using parallelization, both the visualization speed and the maximum data set size are scaled with the number of cluster nodes. A sort-first strategy with image-space decomposition is employed to distribute the workload for the LIC computation, while a sort-last approach with an object-space partitioning of the vector field is used to increase the total amount of available GPU memory. We specifically address issues for parallel GPU-based vector field visualization, such as reduced locality of memory accesses caused by particle tracing, dynamic load balancing for changing camera parameters, and the combination of image-space and object-space decomposition in a hybrid approach. Performance measurements document the behavior of our implementation on a GPU cluster with AMD Opteron CPUs, NVIDIA GeForce 6800 Ultra GPUs, and Infiniband network connection.

Categories and Subject Descriptors (according to ACM CCS): I.3.3 [Computer Graphics]: Viewing algorithms; I.3.3 [Three-Dimensional Graphics and Realism]: Color, shading, shadowing, and texture

1.

MSI NVIDIA Drivers Download: Update MSI Graphics Card Driver on Windows 10 & 7

Update MSI Graphics Card Driver on Windows 10 & 7. Easily!

Updating your MSI graphics card driver helps maintain high gaming performance, so it’s recommended that you keep the driver up to date. In this post, you’ll learn two ways to download and install the latest MSI graphics card driver:

1. Download the MSI graphics card driver manually
2. Download and install the MSI graphics card driver automatically

Way 1: Download the MSI graphics card driver manually

MSI provides graphics drivers on their website, for instance the NVIDIA graphics card driver and the AMD graphics card driver, so you can check for and download the latest driver for your graphics card there. Drivers can always be downloaded from the SUPPORT section. Go to the MSI website, enter the name of your graphics card, and perform a quick search. Then follow the on-screen instructions to download the driver you need. MSI regularly uploads new drivers to their website, so it’s recommended that you check for driver releases often in order to get the latest driver in time. If you don’t have the time and patience to download the driver manually, Way 2 may be a better option for you.

Way 2: Download and install the MSI graphics card driver automatically

If you don’t have the time, patience or computer skills to update the MSI graphics driver manually, you can do it automatically with Driver Easy. Driver Easy will automatically recognize your system and find the correct drivers for it.

NVIDIA DGX Station: The First Personal AI Supercomputer

White Paper: NVIDIA DGX Station, The First Personal AI Supercomputer

1.0 Introduction
2.0 NVIDIA DGX Station Architecture
2.1 NVIDIA Tesla V100
2.2 Second-Generation NVIDIA NVLink™
2.3 Water-Cooling System for the GPUs
2.4 GPU and System Memory
2.5 Other Workstation Components
3.0 Multi-GPU with NVLink
3.1 DGX NVLink Network Topology for Efficient Application Scaling
3.2 Scaling Deep Learning Training on NVLink
4.0 DGX Station Software Stack for Deep Learning
4.1 NVIDIA CUDA Toolkit
4.2 NVIDIA Deep Learning SDK
4.3 Docker Engine Utility for NVIDIA GPUs
4.4 NVIDIA Container

Evolution of the Graphical Processing Unit

University of Nevada, Reno. Evolution of the Graphical Processing Unit. A professional paper submitted in partial fulfillment of the requirements for the degree of Master of Science with a major in Computer Science by Thomas Scott Crow. Dr. Frederick C. Harris, Jr., Advisor. December 2004.

Dedication

To my wife Windee, thank you for all of your patience, intelligence and love.

Acknowledgements

I would like to thank my advisor Dr. Harris for his patience and the help he has provided me. The field of Computer Science needs more individuals like Dr. Harris. I would like to thank Dr. Mensing for unknowingly giving me an excellent model of what a Man can be and for his confidence in my work. I am very grateful to Dr. Egbert and Dr. Mensing for agreeing to be committee members and for their valuable time. Thank you jeffs.

Abstract

In this paper we discuss some major contributions to the field of computer graphics that have led to the implementation of the modern graphical processing unit. We also compare the performance of matrix-matrix multiplication on the GPU to the same computation on the CPU. Although the CPU performs better in this comparison, modern GPUs have a lot of potential since their rate of growth far exceeds that of the CPU. The history of the rate of growth of the GPU shows that its transistor count doubles every 6 months, whereas that of the CPU doubles only every 18 months. There is currently much research going on regarding general purpose computing on GPUs, and although there has been moderate success, several issues keep the commodity GPU from expanding out from pure graphics computing, limited cache bandwidth being one.
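To make the growth-rate comparison in the abstract above concrete, the short sketch below compounds the two doubling periods it quotes (6 months for GPUs, 18 months for CPUs) over a three-year span. The function name `growth_factor` and the 36-month horizon are illustrative choices, not taken from the paper, and the calculation assumes idealized exponential growth.

```python
def growth_factor(months: float, doubling_period_months: float) -> float:
    """Multiplicative growth after `months`, assuming a fixed doubling period."""
    return 2.0 ** (months / doubling_period_months)


HORIZON_MONTHS = 36  # illustrative three-year window

gpu_growth = growth_factor(HORIZON_MONTHS, 6)   # doubling every 6 months
cpu_growth = growth_factor(HORIZON_MONTHS, 18)  # doubling every 18 months

print(f"GPU transistor count grows {gpu_growth:.0f}x in {HORIZON_MONTHS} months")
print(f"CPU transistor count grows {cpu_growth:.0f}x in {HORIZON_MONTHS} months")
print(f"Relative gain of GPU over CPU: {gpu_growth / cpu_growth:.0f}x")
```

Over three years, the quoted rates would give a 64-fold increase for the GPU against a 4-fold increase for the CPU, a 16-fold relative gain.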