Comparing Performance and Energy Efficiency of Fpgas and Gpus For

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

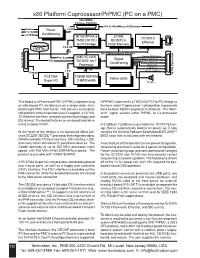

X86 Platform Coprocessor/Prpmc (PC on a PMC)

x86 Platform Coprocessor/PrPMC (PC on a PMC) 32b/33MHz PCI bus PN1/PN2 +5V to Kbd/Mouse/USB power Vcore Power +3.3V +2.5v Conditioning +3.3VIO 1K100 FPGA & 512KB 10/100TX TMS2250 PCI BIOS/PLA Ethernet RJ45 Compact to PCI bridge Flash Memory PLA I/O Flash site 8 32b/33MHz Internal PCI bus Analog SVGA Video Pwr Seq AMD SC2200 Signal COM 1 (RXD/TXD only) IDE "GEODE (tm)" Conditioning COM 2 (RXD/TXD only) Processor USB Port 1 Rear I/O PN4 I/O Rear 64 USB Port 2 LPC Keyboard/Mouse Floppy 36 Pin 36 Pin Connector PC87364 128MB SDRAM Status LEDs Super I/O (16MWx64b) PC Spkr This board is a Processor PMC (PrPMC) implementing A PrPMC implements a TMS2250 PCI-to-PCI bridge to an x86-based PC architecture on a single-wide, stan- the host, and a “Coprocessor” cofniguration implements dard height PMC form factor. This delivers a complete back-to-back 16550 compatible COM ports. The “Mon- x86 platform with comprehensive I/O support, a 10/100- arch” signal selects either PrPMC or Co-processor TX Ethernet interface, and disk access (both floppy and mode. IDE drives). The board features an on-board hard drive using Compact Flash. A 512Kbyte FLASH memory holds the 1K100 PLA im- age that is automatically loaded on power up. It also At the heart of the design is an Advanced Micro De- contains the General Software Embedded BIOS 2000™ vices SC2200 GEODE™ processor that integrates video, BIOS code that is included with each board. -

Convey Overview

THE WORLD’S FIRST HYBRID-CORE COMPUTER. CONVEY HYBRID-CORE COMPUTING Hybrid-core Computing Convey HC-1 High Performance of application- specific hardware Heterogenous solutions • can be much more efficient Performance/ • still hard to program Programmability and Power efficiency deployment ease of an x86 server Application Multicore solutions • don’t always scale well • parallel programming is hard Low Difficult Ease of Deployment Easy 1/22/2010 3 Hybrid-Core Computing Application-Specific Personalities Applications • Extend the x86 instruction set • Implement key operations in Convey Compilers hardware Life Sciences x86-64 ISA Custom ISA CAE Custom Financial Oil & Gas Shared Virtual Memory Cache-coherent, shared memory • Both ISAs address common memory *ISA: Instruction Set Architecture 7/12/2010 4 HC-1 Hardware PCI I/O FPGA FPGA Intel Personalities Chipset FPGA FPGA 8 GB/s 80 GB/s Memory Memory Cache Coherent, Shared Virtual Memory 1/22/2010 5 Using Personalities C/C++ Fortran • Personalities are user specifies reloadable personality at instruction sets Convey Software compile time Development Suite • Compiler instruction generates x86 descriptions and coprocessor instructions from Hybrid-Core Executable P ANSI standard x86-64 and Coprocessor Personalities C/C++ & Fortran Instructions • Executable can run on x86 nodes FPGA Convey HC-1 or Convey Hybrid- bitfiles Core nodes Intel x86 Coprocessor personality loaded at runtime by OS 1/22/2010 6 SYSTEM ARCHITECTURE HC-1 Architecture “Commodity” Intel Server Convey FPGA-based coprocessor Direct -

Powervr SGX Series5xt IP Core Family

PowerVR SGX Series5XT IP Core Family The PowerVR™ SGX Series5XT Graphics Processing Unit (GPU) IP core family is a series Features of highly efficient graphics acceleration IP cores that meet the multimedia requirements of • Most comprehensive IP core family the next generation of consumer, communications and computing applications. and roadmap in the industry PowerVR SGX Series5XT architecture is fully scalable for a wide range of area and • USSE2 delivers twice the peak performance requirements, enabling it to target markets from low cost feature-rich mobile floating point and instruction multimedia products to very high performance consoles and computing devices. throughput of Series5 USSE • YUV and colour space accelerators The family incorporates the second-generation Universal Scalable Shader Engine (USSE2™), for improved performance with a feature set that exceeds the requirements of OpenGL 2.0 and Microsoft Shader • Upgraded PowerVR Series5XT Model 3, enabling 2D, 3D and general purpose (GP-GPU) processing in a single core. shader-driven tile-based deferred rendering (TBDR) architecture • Multi-processor options enable scalability to higher performance • Support for all industry standard PowerVR SGX Family mobile and desktop graphics APIs and operating sytems Series5XT SGX543MP1-16, SGX544MP1-16, SGX554MP1-16 • Fully backwards compatible with PowerVR MBX and SGX Series5 Series5 SGX520, SGX530, SGX531, SGX535, SGX540, SGX545 Benefits Multi-standard API and OS • Extensive product line supports all area/performance requirements OpenGL -

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27

Case M:07-cv-01826-WHA Document 249 Filed 11/08/2007 Page 1 of 34 1 BOIES, SCHILLER & FLEXNER LLP WILLIAM A. ISAACSON (pro hac vice) 2 5301 Wisconsin Ave. NW, Suite 800 Washington, D.C. 20015 3 Telephone: (202) 237-2727 Facsimile: (202) 237-6131 4 Email: [email protected] 5 6 BOIES, SCHILLER & FLEXNER LLP BOIES, SCHILLER & FLEXNER LLP JOHN F. COVE, JR. (CA Bar No. 212213) PHILIP J. IOVIENO (pro hac vice) 7 DAVID W. SHAPIRO (CA Bar No. 219265) ANNE M. NARDACCI (pro hac vice) KEVIN J. BARRY (CA Bar No. 229748) 10 North Pearl Street 8 1999 Harrison St., Suite 900 4th Floor Oakland, CA 94612 Albany, NY 12207 9 Telephone: (510) 874-1000 Telephone: (518) 434-0600 Facsimile: (510) 874-1460 Facsimile: (518) 434-0665 10 Email: [email protected] Email: [email protected] [email protected] [email protected] 11 [email protected] 12 Attorneys for Plaintiff Jordan Walker Interim Class Counsel for Direct Purchaser 13 Plaintiffs 14 15 UNITED STATES DISTRICT COURT 16 NORTHERN DISTRICT OF CALIFORNIA 17 18 IN RE GRAPHICS PROCESSING UNITS ) Case No.: M:07-CV-01826-WHA ANTITRUST LITIGATION ) 19 ) MDL No. 1826 ) 20 This Document Relates to: ) THIRD CONSOLIDATED AND ALL DIRECT PURCHASER ACTIONS ) AMENDED CLASS ACTION 21 ) COMPLAINT FOR VIOLATION OF ) SECTION 1 OF THE SHERMAN ACT, 15 22 ) U.S.C. § 1 23 ) ) 24 ) ) JURY TRIAL DEMANDED 25 ) ) 26 ) ) 27 ) 28 THIRD CONSOLIDATED AND AMENDED CLASS ACTION COMPLAINT BY DIRECT PURCHASERS M:07-CV-01826-WHA Case M:07-cv-01826-WHA Document 249 Filed 11/08/2007 Page 2 of 34 1 Plaintiffs Jordan Walker, Michael Bensignor, d/b/a Mike’s Computer Services, Fred 2 Williams, and Karol Juskiewicz, on behalf of themselves and all others similarly situated in the 3 United States, bring this action for damages and injunctive relief under the federal antitrust laws 4 against Defendants named herein, demanding trial by jury, and complaining and alleging as 5 follows: 6 NATURE OF THE CASE 7 1. -

Driver Riva Tnt2 64

Driver riva tnt2 64 click here to download The following products are supported by the drivers: TNT2 TNT2 Pro TNT2 Ultra TNT2 Model 64 (M64) TNT2 Model 64 (M64) Pro Vanta Vanta LT GeForce. The NVIDIA TNT2™ was the first chipset to offer a bit frame buffer for better quality visuals at higher resolutions, bit color for TNT2 M64 Memory Speed. NVIDIA no longer provides hardware or software support for the NVIDIA Riva TNT GPU. The last Forceware unified display driver which. version now. NVIDIA RIVA TNT2 Model 64/Model 64 Pro is the first family of high performance. Drivers > Video & Graphic Cards. Feedback. NVIDIA RIVA TNT2 Model 64/Model 64 Pro: The first chipset to offer a bit frame buffer for better quality visuals Subcategory, Video Drivers. Update your computer's drivers using DriverMax, the free driver update tool - Display Adapters - NVIDIA - NVIDIA RIVA TNT2 Model 64/Model 64 Pro Computer. (In Windows 7 RC1 there was the build in TNT2 drivers). http://kemovitra. www.doorway.ru Use the links on this page to download the latest version of NVIDIA RIVA TNT2 Model 64/Model 64 Pro (Microsoft Corporation) drivers. All drivers available for. NVIDIA RIVA TNT2 Model 64/Model 64 Pro - Driver Download. Updating your drivers with Driver Alert can help your computer in a number of ways. From adding. Nvidia RIVA TNT2 M64 specs and specifications. Price comparisons for the Nvidia RIVA TNT2 M64 and also where to download RIVA TNT2 M64 drivers. Windows 7 and Windows Vista both fail to recognize the Nvidia Riva TNT2 ( Model64/Model 64 Pro) which means you are restricted to a low. -

(GPU) Computing

Graphics Processing Unit (GPU) computing This section describes the graphics processing unit (GPU) computing feature of OptiSystem. Note: The GPU computing feature is only configurable with OptiSystem Version 11 (or higher) What is GPU computing? GPU computing or GPGPU takes advantage of a computer’s grahics processing card to augment the speed of general purpose scientific and engineering computing tasks. Compute Unified Device Architecture (CUDA) implementation for OptiSystem NVIDIA revolutionized the GPGPU and accelerated computing when it introduced a new parallel computing architecture: Compute Unified Device Architecture (CUDA). CUDA is both a hardware and software architecture for issuing and managing computations within the GPU, thus allowing it to operate as a generic data-parallel computing device. CUDA allows the programmer to take advantage of the parallel computing power of an NVIDIA graphics card to perform general purpose computations. OptiSystem CUDA implementation The OptiSystem model for GPU computing involves using a central processing unit (CPU) and GPU together in a heterogeneous co-processing computing model. The sequential part of the application runs on the CPU and the computationally-intensive part is accelerated by the GPU. In the OptiSystem GPU programming model, the application has been modified to map the compute-intensive kernels to the GPU. The remainder of the application remains within the CPU. CUDA parallel computing architecture The NVIDIA CUDA parallel computing architecture is enabled on GeForce®, Quadro®, and Tesla™ products. Whereas GeForce and Quadro are designed for consumer graphics and professional visualization respectively, the Tesla product family is designed ground-up for parallel computing and offers exclusive computing 3 GRAPHICS PROCESSING UNIT (GPU) COMPUTING features, and is the recommended choice for the OptiSystem GPU. -

UNIT 8B a Full Adder

UNIT 8B Computer Organization: Levels of Abstraction 15110 Principles of Computing, 1 Carnegie Mellon University - CORTINA A Full Adder C ABCin Cout S in 0 0 0 A 0 0 1 0 1 0 B 0 1 1 1 0 0 1 0 1 C S out 1 1 0 1 1 1 15110 Principles of Computing, 2 Carnegie Mellon University - CORTINA 1 A Full Adder C ABCin Cout S in 0 0 0 0 0 A 0 0 1 0 1 0 1 0 0 1 B 0 1 1 1 0 1 0 0 0 1 1 0 1 1 0 C S out 1 1 0 1 0 1 1 1 1 1 ⊕ ⊕ S = A B Cin ⊕ ∧ ∨ ∧ Cout = ((A B) C) (A B) 15110 Principles of Computing, 3 Carnegie Mellon University - CORTINA Full Adder (FA) AB 1-bit Cout Full Cin Adder S 15110 Principles of Computing, 4 Carnegie Mellon University - CORTINA 2 Another Full Adder (FA) http://students.cs.tamu.edu/wanglei/csce350/handout/lab6.html AB 1-bit Cout Full Cin Adder S 15110 Principles of Computing, 5 Carnegie Mellon University - CORTINA 8-bit Full Adder A7 B7 A2 B2 A1 B1 A0 B0 1-bit 1-bit 1-bit 1-bit ... Cout Full Full Full Full Cin Adder Adder Adder Adder S7 S2 S1 S0 AB 8 ⁄ ⁄ 8 C 8-bit C out FA in ⁄ 8 S 15110 Principles of Computing, 6 Carnegie Mellon University - CORTINA 3 Multiplexer (MUX) • A multiplexer chooses between a set of inputs. D1 D 2 MUX F D3 D ABF 4 0 0 D1 AB 0 1 D2 1 0 D3 1 1 D4 http://www.cise.ufl.edu/~mssz/CompOrg/CDAintro.html 15110 Principles of Computing, 7 Carnegie Mellon University - CORTINA Arithmetic Logic Unit (ALU) OP 1OP 0 Carry In & OP OP 0 OP 1 F 0 0 A ∧ B 0 1 A ∨ B 1 0 A 1 1 A + B http://cs-alb-pc3.massey.ac.nz/notes/59304/l4.html 15110 Principles of Computing, 8 Carnegie Mellon University - CORTINA 4 Flip Flop • A flip flop is a sequential circuit that is able to maintain (save) a state. -

Exploiting Free Silicon for Energy-Efficient Computing Directly

Exploiting Free Silicon for Energy-Efficient Computing Directly in NAND Flash-based Solid-State Storage Systems Peng Li Kevin Gomez David J. Lilja Seagate Technology Seagate Technology University of Minnesota, Twin Cities Shakopee, MN, 55379 Shakopee, MN, 55379 Minneapolis, MN, 55455 [email protected] [email protected] [email protected] Abstract—Energy consumption is a fundamental issue in today’s data A well-known solution to the memory wall issue is moving centers as data continue growing dramatically. How to process these computing closer to the data. For example, Gokhale et al [2] data in an energy-efficient way becomes more and more important. proposed a processor-in-memory (PIM) chip by adding a processor Prior work had proposed several methods to build an energy-efficient system. The basic idea is to attack the memory wall issue (i.e., the into the main memory for computing. Riedel et al [9] proposed performance gap between CPUs and main memory) by moving com- an active disk by using the processor inside the hard disk drive puting closer to the data. However, these methods have not been widely (HDD) for computing. With the evolution of other non-volatile adopted due to high cost and limited performance improvements. In memories (NVMs), such as phase-change memory (PCM) and spin- this paper, we propose the storage processing unit (SPU) which adds computing power into NAND flash memories at standard solid-state transfer torque (STT)-RAM, researchers also proposed to use these drive (SSD) cost. By pre-processing the data using the SPU, the data NVMs as the main memory for data-intensive applications [10] to that needs to be transferred to host CPUs for further processing improve the system energy-efficiency. -

CUDA What Is GPGPU

What is GPGPU ? • General Purpose computation using GPU in applications other than 3D graphics CUDA – GPU accelerates critical path of application • Data parallel algorithms leverage GPU attributes – Large data arrays, streaming throughput Slides by David Kirk – Fine-grain SIMD parallelism – Low-latency floating point (FP) computation • Applications – see //GPGPU.org – Game effects (FX) physics, image processing – Physical modeling, computational engineering, matrix algebra, convolution, correlation, sorting Previous GPGPU Constraints CUDA • Dealing with graphics API per thread per Shader Input Registers • “Compute Unified Device Architecture” – Working with the corner cases per Context • General purpose programming model of the graphics API Fragment Program Texture – User kicks off batches of threads on the GPU • Addressing modes Constants – GPU = dedicated super-threaded, massively data parallel co-processor – Limited texture size/dimension Temp Registers • Targeted software stack – Compute oriented drivers, language, and tools Output Registers • Shader capabilities • Driver for loading computation programs into GPU FB Memory – Limited outputs – Standalone Driver - Optimized for computation • Instruction sets – Interface designed for compute - graphics free API – Data sharing with OpenGL buffer objects – Lack of Integer & bit ops – Guaranteed maximum download & readback speeds • Communication limited – Explicit GPU memory management – Between pixels – Scatter a[i] = p 1 Parallel Computing on a GPU Extended C • Declspecs • NVIDIA GPU Computing Architecture – global, device, shared, __device__ float filter[N]; – Via a separate HW interface local, constant __global__ void convolve (float *image) { – In laptops, desktops, workstations, servers GeForce 8800 __shared__ float region[M]; ... • Keywords • 8-series GPUs deliver 50 to 200 GFLOPS region[threadIdx] = image[i]; on compiled parallel C applications – threadIdx, blockIdx • Intrinsics __syncthreads() – __syncthreads .. -

A Configurable General Purpose Graphics Processing Unit for Power, Performance, and Area Analysis

Iowa State University Capstones, Theses and Creative Components Dissertations Summer 2019 A configurable general purpose graphics processing unit for power, performance, and area analysis Garrett Lies Follow this and additional works at: https://lib.dr.iastate.edu/creativecomponents Part of the Digital Circuits Commons Recommended Citation Lies, Garrett, "A configurable general purpose graphics processing unit for power, performance, and area analysis" (2019). Creative Components. 329. https://lib.dr.iastate.edu/creativecomponents/329 This Creative Component is brought to you for free and open access by the Iowa State University Capstones, Theses and Dissertations at Iowa State University Digital Repository. It has been accepted for inclusion in Creative Components by an authorized administrator of Iowa State University Digital Repository. For more information, please contact [email protected]. A Configurable General Purpose Graphics Processing Unit for Power, Performance, and Area Analysis by Garrett Joseph Lies A Creative Component submitted to the graduate faculty in partial fulfillment of the requirements for the degree of MASTER OF SCIENCE Major: Computer Engineering (Very-Large Scale Integration) Program of Study Committee: Joseph Zambreno, Major Professor The student author, whose presentation of the scholarship herein was approved by the program of study committee, is solely responsible for the content of this dissertation/thesis. The Graduate College will ensure this dissertation/thesis is globally accessible and will not permit alterations after a degree is conferred. Iowa State University Ames, Iowa 2019 Copyright c Garrett Joseph Lies, 2019. All rights reserved. ii TABLE OF CONTENTS Page LIST OF TABLES . iv LIST OF FIGURES . .v ACKNOWLEDGMENTS . vi ABSTRACT . vii CHAPTER 1. -

On the Energy Efficiency of Graphics Processing Units for Scientific

On the Energy Efficiency of Graphics Processing Units for Scientific Computing S. Huang, S. Xiao, W. Feng Department of Computer Science Virginia Tech {huangs, shucai, wfeng}@vt.edu Abstract sively parallel, multi-threaded multiprocessor design, combined with an appropriate data-parallel mapping of The graphics processing unit (GPU) has emerged as an application to it. a computational accelerator that dramatically reduces The emergence of more general-purpose program- the time to discovery in high-end computing (HEC). ming environments, such as Brook+ [1] and CUDA [2], However, while today’s state-of-the-art GPU can easily has made it easier to implement large-scale applica- reduce the execution time of a parallel code by many tions on GPUs. The CUDA programming model from orders of magnitude, it arguably comes at the expense NVIDIA was created for developing applications on of significant power and energy consumption. For ex- NVIDIA’s GPU cards, such as the GeForce 8 series. ample, the NVIDIA GTX 280 video card is rated at Similarly, Brook+ supports stream processing on the 236 watts, which is as much as the rest of a compute AMD/ATi GPU cards. For the purposes of this paper, node, thus requiring a 500-W power supply. As a we use CUDA atop an NVIDIA GTX 280 video card consequence, the GPU has been viewed as a “non- for our scientific computation. green” computing solution. GPGPU research has focused predominantly on ac- This paper seeks to characterize, and perhaps de- celerating scientific applications. This paper not only bunk, the notion of a “power-hungry GPU” via an accelerates scientific applications via GPU computing, empirical study of the performance, power, and en- but it also does so in the context of characterizing ergy characteristics of GPUs for scientific computing. -

Comparing the Power and Performance of Intel's SCC to State

Comparing the Power and Performance of Intel’s SCC to State-of-the-Art CPUs and GPUs Ehsan Totoni, Babak Behzad, Swapnil Ghike, Josep Torrellas Department of Computer Science, University of Illinois at Urbana-Champaign, Urbana, IL 61801, USA E-mail: ftotoni2, bbehza2, ghike2, [email protected] Abstract—Power dissipation and energy consumption are be- A key architectural challenge now is how to support in- coming increasingly important architectural design constraints in creasing parallelism and scale performance, while being power different types of computers, from embedded systems to large- and energy efficient. There are multiple options on the table, scale supercomputers. To continue the scaling of performance, it is essential that we build parallel processor chips that make the namely “heavy-weight” multi-cores (such as general purpose best use of exponentially increasing numbers of transistors within processors), “light-weight” many-cores (such as Intel’s Single- the power and energy budgets. Intel SCC is an appealing option Chip Cloud Computer (SCC) [1]), low-power processors (such for future many-core architectures. In this paper, we use various as embedded processors), and SIMD-like highly-parallel archi- scalable applications to quantitatively compare and analyze tectures (such as General-Purpose Graphics Processing Units the performance, power consumption and energy efficiency of different cutting-edge platforms that differ in architectural build. (GPGPUs)). These platforms include the Intel Single-Chip Cloud Computer The Intel SCC [1] is a research chip made by Intel Labs (SCC) many-core, the Intel Core i7 general-purpose multi-core, to explore future many-core architectures. It has 48 Pentium the Intel Atom low-power processor, and the Nvidia ION2 (P54C) cores in 24 tiles of two cores each.