Optimized Declarative Transformation First Eclipse Qvtc Results


Recommended publications


The Model Transformation Language of the VIATRA2 Framework

Science of Computer Programming 68 (2007) 214–234, www.elsevier.com/locate/scico. The model transformation language of the VIATRA2 framework. Dániel Varró, András Balogh. Budapest University of Technology and Economics, Department of Measurement and Information Systems, H-1117, Magyar tudósok krt. 2., Budapest, Hungary; OptXware Research and Development LLC, Budapest, Hungary. Received 15 August 2006; received in revised form 17 April 2007; accepted 14 May 2007. Available online 5 July 2007.

Abstract: We present the model transformation language of the VIATRA2 framework, which provides a rule- and pattern-based transformation language for manipulating graph models by combining graph transformation and abstract state machines into a single specification paradigm. This language offers advanced constructs for querying (e.g. recursive graph patterns) and manipulating models (e.g. generic transformation and meta-transformation rules) in unidirectional model transformations frequently used in formal model analysis to carry out powerful abstractions. © 2007 Elsevier B.V. All rights reserved. Keywords: Model transformation; Graph transformation; Abstract state machines.

1. Introduction: The crucial role of model transformation (MT) languages and tools for the overall success of model-driven system development has been revealed in many surveys and papers in recent years. To provide standardized support for capturing queries, views and transformations between modeling languages defined by their standard MOF metamodels, the Object Management Group (OMG) has recently issued the QVT standard. QVT provides an intuitive, pattern-based, bidirectional model transformation language, which is especially useful for synchronization-style transformations between semantically equivalent modeling languages.

Model Transformation Languages Under a Magnifying Glass: A Controlled Experiment with Xtend, ATL, And

Provided by The IT University of Copenhagen's Repository. Model Transformation Languages under a Magnifying Glass: A Controlled Experiment with Xtend, ATL, and QVT. Regina Hebig (Chalmers | University of Gothenburg, Sweden), Christoph Seidl (Technische Universität Braunschweig, Germany), Thorsten Berger (Chalmers | University of Gothenburg, Sweden), John Kook Pedersen (IT University of Copenhagen, Denmark), Andrzej Wąsowski (IT University of Copenhagen, Denmark).

Abstract: In Model-Driven Software Development, models are automatically processed to support the creation, build, and execution of systems. A large variety of dedicated model-transformation languages exists, promising to efficiently realize the automated processing of models. To investigate the actual benefit of using such specialized languages, we performed a large-scale controlled experiment in which over 78 subjects solve 231 individual tasks using three languages. The experiment sheds light on commonalities and differences between model transformation languages (ATL, QVT-O) and on benefits of using them in common development tasks (comprehension, change, and creation) against a modern general-purpose language (Xtend). Our results show no statistically significant benefit of using a dedicated transformation language over a modern general-purpose language. However, we were able to identify several aspects of transformation programming where domain-specific transformation languages do

[Figure 1: Syntax model for source code. Classes: NamedElement (name : EString), Project, Package, Class, StructuralElement (modifiers : Modifier), Attribute, Method. Enumeration Modifier: PUBLIC, STATIC, FINAL, PRIVATE. References with multiplicity [0..*]: packages, subpackages, elements, classes.]

1 Introduction: In Model-Driven Software Development (MDSD) [9, 35, 38] models are automatically processed to support creation, build and execution of systems.

Data Transformation Language (DTL)

DTL: Data Transformation Language. Phillip H. Sherrod. Copyright © 2005-2006. All rights reserved. www.dtreg.com. DTL is a full programming language built into the DTREG program. DTL makes it easy to generate new variables, transform and combine input variables and select records to be used in the analysis.

Contents: Introduction (Introduction to the DTL Language); Using DTL For Data Transformations (The main() function; Global Variables: Implicit Global Variables, Explicit Global Variables, Static Global Variables)

Model-Based Hardware Generation and Programming - the MADES Approach

Model-based hardware generation and programming - the MADES approach. Ian Gray, Nikos Matragkas, Neil C. Audsley, Leandro Soares Indrusiak, Dimitris Kolovos, Richard Paige. University of York, York, U.K.

Abstract: This paper gives an overview of the model-based hardware generation and programming approach proposed within the MADES project. MADES aims to develop a model-driven development process for safety-critical, real-time embedded systems. MADES defines a systems modelling language based on subsets of MARTE and SysML that allows iterative refinement from high-level specification down to final implementation. The MADES project specifically focusses on three unique features which differentiate it from existing model-driven development frameworks. First, model transformations in the Epsilon modelling framework are used to move between system models and provide traceability. Second, the Zot verification tool is employed to allow early and frequent verification of the system being developed. Third, Compile-Time Virtualisation is used to automatically retarget architecturally-neutral software for execution on complex embedded architectures. This paper concentrates on MADES's approach to the specification of hardware and the way in which software is refactored by Compile-Time Virtualisation.

... the proposed hardware development flow and the way that embedded software can be targeted at the resulting complex architectures.

1.1 MADES Project Goals: The MADES project (Model-based methods and tools for Avionics and surveillance embeddeD systEmS) aims to develop the elements of a fully model-driven approach for the design, validation, simulation, and code generation of complex embedded systems to improve the current practice in the field. MADES differentiates itself from similar projects in that it covers all the phases of the development process: from system

Feather: a Feature Model Transformation Language

Feather: A Feature Model Transformation Language. Ahmet Serkan Karataş.

Abstract: Feature modeling has been a very popular approach for variability management in software product lines. Building a feature model requires substantial domain expertise; however, even experts cannot foresee all future possibilities. Changing requirements can force a feature model to evolve in order to adapt to the new conditions. Feather is a language to describe model transformations that will evolve a feature model. This article presents the structure and foundations of Feather. First, the language elements, which consist of declarations to characterize the model to evolve and commands to manipulate its structure, are introduced. Then, semantics grounded in feature model properties are given for the commands in order to provide precise command definitions. Next, an interpreter that can realize the transformations described by the commands in a Feather script is presented.

... area of usage to include dynamic systems that can face fluctuating context conditions, changing user requirements, need to include and facilitate novel resources rapidly, and so on. The constant need for adaptation required to enhance the variability management capabilities of SPLs to cover runtime, which gave birth to the dynamic software product lines (DSPLs). In addition to the properties they inherit from SPLs, DSPLs have several other properties such as dynamic variability management, flexible variation points and binding times, context awareness, and self-adaptability [21]. A major factor affecting the success of a software product line, classic or dynamic, is the variability management activity it incorporates [12]. In the literature there are reports of various variability modeling and management approaches such as

Comparative Studies of 10 Programming Languages Within 10 Diverse Criteria Revision 1.0

Comparative Studies of 10 Programming Languages within 10 Diverse Criteria, Revision 1.0. Rana Naim, Mohammad Fahim Nizam, Sheetal Hanamasagar, Jalal Noureddine, Marinela Miladinova. Concordia University, Montreal, Quebec, Canada.

Abstract: This is a survey on the programming languages: C++, JavaScript, AspectJ, C#, Haskell, Java, PHP, Scala, Scheme, and BPEL. Our survey work involves a comparative study of these ten programming languages with respect to the following criteria: secure programming practices, web application development, web service composition, OOP-based abstractions, reflection, aspect orientation, functional programming, declarative programming, batch scripting, and UI prototyping. We study these languages in the context of the above-mentioned criteria and the level of support they provide for each one of them. Keywords: programming languages, programming paradigms, language features, language design and implementation.

1 Introduction: Choosing the best language that would satisfy all requirements for the given problem domain can be a difficult task. Some languages are better suited for specific applications than others. In order to select the proper one for the specific problem domain, one has to know what features it provides to support the requirements. Different languages support different paradigms, provide different abstractions, and have different levels of expressive power. Some are better suited to express algorithms and others target non-technical users. The question is then what is the best tool for a particular problem.

WOL: a Language for Database Transformations and Constraints

University of Pennsylvania ScholarlyCommons, Database Research Group (CIS), Department of Computer & Information Science, April 1997. WOL: A Language for Database Transformations and Constraints. Susan B. Davidson (University of Pennsylvania), Anthony S. Kosky (University of California).

Davidson, Susan B. and Kosky, Anthony S., "WOL: A Language for Database Transformations and Constraints" (1997). Database Research Group (CIS). 13. https://repository.upenn.edu/db_research/13. Copyright 1997 IEEE. Reprinted from Proceedings of the 13th International Conference on Data Engineering, April 1997, pages 55-65.

Abstract: The need to transform data between heterogeneous databases arises from a number of critical tasks in data management. These tasks are complicated by schema evolution in the underlying databases, and by the presence of non-standard database constraints. We describe a declarative language, WOL, for specifying such transformations, and its implementation in a system called Morphase.

SPOT: a DSL for Extending Fortran Programs with Metaprogramming

Hindawi Publishing Corporation, Advances in Software Engineering, Volume 2014, Article ID 917327, 23 pages, http://dx.doi.org/10.1155/2014/917327. Research Article. SPOT: A DSL for Extending Fortran Programs with Metaprogramming. Songqing Yue and Jeff Gray, Department of Computer Science, University of Alabama, Tuscaloosa, AL 35401, USA. Correspondence should be addressed to Songqing Yue. Received 8 July 2014; Revised 27 October 2014; Accepted 12 November 2014; Published 17 December 2014. Academic Editor: Robert J. Walker. Copyright © 2014 S. Yue and J. Gray. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Metaprogramming has shown much promise for improving the quality of software by offering programming language techniques to address issues of modularity, reusability, maintainability, and extensibility. Thus far, the power of metaprogramming has not been explored deeply in the area of high performance computing (HPC). There is a vast body of legacy code written in Fortran running throughout the HPC community. In order to facilitate software maintenance and evolution in HPC systems, we introduce a DSL that can be used to perform source-to-source translation of Fortran programs by providing a higher level of abstraction for specifying program transformations. The underlying transformations are actually carried out through a metaobject protocol (MOP) and a code generator is responsible for translating a SPOT program to the corresponding MOP code. The design focus of the framework is to automate program transformations through techniques of code generation, so that developers only need to specify desired transformations while being oblivious to the details about how the transformations are performed.

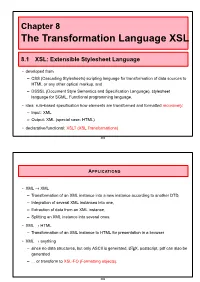

The Transformation Language XSL

Chapter 8: The Transformation Language XSL

8.1 XSL: Extensible Stylesheet Language

• developed from
– CSS (Cascading Stylesheets), scripting language for transformation of data sources to HTML or any other optical markup, and
– DSSSL (Document Style Semantics and Specification Language), stylesheet language for SGML. Functional programming language.
• idea: rule-based specification how elements are transformed and formatted recursively:
– Input: XML
– Output: XML (special case: HTML)
• declarative/functional: XSLT (XSL Transformations)

APPLICATIONS

• XML → XML
– Transformation of an XML instance into a new instance according to another DTD,
– Integration of several XML instances into one,
– Extraction of data from an XML instance,
– Splitting an XML instance into several ones.
• XML → HTML
– Transformation of an XML instance to HTML for presentation in a browser
• XML → anything
– since no data structures, but only ASCII is generated, LaTeX, postscript, pdf can also be generated
– ... or transform to XSL-FO (Formatting objects).

THE LANGUAGE(S) XSL

Partitioned into two sublanguages:
• functional programming language: XSLT, "understood" by XSLT-Processors (e.g. xt, xalan, saxon, xsltproc ...)
• generic language for document-markup: XSL-FO, "understood" by XSL-FO-enabled browsers that transform the XSL-FO-markup according to an internal specification into a direct (screen/printable) presentation (similar to LaTeX)
• programming paradigm: self-organizing tree-walking
• XSL itself is written in XML-Syntax. It uses the namespace prefixes "xsl:" and "fo:", bound to http://www.w3.org/1999/XSL/Transform and http://www.w3.org/1999/XSL/Format.
• XSL programs can be seen as XML data.
• it can be combined with other languages that also have an XML-Syntax (and an own namespace).
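The rule-based, recursive tree-walking idea in this excerpt can be illustrated with a minimal sketch. It is written in Python with the standard-library `xml.etree.ElementTree` module rather than in XSLT, and the `RULES` table, the `transform` function, and the sample element names are hypothetical, chosen only for illustration; none of them come from the lecture notes.

```python
import xml.etree.ElementTree as ET

# Hypothetical rule table (an assumption for this sketch):
# each source element name maps to the element name it becomes in the output.
RULES = {"doc": "html", "title": "h1", "para": "p"}

def transform(node):
    """Apply the rule matching this node, then recurse into its children."""
    # Elements without a rule are copied under their original name.
    out = ET.Element(RULES.get(node.tag, node.tag))
    out.text = node.text
    out.tail = node.tail
    for child in node:
        out.append(transform(child))
    return out

# Input: XML. Output: XML (here the special case HTML).
source = ET.fromstring("<doc><title>XSL</title><para>Rule-based.</para></doc>")
result = ET.tostring(transform(source), encoding="unicode")
print(result)
```

A real XSLT stylesheet would express each entry of the rule table as a template and leave the traversal to the processor; the sketch only mirrors that division between per-element rules and the recursive walk.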

Malan: a Mapping Language for the Data Manipulation Arnaud Blouin, Olivier Beaudoux, S

Arnaud Blouin, Olivier Beaudoux, S. Loiseau. Malan: A Mapping Language for the Data Manipulation. ACM symposium on Document engineering, Sep 2008, Sao Paulo, Brazil. pp.66–75, 10.1145/1410140.1410153. hal-00470261. HAL Id: hal-00470261, https://hal.archives-ouvertes.fr/hal-00470261. Submitted on 5 Apr 2010.

Malan: A Mapping Language for the Data Manipulation. Arnaud Blouin (GRI - ESEO, Angers, France), Olivier Beaudoux (GRI - ESEO, Angers, France), Stéphane Loiseau (LERIA, University of Angers, Angers, France).

Abstract: Malan is a MApping LANguage that allows the generation of transformation programs by specifying a schema mapping between a source and target data schema. By working at the schema level, Malan remains independent of any transfor-

... it does not guarantee the correctness of the transformation process. Working at the schema level by specifying schema mappings avoids such drawbacks. In our previous work [8], we started the application of the mapping concept to document transformation.

QVT Declarative Documentation

QVT Declarative Documentation. Edward Willink and contributors. Copyright 2009 - 2016. Eclipse QVTd 0.14.0.

1. Overview and Getting Started
1.1. What is QVT?
1.1.1. Modeling Layers
1.2. How Does It Work?
1.2.1. Editing
1.2.2. Execution
1.2.3. Debugger
1.3. Who is Behind Eclipse QVTd?
1.4. Getting Started
1.4.1. QVTr Example Project
1.4.2. QVTc Example Project
1.5. Extensions
1.5.1. Import

Feature-Based Comparison of Language Transformation Tools

Feature-Based Comparison of Language Transformation Tools. Muhammad Ilyas (1), Saad Razzaq (2), Fahad Maqbool (3), Qaiser Abbas (4), Wakeel Ahmad (5), Syed M Adnan (6). (1-4) Department of Computer Science and IT, University of Sargodha, Sargodha, Pakistan; (5-6) Department of Computer Science, University of Wah, Wah Cantt, Pakistan.

Abstract: Code transformation is the best option when switching from a former technology to the next one. Our paper presents a comparative analysis of code transformation tools based on 18 different factors. These factors include classes, pointers, access specifiers, functions, and exceptions. For this purpose, we have selected VaryCode, Telerik, Multi-online converter, and InstantVB. The source language considered is C sharp (C#) and the target language is Visual Basic (VB). Results show that VaryCode is the best among the four tools, as its converted programs throw fewer errors and require only minor changes to run. Key words: Code transformation, Multiconverter, Stylistic Feature, Code Smell, Author style.

1. Introduction: Source to source compilation is a process of converting source code written in one high-level language to itself or other high-level languages [1]. It is a refactoring process that is helpful when the programs to be refactored are outside the control of the original implementer.

... more readable for the developer. Due to the rapid improvement in programming languages, companies want to migrate their software to new updated/upgraded code. Developing code from scratch each time is difficult because it is a complex and cumbersome activity. The automatic transformation of the code is always very favorable and speeds up the work.