Optimisation of Ad-Hoc Analysis of an OLAP Cube Using Sparksql

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Beyond Relational Databases

EXPERT ANALYSIS BY MARCOS ALBE, SUPPORT ENGINEER, PERCONA Beyond Relational Databases: A Focus on Redis, MongoDB, and ClickHouse Many of us use and love relational databases… until we try and use them for purposes which aren’t their strong point. Queues, caches, catalogs, unstructured data, counters, and many other use cases, can be solved with relational databases, but are better served by alternative options. In this expert analysis, we examine the goals, pros and cons, and the good and bad use cases of the most popular alternatives on the market, and look into some modern open source implementations. Beyond Relational Databases Developers frequently choose the backend store for the applications they produce. Amidst dozens of options, buzzwords, industry preferences, and vendor offers, it’s not always easy to make the right choice… Even with a map! !# O# d# "# a# `# @R*7-# @94FA6)6 =F(*I-76#A4+)74/*2(:# ( JA$:+49>)# &-)6+16F-# (M#@E61>-#W6e6# &6EH#;)7-6<+# &6EH# J(7)(:X(78+# !"#$%&'( S-76I6)6#'4+)-:-7# A((E-N# ##@E61>-#;E678# ;)762(# .01.%2%+'.('.$%,3( @E61>-#;(F7# D((9F-#=F(*I## =(:c*-:)U@E61>-#W6e6# @F2+16F-# G*/(F-# @Q;# $%&## @R*7-## A6)6S(77-:)U@E61>-#@E-N# K4E-F4:-A%# A6)6E7(1# %49$:+49>)+# @E61>-#'*1-:-# @E61>-#;6<R6# L&H# A6)6#'68-# $%&#@:6F521+#M(7#@E61>-#;E678# .761F-#;)7-6<#LNEF(7-7# S-76I6)6#=F(*I# A6)6/7418+# @ !"#$%&'( ;H=JO# ;(\X67-#@D# M(7#J6I((E# .761F-#%49#A6)6#=F(*I# @ )*&+',"-.%/( S$%=.#;)7-6<%6+-# =F(*I-76# LF6+21+-671># ;G';)7-6<# LF6+21#[(*:I# @E61>-#;"# @E61>-#;)(7<# H618+E61-# *&'+,"#$%&'$#( .761F-#%49#A6)6#@EEF46:1-# -

Mapreduce Service

MapReduce Service Troubleshooting Issue 01 Date 2021-03-03 HUAWEI TECHNOLOGIES CO., LTD. Copyright © Huawei Technologies Co., Ltd. 2021. All rights reserved. No part of this document may be reproduced or transmitted in any form or by any means without prior written consent of Huawei Technologies Co., Ltd. Trademarks and Permissions and other Huawei trademarks are trademarks of Huawei Technologies Co., Ltd. All other trademarks and trade names mentioned in this document are the property of their respective holders. Notice The purchased products, services and features are stipulated by the contract made between Huawei and the customer. All or part of the products, services and features described in this document may not be within the purchase scope or the usage scope. Unless otherwise specified in the contract, all statements, information, and recommendations in this document are provided "AS IS" without warranties, guarantees or representations of any kind, either express or implied. The information in this document is subject to change without notice. Every effort has been made in the preparation of this document to ensure accuracy of the contents, but all statements, information, and recommendations in this document do not constitute a warranty of any kind, express or implied. Issue 01 (2021-03-03) Copyright © Huawei Technologies Co., Ltd. i MapReduce Service Troubleshooting Contents Contents 1 Account Passwords.................................................................................................................. 1 1.1 Resetting -

Benchmarking Distributed Data Warehouse Solutions for Storing Genomic Variant Information

Research Collection Journal Article Benchmarking distributed data warehouse solutions for storing genomic variant information Author(s): Wiewiórka, Marek S.; Wysakowicz, David P.; Okoniewski, Michał J.; Gambin, Tomasz Publication Date: 2017-07-11 Permanent Link: https://doi.org/10.3929/ethz-b-000237893 Originally published in: Database 2017, http://doi.org/10.1093/database/bax049 Rights / License: Creative Commons Attribution 4.0 International This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library Database, 2017, 1–16 doi: 10.1093/database/bax049 Original article Original article Benchmarking distributed data warehouse solutions for storing genomic variant information Marek S. Wiewiorka 1, Dawid P. Wysakowicz1, Michał J. Okoniewski2 and Tomasz Gambin1,3,* 1Institute of Computer Science, Warsaw University of Technology, Nowowiejska 15/19, Warsaw 00-665, Poland, 2Scientific IT Services, ETH Zurich, Weinbergstrasse 11, Zurich 8092, Switzerland and 3Department of Medical Genetics, Institute of Mother and Child, Kasprzaka 17a, Warsaw 01-211, Poland *Corresponding author: Tel.: þ48693175804; Fax: þ48222346091; Email: [email protected] Citation details: Wiewiorka,M.S., Wysakowicz,D.P., Okoniewski,M.J. et al. Benchmarking distributed data warehouse so- lutions for storing genomic variant information. Database (2017) Vol. 2017: article ID bax049; doi:10.1093/database/bax049 Received 15 September 2016; Revised 4 April 2017; Accepted 29 May 2017 Abstract Genomic-based personalized medicine encompasses storing, analysing and interpreting genomic variants as its central issues. At a time when thousands of patientss sequenced exomes and genomes are becoming available, there is a growing need for efficient data- base storage and querying. -

Building an Effective Data Warehousing for Financial Sector

Automatic Control and Information Sciences, 2017, Vol. 3, No. 1, 16-25 Available online at http://pubs.sciepub.com/acis/3/1/4 ©Science and Education Publishing DOI:10.12691/acis-3-1-4 Building an Effective Data Warehousing for Financial Sector José Ferreira1, Fernando Almeida2, José Monteiro1,* 1Higher Polytechnic Institute of Gaya, V.N.Gaia, Portugal 2Faculty of Engineering of Oporto University, INESC TEC, Porto, Portugal *Corresponding author: [email protected] Abstract This article presents the implementation process of a Data Warehouse and a multidimensional analysis of business data for a holding company in the financial sector. The goal is to create a business intelligence system that, in a simple, quick but also versatile way, allows the access to updated, aggregated, real and/or projected information, regarding bank account balances. The established system extracts and processes the operational database information which supports cash management information by using Integration Services and Analysis Services tools from Microsoft SQL Server. The end-user interface is a pivot table, properly arranged to explore the information available by the produced cube. The results have shown that the adoption of online analytical processing cubes offers better performance and provides a more automated and robust process to analyze current and provisional aggregated financial data balances compared to the current process based on static reports built from transactional databases. Keywords: data warehouse, OLAP cube, data analysis, information system, business intelligence, pivot tables Cite This Article: José Ferreira, Fernando Almeida, and José Monteiro, “Building an Effective Data Warehousing for Financial Sector.” Automatic Control and Information Sciences, vol. -

Desarrollo De Una Solución Business Intelligence Mediante Un Paradigma De Data Lake

Desarrollo de una solución Business Intelligence mediante un paradigma de Data Lake José María Tagarro Martí Grado de Ingeniería Informática Consultor: Humberto Andrés Sanz Profesor: Atanasi Daradoumis Haralabus 13 de enero de 2019 Esta obra está sujeta a una licencia de Reconocimiento-NoComercial-SinObraDerivada 3.0 España de Creative Commons FICHA DEL TRABAJO FINAL Desarrollo de una solución Business Intelligence mediante un paradigma de Data Título del trabajo: Lake Nombre del autor: José María Tagarro Martí Nombre del consultor: Humberto Andrés Sanz Fecha de entrega (mm/aaaa): 01/2019 Área del Trabajo Final: Business Intelligence Titulación: Grado de Ingeniería Informática Resumen del Trabajo (máximo 250 palabras): Este trabajo implementa una solución de Business Intelligence siguiendo un paradigma de Data Lake sobre la plataforma de Big Data Apache Hadoop con el objetivo de ilustrar sus capacidades tecnológicas para este fin. Los almacenes de datos tradicionales necesitan transformar los datos entrantes antes de ser guardados para que adopten un modelo preestablecido, en un proceso conocido como ETL (Extraer, Transformar, Cargar, por sus siglas en inglés). Sin embargo, el paradigma Data Lake propone el almacenamiento de los datos generados en la organización en su propio formato de origen, de manera que con posterioridad puedan ser transformados y consumidos mediante diferentes tecnologías ad hoc para las distintas necesidades de negocio. Como conclusión, se indican las ventajas e inconvenientes de desplegar una plataforma unificada tanto para análisis Big Data como para las tareas de Business Intelligence, así como la necesidad de emplear soluciones basadas en código y estándares abiertos. Abstract (in English, 250 words or less): This paper implements a Business Intelligence solution following the Data Lake paradigm on Hadoop’s Big Data platform with the aim of showcasing the technology for this purpose. -

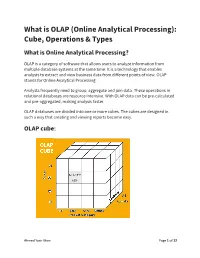

What Is OLAP (Online Analytical Processing): Cube, Operations & Types What Is Online Analytical Processing?

What is OLAP (Online Analytical Processing): Cube, Operations & Types What is Online Analytical Processing? OLAP is a category of software that allows users to analyze information from multiple database systems at the same time. It is a technology that enables analysts to extract and view business data from different points of view. OLAP stands for Online Analytical Processing. Analysts frequently need to group, aggregate and join data. These operations in relational databases are resource intensive. With OLAP data can be pre-calculated and pre-aggregated, making analysis faster. OLAP databases are divided into one or more cubes. The cubes are designed in such a way that creating and viewing reports become easy. OLAP cube: Ahmed Yasir Khan Page 1 of 12 At the core of the OLAP, concept is an OLAP Cube. The OLAP cube is a data structure optimized for very quick data analysis. The OLAP Cube consists of numeric facts called measures which are categorized by dimensions. OLAP Cube is also called the hypercube. Usually, data operations and analysis are performed using the simple spreadsheet, where data values are arranged in row and column format. This is ideal for two- dimensional data. However, OLAP contains multidimensional data, with data usually obtained from a different and unrelated source. Using a spreadsheet is not an optimal option. The cube can store and analyze multidimensional data in a logical and orderly manner. How does it work? A Data warehouse would extract information from multiple data sources and formats like text files, excel sheet, multimedia files, etc. The extracted data is cleaned and transformed. -

SAS 9.1 OLAP Server: Administrator’S Guide, Please Send Them to Us on a Photocopy of This Page, Or Send Us Electronic Mail

SAS® 9.1 OLAP Server Administrator’s Guide The correct bibliographic citation for this manual is as follows: SAS Institute Inc. 2004. SAS ® 9.1 OLAP Server: Administrator’s Guide. Cary, NC: SAS Institute Inc. SAS® 9.1 OLAP Server: Administrator’s Guide Copyright © 2004, SAS Institute Inc., Cary, NC, USA All rights reserved. Produced in the United States of America. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, or otherwise, without the prior written permission of the publisher, SAS Institute Inc. U.S. Government Restricted Rights Notice. Use, duplication, or disclosure of this software and related documentation by the U.S. government is subject to the Agreement with SAS Institute and the restrictions set forth in FAR 52.227–19 Commercial Computer Software-Restricted Rights (June 1987). SAS Institute Inc., SAS Campus Drive, Cary, North Carolina 27513. 1st printing, January 2004 SAS Publishing provides a complete selection of books and electronic products to help customers use SAS software to its fullest potential. For more information about our e-books, e-learning products, CDs, and hard-copy books, visit the SAS Publishing Web site at support.sas.com/pubs or call 1-800-727-3228. SAS® and all other SAS Institute Inc. product or service names are registered trademarks or trademarks of SAS Institute Inc. in the USA and other countries. ® indicates USA registration. Other brand and product names are registered trademarks or trademarks -

Improving Traveling Habits Using an OLAP Cube

Improving traveling habits using an OLAP cube Development of a business intelligence system Marcus Hellman Marcus Hellman Spring 2016 Master Thesis in Computing Science, 30 hp Supervisor: Anders Broberg Extern Supervisor: Kim Nilsson Examiner: Henrik Bjorklund¨ Umea˚ University, Department of Computing Science Abstract The aim of this thesis is to improve the traveling habits of clients using the SpaceTime system when arranging their travels. The improvement of traveling habits refers to lowering costs and emissions generated by the travels. To do this, a business intelligence system, including an OLAP cube, were created to provide the clients with feedback on how they travel. This to make it possible to see if they are improving and how much they have saved, both in money and emissions. Since these kind of systems often are quite complex, studies on best practices and how to keep such systems agile were performed to be able to provide a system of high quality. During this project, it was found that the pre-study and design phase were just as challenging as the creation of the designed components. The result of this project was a business intelligence system, including ETL, a Data warehouse, and an OLAP cube that will be used in the SpaceTime system as well as mock-ups presenting how data from the OLAP cube could be presented in the SpaceTime web-application in the future. Acknowledgements First, I would like to thank SpaceTime and Dohi for giving me the opportunity to do this the- sis. Also, I would like to thank my supervisors Anders Broberg and Kim Nilsson for all the help during this thesis project, providing valuable feedback on my thesis report throughout the project as well as guiding me through the introduction and development of the system. -

Constructing OLAP Cubes Based on Queries

Constructing OLAP Cubes Based on Queries Tapio Niemi Jyrki Nummenmaa Peter Thanisch Department of Computer and Department of Computer and Department of Computer Science, Information Sciences Information Sciences University of Edinburgh FIN-33014 University of Tampere FIN-33014 University of Tampere Edinburgh, EH9 3JZ Finland Finland Scotland +358 32156595 +358 405277999 +44 7968401525 [email protected] [email protected] [email protected] ABSTRACT The design of a cube is based on knowledge of the application area and the types of queries the users are expected to pose. An On-Line Analytical Processing (OLAP) user often follows a Since constructing an OLAP cube can be a difficult task for the train of thought, posing a sequence of related queries against the end user, it is often seen as the duty of the data warehouse data warehouse. Although their details are not known in advance, administrator. This has led to researchers and vendors regarding the general form of those queries is apparent beforehand. Thus, the OLAP cube as a static storage structure for data warehouse the user can outline the relevant portion of the data posing data. This is problematic since the user often wants to make new generalised queries against a cube representing the data kinds of queries, which may also need new OLAP cubes. The warehouse. same cube is not always practical for different analysis tasks, Since existing OLAP design methods are not suitable for non- since the structure of the cube has a notable effect on efficiency professionals, we present a technique that automates cube design and ease of posing queries. -

Sushil Thomas & Steve Wooledge

Sushil Thomas & Steve Wooledge Sushil Thomas & Steve Modern Business Intelligence: Leading the Way for Big Data Success Sushil Thomas & Steve Wooledge Modern Business Intelligence: Leading the Way to Big Data Success Sushil Thomas & Steve Wooledge No part of this book’s contents may be used for any other purpose, or reproduced by any means, electronic or mechanical, without the express prior written permission of Arcadia Data Inc. 999 Baker Way, Suite 120 San Mateo, CA 94404 Visit us on the web: www.arcadiadata.com The text, graphics and examples included herein are for the purpose of illustration and reference only. The specifications on which they are based are subject to change without notice. No legal or accounting advice is provided hereunder. Arcadia Data reserves the right to revise or withdraw this document or any part there- of at any time. Copyright © 2017 Arcadia Data Inc. All rights reserved. Other company and brand products and service names are trademarks or registered trademarks of their respective holders. ISBN: 978-0-692-94721-0 Contents Introduction ...........................................................................................ix Chapter 1: A Brief Overview of the Big Data Ecosystem ..............................1 The Big Data Ecosystem Starts with Apache Hadoop 2 In with the New — and the Old, Too 4 Make Way for Spark 6 Other Platforms: NoSQL, NewSQL, Object Stores 9 Chapter 2: BI and Analytics Meet Business Transformation ...................... 14 What is the Difference between BI and Analytics? 15 A Brief History of BI 17 Wither the RDBMS? Not So Fast… 21 The Present and Future of Enterprise Reporting 22 Self-Service BI 23 Hadoop Analytics Case Study 24 Chapter 3: Rise of the Citizen Data Scientist ...........................................27 The Imperative of User-Friendly Analytics 29 Hadoop as the Platform Game-Changer 31 Data Visualization Comes to the Fore 32 Chapter 4: Democratizing Big Data ....................................................... -

Code Smell Prediction Employing Machine Learning Meets Emerging Java Language Constructs"

Appendix to the paper "Code smell prediction employing machine learning meets emerging Java language constructs" Hanna Grodzicka, Michał Kawa, Zofia Łakomiak, Arkadiusz Ziobrowski, Lech Madeyski (B) The Appendix includes two tables containing the dataset used in the paper "Code smell prediction employing machine learning meets emerging Java lan- guage constructs". The first table contains information about 792 projects selected for R package reproducer [Madeyski and Kitchenham(2019)]. Projects were the base dataset for cre- ating the dataset used in the study (Table I). The second table contains information about 281 projects filtered by Java version from build tool Maven (Table II) which were directly used in the paper. TABLE I: Base projects used to create the new dataset # Orgasation Project name GitHub link Commit hash Build tool Java version 1 adobe aem-core-wcm- www.github.com/adobe/ 1d1f1d70844c9e07cd694f028e87f85d926aba94 other or lack of unknown components aem-core-wcm-components 2 adobe S3Mock www.github.com/adobe/ 5aa299c2b6d0f0fd00f8d03fda560502270afb82 MAVEN 8 S3Mock 3 alexa alexa-skills- www.github.com/alexa/ bf1e9ccc50d1f3f8408f887f70197ee288fd4bd9 MAVEN 8 kit-sdk-for- alexa-skills-kit-sdk- java for-java 4 alibaba ARouter www.github.com/alibaba/ 93b328569bbdbf75e4aa87f0ecf48c69600591b2 GRADLE unknown ARouter 5 alibaba atlas www.github.com/alibaba/ e8c7b3f1ff14b2a1df64321c6992b796cae7d732 GRADLE unknown atlas 6 alibaba canal www.github.com/alibaba/ 08167c95c767fd3c9879584c0230820a8476a7a7 MAVEN 7 canal 7 alibaba cobar www.github.com/alibaba/ -

IBM Big SQL (With Hbase), Splice Major Contributor to the Apache Be a Major Determinant“ Machine (Which Incorporates Hbase Madlib Project

MarketReport Market Report Paper by Bloor Author Philip Howard Publish date December 2017 SQL Engines on Hadoop It is clear that“ Impala, LLAP, Hive, Spark and so on, perform significantly worse than products from vendors with a history in database technology. Author Philip Howard” Executive summary adoop is used for a lot of these are discussed in detail in this different purposes and one paper it is worth briefly explaining H major subset of the overall that SQL support has two aspects: the Hadoop market is to run SQL against version supported (ANSI standard 1992, Hadoop. This might seem contrary 1999, 2003, 2011 and so on) plus the to Hadoop’s NoSQL roots, but the robustness of the engine at supporting truth is that there are lots of existing SQL queries running with multiple investments in SQL applications that concurrent thread and at scale. companies want to preserve; all the Figure 1 illustrates an abbreviated leading business intelligence and version of the results of our research. analytics platforms run using SQL; and This shows various leading vendors, SQL skills, capabilities and developers and our estimates of their product’s are readily available, which is often not positioning relative to performance and The key the case for other languages. SQL support. Use cases are shown by the differentiators“ However, the market for SQL engines on colour of each bubble but for practical between products Hadoop is not mono-cultural. There are reasons this means that no vendor/ multiple use cases for deploying SQL on product is shown for more than two use are the use cases Hadoop and there are more than twenty cases, which is why we describe Figure they support, their different SQL on Hadoop platforms.