Musical Similarity and Commonness Estimation Based on Probabilistic Generative Models of Musical Elements

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

TOKYO AUTO SALON 2017 Taking Place on Fri 13 to Sun 15 Jan at Makuhari Messe with 417 Exhibitors and a New Content “Riding Shotgun”

MEDIA RELEASE 7 December 2016 Tokyo Auto Salon Association Experience the “Car Culture” at TOKYO AUTO SALON 2017 Taking Place on Fri 13 to Sun 15 Jan at Makuhari Messe with 417 Exhibitors and a New Content “Riding Shotgun” Tokyo Auto Salon Association (office with-in San’s Inc., Shinjuku-ku, Tokyo) will host “TOKYO AUTO SALON 2017”, one of the largest annual custom car and car-related product show, taking place over the three-day period from Friday 13 to Sunday 15 January 2017 at MAKUHARI MESSE International Convention Complex (2-1 Nakase, Mihama-ku, Chiba City). TOKYO AUTO SALON 2017, marking the show’s 35th anniversary, features a wide range of 417 automobile-related exhibitors (*as of 1 Dec) showcasing their latest cars, products and technological solutions, as well as embraces numerous events including race cars’ demo run sessions and live performances. On top of that, a brand new thrilling content “Riding Shotgun (ride-along experience in a passenger seat)” will take place at ZOZO Marine Stadium’s peripheral road (car park area). Actor Show Aikawa has been appointed “TOKYO AUTO SALON 2017 Ambassador”, who serves as an honourary publicity representative of the show. It will be his sixth year as the TAS Ambassador since 2012. We also feature Piko-Taro, who has hailed around the world with his viral hit “PPAP”, as a special guest on Friday to give a boost to the curtain-up. During the three-day period of the show, we offer AUTO SALON SPECIAL LIVE every night held on the Makuhari Event Hall. -

RIAJ Yearbook 2018 1 Overview of Production of Recordings and Digital Music Sales in 2017

Statistics RIAJ YEARBOOK Trends 2018 The Recording Industry in Japan 2018 Contents Overview of Production of Recordings and Digital Music Sales in 2017 .................. 1 Statistics by Format (Unit Basis — Value Basis) .............................................................. 4 1. Total Recorded Music — Production on Unit Basis ............................................... 4 2. Total Audio Recordings — Production on Unit Basis ............................................ 4 3. Total CDs — Production on Unit Basis .................................................................... 4 4. Total Recorded Music — Production on Value Basis ............................................. 5 5. Total Audio Recordings — Production on Value Basis .......................................... 5 6. Total CDs — Production on Value Basis ................................................................. 5 7. CD Singles — Production on Unit Basis .................................................................. 6 8. 5" CD Albums — Production on Unit Basis ............................................................ 6 9. Music Videos — Production on Unit Basis ............................................................. 6 10. CD Singles — Production on Value Basis................................................................ 7 11. 5" CD Albums — Production on Value Basis.......................................................... 7 12. Music Videos — Production on Value Basis ........................................................... 7 13. Digital -

166-90-06 Tel: +38(063)804-46-48 E-Mail: [email protected] Icq: 550-846-545 Skype: Doowopteenagedreams Viber: +38(063)804-46-48 Web

tel: +38(097)725-56-34 tel: +38(099)166-90-06 tel: +38(063)804-46-48 e-mail: [email protected] icq: 550-846-545 skype: doowopteenagedreams viber: +38(063)804-46-48 web: http://jdream.dp.ua CAT ORDER PRICE ITEM CNF ARTIST ALBUM LABEL REL G-049 $60,37 1 CD 19 Complete Best Ao&haru (jpn) CD 09/24/2008 G-049 $57,02 1 SHMCD 801 Latino: Limited (jmlp) (ltd) (shm) (jpn) CD 10/02/2015 G-049 $55,33 1 CD 1975 1975 (jpn) CD 01/28/2014 G-049 $153,23 1 SHMCD 100 Best Complete Tracks / Various (jpn)100 Best... Complete Tracks / Various (jpn) (shm) CD 07/08/2014 G-049 $48,93 1 CD 100 New Best Children's Classics 100 New Best Children's Classics AUDIO CD 07/15/2014 G-049 $40,85 1 SHMCD 10cc Deceptive Bends (shm) (jpn) CD 02/26/2013 G-049 $70,28 1 SHMCD 10cc Original Soundtrack (jpn) (ltd) (jmlp) (shm) CD 11/05/2013 G-049 $55,33 1 CD 10-feet Vandalize (jpn) CD 03/04/2008 G-049 $111,15 1 DVD 10th Anniversary-fantasia-in Tokyo Dome10th Anniversary-fantasia-in/... Tokyo Dome / (jpn) [US-Version,DVD Regio 1/A] 05/24/2011 G-049 $37,04 1 CD 12 Cellists Of The Berliner PhilharmonikerSouth American Getaway (jpn) CD 07/08/2014 G-049 $51,22 1 CD 14 Karat Soul Take Me Back (jpn) CD 08/21/2006 G-049 $66,17 1 CD 175r 7 (jpn) CD 02/22/2006 G-049 $68,61 2 CD/DVD 175r Bremen (bonus Dvd) (jpn) CD 04/25/2007 G-049 $66,17 1 CD 175r Bremen (jpn) CD 04/25/2007 G-049 $48,32 1 CD 175r Melody (jpn) CD 09/01/2004 G-049 $45,27 1 CD 175r Omae Ha Sugee (jpn) CD 04/15/2008 G-049 $66,92 1 CD 175r Thank You For The Music (jpn) CD 10/10/2007 G-049 $48,62 1 CD 1966 Quartet Help: Beatles Classics (jpn) CD 06/18/2013 G-049 $46,95 1 CD 20 Feet From Stardom / O. -

The Recording Industry in Japan 2005

RIAJ Yearbook 2005 The Recording Industry in Japan English Edition 2005 Statistics Analysis Trends CONTENTS Overview of Production of Recordings in 2004 ..................................1 Production Statistics by Format (Unit Basis•Value Basis) ............2 1. Total Recorded Music — Unit Basis......................................................................2 2. Total Audio Recordings — Unit Basis ..................................................................2 3. Total CDs — Unit Basis ........................................................................................2 4. Total Recorded Music — Value Basis....................................................................3 5. Total Audio Recordings — Value Basis ................................................................3 6. Total CDs — Value Basis......................................................................................3 7. CD Singles — Unit Basis ......................................................................................4 8. 5" CD Albums — Unit Basis ................................................................................4 0. Reference 1. Video Recordings — Unit Basis ................................................................4 9. CD Singles — Value Basis....................................................................................5 10. 5" CD Albums — Value Basis ..............................................................................5 0. Reference 2. Video Recordings — Value Basis ..............................................................5 -

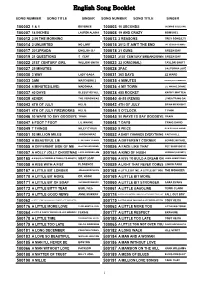

English Song Booklet

English Song Booklet SONG NUMBER SONG TITLE SINGER SONG NUMBER SONG TITLE SINGER 100002 1 & 1 BEYONCE 100003 10 SECONDS JAZMINE SULLIVAN 100007 18 INCHES LAUREN ALAINA 100008 19 AND CRAZY BOMSHEL 100012 2 IN THE MORNING 100013 2 REASONS TREY SONGZ,TI 100014 2 UNLIMITED NO LIMIT 100015 2012 IT AIN'T THE END JAY SEAN,NICKI MINAJ 100017 2012PRADA ENGLISH DJ 100018 21 GUNS GREEN DAY 100019 21 QUESTIONS 5 CENT 100021 21ST CENTURY BREAKDOWN GREEN DAY 100022 21ST CENTURY GIRL WILLOW SMITH 100023 22 (ORIGINAL) TAYLOR SWIFT 100027 25 MINUTES 100028 2PAC CALIFORNIA LOVE 100030 3 WAY LADY GAGA 100031 365 DAYS ZZ WARD 100033 3AM MATCHBOX 2 100035 4 MINUTES MADONNA,JUSTIN TIMBERLAKE 100034 4 MINUTES(LIVE) MADONNA 100036 4 MY TOWN LIL WAYNE,DRAKE 100037 40 DAYS BLESSTHEFALL 100038 455 ROCKET KATHY MATTEA 100039 4EVER THE VERONICAS 100040 4H55 (REMIX) LYNDA TRANG DAI 100043 4TH OF JULY KELIS 100042 4TH OF JULY BRIAN MCKNIGHT 100041 4TH OF JULY FIREWORKS KELIS 100044 5 O'CLOCK T PAIN 100046 50 WAYS TO SAY GOODBYE TRAIN 100045 50 WAYS TO SAY GOODBYE TRAIN 100047 6 FOOT 7 FOOT LIL WAYNE 100048 7 DAYS CRAIG DAVID 100049 7 THINGS MILEY CYRUS 100050 9 PIECE RICK ROSS,LIL WAYNE 100051 93 MILLION MILES JASON MRAZ 100052 A BABY CHANGES EVERYTHING FAITH HILL 100053 A BEAUTIFUL LIE 3 SECONDS TO MARS 100054 A DIFFERENT CORNER GEORGE MICHAEL 100055 A DIFFERENT SIDE OF ME ALLSTAR WEEKEND 100056 A FACE LIKE THAT PET SHOP BOYS 100057 A HOLLY JOLLY CHRISTMAS LADY ANTEBELLUM 500164 A KIND OF HUSH HERMAN'S HERMITS 500165 A KISS IS A TERRIBLE THING (TO WASTE) MEAT LOAF 500166 A KISS TO BUILD A DREAM ON LOUIS ARMSTRONG 100058 A KISS WITH A FIST FLORENCE 100059 A LIGHT THAT NEVER COMES LINKIN PARK 500167 A LITTLE BIT LONGER JONAS BROTHERS 500168 A LITTLE BIT ME, A LITTLE BIT YOU THE MONKEES 500170 A LITTLE BIT MORE DR. -

A Multi-Dimensional Study of the Emotion in Current Japanese Popular Music

Acoust. Sci. & Tech. 34, 3 (2013) #2013 The Acoustical Society of Japan PAPER A multi-dimensional study of the emotion in current Japanese popular music Ryo Yonedaà and Masashi Yamada Graduate School of Engineering, Kanazawa Institute of Technology, 7–1 Ohgigaoka, Nonoich, 921–8501 Japan ( Received 22 March 2012, Accepted for publication 5 September 2012 ) Abstract: Musical psychologists illustrated musical emotion with various numbers of dimensions ranging from two to eight. Most of them concentrated on classical music. Only a few researchers studied emotion in popular music, but the number of pieces they used was very small. In the present study, perceptual experiments were conducted using large sets of popular pieces. In Experiment 1, ten listeners rated musical emotion for 50 J-POP pieces using 17 SD scales. The results of factor analysis showed that the emotional space was constructed by three factors, ‘‘evaluation,’’ ‘‘potency’’ and ‘‘activity.’’ In Experiment 2, three musicians and eight non-musicians rated musical emotion for 169 popular pieces. The set of pieces included not only J-POP tunes but also Enka and western popular tunes. The listeners also rated the suitability for several listening situations. The results of factor analysis showed that the emotional space for the 169 pieces was spanned by the three factors, ‘‘evaluation,’’ ‘‘potency’’ and ‘‘activity,’’ again. The results of multiple-regression analyses suggested that the listeners like to listen to a ‘‘beautiful’’ tune with their lovers and a ‘‘powerful’’ and ‘‘active’’ tune in a situation where people were around them. Keywords: Musical emotion, Popular music, J-POP, Semantic differential method, Factor analysis PACS number: 43.75.Cd [doi:10.1250/ast.34.166] ‘‘activity’’ [3]. -

Ken Hirai Missin' You ~It Will Break My Heart~ Mp3, Flac, Wma

Ken Hirai Missin' You ~It Will Break My Heart~ mp3, flac, wma DOWNLOAD LINKS (Clickable) Genre: Electronic / Funk / Soul / Pop Album: Missin' You ~It Will Break My Heart~ Country: Japan Released: 2002 Style: Ballad, J-pop MP3 version RAR size: 1160 mb FLAC version RAR size: 1376 mb WMA version RAR size: 1330 mb Rating: 4.3 Votes: 898 Other Formats: MMF VQF VOC MP4 FLAC AIFF AU Tracklist 1 Missin' You ~It Will Break My Heart~ 4:50 2 It Will Break My Heart ~English Version~ 4:50 3 Missin' You ~It Will Break My Heart~ (Instrumental) 4:50 4 When Can I See You 4:05 5 Kiss Of Life (S.O.U.L Remix) 4:30 Credits Backing Vocals – Ken Hirai (tracks: 3) Bass – Nathan East (tracks: 3) Concertmaster – Tetsuya Ochiai (tracks: 5) Electric Guitar – Michael Thompson (tracks: 3) Engineer – Paul Boutin (tracks: 3) Engineer [Vocals Ken Hirai] – Masashi Kudo (tracks: 3) Guitar – Andrew Smith (tracks: 5) Music By – Babyface (tracks: 1), Masahito Nakano (tracks: 5) Music By, Words By – Babyface (tracks: 2) Other [Make Up] – Kenji Ishida Other [Stylist] – Hisashi "Momo" Kitazawa Photography By – Aya Tokunaga Piano – Greg Phillinganes (tracks: 3) Producer, Music By, Drum Programming, Programmed By [Keyboards], Acoustic Guitar, Electric Guitar, Backing Vocals – Babyface (tracks: 3) Recorded By [Rhythm, Asistant], Mixed By [Rhythm, Assistant] – Adrian Hall (tracks: 5) Recorded By [Rhythm], Mixed By [Rhythm] – Marcellus Fernandes (tracks: 5) Recorded By [Strings] – Masahiro Kawagucci (tracks: 5) Remix, Producer [Additional], Synthesizer, Drum Programming, Arranged -

Ray Leeper Concerts/Tours/Events

Ray Leeper Concerts/Tours/Events: Katherine Jenkins / IL DIVO Choreographer 2013 European Tour IL DIVO 2012, 2013 World Choreographer Octagon Tour Matthew Morrison In Concert Choreographer w/ Pittsburgh Symphony Matthew Mirrison 2011 SOLO Choreographer Tour Matthew Morrison / Opening Choreographer for NKOTBBSB Tour So You Think You Can Dance Contributing Choreographer FOX 2011/2012 Tours Franco Dragone's "India" Choreographer Dragone Entertainment Franco Dragone's "One Love" Choreographer Dragone Entertainment / Las Vegas They Gypsy Project Choreographer Big Rock Productions Jump on Broadway Choreographer Radio City Music Hall / Break the Floor Productions DanceBreak 2005 Choreographer SSDCF Cher Choreographer Sony Entertainment Elton John Choreographer Sony Entertainment Geri Halliwell Choreographer Handprint Entertainment Snoop Dogg Choreographer The Box / NYC LMNT Promo Tour Choreographer New Age Entertainment Kelly Levesque Choreographer Warner Brothers Jamie-Lynn Sigler Choreographer New Age Entertainment Ty Taylor Choreographer Radio City Music Hall Gay Men's Chorus of Los Choreographer GMCLA Angeles 2011 Television: Dancing with the Stars 2013 Choreographer ABC Opening Number X-Factor UK Choreographer ITV America's Got Talent Season Choreographer NBC 8, 9 Opening Number w/ Jackie Evancho & Il Divo So You Think You Can Dance Choreographer FOX (Multiple Seasons) Tour Matthew Morrison "Where It Choreographer PBS Special All Began" IL DIVO on "Red and Black" Choreographer ITV Big Bang Theory Choreographer CBS All My Children Choreographer ABC DanceNation Choreographer Lifetime Television The Young & The Restless Choreographer CBS The Queen Latifah Show Choreographer UPN The Today Show Choreographer NBC MTV-Korea Choreographer MTV Productions Film: They Shoot Divas, Don't Choreographer VH-1 Films They? Move Choreographer Soderling Productions This Island Choreographer Kevin Johnson, Dir, Prod. -

Main Article

2009年世界南島研究碩、博士生短期出國研修補助案 曾薇霖研究成果 一、印尼日惹藝術大學碩士班簡介 印尼藝術大學坐落於印度尼西亞擁有上千年的歷史古城-日惹,由西元 1950 設立之 ASRI 藝術學院、西元 1952 年設立之 AMI 音樂學院及西元 1961 年設立 之 ASTI 舞蹈學院三所學院於西元 1984 年 7 月 23 日合併而成立,目前設有 3 所 學院與 11 個學系,為印尼最大及歷史最優久之藝術院校。目前設有視覺藝術, 表演藝術及富有傳統的印尼文化和現代國際風格之媒體藝術課程。目前約有兩千 名學子。為目前印尼最具規模及領導性之藝術學府。印尼藝術大學鄰近日惹哈孟 庫部瓦納(Hamangku buwono)宮廷,此處目前為日惹特別行政區之政治中心,同 時也是文化、藝術的保存與傳承中心,宮廷中眾多樂師與舞蹈家皆於印尼藝術大 學任教。 日惹印尼藝術大學碩博士班(Program Pascasarjana, ISI Yogyakarta,簡稱 PASCA),位於 Jl. Suryodiningratan no. 8 (請參照圖 1 之地圖),距離哈孟庫部瓦納 宮廷僅約一公里,校區佔地約一萬平方公尺,校園腹地雖不大但機能完備,目前 擁有一座多功能學生活動中心、圖書館、課室與供學生使用之研究小間、舞蹈與 戲劇練習室以及甘美朗練習室等等。現任所長為 Prof. Dr. Dwi Marianto, MFA, 副所長為 Dr. Djohan Salim, Msi 與 Dra Budi Astuti 共同輔助處理行政與學生事 宜。日惹印尼藝術大學之碩博士班並不以學生專業領域分組,而是依照學生之研 究性質,分為 Penciptaan(創作組)與 Pengkajian(理論組)兩大類,在課程上以共同 科目居多,並依照領域之不同增設選修課程與安排適合之主修指導教授。 圖 1:左─國立日惹印尼藝術大學碩博士班校區示意圖 右─國立印尼日惹藝術大學校徽 1 PDF created with pdfFactory trial version www.pdffactory.com 2009年世界南島研究碩、博士生短期出國研修補助案 曾薇霖研究成果 二、課程介紹與上課情形 筆者於一年的交換學生課程中,共修習十二學分五堂課,但修習之課程與原 提出之申請計畫略有不同,原因為筆者的課程為碩士班所長 Prof. Dwi Marianto 安排,他在考量筆者之原有學習背景與語言程度上的問題後,將筆者所選修的課 程調整為: Semester I Eksprolasi punyi(音聲探討,2 學分) Antropologi Seni(藝術人類學,2 學分) Penciptaan Seni(創作組主修,4 學分) Semester II Teori kebudayaan(文化理論,2 學分) Pengkajian Seni(理論組主修,4 學分) 以上共計 14 學分,符合碩士班規定之 12 學分要求 在第一學期的三門課程中,除了藝術人類學為理論課程外,另兩門較偏向以演奏 和創作為主。首先是藝術人類學之課程,授課教師為 Prof. Dr. Y. Sumandiyo Hadi, SU,在授課內容上主要是以人類學的基礎理論為主,如 Franz Boas 和 Bronisław Malinowski 的民族誌書寫與文化理論,Immanuel Kant 的批判理論,Edward Burnett Tylor 的文化進化論,James George Frazer 的神話學與宗教學理論等等。 音聲探討是專為音樂主修的碩士生所開設的課程,授課教師為 Prof. Krishina 和 Prof. Tanto,課程內容主要為藉由討論和特定主題的發想,探討任何聲音(器樂 與非器樂)相互組合的可能性。例如以某首詩歌為主題,以某個情境為主題等等。 但也並非隨心所欲的發聲,在課堂中所有的人都被要求必須對自己將要(或已經) 發出或演奏的聲音做出合理的解釋,在此門課程中同時也安排了許多校外參訪與 演出的行程,對於筆者來說是在研究所生涯中少有的“理論與實踐並行”之體驗。 創作組主修對於筆者而言又是在研究所中不曾體驗過的課程,授課教師為 Prof. Kmt Trustho Purwodipuro,他本身為印尼藝術大學卡拉維坦(karawitan)系之 系主任,同時也在宮廷但任樂師領導一職。在一學期的課程中必須創作一首樂曲 並於期末舉辦音樂會發表之,由於筆者本身學習中國音樂,因此被要求嘗試以中 國音樂結合爪哇甘美朗創作樂曲,對筆者而言也是一項全新的挑戰,因筆者雖參 與過甘美朗的演出,但對於樂曲創作上卻是一竅不通,因此筆者從第一學期開始 參與學校的甘美朗團練,並單獨學習 gendar 及 bonang 等樂器,在期末時嘗試以 台灣傳統童謠《西北雨》為素材,編寫一首中國笛與甘美朗樂團之合奏曲。 文化理論之授課教師為 Dr. -

NANA Starring MIKA NAKASHIMA the End Mp3, Flac, Wma

NANA starring MIKA NAKASHIMA The End mp3, flac, wma DOWNLOAD LINKS (Clickable) Genre: Rock / Pop Album: The End Country: Japan Released: 2006 Style: Power Pop, Pop Rock MP3 version RAR size: 1525 mb FLAC version RAR size: 1983 mb WMA version RAR size: 1946 mb Rating: 4.9 Votes: 394 Other Formats: WMA AA MMF DMF AIFF XM AHX Tracklist 1 Hitoiro 5:21 2 Eyes For The Moon 3:39 3 Glamorous Sky 4:26 4 Blowing Out 3:27 5 My Medicine 3:37 6 Neglest Mind 3:57 7 Real World 4:08 8 Isolation 4:42 9 Blood 4:39 10 Hitoiro (Altanative) 5:06 11 My Way 4:19 Notes Released under the name Nana starring Mika Nakashima Other versions Title Category Artist Label Category Country Year (Format) Sony Music NANA starring MIKA The End (CD, AICL 1783 Associated AICL 1783 Japan 2006 NAKASHIMA* Album) Records Sony Music NANA starring MIKA The End (CD, Hong 88697056132 Associated 88697056132 2006 NAKASHIMA* Album) Kong Records The End Sony Music NANA starring MIKA AIJL-5303-4 (2xLP, Album, Associated AIJL-5303-4 Japan 2007 NAKASHIMA* RE) Records Inc. NANA starring MIKA The End (CD, ADCI 80301 SAR ADCI 80301 Japan 2006 NAKASHIMA* Album, Promo) Related Music albums to The End by NANA starring MIKA NAKASHIMA 1. Nana Mouskouri - Alone 2. Mika Nakashima - Love 3. Nana Mouskouri - The Very Best Of Nana Mouskouri 4. Mika Nakashima - Helpless Rain 5. Ko Nakashima - Raft 6. Red Ingle, The Happy Jesters - Starring The Country Music Of Red Ingle Also Starring The Happy Jesters 7. -

The Recording Industry in Japan 2006 CONTENTS

RIAJ YEARBOOK 2006 The Recording Industry in Japan 2006 CONTENTS Overview of Production of Recordings and Digital Music Delivery in 2005 ....1 Statistics by Format (Unit Basis•Value Basis) ..................................................................3 1. Quarterly Sales Figures of Digital Music Delivery in 2005 ................................................3 2. Total Recorded Music — Production on Unit Basis............................................................4 3. Total Audio Recordings — Production on Unit Basis ........................................................4 4. Total CDs — Production on Unit Basis ..............................................................................4 5. Total Recorded Music — Production on Value Basis ........................................................5 6. Total Audio Recordings — Production on Value Basis ......................................................5 7. Total CDs — Production on Value Basis ............................................................................5 8. CD Singles — Production on Unit Basis ............................................................................6 9. 5" CD Albums — Production on Unit Basis........................................................................6 Reference 1. Video Recordings — Production on Unit Basis............................................................6 10. CD Singles — Production on Value Basis ..........................................................................7 11. 5" CD Albums — Production on Value -

![Fairy Inc. Sheet Music Products List Last Updated [2013/03/018] Price (Japanese Yen) a \525 B \788 C \683](https://docslib.b-cdn.net/cover/1957/fairy-inc-sheet-music-products-list-last-updated-2013-03-018-price-japanese-yen-a-525-b-788-c-683-4041957.webp)

Fairy Inc. Sheet Music Products List Last Updated [2013/03/018] Price (Japanese Yen) a \525 B \788 C \683

Fairy inc. Sheet Music Products list Last updated [2013/03/018] Price (Japanese Yen) A \525 B \788 C \683 ST : Standard Version , OD : On Demand Version , OD-PS : Piano solo , OD-PV : Piano & Vocal , OD-GS : Guitar solo , OD-GV : Guitar & Vocal A Band Score Piano Guitar Title Artist Tie-up ST OD ST OD-PS OD-PV ST OD-GS OD-GV A I SHI TE RU no Sign~Watashitachi no Shochiku Distributed film "Mirai Yosouzu ~A I DREAMS COME TRUE A A A Mirai Yosouzu~ SHI TE RU no Sign~" Theme song OLIVIA a little pain - B A A A A inspi'REIRA(TRAPNEST) A Song For James ELLEGARDEN From the album "BRING YOUR BOARD!!" B a walk in the park Amuro Namie - A a Wish to the Moon Joe Hisaishi - A A~Yokatta Hana*Hana - A A Aa Superfly 13th Single A A A Aa Hatsu Koi 3B LAB.☆ - B Aa, Seishun no Hibi Yuzu - B Abakareta Sekai thee michelle gun elephant - B Abayo Courreges tact, BABY... Kishidan - B abnormalize Rin Toshite Shigure Anime"PSYCHO-PASS" Opening theme B B Acro no Oka Dir en grey - B Acropolis ELLEGARDEN From the album "ELEVEN FIRE CRACKERS" B Addicted ELLEGARDEN From the album "Pepperoni Quattro" B ASIAN KUNG-FU After Dark - B GENERATION again YUI Anime "Fullmetal Alchemist" Opening theme A B A A A A A A Again 2 Yuzu - B again×again miwa From 2nd album "guitarium" B B Ageha Cho PornoGraffitti - B Ai desita. Kan Jani Eight TBS Thursday drama 9 "Papadoru!" Theme song B B A A A Ai ga Yobu Hou e PornoGraffitti - B A A Ai Nanda V6 - A Ai no Ai no Hoshi the brilliant green - B Ai no Bakudan B'z - B Ai no Kisetsu Angela Aki NHK TV novel series "Tsubasa" Theme song A A