Fuzzy Clustering of Crowdsourced Test Reports for Apps

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Select Smartphones and Tablets with Qualcomm® Quick Charge™ 2.0 Technology

Select smartphones and tablets with Qualcomm® Quick Charge™ 2.0 technology + Asus Transformer T100 + Samsung Galaxy S6 + Asus Zenfone 2 + Samsung Galaxy S6 Edge + Droid Turbo by Motorola + Samsung Note 4 + Fujitsu Arrows NX + Samsung Note Edge + Fujitsu F-02G + Sharp Aquos Pad + Fujitsu F-03G + Sharp Aquos Zeta + Fujitsu F-05F + Sharp SH01G/02G + Google Nexus 6 + Sony Xperia Z2 (Japan) + HTC Butterfly 2 + Sony Xperia Z2 Tablet (Japan) + HTC One (M8) + Sony Xperia Z3 + HTC One (M9) + Sony Xperia Z3 Tablet + Kyocera Urbano L03 + Sony Xperia Z4 + LeTV One Max + Sony Xperia Z4 Tablet + LeTV One Pro + Xiaomi Mi 3 + LG G Flex 2 + Xiaomi Mi 4 + LG G4 + Xiaomi Mi Note + New Moto X by Motorola + Xiaomi Mi Note Pro + Panasonic CM-1 + Yota Phone 2 + Samsung Galaxy S5 (Japan) These devices contain the hardware necessary to achieve Quick Charge 2.0. It is at the device manufacturer’s discretion to fully enable this feature. A Quick Charge 2.0 certified power adapter is required. Different Quick Charge 2.0 implementations may result in different charging times. www.qualcomm.com/quickcharge Qualcomm Quick Charge is a product of Qualcom Technologies, Inc. Updated 6/2015 Certified Accessories + Air-J Multi Voltage AC Charger + Motorola TurboPower 15 Wall Charger + APE Technology AC/DC Adapter + Naztech N210 Travel Charger + APE Technology Car Charger + Naztech Phantom Vehicle Charger + APE Technology Power Bank + NTT DOCOMO AC Adapter + Aukey PA-U28 Turbo USB Universal Wall Charger + Power Partners AC Adapter + CellTrend Car Charger + Powermod Car Charger -

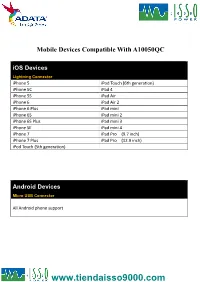

Android Devices

Mobile Devices Compatible With A10050QC iOS Devices Lightning Connector iPhone 5 iPod Touch (6th generation) iPhone 5C iPad 4 iPhone 5S iPad Air iPhone 6 iPad Air 2 iPhone 6 Plus iPad mini iPhone 6S iPad mini 2 iPhone 6S Plus iPad mini 3 iPhone SE iPad mini 4 iPhone 7 iPad Pro (9.7 inch) iPhone 7 Plus iPad Pro (12.9 inch) iPod Touch (5th generation) Android Devices Micro USB Connector All Android phone support Smartphone With Quick Charge 3.0 Technology Type-C Connector Asus ZenFone 3 LG V20 TCL Idol 4S Asus ZenFone 3 Deluxe NuAns NEO VIVO Xplay6 Asus ZenFone 3 Ultra Nubia Z11 Max Wiley Fox Swift 2 Alcatel Idol 4 Nubia Z11miniS Xiaomi Mi 5 Alcatel Idol 4S Nubia Z11 Xiaomi Mi 5s General Mobile GM5+ Qiku Q5 Xiaomi Mi 5s Plus HP Elite x3 Qiku Q5 Plus Xiaomi Mi Note 2 LeEco Le MAX 2 Smartisan M1 Xiaomi MIX LeEco (LeTV) Le MAX Pro Smartisan M1L ZTE Axon 7 Max LeEco Le Pro 3 Sony Xperia XZ ZTE Axon 7 Lenovo ZUK Z2 Pro TCL Idol 4-Pro Smartphone With Quick Charge 3.0 Technology Micro USB Connector HTC One A9 Vodafone Smart platinum 7 Qiku N45 Wiley Fox Swift Sugar F7 Xiaomi Mi Max Compatible With Quick Charge 3.0 Technology Micro USB Connector Asus Zenfone 2 New Moto X by Motorola Sony Xperia Z4 BlackBerry Priv Nextbit Robin Sony Xperia Z4 Tablet Disney Mobile on docomo Panasonic CM-1 Sony Xperia Z5 Droid Turbo by Motorola Ramos Mos1 Sony Xperia Z5 Compact Eben 8848 Samsung Galaxy A8 Sony Xperia Z5 Premium (KDDI Japan) EE 4GEE WiFi (MiFi) Samsung Galaxy Note 4 Vertu Signature Touch Fujitsu Arrows Samsung Galaxy Note 5 Vestel Venus V3 5070 Fujitsu -

Barometer of Mobile Internet Connections in Indonesia Publication of March 14Th 2018

Barometer of mobile Internet connections in Indonesia Publication of March 14th 2018 Year 2017 nPerf is a trademark owned by nPerf SAS, 87 rue de Sèze 69006 LYON – France. Contents 1 Methodology ................................................................................................................................. 2 1.1 The panel ............................................................................................................................... 2 1.2 Speed and latency tests ....................................................................................................... 2 1.2.1 Objectives and operation of the speed and latency tests ............................................ 2 1.2.2 nPerf servers .................................................................................................................. 2 1.3 Tests Quality of Service (QoS) .............................................................................................. 2 1.3.1 The browsing test .......................................................................................................... 2 1.3.2 YouTube streaming test ................................................................................................ 3 1.4 Filtering of test results .......................................................................................................... 3 1.4.1 Filtering of devices ........................................................................................................ 3 2 Overall results 2G/3G/4G ............................................................................................................ -

Compatibility Sheet

COMPATIBILITY SHEET SanDisk Ultra Dual USB Drive Transfer Files Easily from Your Smartphone or Tablet Using the SanDisk Ultra Dual USB Drive, you can easily move files from your Android™ smartphone or tablet1 to your computer, freeing up space for music, photos, or HD videos2 Please check for your phone/tablet or mobile device compatiblity below. If your device is not listed, please check with your device manufacturer for OTG compatibility. Acer Acer A3-A10 Acer EE6 Acer W510 tab Alcatel Alcatel_7049D Flash 2 Pop4S(5095K) Archos Diamond S ASUS ASUS FonePad Note 6 ASUS FonePad 7 LTE ASUS Infinity 2 ASUS MeMo Pad (ME172V) * ASUS MeMo Pad 8 ASUS MeMo Pad 10 ASUS ZenFone 2 ASUS ZenFone 3 Laser ASUS ZenFone 5 (LTE/A500KL) ASUS ZenFone 6 BlackBerry Passport Prevro Z30 Blu Vivo 5R Celkon Celkon Q455 Celkon Q500 Celkon Millenia Epic Q550 CoolPad (酷派) CoolPad 8730 * CoolPad 9190L * CoolPad Note 5 CoolPad X7 大神 * Datawind Ubislate 7Ci Dell Venue 8 Venue 10 Pro Gionee (金立) Gionee E7 * Gionee Elife S5.5 Gionee Elife S7 Gionee Elife E8 Gionee Marathon M3 Gionee S5.5 * Gionee P7 Max HTC HTC Butterfly HTC Butterfly 3 HTC Butterfly S HTC Droid DNA (6435LVW) HTC Droid (htc 6435luw) HTC Desire 10 Pro HTC Desire 500 Dual HTC Desire 601 HTC Desire 620h HTC Desire 700 Dual HTC Desire 816 HTC Desire 816W HTC Desire 828 Dual HTC Desire X * HTC J Butterfly (HTL23) HTC J Butterfly (HTV31) HTC Nexus 9 Tab HTC One (6500LVW) HTC One A9 HTC One E8 HTC One M8 HTC One M9 HTC One M9 Plus HTC One M9 (0PJA1) -

How to Choose the Right Smartphone Within Your Budget

SHOOTOUT HOW TO CHOOSE THE RIghT SMARTPHONE WITHIN YOUR BUDGET The smartphone industry has developed and progressed dramatically since the days when the display in your mobile phone was required only to show the number of the person calling. Let’s look at the latest developments and the key parameters to keep in mind while choosing a smartphone these days — Anuj Sharma ith the progression of the smartphone industry, gradually Display Quality more features are continuously being added/upgraded The display of a smart phone is one of the most important aspect Wto do multilple tasks, such as to view emails, click and to consider when buying a new device because it’s the part where record photos and videos, etc. And with added features there you’ll spend the most time directly interacting with the phone. The is always a question as to which smart phone should one buy smartphone industry rotates around various names to describe the and for what price. There are several aspects to consider while viewing experience on your smartphone screen viz. IPS, LCD, LED, choosing a smart phone. Here’s what you should look out for, Retina, AMOLED etc. Let’s find out what is meant by each of these based on our experience of reviewing phones over the past six terms and how they are different from others. months or so. Also do check for protective coating on screens like Gorilla Glass that prevents breaking your smartphone screen from accidental The Ideal Screen Size drops or Anti-Scratch Resistant/Fingerprint coating that protects Nowadays mostly all manufacturers are targeting bigger screen the screen from scratches or fingerprint smudges. -

Qualcomm® Quick Charge™ Technology Device List

One charging solution is all you need. Waiting for your phone to charge is a thing of the past. Quick Charge technology is ® designed to deliver lightning-fast charging Qualcomm in phones and smart devices featuring Qualcomm® Snapdragon™ mobile platforms ™ and processors, giving you the power—and Quick Charge the time—to do more. Technology TABLE OF CONTENTS Quick Charge 5 Device List Quick Charge 4/4+ Quick Charge 3.0/3+ Updated 09/2021 Quick Charge 2.0 Other Quick Charge Devices Qualcomm Quick Charge and Qualcomm Snapdragon are products of Qualcomm Technologies, Inc. and/or its subsidiaries. Devices • RedMagic 6 • RedMagic 6Pro Chargers • Baseus wall charger (CCGAN100) Controllers* Cypress • CCG3PA-NFET Injoinic-Technology Co Ltd • IP2726S Ismartware • SW2303 Leadtrend • LD6612 Sonix Technology • SNPD1683FJG To learn more visit www.qualcomm.com/quickcharge *Manufacturers may configure power controllers to support Quick Charge 5 with backwards compatibility. Power controllers have been certified by UL and/or Granite River Labs (GRL) to meet compatibility and interoperability requirements. These devices contain the hardware necessary to achieve Quick Charge 5. It is at the device manufacturer’s discretion to fully enable this feature. A Quick Charge 5 certified power adapter is required. Different Quick Charge 5 implementations may result in different charging times. Devices • AGM X3 • Redmi K20 Pro • ASUS ZenFone 6* • Redmi Note 7* • Black Shark 2 • Redmi Note 7 Pro* • BQ Aquaris X2 • Redmi Note 9 Pro • BQ Aquaris X2 Pro • Samsung Galaxy -

How to Enable Jio Volte Calling on Any Android Smartphone ?

Root Update A blog for android, root, Update and Reviews http://www.rootupdate.com How To Enable Jio VoLTE calling on any Android Smartphone ? How to Activate Jio HD VoLTE Calling On any Android smartphone without root . Do you want to activate Hd voice calling VoLTE on your android smartphone ? today we are here with a guide in which we will tell you how you can make calls on VoLTE on any android mobile without root . VolTE stands for Voice over LTE network .VoLTE is a technology in which you can make calls on LTE network . Calls on the Lte networks are much clearer and nice to hear then the ordinary calls . Some geeks believe that Volte calls are only possible if Hardware is compatible but we believe that if a smartphone supports 4G/LTE network then it also support VoLTE calls by some tweaks . So today we are here with a guide on How to activate VoLTE calls on any android device without rooting . 1 / 30 Root Update A blog for android, root, Update and Reviews http://www.rootupdate.com How to Make Calls on VoLTE on any android smartphone For free . There are no special requirements for activating VoLTE calls all you need is a 4G supporting Handset and and 4G activated sim card . Here is how you can check if your smartphone is 4G compatible . How to Enable Jio 4G Volte Calling on Any android Mobile without Using Jio4Gvoice app. Visit imei.info . Then open the dialer of your smartphone and dial *#06# . This will give you the Imei of your phone . -

530.06.374 GIULIA LISTA APP IT GB.Qxp

TELEFONI COMPATIBILI CON LE APPS - GIULIA Marca Modello Software Apple Iphone 5 iOS 9- BT v4.0 Apple Iphone 6 iOS 9- BT v4.0 Apple Iphone 4 iOS 6 – BT v2.1 Apple Ipad Mini 2 iOS 7 - BT v4.0 HTC HTC one M8 Android 5.0.1 - BT v4.0 Samsung Samsung S4 Android 4.2 - BT v4.0 LG LG Nexus 5 Android 4.4.4 (sarà il primo a ricevere Android L) – BT v4.0 Huawei Huawei Ascend P7 Android 4.4.2 – BT v4.0 Nokia Nokia Lumia 630/830 Windows8 BT-v4.0 Lenovo Lenovo Vibe Z2 pro Android 4.4 – BT 4.0 Lenovo Lenovo S850 Android 4.2 – BT 3.0 Lenovo Lenovo Yoga Tablet8 Android 4.2 – BT 4.0 Blackberry BB Curve 9369/9360 BB OS 7.0 – BT 2.1 Blackberry BBQ10 BB OS10-BT v4.0 Nokia Nokia C2-05 BT 2.1+ EDR Nokia Nokia 108 BT v3.0 Apple iPOD NANO 7G 1.0.3 ZTE ZTE Blade S6 Android 5.0.1 Xiaomi Xiaomi Mi 4 Android 5.0.1 OnePlus OnePlus X Android 5.0.1 Doogee Voyager 2 DG310 Android 5.0.1 Elephone Elephone P8000 Android 5.0.1 Apple iPhone 5s iOS 7 - 8 - 9.2.1 - 9.3.1 Blackberry 8900 BlackBerry OS v 4.6.1.10 1 Marca Modello Software Blackberry 9380 Bundle 7.1.0 1887 Blackberry 9790 7.1 Bundle 1332 Blackberry Q5 BlackBerry 10.3.2.2474 Blackberry Z10 Blackberry 10, 10.3.1, 10.3.2 Google Nexus 6 Android 5.0 - 6.0.1 HTC Sensation XE Android 4.0.3 LG G3 Android 5.0 LG G4 Android 5.1 - 6.0 Microsoft Lumia 550 Windows Mobile 10 Nokia Lumia 1320 Windows Phone 8.1 Nokia Lumia 1520 Windows Phone 8 Nokia 301 Nokia OS V 02.51 Nokia X7 Symbian - Nokia Belle Refresh 111.040.1511 Nokia E6-00 Symbian Belle 111.130.0625 Nokia X3-02 Nokia OS 7.05.2015 Samsung Samsung S6 Android 6.0.1 Samsung -

Barometer of Mobile Internet Connections in Poland

Barometer of Mobile Internet Connections in Poland Publication of July 21, 2020 First half 2020 nPerf is a trademark owned by nPerf SAS, 87 rue de Sèze 69006 LYON – France. Contents 1 Summary of results ...................................................................................................................... 2 1.1 nPerf score, all technologies combined ............................................................................... 2 1.2 Our analysis ........................................................................................................................... 3 2 Overall results 2G/3G/4G ............................................................................................................. 3 2.1 Data amount and distribution ............................................................................................... 3 2.2 Success rate 2G/3G/4G ........................................................................................................ 4 2.3 Download speed 2G/3G/4G .................................................................................................. 4 2.4 Upload speed 2G/3G/4G ....................................................................................................... 5 2.5 Latency 2G/3G/4G ................................................................................................................ 5 2.6 Browsing test 2G/3G/4G....................................................................................................... 6 2.7 Streaming test 2G/3G/4G .................................................................................................... -

Benchmarks War! Xiaomi Mi4 Vs Huawei Honor 6 Vs Oneplus One | Gizchina.Com

11/15/2014 Benchmarks war! Xiaomi Mi4 vs Huawei Honor 6 vs OnePlus One | Gizchina.com November 15, 2014 HOME ABOUT US STORE DIRECTORY GIZCHINA.ES GIZCHINA.IT GIZCHINA.DE GIZCHINA.CZ CONTACT US SERVICES Chinese phone, Android smartphone, tablet news and reviews HOT Xiaomi Mi5 already in production? Spy photos show near bezel-less design! Type your query... HOME LATEST LEAKS PHONES REVIEWS TABLETS FORUMS TIP US Homepage. News as it happens. Leaks/Spy/Teaser. Latest news by brand. Phones and Tablets. Tablet News. GizChina Forums are back! Tips and Articles. home » benchmarks » Benchmarks war! Xiaomi Mi4 vs Huawei Honor 6 vs OnePlus One Benchmarks war! Xiaomi Mi4 vs Huawei Honor 6 vs OnePlus One Posted on July 23, 2014 by andi 46 comments Gizchina Follow +1 LIKE & SHARE 119 12 14 2 + 5,515 3 of the best compete head to head in a benchmark war but who will win? The all new Xiaomi Mi4, the GizChina surpassing Huawei Honor 6 or the OnePlus One? Like Try launching a smartphone in China and not have every single detail from it splashed around the 28,448 people like GizChina. web in less than 24 hours. It’s something every Chinese manufacturer has to carefully prepare for, especially when there are worthy rivals waiting in the ranks. The Xiaomi Mi4 hasn’t even been released to buy yet, but someone has managed to run all the most important benchmarks on the phone, and better still compare the new Mi phone to the Huawei Glory 6 and the OnePlus One. Facebook social plugin In China each of these phones costs from just 1999 Yuan ($320), and each boast killer features for that low price. -

Liste Smartphones Mit „Kompass-Funktion“ Stand: Mai 2019 Quelle: Actionbound

Liste Smartphones mit „Kompass-Funktion“ Stand: Mai 2019 Quelle: Actionbound Acer Liquid E3 plus Honor 5X Huawei P10 Lite Acer Liquid Jade plus Honor 6C Pro Huawei P10 Plus Alcatel A3 Honor 7 Huawei P8 Alcatel A5 LED Honor 7X Huawei P8 Lite Alcatel A7 Honor 8 Huawei P8 Lite (2017) Alcatel A7 XL Honor 9 Lite Huawei Y6 (2017) Alcatel Idol 4 Honor View 10 Huawei Y7 Alcatel Idol 4 HTC 10 ID2 ME ID1 Alcatel Idol 4S HTC 10 Evo Kodak Ektra Alcatel Idol 5 HTC Desire 10 Lifestyle Leagoo S9 Alcatel Pop 4 HTC Desire 825 Lenovo K6 Alcatel Pop 4+ HTC One A9 Lenovo Moto Z Alcatel Pop 4S HTC One A9s Lenovo P2 Alcatel Shine Lite HTC One M7 LG G2 D802 Apple iPhone 4 HTC One M8 LG G2 Mini Apple iPhone 4s HTC One M9 LG G3 D855 Apple iPhone 5 HTC One Max LG G4 Apple iPhone 5c HTC One Mini LG G5 Apple iPhone 5s HTC One mini2 LG G5 SE Apple iPhone 6 HTC U Ultra LG G6 Apple iPhone 6 Plus HTC U11 LG Google Nexus 5 16GB Apple iPhone 7 HTC U11 Life LG K10 (2017) Apple iPhone 7 Plus HTC U11 Plus LG L40 D160 Apple iPhone 8 HTC UPlay LG L70 D320 Apple iPhone 8 Plus Huawei Ascend G510 LG L90 D405 Apple iPhone SE Huawei Ascend P7 LG Optimus L9 II D605 Apple iPhone X Huawei Honor 6 LG Optimus L9 II D605 Blackberry DTEK50 Huawei Honor 6A LG Q6 Blackberry DTEK60 Huawei Honor 6C Pro LG V20 Blackberry Key One Huawei Honor 6x (2016) LG V30 Blackberry Motion Huawei Honor 8 LG XMach BQ Aquaris V Huawei Honor 9 LG XPower BQ Aquaris X5 Plus Huawei Honor 9 Lite LG XPower 2 Cat Phones S60 Huawei Mate 10 Meizu M5 Caterpillar Cat S60 Huawei Mate 10 Lite Meizu M5 Note Elephone S9 Huawei Mate 10 Pro Meizu Pro 6S Gigaset GS370 Plus Huawei Mate 9 Meizu Pro 7 Google Nexus 6P Huawei Nova Motorola Moto E Google Pixel Huawei Nova 2 Motorola Moto G Google Pixel 2 Huawei Nova 2S Motorola Moto G (2. -

Smartphone Compatible Dimensions (In ) Acer Liquid E3 Plus Yes 138.0 X

Smartphone Compatible Dimensions (in ) Acer Liquid E3 plus yes 138.0 x 69.0 x 8.9 Acer Liquid yesde plus yes 140.0 x 69.0 x 7.5 Acer Liquid Zest no 134.6 x 67.4 x 10.3 Alcatel 3C no 161.0 x 76.0 x 7.9 Alcatel A3 no 142.0 x 70.5 x 8.6 Alcatel A3 XL no 165.0 x 82.5 x 7.9 Alcatel A5 LED no 146.0 x 72.1 x 7.7 Alcatel A7 no 152.7 x 76.5 x 9.0 Alcatel A7 XL no 159.6 x 81.5 x 8.7 Alcatel Idol 4 yes 147.0 x 72.5 x 7.1 Alcatel Idol 4 yes 147.0 x 72.5 x 7.1 Alcatel Idol 4s no 153.9 x 75.4 x 7.0 Alcatel Idol 4S no 153.9 x 75.4 x 7.0 Alcatel Idol 5 yes 148.0 x 73.0 x 7.5 Alcatel Idol 5S no 148.6 x 72.0 x 7.5 Alcatel Pop 4 yes 140.7 x 71.4 x 8.0 Alcatel Pop 4+ no 151.0 x 77.0 x 8.0 Alcatel Pop 4S no 152.0 x 76.8 x 8.0 Alcatel Shine Lite no 141.5 x 71.2 x 7.5 Apple iPhone 4 yes 115.2 x 58.6 x 9.3 Apple iPhone 4s yes 115.2 x 58.6 x 9.3 Apple iPhone 5 yes 123.8 x 58.6 x 7.6 Apple iPhone 5c yes 124.4 x 59.2 x 9.0 Apple iPhone 5s yes 123.8 x 58.6 x 7.6 Apple iPhone 6 yes 138.1 x 67.0 x 6.9 Apple iPhone 6 Plus no 158.1 x 77.8 x 7.1 Apple iPhone 7 yes 138.3 x 67.1 x 7.1 Apple iPhone 7 Plus no 158.2 x 77.9 x 7.3 Apple iPhone 8 yes 138.4 x 67.3 x 7.3 Apple iPhone 8 Plus no 158.4 x 78.1 x 7.5 Apple iPhone SE yes 123.8 x 58.6 x 7.6 Apple iPhone X yes 143.6 x 70.9 x 7.7 Archos 50D Oxygen no 141.4 x 70.8 x 8.2 Archos Diamond 2 Note no 156.4 x 82.7 x 8.7 Archos Diamond 2 Plus no 148.3 x 73.8 x 8.3 Asus Zenfone Go no 144.5 x 71.0 x 10.0 Blackberry DTEK50 yes 147.0 x 72.5 x 7.4 Blackberry DTEK60 no 153.9 x 75.4 x 7.0 Blackberry Key One yes 149.1 x 72.4 x 9.4 Blackberry