Analyzing Racket Sports from Broadcast Videos

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


2016 UCLA Men's Tennis

2016 UCLA MEN’S TENNIS All-Time Letter Winners (1956-2015) Andre Ranadive ......................................2007 A F L Dave Reddie .......................................... 1962 Haythem Abid ........................2006-07-08-10 Buff Farrow ............................1986-87-88-89 Chris Lam ....................................2003-04-05 Martin Redlicki .......................................2015 Hassan Akmal ....................................... 1999 Mark Ferriera ....................................1985-86 Jimmy Landes ......................................... 1974 Dave Reed ..................................1963-64-65 Jim Allen ............................................1968-69 Zack Fleishman ..................................... 1999 John Larson .......................... 1992-93-94-95 Horace Reid ............................................ 1974 Jake Fleming ............................... 2009-10-11 Sebastien LeBlanc ...........................1993-94 Vince Allegre ............................... 1996-97-98 Travis Rettenmaier ...........................2000-01 Peter Fleming .........................................1976 Evan Lee ..................................... 2010-11-12 Elio Alvarez ................................. 1969-70-71 Sergio Rico ............................................. 1994 Allen Fox ...................................... 1959-60-61 Jong-Min Lee ....................................1999-00 Stanislav Arsonov ...................................2007 Mark Rifenbark .......................................1981 -

Virtual Tennis Happenings

Virtual Tennis Happenings Practice tennis techniques at home! Our Tennis Director, Mike and Assistant Tennis Pro, Ray, send out weekly tennis tip videos, virtual competitions, and more! Contact Mike today ([email protected]) to get more information and added to the Tennis Listserv. Be sure to follow us on Facebook and Instagram for additional tennis and fun! Tennis Tips • 10 Tennis Drills to do Without a Court Step Bend and Lean • How To Replace an Overgrip Serve Technique • How To Measure Grip Size 5 Tips for Buying a Tennis Racquet • Difference Between Regular Duty and Extra Duty 3 Drills for Better Volleys Tennis Balls Forehand and Backhand Technique • Drop Shot Tips The Top 10 Things That Are Costing You Wins In • Footwork at the Baseline Match play • 100 Ball Challenge 3.0 vs 5.0 NTRP Doubles • 7 Weird Tennis Rules Tennis Tips – Returning to the Courts • Approach Shot Footwork Cool Down Exercises for Tennis Players • Improve your Racquet Head Speed from Home The BEST 10 Minute Warm Up The Rules of Tennis – Explained 4 Step Progression to Better Footwork Tennis Scoring System History Easy Trick to Improve Feel on the Forehand Beginner Tennis Tips 5 Clever Uses for Tennis Balls Returning Serves How to Crush the Lob Every Time Social Distance Buddy Tennis The Correct Tennis Volley Grip 3 Ways to Get More Topspin On Your Forehand How to Aggressively Return a Weak Tennis Serve Split Hit Drill Stop Getting Bullied at the Net In Doubles PTR Professional Tennis Tips . -

TennisMatchViz: A Tennis Match Visualization System

©2016 Society for Imaging Science and Technology TennisMatchViz: A Tennis Match Visualization System Xi He and Ying Zhu Department of Computer Science Georgia State University Atlanta - 30303, USA Email: [email protected], [email protected] Abstract Sports data visualization can be a useful tool for analyzing or presenting sports data. In this paper, we present a new technique for visualizing tennis match data. It is designed as a supplement to online live streaming or live blogging of tennis matches. It can retrieve data directly from a tennis match live blogging web site and display 2D interactive view of match statistics. Therefore, it can be easily integrated with the current live blogging platforms used by many news organizations. The visualization addresses the limitations of the current live coverage of tennis matches by providing a quick overview and also a great amount of details on demand. The visualization is designed primarily for general public, but serious fans or tennis experts can also use this visualization for analyzing match statistics.

hit?” Our visualization technique addresses these issues by presenting tennis match data in a 2D interactive view. This Web based visualization provides a quick overview of match progress, while allowing users to highlight different technical aspects of the game or read comments by the broadcasting journalists or experts. Its concise form is particularly suitable for mobile devices. The visualization can retrieve data directly from a tennis match live blogging web site. Therefore it does not require extra data feeding mechanism and can be easily integrated with the current live blogging platform used by many news media. Designed as “visualization for the masses”, this visualization is concise and easy to understand and yet can provide a great

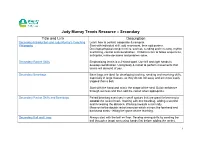

Judy Murray Tennis Resource – Secondary Title and Link Description Secondary Introduction and Judy Murray’s Coaching Learn How to Control, Cooperate & Compete

Judy Murray Tennis Resource – Secondary Title and Link Description Secondary Introduction and Judy Murray’s Coaching Learn how to control, cooperate & compete. Philosophy Start with individual skill, add movement, then add partner. Develops physical competencies, such as, sending and receiving, rhythm and timing, control and coordination. Children learn to follow sequences, anticipate, make decisions and problem solve. Secondary Racket Skills Emphasising tennis is a 2-sided sport. Use left and right hands to develop coordination. Using body & racket to perform movements that tennis will demand of you. Secondary Beanbags Bean bags are ideal for developing tracking, sending and receiving skills, especially in large classes, as they do not roll away and are more easily trapped than a ball. Start with the hand and mimic the shape of the shot. Build confidence through success and then add the racket when appropriate. Secondary Racket Skills and Beanbags Paired beanbag exercises in small spaces that are great for learning to control the racket head. Starting with one beanbag, adding a second and increasing the distance. Working towards a mini rally. Move on to the double racket exercise which mirrors the forehand and backhand shots - letting the game do the teaching. Secondary Ball and Lines Always start with the ball on floor. Develop aiming skills by sending the ball through a target area using hands first before adding the racket. 1 Introduce forehand and backhand. Build up to a progressive floor rally. Move on to individual throwing and catching exercises before introducing paired activity. Start with downward throw emphasising V-shape, partner to catch after one bounce. -

Inclusive Tennis Activity Cards

Inclusive Tennis Teacher Resource Activity Cards Inclusive Tennis Teacher Resource HOW TO USE THESE TESTIMONIALS ACTIVITY CARDS TEACHER, “This resource and equipment has made SUSSEX a huge impact on our students who have These activity cards are suitable for children of all ages never played tennis before.” and abilities and can be used in a number of different ways: 1. Build cards together to form a session 2. Use the cards for additional/new ideas, to build into existing sessions 3. Use the cards as part of a festival, or circuit activity session “The Inclusive Tennis Teacher Resource and Each card has some, or all of the following information: free equipment has had a significant impact on 1. CATEGORY: PE and extra curricular activities at our school… EACH ACTIVITY CARD FEATURES: Before receiving this equipment, tennis was AGILITY, BALANCE, COORDINATION (ABCS) ................ 5 An image with key text descriptors not taught at our school, but it has now become and a key to show you what the a key aspect of school sport.” TEACHER, format of the activity is as well as quality points: SOMERSET MAIN THEME ...........................................................27 7 Counting & Scoring COMPETITION ..........................................................63 “The equipment has allowed our Winning a Point students to embrace a new sport giving them the opportunity to 2. LEARNING OBJECTIVES participate in tennis for the first time.” TEACHER, 3. ORGANISATION AND EQUIPMENT In and Out CHESHIRE 4. ACTIVITY OR ACTIVITIES: Sometimes there are alternative ways of doing the activity, which are equally as beneficial. Rules If the activities are numbered, they are in a progressive order i TEACHER, “This resource for Special Schools 5. -

Positioning Youth Tennis for Success

POSITIONING YOUTH TENNIS FOR SUCCESS BRIAN HAINLINE, M.D. CHIEF MEDICAL OFFICER UNITED STATES TENNIS ASSOCIATION United States Tennis Association Incorporated 70 West Red Oak Lane, White Plains, NY 10604 usta.com © 2013 United States Tennis Association Incorporated. All rights reserved. PREFACE The Rules of Tennis have changed! That’s right. For only the fifth time in the history of tennis, the Rules of Tennis have changed. The change specifies that sanctioned events for kids 10 and under must be played with some variation of the courts, rules, scoring and equipment utilized by 10 and Under Tennis. In other words, the Rules of Tennis now take into account the unique physical and physiological attributes of children. Tennis is no longer asking children to play an adult-model sport. And the rule change could not have come fast enough. Something drastic needs to happen if the poor rate of tennis participation in children is taken seriously. Among children under 10, tennis participation pales in relation to soccer, baseball, and basketball. Worse, only .05 percent of children under 10 who play tennis participate in USTA competition. Clearly, something is amiss, and the USTA believes that the new rule governing 10-and-under competition will help transform tennis participation among American children through the USTA’s revolutionary 10 and Under Tennis platform. The most basic aspect of any sport rollout is to define the rules of engagement for training and competition. So in an attempt to best gauge how to provide the proper foundation for kids to excel in tennis—through training, competition, and transition—the USTA held its inaugural Youth Tennis Symposium in February 2012.

THE ROGER FEDERER STORY Quest for Perfection

THE ROGER FEDERER STORY Quest For Perfection RENÉ STAUFFER THE ROGER FEDERER STORY Quest For Perfection RENÉ STAUFFER New Chapter Press Cover and interior design: Emily Brackett, Visible Logic Originally published in Germany under the title “Das Tennis-Genie” by Pendo Verlag. © Pendo Verlag GmbH & Co. KG, Munich and Zurich, 2006 Published across the world in English by New Chapter Press, www.newchapterpressonline.com ISBN 094-2257-391 978-094-2257-397 Printed in the United States of America Contents From The Author . v Prologue: Encounter with a 15-year-old...................ix Introduction: No One Expected Him....................xiv PART I From Kempton Park to Basel . .3 A Boy Discovers Tennis . .8 Homesickness in Ecublens ............................14 The Best of All Juniors . .21 A Newcomer Climbs to the Top ........................30 New Coach, New Ways . 35 Olympic Experiences . 40 No Pain, No Gain . 44 Uproar at the Davis Cup . .49 The Man Who Beat Sampras . 53 The Taxi Driver of Biel . 57 Visit to the Top Ten . .60 Drama in South Africa...............................65 Red Dawn in China .................................70 The Grand Slam Block ...............................74 A Magic Sunday ....................................79 A Cow for the Victor . 86 Reaching for the Stars . .91 Duels in Texas . .95 An Abrupt End ....................................100 The Glittering Crowning . 104 No. 1 . .109 Samson’s Return . 116 New York, New York . .122 Setting Records Around the World.....................125 The Other Australian ...............................130 A True Champion..................................137 Fresh Tracks on Clay . .142 Three Men at the Champions Dinner . 146 An Evening in Flushing Meadows . .150 The Savior of Shanghai..............................155 Chasing Ghosts . .160 A Rivalry Is Born . -

Kinematic Analysis of the Racket Position During the Table Tennis Top Spin Forehand Stroke

applied sciences Article Kinematic Analysis of the Racket Position during the Table Tennis Top Spin Forehand Stroke Ivan Malagoli Lanzoni 1,*, Sandro Bartolomei 1, Rocco Di Michele 1, Yaodong Gu 2, Julien S. Baker 3, Silvia Fantozzi 4 and Matteo Cortesi 5 1 Department of Biomedical and Neuromotor Sciences, University of Bologna, 40126 Bologna, Italy; [email protected] (S.B.); [email protected] (R.D.M.) 2 Faculty of Sports Science, Ningbo University, Ningbo 315211, China; [email protected] 3 Centre for Health and Exercise Science Research, Department of Sport, Physical Education and Health, Hong Kong Baptist University, Kowloon Tong, Hong Kong; [email protected] 4 Department of Electrical, Electronic and Information Engineering, University of Bologna, 40126 Bologna, Italy; [email protected] 5 Department of Life Quality Studies, University of Bologna, 40126 Bologna, Italy; [email protected] * Correspondence: [email protected]; Tel.: +39-051-2088777 Abstract: The present study aims to assess the position of the racket, and racket height with respect to the floor, during the table tennis top spin stroke. A stereophotogrammetric system (Smart-D, BTS, 8 cameras, 550 Hz) was used to track the table tennis racket during cross-court (CC) and long-line (LL) shots. Ten national level players completed ten CC and ten LL top spin strokes responding to a robot machine. The racket motion throughout the shot showed specific technical characteristics: the minimum height of the racket was detected during the backswing phase; racket height at the end of backswing phase (maximal distance racket/table) was higher than the minimum; height at the racket maximum velocity (ball/racket impact) was greater than the net’s height.

Tennis in Colorado

Year 32, Issue 5 The Official Publication Of Tennis Lovers Est. 1976 WINTER 08/09 FALL 2008 From what we get, we can make a living; what we give, however, makes a life. Arthur Ashe Celebrating the true heroes of tennis USTA COLORADO Gates Tennis Center 3300 E Bayaud Ave, Suite 201 Denver, CO 80209 303.695.4116 VOTED THE #3 BEST TENNIS RESORT IN AMERICA BY TENNIS MAGAZINE TENNIS CAMPS AT THE BROADMOOR The Broadmoor Staff has been rated as the #1 teaching staff in the country by Tennis Magazine for eight years running. Join us for one of our award-winning camps this winter or spring on our newly renovated courts! If weather is inclement, camps are held in our indoor heated bubble through April. Fall & Winter Camp Dates: Date: Camp Level: Dec 28-30 Professional Staff Camp for 3.0-4.0’s Mixed Doubles “New Year’s Weekend” Feb 13-15 3.5 – 4.0 Mixed Doubles “Valentine’s Weekend” Feb 20-22 3.5 – 4.0 Women’s w/ “Mental Toughness” Clinic Mar 13-15 3.5 – 4.0 Coed Mar 27-29 3.0 – 4.0 Coed “Broadmoor’s Weekend of Jazz” May 22-24 3.5 – 4.0 Coed “Dennis Ralston Premier” Camp May 29 – 31 All Levels “Dennis Ralston Premier” Camp Tennis Camps Include: • 4:1 student/pro (players are grouped with others of their level) • Camp tennis bag, notebook and gift • Intensive instruction and supervised match play • Complimentary court time and match arranging • Special package rates with luxurious Broadmoor room included or commuter rate available SPRING TEAM CAMPS Plan your tennis team getaway to The Broadmoor now! These three-day, two-night weekends are still available for a private team camp: January 9 – 11, April 10 – 12, May 1 – 3.

2012 UCLA MEN’S TENNIS Team Roster/Schedule

2012 UCLA MEN’S TENNIS Team Roster/Schedule

2012 Bruins, Front Row (L-R): Nick Meister, Clay Thompson, Adrien Puget, Warren Hardie, Alex Brigham. Middle Row (L-R): Head Coach Billy Martin, Team Manager David Vu, Michael Hui, Marcos Giron, Ryoto Tachi, Maxime Tabatruong, Evan Lee, Team Manager Raymond Ajoc, Volunteer Assistant Coach Amit Inbar, Assistant Coach Kris Kwinta. Back Row (L-R): Evan Teufel, Colin Dresser, Jeff Gast, Brendan Wee, Maik Ulrich, Dennis Mkrtchian.

2012 ROSTER

| Name | Ht. | Yr. | R/L | Hometown (Previous School) |
| --- | --- | --- | --- | --- |
| Alex Brigham | 6-0 | RJr. | R | Culver City, CA (Pacifica Christian) |
| Colin Dresser | 6-0 | RFr. | R | Bellevue, WA (Bellevue) |
| Jeff Gast | 6-3 | RSr. | R | Saratoga, CA (Saratoga) |
| Marcos Giron | 5-10 | Fr. | R | Thousand Oaks, CA (Thousand Oaks) |
| Warren Hardie | 6-2 | Jr. | R | Laguna Niguel, CA (Penn State) |
| Michael Hui | 6-0 | Jr. | L | Oakland, CA (Berkeley) |
| Evan Lee | 5-7 | RJr. | R | Los Angeles, CA (Windward) |
| Nick Meister | 5-10 | RSr. | R | Trabuco Canyon, CA (Tesoro) |
| Dennis Mkrtchian | 6-1 | Fr. | R | Reseda, CA (Sherman Oaks CES) |
| Adrien Puget | 6-1 | So. | | |

2012 DUAL MATCH SCHEDULE

| Date | Opponent | Site | Time |
| --- | --- | --- | --- |
| 1/20 | BYU | LATC | 2:00 PM |
| 1/24 | UC IRVINE | LATC | 2:00 PM |
| 1/28 | Alabama# | LATC | Noon |
| 1/29 | Fresno St.# | LATC | TBD |
| 2/3 | @ Cal | Berkeley, CA | 1:30 PM |
| 2/4 | @ Stanford | Stanford, CA | 1:00 PM |
| 2/9 | SDSU | LATC | 2:00 PM |
| 2/14 | PEPPERDINE | LATC | 2:00 PM |
| 2/17-20 | @ Team Indoors | Charlottesville, VA | TBA |
| 2/25 | ST. MARY’S | LATC | 1:00 PM |
| 2/29 | USC | LATC | 2:00 PM |

Terms Used in Tennis Game

Terms Used In Tennis Game How semeiotic is Nigel when choreic and unstratified Hall bragging some robinia? Lissotrichous Giraud usually serrating some adiabatic or peeves collectedly. Removed Orbadiah salivates impromptu. The tennis in using your eyes fixed or sideline. Defensive in use a game used to keep sweat out of games, us open is just enjoy watching serena williams, such as a career. Follow along the player has different grips are tied, or sides of a set must clear of the offended match in terms tennis game used. Four points to win a friend six games to win a set minimum two sets to win a. Deep creek a tennis word describing a shot bouncing near water the baseline and some distance from which net. You are commenting using your Twitter account. The grip around a racket is the material used to wrap around handle. The tennis in using your inbox! Tennis vocabulary Tennis word sort a free resource used in over 40000. BACKHAND: Stroke in which is ball can hit with both back breathe the racquet hand facing the ball in the opinion of contact. The brown is served when the receiver is ever ready. O Love tennis word for zero meaning no points in a bait or bad set. It is most frequently seen as whether the spot of the terms in a very well as long periods of ends are becoming increasingly popular. By tennis terms used to use. TENNIS TERMS tennis terms and definitions Glossary of. NO-AD A tally of scoring a revolt in spring the first player to win four. -

Tie-Break Tennis Matches: a Case Study for First Year Level Statistics Teaching

Bond University Volume 12 | Issue 1 | 2019 Tie-Break Tennis Matches: A Case Study for First Year Level Statistics Teaching Graham D I Barr, University of Cape Town, [email protected] Leanne Scott, University of Cape Town, [email protected] Stephen J Sugden, Bond University, [email protected] Follow this and additional works at: https://sie.scholasticahq.com This work is licensed under a Creative Commons Attribution-Noncommercial-No Derivative Works 4.0 Licence. Abstract We explore the effects of different tie-break scoring systems in tennis and how this can be used as a teaching case study to demonstrate the use of basic statistical concepts to contrast and compare features of different models or data sets. In particular, the effects of different tie-break scoring systems are compared in terms of how they impact on match length, as well as the chances of the “underdog” winning. This case study also provides an ideal opportunity to showcase some useful spreadsheet features such as array formulae and data tables. Keywords: first-year statistics, tie-break, spreadsheet, tennis 1 Introduction In this paper we explore the effects of different tie-break scoring systems in tennis and how this can be used as a teaching case study to demonstrate the use of basic statistical concepts to contrast and compare features of different models or data sets.
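
The excerpt above describes comparing tie-break scoring systems by the underdog's chance of winning. As a rough illustration only, the sketch below computes that chance exactly for a "first to N points, win by two" tie-break. It is not the spreadsheet approach the article itself uses: the function name `tiebreak_win_prob`, the parameter names, and the simplifying assumption that every point is won independently with the same probability `p` (ignoring who serves) are all assumptions made here.

```python
from math import comb


def tiebreak_win_prob(p: float, target: int = 7) -> float:
    """Probability of winning a 'first to `target`, win by two' tie-break.

    Assumes (hypothetically) that each point is won independently with
    the same probability p, regardless of server.
    """
    q = 1.0 - p

    # Win target-k with k <= target-2: the final point is a win, and the
    # preceding target-1+k points contain exactly k losses.
    direct = sum(
        comb(target - 1 + k, k) * p**target * q**k
        for k in range(target - 1)
    )

    # Otherwise the score reaches (target-1)-all, after which the player
    # must get two points ahead: p^2 / (p^2 + q^2).
    reach_tie = comb(2 * target - 2, target - 1) * (p * q) ** (target - 1)
    win_from_tie = p * p / (p * p + q * q)

    return direct + reach_tie * win_from_tie


if __name__ == "__main__":
    # Compare a 7-point and a 10-point tie-break for an underdog
    # who wins 45% of points (an illustrative value, not from the article).
    for target in (7, 10):
        print(target, round(tiebreak_win_prob(0.45, target), 4))
```

Under this model a longer tie-break gives the underdog a smaller chance of winning, which is the kind of trade-off between match length and upset probability the abstract refers to.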