Predicting the Focus of Negation: Model and Error Analysis

Total Pages: 16

File Type: PDF, Size: 1020Kb


Recommended publications


Animacy and Alienability: a Reconsideration of English

Running head: ANIMACY AND ALIENABILITY. Animacy and Alienability: A Reconsideration of English Possession. Jaimee Jones. A Senior Thesis submitted in partial fulfillment of the requirements for graduation in the Honors Program, Liberty University, Spring 2016. Acceptance of Senior Honors Thesis: This Senior Honors Thesis is accepted in partial fulfillment of the requirements for graduation from the Honors Program of Liberty University. Jaeshil Kim, Ph.D., Thesis Chair; Paul Müller, Ph.D., Committee Member; Jeffrey Ritchey, Ph.D., Committee Member; Brenda Ayres, Ph.D., Honors Director. Abstract: Current scholarship on English possessive constructions, the s-genitive and the of-construction, largely ignores the possessive relationships inherent in certain English compound nouns. Scholars agree that, in general, an animate possessor predicts the s-genitive while an inanimate possessor predicts the of-construction. However, the current literature rarely discusses noun compounds, such as the table leg, which also express possessive relationships. However, pragmatically and syntactically, a compound cannot be considered as a true possessive construction. Thus, this paper will examine why some compounds still display possessive semantics epiphenomenally. The noun compounds that imply possession seem to exhibit relationships prototypical of inalienable possession such as body part, part whole, and spatial relationships. Additionally, the juxtaposition of the possessor and possessum in the compound construction is reminiscent of inalienable possession in other languages. Therefore, this paper proposes that inalienability, a phenomenon not thought to be relevant in English, actually imbues noun compounds whose components exhibit an inalienable relationship with possessive semantics.

Logophoricity in Finnish

Open Linguistics 2018; 4: 630–656 Research Article Elsi Kaiser* Effects of perspective-taking on pronominal reference to humans and animals: Logophoricity in Finnish https://doi.org/10.1515/opli-2018-0031 Received December 19, 2017; accepted August 28, 2018 Abstract: This paper investigates the logophoric pronoun system of Finnish, with a focus on reference to animals, to further our understanding of the linguistic representation of non-human animals, how perspective-taking is signaled linguistically, and how this relates to features such as [+/-HUMAN]. In contexts where animals are grammatically [-HUMAN] but conceptualized as the perspectival center (whose thoughts, speech or mental state is being reported), can they be referred to with logophoric pronouns? Colloquial Finnish is claimed to have a logophoric pronoun which has the same form as the human-referring pronoun of standard Finnish, hän (she/he). This allows us to test whether a pronoun that may at first blush seem featurally specified to seek [+HUMAN] referents can be used for [-HUMAN] referents when they are logophoric. I used corpus data to compare the claim that hän is logophoric in both standard and colloquial Finnish vs. the claim that the two registers have different logophoric systems. I argue for a unified system where hän is logophoric in both registers, and moreover can be used for logophoric [-HUMAN] referents in both colloquial and standard Finnish. Thus, on its logophoric use, hän does not require its referent to be [+HUMAN]. Keywords: Finnish, logophoric pronouns, logophoricity, anti-logophoricity, animacy, non-human animals, perspective-taking, corpus 1 Introduction A key aspect of being human is our ability to think and reason about our own mental states as well as those of others, and to recognize that others’ perspectives, knowledge or mental states are distinct from our own, an ability known as Theory of Mind (term due to Premack & Woodruff 1978).

Romani Syntactic Typology Evangelia Adamou, Yaron Matras

Romani Syntactic Typology Evangelia Adamou, Yaron Matras To cite this version: Evangelia Adamou, Yaron Matras. Romani Syntactic Typology. Yaron Matras; Anton Tenser. The Palgrave Handbook of Romani Language and Linguistics, Springer, pp.187-227, 2020, 978-3-030-28104-5. 10.1007/978-3-030-28105-2_7. halshs-02965238 HAL Id: halshs-02965238 https://halshs.archives-ouvertes.fr/halshs-02965238 Submitted on 13 Oct 2020 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Romani syntactic typology Evangelia Adamou and Yaron Matras 1. State of the art This chapter presents an overview of the principal syntactic-typological features of Romani dialects. It draws on the discussion in Matras (2002, chapter 7) while taking into consideration more recent studies. In particular, we draw on the wealth of morpho-syntactic data that have since become available via the Romani Morpho-Syntax (RMS) database.1 The RMS data are based on responses to the Romani Morpho-Syntax questionnaire recorded from Romani speaking communities across Europe and beyond. We try to take into account a representative sample. We also take into consideration data from free-speech recordings available in the RMS database and the Pangloss Collection.

The Term Declension, the Three Basic Qualities of Latin Nouns, That

Chapter 2: First Declension Chapter 2 covers the following: the term declension, the three basic qualities of Latin nouns, that is, case, number and gender, basic sentence structure, subject, verb, direct object and so on, the six cases of Latin nouns and the uses of those cases, the formation of the different cases in Latin, and the way adjectives agree with nouns. At the end of this lesson we’ll review the vocabulary you should memorize in this chapter. Declension. As with conjugation, the term declension has two meanings in Latin. It means, first, the process of joining a case ending onto a noun base. Second, it is a term used to refer to one of the five categories of nouns distinguished by the sound ending the noun base: /a/, /ŏ/ or /ŭ/, a consonant or /ĭ/, /ū/, /ē/. First, let’s look at the three basic characteristics of every Latin noun: case, number and gender. All Latin nouns and adjectives have these three grammatical qualities. First, case: how the noun functions in a sentence, that is, is it the subject, the direct object, the object of a preposition or any of many other uses? Second, number: singular or plural. And third, gender: masculine, feminine or neuter. Every noun in Latin will have one case, one number and one gender, and only one of each of these qualities. In other words, a noun in a sentence cannot be both singular and plural, or masculine and feminine. Whenever asked, and I will ask, you should be able to give the correct answer for all three qualities.

Minimal Pronouns, Logophoricity and Long-Distance Reflexivisation in Avar

Minimal pronouns, logophoricity and long-distance reflexivisation in Avar* Pavel Rudnev Revised version; 28th January 2015 Abstract This paper discusses two morphologically related anaphoric pronouns in Avar (Avar-Andic, Nakh-Daghestanian) and proposes that one of them should be treated as a minimal pronoun that receives its interpretation from a λ-operator situated on a phasal head whereas the other is a logophoric pronoun denoting the author of the reported event. Keywords: reflexivity, logophoricity, binding, syntax, semantics, Avar 1 Introduction This paper has two aims. One is to make a descriptive contribution to the crosslinguistic study of long-distance anaphoric dependencies by presenting an overview of the properties of two kinds of reflexive pronoun in Avar, a Nakh-Daghestanian language spoken natively by about 700,000 people mostly living in the North East Caucasian republic of Daghestan in the Russian Federation. The other goal is to highlight the relevance of the newly introduced data from an understudied language to the theoretical debate on the nature of reflexivity, long-distance anaphora and logophoricity. The issue at the heart of this paper is the unusual character of the anaphoric system in Avar, which is tripartite. (1) is intended as just a preview with more *The present material was presented at the Utrecht workshop The World of Reflexives in August 2011. I am grateful to the workshop’s audience and participants for their questions and comments. I am indebted to Eric Reuland and an anonymous reviewer for providing valuable feedback on the first draft, as well as to Yakov Testelets for numerous discussions of anaphora-related issues in Avar spanning several years.

Chapter 6 Mirativity and the Bulgarian Evidential System Elena Karagjosova Freie Universität Berlin

Chapter 6 Mirativity and the Bulgarian evidential system Elena Karagjosova Freie Universität Berlin This paper provides an account of the Bulgarian admirative construction and its place within the Bulgarian evidential system based on (i) new observations on the morphological, temporal, and evidential properties of the admirative, (ii) a critical reexamination of existing approaches to the Bulgarian evidential system, and (iii) insights from a similar mirative construction in Spanish. I argue in particular that admirative sentences are assertions based on evidence of some sort (reportative, inferential, or direct) which are contrasted against the set of beliefs held by the speaker up to the point of receiving the evidence; the speaker’s past beliefs entail a proposition that clashes with the assertion, triggering belief revision and resulting in a sense of surprise. I suggest an analysis of the admirative in terms of a mirative operator that captures the evidential, temporal, aspectual, and modal properties of the construction in a compositional fashion. The analysis suggests that although mirativity and evidentiality can be seen as separate semantic categories, the Bulgarian admirative represents a cross-linguistically relevant case of a mirative extension of evidential verbal forms. Keywords: mirativity, evidentiality, fake past 1 Introduction The Bulgarian evidential system is an ongoing topic of discussion both with respect to its interpretation and its morphological buildup. In this paper, I focus on the currently poorly understood admirative construction. The analysis I present is based on largely unacknowledged observations and data involving the morphological structure, the syntactic environment, and the evidential meaning of the admirative. Elena Karagjosova.

On the Grammar of Negation

Chapter 2 On the Grammar of Negation 2.1 Functional Perspectives on Negation Negation can be described from several different points of view, and various descriptions may reach diverging conclusions as to whether a proposition is negative or not. A question without a negated predicate, for example, may be interpreted as negative from a pragmatic point of view (rhetorical question), while a clause with constituent negation, e.g. The soul is non-mortal, is an affirmative predication from an orthodox Aristotelian point of view.1 However, common to most if not all descriptions is that negation is generally approached from the viewpoint of affirmation.2 With regard to the formal expression, “the negative always receives overt expression while positive usually has zero expression” (Greenberg 1966: 50). Furthermore, the negative morpheme tends to come as close to the finite element of the clause as possible (Dahl 1979: 92). The reason seems to be that negation is intimately connected with finiteness. This relationship will be further explored in section 2.2. To be sure, negation of the predicate, or verb phrase negation, is the most common type of negation in natural language (Givón 2001, 1: 382). Generally, verb phrase negation only negates the asserted and not the presupposed part of the corresponding affirmation. Typically then, the subject of the predication is excluded from negation. This type of negation, in its unmarked form, has wide scope over the predicate, such that the scope of negation in a clause John didn’t kill the goat may be paraphrased as ‘he did not kill the goat.’ However, while in propositional logic, negation is an operation that simply reverses the logical value of a proposition, negative clauses in natural language rarely reverse the logical value only.

Serial Verb Constructions Revisited: a Case Study from Koro

Serial Verb Constructions Revisited: A Case Study from Koro By Jessica Cleary-Kemp A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Linguistics in the Graduate Division of the University of California, Berkeley Committee in charge: Associate Professor Lev D. Michael, Chair Assistant Professor Peter S. Jenks Professor William F. Hanks Summer 2015 © Copyright by Jessica Cleary-Kemp All Rights Reserved Abstract Serial Verb Constructions Revisited: A Case Study from Koro by Jessica Cleary-Kemp Doctor of Philosophy in Linguistics University of California, Berkeley Associate Professor Lev D. Michael, Chair In this dissertation a methodology for identifying and analyzing serial verb constructions (SVCs) is developed, and its application is exemplified through an analysis of SVCs in Koro, an Oceanic language of Papua New Guinea. SVCs involve two main verbs that form a single predicate and share at least one of their arguments. In addition, they have shared values for tense, aspect, and mood, and they denote a single event. The unique syntactic and semantic properties of SVCs present a number of theoretical challenges, and thus they have invited great interest from syntacticians and typologists alike. But characterizing the nature of SVCs and making generalizations about the typology of serializing languages has proven difficult. There is still debate about both the surface properties of SVCs and their underlying syntactic structure. The current work addresses some of these issues by approaching serialization from two angles: the typological and the language-specific. On the typological front, it refines the definition of ‘SVC’ and develops a principled set of cross-linguistically applicable diagnostics.

The Content of Pronouns: Evidence from Focus

The Content of Pronouns: Evidence from Focus Uli Sauerland Tübingen University This paper is part of an effort to learn something about the semantics of pronominals from their focus properties. At present, I believe to have evidence substantiating the following two claims: Claim 1 is that Bound Pronominals can be hidden definite descriptions, but probably need not necessarily be. Claim 2 is that donkey anaphora must be hidden definite descriptions. The evidence for Claim 1 comes from a new analysis of cases with focus on bound pronouns such as (1) that also featured in earlier work of mine (Sauerland 1998, 1999). (1) On Monday, every boy called his mother. On TUESday, every TEAcher called his/HIS mother. In particular, I show parallels of focus on bound pronouns and bound definite descriptions (epithets). The evidence for Claim 2 comes from cases like (2) where focus seems to be obligatory. (2) a. Every girl who came by car parked it in the lot. b. Every girl who came by bike parked #it/IT in the lot. 1 Bound Pronouns can Differ in Meaning 1.1 Necessary Condition for the Licensing of Focus The results of this paper are compatible with Schwarzschild's (1999) theory of focus. For convenience, however, I use the licensing condition in (3). This is only a necessary condition, not a sufficient condition for focus licensing. Schwarzschild's (1999) theory entails that condition (3) is necessarily fulfilled for a focussed XP unless there's no YP dominating XP or the YP immediately dominating XP is also focussed. (3) A focus on XP is licensed only if there are a Focus Domain constituent FD dominating XP and a Focus Antecedent constituent FA in the preceding © 2000 by Uli Sauerland Brendan Jackson and Tanya Matthews (eds), SALT X 167-184, Ithaca, NY: Cornell University.

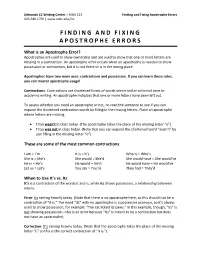

Finding and Fixing Apostrophe Errors

Edmonds CC Writing Center | MUK 113 | 425.640.1750 | www.edcc.edu/lsc FINDING AND FIXING APOSTROPHE ERRORS What is an Apostrophe Error? Apostrophes are used to show ownership and are used to show that one or more letters are missing in a contraction. An apostrophe error occurs when an apostrophe is needed to show possession or contraction, but it is not there or is in the wrong place. Apostrophes have two main uses: contractions and possession. If you can learn these rules, you can master apostrophe usage! Contractions: Contractions are shortened forms of words which add an informal tone to academic writing. An apostrophe indicates that one or more letters have been left out. To assess whether you need an apostrophe or not, re-read the sentence to see if you can expand the shortened contraction words by filling in the missing letters. Place an apostrophe where letters are missing. Thao wasn’t in class today. (The apostrophe takes the place of the missing letter “o”). Thao was not in class today. (Note that you can expand the shortened word “wasn’t” by just filling in the missing letter “o”). These are some of the most common contractions: I am = I’m; It is = It’s; Who is = Who’s; She is = She’s; She would = She’d; She would have = She would’ve; He is = He’s; He would = He’d; He would have = He would’ve; Let us = Let’s; You are = You’re; They had = They’d. When to Use It’s vs.

Journal of Linguistics the Meanings of Focus

Journal of Linguistics http://journals.cambridge.org/LIN The meanings of focus: The significance of an interpretation-based category in cross-linguistic analysis DEJAN MATIĆ and DANIEL WEDGWOOD Journal of Linguistics / FirstView Article / January 2006, pp 1–37 DOI: 10.1017/S0022226712000345, Published online: 31 October 2012 Link to this article: http://journals.cambridge.org/abstract_S0022226712000345 J. Linguistics, Page 1 of 37. © Cambridge University Press 2012 doi:10.1017/S0022226712000345 The meanings of focus: The significance of an interpretation-based category in cross-linguistic analysis1 DEJAN MATIĆ Max Planck Institute for Psycholinguistics, Nijmegen DANIEL WEDGWOOD (Received 16 March 2011; revised 23 August 2012) Focus is regularly treated as a cross-linguistically stable category that is merely manifested by different structural means in different languages, such that a common focus feature may be realised through, for example, a morpheme in one language and syntactic movement in another. We demonstrate this conception of focus to be unsustainable on both theoretical and empirical grounds, invoking fundamental argumentation regarding the notions of focus and linguistic category, alongside data from a wide range of languages.

Tagalog Pala: an Unsurprising Case of Mirativity

Tagalog pala: an unsurprising case of mirativity Scott AnderBois Brown University Similar to many descriptions of miratives cross-linguistically, Schachter & Otanes (1972)’s classic descriptive grammar of Tagalog describes the second position particle pala as “expressing mild surprise at new information, or an unexpected event or situation.” Drawing on recent work on mirativity in other languages, however, we show that this characterization needs to be refined in two ways. First, we show that while pala can be used in cases of surprise, pala itself merely encodes the speaker’s sudden revelation with the counterexpectational nature of surprise arising pragmatically or from other aspects of the sentence such as other particles and focus. Second, we present data from imperatives and interrogatives, arguing that this revelation need not concern ‘information’ per se, but rather the illocutionary update the sentence encodes. Finally, we explore the interactions between pala and other elements which express mirativity in some way and/or interact with the mirativity pala expresses. 1. Introduction Like many languages of the Philippines, Tagalog has a prominent set of discourse particles which express a variety of different evidential, attitudinal, illocutionary, and discourse-related meanings. Morphosyntactically, these particles have long been known to be second-position clitics, with a number of authors having explored fine-grained details of their distribution, relative order, and the interaction of this with different types of sentences (e.g. Schachter & Otanes (1972), Billings & Konopasky (2003), Anderson (2005), Billings (2005), Kaufman (2010)). With a few recent exceptions, however, comparatively little has been said about the semantics/pragmatics of these different elements beyond Schachter & Otanes (1972)’s pioneering work (which is quite detailed given the broad scope of their work).