Web Crawling, Analysis and Archiving

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

CHAPTER 12 Making Your Web Site Mashable

CHAPTER 12 Making Your Web Site Mashable This chapter is a guide to content producers who want to make their web sites friendly to mashups. That is, this chapter answers the question, how would you as a content producer make your digital content most effectively remixable and mashable to users and developers? Most of this book is addressed to creators of mashups who are therefore consumers of data and services. Why then should I shift in this chapter to addressing producers of data and services? Well, you have already seen aspects of APIs and web content that make it either easier or harder to remix, and you’ve seen what makes APIs easy and enjoyable to use. Showing content and data producers what would make life easier for consumers of their content provides useful guidance to service providers who might not be fully aware of what it’s like for consumers. The main audience for the book—as consumers (as opposed to producers) of services—should still find this chapter a helpful distillation of best practices for creating mashups. In some ways, this chapter is a review of Chapters 1–11 and a preview of Chapters 13–19. Chapters 1–11 prepared you for how to create mashups in general. I presented a lot of the technologies and showed how to build a reasonably sophisticated mashup with PHP and JavaScript as well as using mashup tools. Some of the discussion in this chapter will be amplified by in-depth discussions in Chapters 13–19. For example, I’ll refer to topics such as geoRSS, iCalendar, and microformats that I discuss in greater detail in those later chapters. -

Life Sciences and the Web: a New Era for Collaboration

Life Sciences and the web: a new era for collaboration The Harvard community has made this article openly available. Please share how this access benefits you. Your story matters Citation Sagotsky, Jonathan A., Le Zhang, Zhihui Wang, Sean Martin, and Thomas S. Deisboeck. 2008. Life Sciences and the web: a new era for collaboration. Molecular Systems Biology 4: 201. Published Version doi:10.1038/msb.2008.39 Citable link http://nrs.harvard.edu/urn-3:HUL.InstRepos:4874800 Terms of Use This article was downloaded from Harvard University’s DASH repository, and is made available under the terms and conditions applicable to Other Posted Material, as set forth at http:// nrs.harvard.edu/urn-3:HUL.InstRepos:dash.current.terms-of- use#LAA Molecular Systems Biology 4; Article number 201; doi:10.1038/msb.2008.39 Citation: Molecular Systems Biology 4:201 & 2008 EMBO and Nature Publishing Group All rights reserved 1744-4292/08 www.molecularsystemsbiology.com PERSPECTIVE Life Sciences and the web: a new era for collaboration Jonathan A Sagotsky1, Le Zhang1, Zhihui Wang1, Sean Martin2 inaccuracies per article compared to Encyclopedia Britannica’s and Thomas S Deisboeck1,* 2.92 errors. Although measures have been taken to improve the editorial process, accuracy and completeness remain valid 1 Complex Biosystems Modeling Laboratory, Harvard-MIT (HST) Athinoula concerns. Perhaps the issue is one in which it has become A Martinos Center for Biomedical Imaging, Massachusetts General Hospital, difficult to establish exactly what has actually been peer Charlestown, MA, USA and reviewed and what has not, given that the low cost of digital 2 Cambridge Semantics Inc., Cambridge, MA, USA publishing on the web has led to an explosive amount * Corresponding author. -

Building a Scalable Index and a Web Search Engine for Music on the Internet Using Open Source Software

Department of Information Science and Technology Building a Scalable Index and a Web Search Engine for Music on the Internet using Open Source software André Parreira Ricardo Thesis submitted in partial fulfillment of the requirements for the degree of Master in Computer Science and Business Management Advisor: Professor Carlos Serrão, Assistant Professor, ISCTE-IUL September, 2010 Acknowledgments I should say that I feel grateful for doing a thesis linked to music, an art which I love and esteem so much. Therefore, I would like to take a moment to thank all the persons who made my accomplishment possible and hence this is also part of their deed too. To my family, first for having instigated in me the curiosity to read, to know, to think and go further. And secondly for allowing me to continue my studies, providing the environment and the financial means to make it possible. To my classmate André Guerreiro, I would like to thank the invaluable brainstorming, the patience and the help through our college years. To my friend Isabel Silva, who gave me a precious help in the final revision of this document. Everyone in ADETTI-IUL for the time and the attention they gave me. Especially the people over Caixa Mágica, because I truly value the expertise transmitted, which was useful to my thesis and I am sure will also help me during my professional course. To my teacher and MSc. advisor, Professor Carlos Serrão, for embracing my will to master in this area and for being always available to help me when I needed some advice. -

Release Notes - January 2018

11/6/2020 Release Notes - January 2018 Documentation Release Notes Guide Release Notes - January 2018 Release Notes - January 2018 New features and Óxes in the Adobe Experience Cloud. NOTE To be notiÓed about the early release notes, subscribe to the Adobe Priority Product Update. Ïe Priority Product Update is sent three to Óve business days prior to the release. Please check back at release time for updates. New information published a×er the release will be marked with the publication date. Experience Cloud Recipes Use Case Date Published Description First-time Setup October 18, 2017 Ïe Órst-time setup recipe walks you through the steps to get started using Experience Cloud solutions. Email Optimization August 15, 2017 Ïe email marketing use case shows you how to implement an integrated email strategy with analytics, optimization, and campaign management. Mobile App Engagement June 1, 2017 Ïe mobile app engagement use case shows you how to integrate your mobile apps with the Adobe Experience Cloud to measure user engagement and deliver personalized experiences to your audiences. https://experienceleague.adobe.com/docs/release-notes/experience-cloud/previous/legacy-rns/2018/01182018.html?lang=en#previous 1/27 11/6/2020 Release Notes - January 2018 Use Case Date Published Description Digital Foundation May 2017 Ïe Digital Foundation use case helps you implement a digital marketing platform with Analytics, optimization, and campaign management. Customer Intelligence April 2017 Ïe Customer Intelligence use case shows you how to create a uniÓed customer proÓle using multiple data sources, and how to use this proÓle to build actionable audiences. Experience Cloud and Core Services Release notes for the core services interface, including Assets, Feed, NotiÓcations, People core service, Mobile Services, Launch, and Dynamic Tag Management. -

Open Search Environments: the Free Alternative to Commercial Search Services

Open Search Environments: The Free Alternative to Commercial Search Services. Adrian O’Riordan ABSTRACT Open search systems present a free and less restricted alternative to commercial search services. This paper explores the space of open search technology, looking in particular at lightweight search protocols and the issue of interoperability. A description of current protocols and formats for engineering open search applications is presented. The suitability of these technologies and issues around their adoption and operation are discussed. This open search approach is especially useful in applications involving the harvesting of resources and information integration. Principal among the technological solutions are OpenSearch, SRU, and OAI-PMH. OpenSearch and SRU realize a federated model to enable content providers and search clients communicate. Applications that use OpenSearch and SRU are presented. Connections are made with other pertinent technologies such as open-source search software and linking and syndication protocols. The deployment of these freely licensed open standards in web and digital library applications is now a genuine alternative to commercial and proprietary systems. INTRODUCTION Web search has become a prominent part of the Internet experience for millions of users. Companies such as Google and Microsoft offer comprehensive search services to users free with advertisements and sponsored links, the only reminder that these are commercial enterprises. Businesses and developers on the other hand are restricted in how they can use these search services to add search capabilities to their own websites or for developing applications with a search feature. The closed nature of the leading web search technology places barriers in the way of developers who want to incorporate search functionality into applications. -

Efficient Focused Web Crawling Approach for Search Engine

Ayar Pranav et al, International Journal of Computer Science and Mobile Computing, Vol.4 Issue.5, May- 2015, pg. 545-551 Available Online at www.ijcsmc.com International Journal of Computer Science and Mobile Computing A Monthly Journal of Computer Science and Information Technology ISSN 2320–088X IJCSMC, Vol. 4, Issue. 5, May 2015, pg.545 – 551 RESEARCH ARTICLE Efficient Focused Web Crawling Approach for Search Engine 1 2 Ayar Pranav , Sandip Chauhan Computer & Science Engineering, Kalol Institute of Technology and Research Canter, Kalol, Gujarat, India 1 [email protected]; 2 [email protected] Abstract— a focused crawler traverses the web, selecting out relevant pages to a predefined topic and neglecting those out of concern. Collecting domain specific documents using focused crawlers has been considered one of most important strategies to find relevant information. While surfing the internet, it is difficult to deal with irrelevant pages and to predict which links lead to quality pages. However most focused crawler use local search algorithm to traverse the web space, but they could easily trapped within limited a sub graph of the web that surrounds the starting URLs also there is problem related to relevant pages that are miss when no links from the starting URLs. There is some relevant pages are miss. To address this problem we design a focused crawler where calculating the frequency of the topic keyword also calculate the synonyms and sub synonyms of the keyword. The weight table is constructed according to the user query. To check the similarity of web pages with respect to topic keywords and priority of extracted link is calculated. -

Distributed Indexing/Searching Workshop Agenda, Attendee List, and Position Papers

Distributed Indexing/Searching Workshop Agenda, Attendee List, and Position Papers Held May 28-19, 1996 in Cambridge, Massachusetts Sponsored by the World Wide Web Consortium Workshop co-chairs: Michael Schwartz, @Home Network Mic Bowman, Transarc Corp. This workshop brings together a cross-section of people concerned with distributed indexing and searching, to explore areas of common concern where standards might be defined. The Call For Participation1 suggested particular focus on repository interfaces that support efficient and powerful distributed indexing and searching. There was a great deal of interest in this workshop. Because of our desire to limit attendance to a workable size group while maximizing breadth of attendee backgrounds, we limited attendance to one person per position paper, and furthermore we limited attendance to one person per institution. In some cases, attendees submitted multiple position papers, with the intention of discussing each of their projects or ideas that were relevant to the workshop. We had not anticipated this interpretation of the Call For Participation; our intention was to use the position papers to select participants, not to provide a forum for enumerating ideas and projects. As a compromise, we decided to choose among the submitted papers and allow multiple position papers per person and per institution, but to restrict attendance as noted above. Hence, in the paper list below there are some cases where one author or institution has multiple position papers. 1 http://www.w3.org/pub/WWW/Search/960528/cfp.html 1 Agenda The Distributed Indexing/Searching Workshop will span two days. The first day's goal is to identify areas for potential standardization through several directed discussion sessions. -

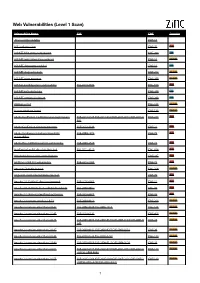

Web Vulnerabilities (Level 1 Scan)

Web Vulnerabilities (Level 1 Scan) Vulnerability Name CVE CWE Severity .htaccess file readable CWE-16 ASP code injection CWE-95 High ASP.NET MVC version disclosure CWE-200 Low ASP.NET application trace enabled CWE-16 Medium ASP.NET debugging enabled CWE-16 Low ASP.NET diagnostic page CWE-200 Medium ASP.NET error message CWE-200 Medium ASP.NET padding oracle vulnerability CVE-2010-3332 CWE-310 High ASP.NET path disclosure CWE-200 Low ASP.NET version disclosure CWE-200 Low AWStats script CWE-538 Medium Access database found CWE-538 Medium Adobe ColdFusion 9 administrative login bypass CVE-2013-0625 CVE-2013-0629CVE-2013-0631 CVE-2013-0 CWE-287 High 632 Adobe ColdFusion directory traversal CVE-2013-3336 CWE-22 High Adobe Coldfusion 8 multiple linked XSS CVE-2009-1872 CWE-79 High vulnerabilies Adobe Flex 3 DOM-based XSS vulnerability CVE-2008-2640 CWE-79 High AjaxControlToolkit directory traversal CVE-2015-4670 CWE-434 High Akeeba backup access control bypass CWE-287 High AmCharts SWF XSS vulnerability CVE-2012-1303 CWE-79 High Amazon S3 public bucket CWE-264 Medium AngularJS client-side template injection CWE-79 High Apache 2.0.39 Win32 directory traversal CVE-2002-0661 CWE-22 High Apache 2.0.43 Win32 file reading vulnerability CVE-2003-0017 CWE-20 High Apache 2.2.14 mod_isapi Dangling Pointer CVE-2010-0425 CWE-20 High Apache 2.x version equal to 2.0.51 CVE-2004-0811 CWE-264 Medium Apache 2.x version older than 2.0.43 CVE-2002-0840 CVE-2002-1156 CWE-538 Medium Apache 2.x version older than 2.0.45 CVE-2003-0132 CWE-400 Medium Apache 2.x version -

Quality Spine Care

Quality Spine Care Healthcare Systems, Quality Reporting, and Risk Adjustment John Ratliff Todd J. Albert Joseph Cheng Jack Knightly Editors 123 Quality Spine Care John Ratliff • Todd J. Albert Joseph Cheng • Jack Knightly Editors Quality Spine Care Healthcare Systems, Quality Reporting, and Risk Adjustment Editors John Ratliff Todd J. Albert Department of Neurosurgery Hospital for Special Surgery Stanford University New York, NY Stanford, CA USA USA Jack Knightly Joseph Cheng Atlantic Neurosurgical Specialists University of Cincinnati Morristown, NJ Cincinnati, OH USA USA ISBN 978-3-319-97989-2 ISBN 978-3-319-97990-8 (eBook) https://doi.org/10.1007/978-3-319-97990-8 Library of Congress Control Number: 2018957706 © Springer Nature Switzerland AG 2019 This work is subject to copyright. All rights are reserved by the Publisher, whether the whole or part of the material is concerned, specifically the rights of translation, reprinting, reuse of illustrations, recitation, broadcasting, reproduction on microfilms or in any other physical way, and transmission or information storage and retrieval, electronic adaptation, computer software, or by similar or dissimilar methodology now known or hereafter developed. The use of general descriptive names, registered names, trademarks, service marks, etc. in this publication does not imply, even in the absence of a specific statement, that such names are exempt from the relevant protective laws and regulations and therefore free for general use. The publisher, the authors, and the editors are safe to assume that the advice and information in this book are believed to be true and accurate at the date of publication. Neither the publisher nor the authors or the editors give a warranty, express or implied, with respect to the material contained herein or for any errors or omissions that may have been made. -

Natural Language Processing Technique for Information Extraction and Analysis

International Journal of Research Studies in Computer Science and Engineering (IJRSCSE) Volume 2, Issue 8, August 2015, PP 32-40 ISSN 2349-4840 (Print) & ISSN 2349-4859 (Online) www.arcjournals.org Natural Language Processing Technique for Information Extraction and Analysis T. Sri Sravya1, T. Sudha2, M. Soumya Harika3 1 M.Tech (C.S.E) Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati. [email protected] 2 Head (I/C) of C.S.E & IT Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati. [email protected] 3 M. Tech C.S.E, Assistant Professor, Sri Padmavati Mahila Visvavidyalayam (Women’s University), School of Engineering and Technology, Tirupati. [email protected] Abstract: In the current internet era, there are a large number of systems and sensors which generate data continuously and inform users about their status and the status of devices and surroundings they monitor. Examples include web cameras at traffic intersections, key government installations etc., seismic activity measurement sensors, tsunami early warning systems and many others. A Natural Language Processing based activity, the current project is aimed at extracting entities from data collected from various sources such as social media, internet news articles and other websites and integrating this data into contextual information, providing visualization of this data on a map and further performing co-reference analysis to establish linkage amongst the entities. Keywords: Apache Nutch, Solr, crawling, indexing 1. INTRODUCTION In today’s harsh global business arena, the pace of events has increased rapidly, with technological innovations occurring at ever-increasing speed and considerably shorter life cycles. -

Elie Bursztein, Baptiste Gourdin, John Mitchell Stanford University & LSV-ENS Cachan

Talkback: Reclaiming the Blogsphere Elie Bursztein, Baptiste Gourdin, John Mitchell Stanford University & LSV-ENS Cachan 1 What is a blog ? • A Blog ("Web log") is a site, usually maintained by an individual with • Regular entries • Commentary • LinkBack • Entries displayed in reverse-chronological order. http://elie.im/blog Elie Bursztein, Baptiste Gourdin, John Mitchell TalkBack: reclaiming the blogosphere from spammer http://ly.tl/p21 Key Statistics • 184 Millions blogs • 73% of users read blogs • 50% post comments universalmccann Elie Bursztein, Baptiste Gourdin, John Mitchell TalkBack: reclaiming the blogosphere from spammer http://ly.tl/p21 Anatomy of a blog post Elie Bursztein, Baptiste Gourdin, John Mitchell TalkBack: reclaiming the blogosphere from spammer http://ly.tl/p21 Why blogs are special ? User Elie Bursztein, Baptiste Gourdin, John Mitchell TalkBack: reclaiming the blogosphere from spammer http://ly.tl/p21 Why blogs are special ? User Elie Bursztein, Baptiste Gourdin, John Mitchell TalkBack: reclaiming the blogosphere from spammer http://ly.tl/p21 What is a TrackBack ? Elie Bursztein, Baptiste Gourdin, John Mitchell TalkBack: reclaiming the blogosphere from spammer http://ly.tl/p21 Trackback Illustrated Little Timmy said to me... "What's Trackback, Daddy?" "Wow! Jimmy Lightning has written the best 1. post ever! It's so funny! And it's true! That's "Best Post Ever" why it's so good. I need to tell the world!" "Check it out world! I've "Jimmy written all about Jimmy 2. Lightning is Lightning's post on my Elie Bursztein, Baptiste Gourdin, John Mitchell swell"TalkBack: reclaiming the blogosphere from spammerweblog. My weblog's http://ly.tl/p21 called 'The Unbloggable Blogness of Blogging'. -

Insight MFR By

Manufacturers, Publishers and Suppliers by Product Category 11/6/2017 10/100 Hubs & Switches ASCEND COMMUNICATIONS CIS SECURE COMPUTING INC DIGIUM GEAR HEAD 1 TRIPPLITE ASUS Cisco Press D‐LINK SYSTEMS GEFEN 1VISION SOFTWARE ATEN TECHNOLOGY CISCO SYSTEMS DUALCOMM TECHNOLOGY, INC. GEIST 3COM ATLAS SOUND CLEAR CUBE DYCONN GEOVISION INC. 4XEM CORP. ATLONA CLEARSOUNDS DYNEX PRODUCTS GIGAFAST 8E6 TECHNOLOGIES ATTO TECHNOLOGY CNET TECHNOLOGY EATON GIGAMON SYSTEMS LLC AAXEON TECHNOLOGIES LLC. AUDIOCODES, INC. CODE GREEN NETWORKS E‐CORPORATEGIFTS.COM, INC. GLOBAL MARKETING ACCELL AUDIOVOX CODI INC EDGECORE GOLDENRAM ACCELLION AVAYA COMMAND COMMUNICATIONS EDITSHARE LLC GREAT BAY SOFTWARE INC. ACER AMERICA AVENVIEW CORP COMMUNICATION DEVICES INC. EMC GRIFFIN TECHNOLOGY ACTI CORPORATION AVOCENT COMNET ENDACE USA H3C Technology ADAPTEC AVOCENT‐EMERSON COMPELLENT ENGENIUS HALL RESEARCH ADC KENTROX AVTECH CORPORATION COMPREHENSIVE CABLE ENTERASYS NETWORKS HAVIS SHIELD ADC TELECOMMUNICATIONS AXIOM MEMORY COMPU‐CALL, INC EPIPHAN SYSTEMS HAWKING TECHNOLOGY ADDERTECHNOLOGY AXIS COMMUNICATIONS COMPUTER LAB EQUINOX SYSTEMS HERITAGE TRAVELWARE ADD‐ON COMPUTER PERIPHERALS AZIO CORPORATION COMPUTERLINKS ETHERNET DIRECT HEWLETT PACKARD ENTERPRISE ADDON STORE B & B ELECTRONICS COMTROL ETHERWAN HIKVISION DIGITAL TECHNOLOGY CO. LT ADESSO BELDEN CONNECTGEAR EVANS CONSOLES HITACHI ADTRAN BELKIN COMPONENTS CONNECTPRO EVGA.COM HITACHI DATA SYSTEMS ADVANTECH AUTOMATION CORP. BIDUL & CO CONSTANT TECHNOLOGIES INC Exablaze HOO TOO INC AEROHIVE NETWORKS BLACK BOX COOL GEAR EXACQ TECHNOLOGIES INC HP AJA VIDEO SYSTEMS BLACKMAGIC DESIGN USA CP TECHNOLOGIES EXFO INC HP INC ALCATEL BLADE NETWORK TECHNOLOGIES CPS EXTREME NETWORKS HUAWEI ALCATEL LUCENT BLONDER TONGUE LABORATORIES CREATIVE LABS EXTRON HUAWEI SYMANTEC TECHNOLOGIES ALLIED TELESIS BLUE COAT SYSTEMS CRESTRON ELECTRONICS F5 NETWORKS IBM ALLOY COMPUTER PRODUCTS LLC BOSCH SECURITY CTC UNION TECHNOLOGIES CO FELLOWES ICOMTECH INC ALTINEX, INC.