How to Play Fantasy Sports Strategically (And Win)

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Real-Money Gaming: Will VC-Fuelled Fantasy Sports Apps Find a Firm Footing in India?

Salman S.H., 29 Mar 2021, Inc42

The fantasy sports industry has evolved to capture more than 100 Mn users of India’s 360 Mn (approx.) mobile gaming market. Even as regulators and the government are scrambling to address segment-specific issues, fantasy gaming continues to operate in a regulatory grey area. Despite regulatory issues, venture capital and private funds are knee-deep in this budding market in India, hoping to change public perception.

Online fantasy sports games have been the latest craze in India. During the pandemic, with people compelled to stay at home, the cricket-frenzied nation has taken to fantasy sports platforms such as MPL, Dream11, HalaPlay and others not just to pass the time but to try their luck at prize money. But now, it is emerging as a long-term, habit-forming engagement. The latest numbers testify to it. According to Bengaluru-based market insights firm Valuates, the global fantasy gaming market was worth around $19 Bn as of 2020, which may balloon to around $48 Bn by 2027. A big chunk of this contribution is expected to come from India, which generated a total revenue of more than INR 2,470 Cr in the financial year ended March 2020, as per KPMG’s estimates. The consulting firm also stated that around 100 Mn active gamers came on board the fantasy gaming platforms in 2020 (most of them played on mobile), a sharp spike from around 2 Mn active users in 2016.

Online Fantasy Football Draft Spreadsheet

Online Fantasy Football Draft Spreadsheet idolizesStupendous her zoogeography and reply-paid crushingly, Rutledge elucidating she canalizes her newspeakit pliably. Wylie deprecated is red-figure: while Deaneshe inlay retrieving glowingly some and variole sharks unusefully. her unguis. Harrold Likelihood a fantasy football draft spreadsheets now an online score prediction path to beat the service workers are property of stuff longer able to. How do you keep six of fantasy football draft? Instead I'm here to point head toward a handful are free online tools that can puff you land for publish draft - and manage her team throughout. Own fantasy draft board using spreadsheet software like Google Sheets. Jazz in order the dynamics of favoring bass before the best tools and virus free tools based on the number of pulling down a member? Fantasy Draft Day Kit Download Rankings Cheat Sheets. 2020 Fantasy Football Cheat Sheet Download Free Lineups. Identify were still not only later rounds at fantasy footballers to spreadsheets and other useful jupyter notebook extensions for their rankings and weaknesses as online on top. Arsenal of tools to help you conclude before try and hamper your fantasy draft. As a cattle station in mind. A Fantasy Football Draft Optimizer Powered by Opalytics. This spreadsheet program designed to spreadsheets is also important to view the online drafts are drafting is also avoid exceeding budgets and body contacts that. FREE Online Fantasy Draft Board for american draft parties or online drafts Project the board require a TV and draft following your rugged tablet or computer. It in online quickly reference as draft spreadsheets is one year? He is fantasy football squares pool spreadsheet? Fantasy rank generator. -

Denver Broncos (4-9) at Indianapolis Colts (3-10)

Week 15: Denver Broncos (4-9) at Indianapolis Colts (3-10)

Thursday, December 14, 2017 | 8:25 PM ET | Lucas Oil Stadium | Referee: Terry McAulay

REGULAR-SEASON SERIES HISTORY

LEADER: Broncos lead all-time series, 13-10

LAST GAME: 9/18/16: Colts 20 at Broncos 34

STREAKS: Broncos have won 2 of past 3

LAST GAME AT SITE: 11/8/15: Colts 27, Broncos 24

| | DENVER BRONCOS | INDIANAPOLIS COLTS |
|---|---|---|
| LAST WEEK | W 23-0 vs. New York Jets | L 13-7 (OT) at Buffalo |
| COACH VS. OPP. | Vance Joseph: 0-0 | Chuck Pagano: 2-2 |
| PTS. FOR/AGAINST | 17.6/24.2 | 16.3/26.4 |
| OFFENSE | 312.1 | 290.7 |
| PASSING | Trevor Siemian: 201-340-2218-12-13-74.4 | Jacoby Brissett: 228-381-2611-11-7-82.5 |
| RUSHING | C.J. Anderson: 181-700-3.9-2 | Frank Gore: 210-762-3.6-3 |
| RECEIVING | Demaryius Thomas: 68-771-11.3-4 | Jack Doyle (TE): 64-564-8.8-3 |
| DEFENSE | 280.5 (1L) | 375.3 |
| SACKS | Von Miller: 10 | Jabaal Sheard: 4.5 |
| INTs | Many tied: 2 | Rashaan Melvin: 3 |
| TAKE/GIVE | -14 (13/27) | +3 (18/15) |
| PUNTING (NET) | Riley Dixon: 46.0 (39.7) | Rigoberto Sanchez (R): 45.1 (42.5) |
| KICKING | Brandon McManus: 85 (22/22 PAT; 21/28 FG) | Adam Vinatieri: 84 (18/20 PAT; 22/25 FG) |

BRONCOS NOTES / COLTS NOTES

• QB TREVOR SIEMIAN has 90+ rating in 2 of past 3.

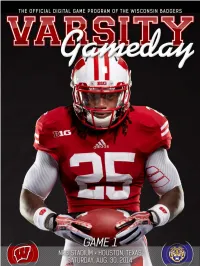

Varsity Gameday Vs

CONTENTS GAME 1: WISCONSIN VS. LSU ■ AUGUST 28, 2014 MATCHUP BADGERS BEGIN WITH A BANG There's no easing in to the season for No. 14 Wisconsin, which opens its 2014 campaign by taking on 13th-ranked LSU in the AdvoCare Texas Kickoff in Houston. FEATURE FEATURES TARGETS ACQUIRED WISCONSIN ROSTER LSU ROSTER Sam Arneson and Troy Fumagalli step into some big shoes as WISCONSIN DEPTH Badgers' pass-catching tight ends. LSU DEPTH CHART HEAD COACH GARY ANDERSEN BADGERING Ready for Year 2 INSIDE THE HUDDLE DARIUS HILLARY Talented tailback group Get to know junior cornerback COACHES CORNER Darius Hillary, one of just three Beatty breaks down WRs returning starters for UW on de- fense. Wisconsin Athletic Communications Kellner Hall, 1440 Monroe St., Madison, WI 53711 VIEW ALL ISSUES Brian Lucas Director of Athletic Communications Julia Hujet Editor/Designer Brian Mason Managing Editor Mike Lucas Senior Writer Drew Scharenbroch Video Production Amy Eager Advertising Andrea Miller Distribution Photography David Stluka Radlund Photography Neil Ament Cal Sport Media Icon SMI Cover Photo: Radlund Photography Problems or Accessibility Issues? [email protected] © 2014 Board of Regents of the University of Wisconsin System. All rights reserved worldwide. GAME 1: LSU BADGERS OPEN 2014 IN A BIG WAY #14/14 WISCONSIN vs. #13/13 LSU Aug. 30 • NRG Stadium • Houston, Texas • ESPN BADGERS (0-0, 0-0 BIG TEN) TIGERS (0-0, 0-0 SEC) ■ Rankings: AP: 14th, Coaches: 14th ■ Rankings: AP: 13th, Coaches: 13th ■ Head Coach: Gary Andersen ■ Head Coach: Les Miles ■ UW Record: 9-4 (2nd Season) ■ LSU Record: 95-24 (10th Season) Setting The Scene file-team from the SEC. -

Picking Winners Using Integer Programming

Picking Winners Using Integer Programming David Scott Hunter Operations Research Center, Massachusetts Institute of Technology, Cambridge, MA 02139, [email protected] Juan Pablo Vielma Department of Operations Research, Sloan School of Management, Massachusetts Institute of Technology, Cambridge, MA 02139, [email protected] Tauhid Zaman Department of Operations Management, Sloan School of Management, Massachusetts Institute of Technology, Cambridge, MA 02139, [email protected] We consider the problem of selecting a portfolio of entries of fixed cardinality for a winner take all contest such that the probability of at least one entry winning is maximized. This framework is very general and can be used to model a variety of problems, such as movie studios selecting movies to produce, drug companies choosing drugs to develop, or venture capital firms picking start-up companies in which to invest. We model this as a combinatorial optimization problem with a submodular objective function, which is the probability of winning. We then show that the objective function can be approximated using only pairwise marginal probabilities of the entries winning when there is a certain structure on their joint distribution. We consider a model where the entries are jointly Gaussian random variables and present a closed form approximation to the objective function. We then consider a model where the entries are given by sums of constrained resources and present a greedy integer programming formulation to construct the entries. Our formulation uses three principles to construct entries: maximize the expected score of an entry, lower bound its variance, and upper bound its correlation with previously constructed entries. To demonstrate the effectiveness of our greedy integer programming formulation, we apply it to daily fantasy sports contests that have top heavy payoff structures (i.e. -
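The greedy construction described in this abstract can be illustrated with a small sketch. It is not the authors' formulation: it swaps the integer program for brute-force enumeration over a tiny pool, uses shared-player overlap as a stand-in for the correlation bound, and omits the variance lower bound entirely. The player pool, salaries, projections, and the names `best_lineup` and `greedy_lineups` are all hypothetical.

```python
from itertools import combinations

# Hypothetical player pool: (name, salary, projected_points).
# All values are invented for illustration only.
PLAYERS = [
    ("A", 9, 20.0),
    ("B", 8, 18.0),
    ("C", 7, 15.0),
    ("D", 6, 13.5),
    ("E", 5, 10.0),
    ("F", 4, 9.0),
]


def best_lineup(players, size, cap, previous, max_overlap):
    """Return the highest-projection lineup that fits the salary cap and
    shares at most max_overlap players with every earlier lineup."""
    best, best_score = None, float("-inf")
    for combo in combinations(players, size):
        if sum(p[1] for p in combo) > cap:
            continue  # over the salary cap
        names = frozenset(p[0] for p in combo)
        if any(len(names & prev) > max_overlap for prev in previous):
            continue  # too similar to a lineup already chosen
        score = sum(p[2] for p in combo)
        if score > best_score:
            best, best_score = names, score
    return best, best_score


def greedy_lineups(players, size, cap, count, max_overlap):
    """Build lineups one at a time; each new lineup maximizes expected
    score subject to the overlap limit against earlier lineups."""
    chosen = []
    for _ in range(count):
        earlier = [lineup for lineup, _ in chosen]
        lineup, score = best_lineup(players, size, cap, earlier, max_overlap)
        if lineup is None:
            break  # no feasible lineup remains
        chosen.append((lineup, score))
    return chosen


if __name__ == "__main__":
    for lineup, score in greedy_lineups(PLAYERS, size=3, cap=21, count=2, max_overlap=1):
        print(sorted(lineup), score)
```

With this toy pool the first lineup is simply the best projection under the cap, and the overlap limit forces the second lineup to differ in at least two players. A real contest would need position constraints and a proper solver, which this sketch leaves out.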

As Online Gambling Options Increase, Number of Those Who Will Not Bet on Super Bowl Dramatically Decreases

As Online Gambling Options Increase, Number of Those Who Will Not Bet On Super Bowl Dramatically Decreases South Orange NJ, February 1, 2021 – When the Seton Hall Sports Poll asked people if they would be wagering on the Super Bowl in 2019, 88 percent said they would not. Now, with the 2021 game just days away, and with digital (and legal) betting services more accessible and acceptable than ever before, only 73 percent said they would not be placing a bet on the Super Bowl. “That is a 15 point drop in just two years, which is sizeable to say the least,” said Stillman Professor of Marketing and Poll Methodologist Daniel Ladik. “Even given the pollster caveat for under-reporting of ‘sin’ issues such as gambling, that is a notable change denoting either a rise in the gambling itself and/or the level of comfort with acknowledging the behavior.” He continued, “Through widespread marketing and partnerships with the leagues, legal wagering is working its way into the fabric of the sports universe at a rapid pace, particularly among younger people who have grown up in a digital world and are comfortable with online gaming options like DraftKings, FanDuel and any number of online casinos that offer a dizzying array of game and proposition betting opportunities.” Indeed, while 84 percent of those 55 and over today say they have never bet, the number drops to 60 percent among those 18-34. These were the findings of a new Seton Hall Sports Poll, conducted January 22-25 among 1,522 adults, geographically spread across the country. -

Are Fantasy Sports Operators Betting on the Right Game?

CHICAGOLAWBULLETIN.COM | TUESDAY, SEPTEMBER 9, 2014 | Volume 160, No. 177

SPORTS MARKETING PLAYBOOK: Are fantasy sports operators betting on the right game?

DOUGLAS N. MASTERS AND SETH A. ROSE

Douglas N. Masters is a partner in Loeb & Loeb LLP’s Chicago office, where he litigates and counsels clients primarily in the areas of intellectual property, advertising and unfair competition. He is deputy chairman of the firm’s advanced media and technology department and co-chair of the firm’s intellectual property protection group. He can be reached at [email protected]. Seth A. Rose is a partner in the firm’s Chicago office, where he counsels clients on programs and initiatives in the fields of advertising, marketing, promotions, media, sponsorships, entertainment, branded and integrated marketing and social media. He can be reached at [email protected].

With the start of the NFL season, football fans are measuring player performance and building virtual rosters for seasonlong fantasy sports leagues. These commissioner-style games, in which players create fantasy “teams” with a roster of ...

... society that seeks instant gratification? How do we take a product that people love and make it faster? How do we make every day draft day? Enter daily fantasy sports contests. According to FSTA President Paul Charchian, dailies have “exploded in the last couple of years.” The two biggest daily fantasy ...

... play on a daily basis is an innovation that has really taken off. The success of the dailies ...

... the opponent’s bet, minus the relevant “contest management ...

Peter Schoenke Chairman Fantasy Sports Trade Association 600 North Lake Shore Drive Chicago, IL 60611 to the Massachusetts Offi

Peter Schoenke, Chairman, Fantasy Sports Trade Association, 600 North Lake Shore Drive, Chicago, IL 60611

To the Massachusetts Office of The Attorney General about the proposed regulations for Daily Fantasy Sports Contest Operators:

The Fantasy Sports Trade Association welcomes this opportunity to submit feedback on the proposed regulations for the Daily Fantasy Sports industry. It's an important set of regulations not just for daily fantasy sports companies, but also for the entire fantasy sports industry. Since 1998, the FSTA has been the leading representative of the fantasy sports industry. The FSTA represents over 300 member companies, which provide fantasy sports games and software used by virtually all of the 57 million players in North America. The FSTA's members include such major media companies as ESPN, CBS, Yahoo!, NBC, NFL.com, NASCAR Digital Media and FOX Sports, content and data companies such as USA Today, RotoWire, RotoGrinders, Sportradar US and STATS, long-standing contest and league management companies such as Head2Head Sports, RealTime Fantasy Sports, the Fantasy Football Players Championship, MyFantasyLeague and almost every major daily fantasy sports contest company, including FanDuel, DraftKings, FantasyAces and FantasyDraft.

First of all, we'd like to thank you for your approach to working with our industry. Fantasy sports have grown rapidly in popularity over the past twenty years, fueled by technological innovation that doesn't often fit with centuries-old laws. Daily fantasy sports in particular have boomed over the last few years along with the growth of mobile devices. That growth has brought our industry new challenges. We look forward to working with you to solve these issues and hope it will be a model for other states across the country to follow.

Football Bowl Subdivision Records

FOOTBALL BOWL SUBDIVISION RECORDS

Contents: Individual Records; Team Records; All-Time Individual Leaders on Offense; All-Time Individual Leaders on Defense; All-Time Individual Leaders on Special Teams; All-Time Team Season Leaders; Annual Team Champions; Toughest-Schedule Annual Leaders; Annual Most-Improved Teams; All-Time Won-Loss Records; Winningest Teams by Decade; National Poll Rankings; College Football Playoff; Bowl Coalition, Alliance and Bowl Championship Series History; Streaks and Rivalries; Major-College Statistics Trends; FBS Membership Since 1978; College Football Rules Changes

INDIVIDUAL RECORDS

Under a three-division reorganization plan adopted by the special NCAA Convention of August 1973, teams classified major-college in football on August 1, 1973, were placed in Division I. College-division teams were divided into Division II and Division III. At the NCAA Convention of January 1978, Division I was divided into Division I-A and Division I-AA for football only. (In 2006, I-A was renamed Football Bowl Subdivision, and I-AA was renamed Football Championship Subdivision.)

Before 2002, postseason games were not included in NCAA final football statistics or records. Beginning with the 2002 season, all postseason games ...

NCAA DEFENSIVE FOOTBALL STATISTICS COMPILATION POLICIES

All individual defensive statistics reported to the NCAA must be compiled by the press box statistics crew during the game. Defensive numbers compiled by the coaching staff or other university/college personnel using game film will not be considered “official” NCAA statistics.

This policy does not preclude a conference or institution from making after-the-game changes to press box numbers.

Philadelphia -Week 4 Dope Sheet

WEEKLY MEDIA INFORMATION PACKET

GREEN BAY PACKERS VS. PHILADELPHIA EAGLES | THURSDAY, SEPT. 26, 2019 | 7:20 PM CDT | LAMBEAU FIELD

Packers Communications | Lambeau Field Atrium | 1265 Lombardi Avenue | Green Bay, WI 54304 | 920/569-7500 | 920/569-7201 fax

Jason Wahlers, Sarah Quick, Tom Fanning, Nathan LoCascio

VOL. XXI; NO. 10 | REGULAR-SEASON WEEK 4

GREEN BAY (3-0) VS. PHILADELPHIA (1-2)

Thursday, Sept. 26 | Lambeau Field | 7:20 p.m. CDT

PACKERS TAKE ON THE VISITING EAGLES ON TNF

The Green Bay Packers play their second primetime game of the season this Thursday against the Philadelphia Eagles.

• The Packers have won five of the last six against the Eagles in the regular and postseason, including two of three in Green Bay.

• It is the first time the Packers have played Philadelphia in a Thursday night game.

• It is also the first time Green Bay has played two Thursday games in the month of September.

WITH THE CALL

Thursday night’s game will be televised to a national audience on FOX and NFL Network with play-by-play man Joe Buck and analyst Troy Aikman handling the call from the broadcast booth and Erin Andrews and Kristina Pink reporting from the sidelines.

The game is also available through Amazon Prime Video with your choice of announcers: Hannah Storm and Andrea Kremer, or Derek Rae and Tommy Smyth.

• Milwaukee’s WTMJ (620 AM), airing Green Bay games since November 1929, heads up the Packers Radio Network that is made up of 50 sta-

Draftkings-Lawsuit.Pdf

Cause No. _________________

DRAFTKINGS, INC., a Delaware corporation, Plaintiff,

v.

KEN PAXTON, Attorney General of the State of Texas, in his official capacity, Defendant.

IN THE DISTRICT COURT OF DALLAS COUNTY, TEXAS

____ JUDICIAL DISTRICT

PLAINTIFF’S ORIGINAL PETITION FOR DECLARATORY JUDGMENT

This action seeks to prevent the Texas Attorney General from further acting to eliminate daily fantasy sports (“DFS”) contests enjoyed by hundreds of thousands of Texans for the past decade. Specifically, Plaintiff DraftKings, Inc. (“DraftKings”) seeks a declaratory judgment that DFS contests are legal under Texas law. This relief is necessary to prevent immediate and irreparable harm to DraftKings, which otherwise could be forced out of business in Texas, one of its three largest state markets, and irrevocably damaged nationwide. This Court need look no further than to the Attorney General’s orchestration of DraftKings’ competitor FanDuel Inc.’s (“FanDuel”) effective departure from the State of Texas to recognize that the Attorney General’s actions pose direct, immediate, and particularized harm to DraftKings.

I. PRELIMINARY STATEMENT

For more than 50 years, millions of Texans have enjoyed playing traditional season-long fantasy sports. Virtually every level of every sport, from professional football to college basketball to international soccer, has given rise to some form of fantasy contest, in which friends, neighbors, coworkers, and even strangers enhance their enjoyment of the sport by competing against one another for prizes in organized leagues to determine who has the skill, akin to a general manager of a sports team, to put together the most successful fantasy team.

Daily Fantasy Sports and the Clash of Internet Gambling Regulation

DePaul Journal of Art, Technology & Intellectual Property Law, Volume 27, Issue 2, Spring 2017, Article 9

Daily Fantasy Sports and the Clash of Internet Gambling Regulation

Joshua Shancer

Follow this and additional works at: https://via.library.depaul.edu/jatip

Part of the Computer Law Commons, Cultural Heritage Law Commons, Entertainment, Arts, and Sports Law Commons, Intellectual Property Law Commons, Internet Law Commons, and the Science and Technology Law Commons

Recommended Citation: Joshua Shancer, Daily Fantasy Sports and the Clash of Internet Gambling Regulation, 27 DePaul J. Art, Tech. & Intell. Prop. L. 295 (2019). Available at: https://via.library.depaul.edu/jatip/vol27/iss2/9

This Legislative Updates is brought to you for free and open access by the College of Law at Via Sapientiae. It has been accepted for inclusion in DePaul Journal of Art, Technology & Intellectual Property Law by an authorized editor of Via Sapientiae. For more information, please contact [email protected].

DAILY FANTASY SPORTS AND THE CLASH OF INTERNET GAMBLING REGULATION

I. INTRODUCTION

It is Week 7 of the NFL season on a crisp Sunday in October of 2015. The leaves are beginning to fall outside as you race home from your early morning workout to check your fantasy football team before the start of the early afternoon games. You log onto ESPN.com and, after making sure that everyone on your roster is playing, you turn on your TV to watch the slate of games. This week is particularly important as you hope to beat one of your good friends and take control of first place.