Improving Website Hyperlink Structure Using Server Logs

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Wwe Universe Hack Game Download WWE 12 PC Download

wwe universe hack game download WWE 12 PC Download. WWE 12 PC Download Game is a direct link for windows and torren t GOG. Ocean of games WWE 12 igg games com is an awesome game free to play.Play this awesome game for free and share this website with your friends. Overview of WWE 12 PC Game 2017. Are you a game lover? If yes the you will love this awesome game.This game is also available for Android , iPhone , XBOX , PS4 .We will provide you a highly compressed link for this game so you can download easily .It’s a virus free game feel free to download.You can download this game from Mega,Google Drive or Torrent.If you don’t know how to download this awesome game from Mega,Don’t worry we have made a tutorial for you .If you If you don’t know how to download From Mega Click here. WWE 12 catch framework from the past amusements has been evacuated. Characters now perform distinctive moves in light of their rival’s present physical state. Players now have a window of chance to assault while still in a brought down state and can likewise interfere with moves and Royal Rumble disposals with assaults. Additionally, the sticking meter from the past diversions has been improved to make it more hard to kick out as a wrestler takes more harm. The game’sartificial knowledge has additionally been changed in accordance with keep players from abusing the same move. Moreover, the capacity to store completing moves has returned. -

Professional Wrestling, Sports Entertainment and the Liminal Experience in American Culture

PROFESSIONAL WRESTLING, SPORTS ENTERTAINMENT AND THE LIMINAL EXPERIENCE IN AMERICAN CULTURE By AARON D, FEIGENBAUM A DISSERTATION PRESENTED TO THE GRADUATE SCHOOL OF THE UNIVERSITY OF FLORIDA IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY UNIVERSITY OF FLORIDA 2000 Copyright 2000 by Aaron D. Feigenbaum ACKNOWLEDGMENTS There are many people who have helped me along the way, and I would like to express my appreciation to all of them. I would like to begin by thanking the members of my committee - Dr. Heather Gibson, Dr. Amitava Kumar, Dr. Norman Market, and Dr. Anthony Oliver-Smith - for all their help. I especially would like to thank my Chair, Dr. John Moore, for encouraging me to pursue my chosen field of study, guiding me in the right direction, and providing invaluable advice and encouragement. Others at the University of Florida who helped me in a variety of ways include Heather Hall, Jocelyn Shell, Jim Kunetz, and Farshid Safi. I would also like to thank Dr. Winnie Cooke and all my friends from the Teaching Center and Athletic Association for putting up with me the past few years. From the World Wrestling Federation, I would like to thank Vince McMahon, Jr., and Jim Byrne for taking the time to answer my questions and allowing me access to the World Wrestling Federation. A very special thanks goes out to Laura Bryson who provided so much help in many ways. I would like to thank Ed Garea and Paul MacArthur for answering my questions on both the history of professional wrestling and the current sports entertainment product. -

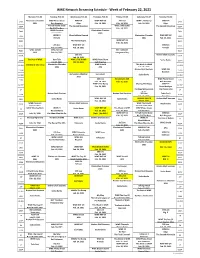

WWE Network Streaming Schedule - Week of February 22, 2021

WWE Network Streaming Schedule - Week of February 22, 2021 Monday, Feb 22 Tuesday, Feb 23 Wednesday, Feb 24 Thursday, Feb 25 Friday, Feb 26 Saturday, Feb 27 Sunday, Feb 28 Elimination Chamber WWE Photo Shoot WWE 24 WWE NXT UK 205 Live WWE's The Bump WWE 24 6AM 6AM 2021 Ron Simmons Edge Feb. 18, 2021 Feb. 19, 2021 Feb. 24, 2021 Edge R-Truth Game Show WWE's The Bump 6:30A The Second Mountain The Second Mountain 6:30A Big E & The Boss Feb. 24, 2021 WWE Chronicle Elimination Chamber 7AM 7AM Bianca Belair 2021 WWE 24 WrestleMania Rewind Elimination Chamber WWE NXT UK 7:30A 7:30A R-Truth 2021 Feb. 25, 2021 WWE NXT UK 8AM The Mania Begins 8AM Feb. 25, 2021 205 Live WWE NXT UK WWE 24 8:30A 8:30A Feb. 19, 2021 Feb. 18, 2021 R-Truth WWE Untold WWE NXT UK NXT TakeOver 9AM 9AM APA Feb. 18, 2021 Vengeance Day 205 Live Broken Skull Sessions 9:30A 9:30A Feb. 19, 2021 The Best of WWE Raw Talk WWE's THE BUMP WWE Photo Shoot 10AM Sasha Banks 10AM Feb 22, 2021 FEB 24, 2021 Kofi Kingston Elimination Chamber WWE Untold This Week in WWE 10:30A The Best of John Cena 10:30A 2021 APA Feb. 25, 2021 Broken Skull Sessions WWE 365 11AM 11AM Ricochet Elimination Chamber Liv Forever 11:30A Sasha Banks 11:30A 2021 205 Live SmackDown 549 WWE Photo Shoot 12N 12N Feb. 19, 2021 Feb. 26, 2010 Kofi Kingston WWE's The Bump Chasing The Magic WWE 24 12:30P 12:30P Feb. -

The Oral Poetics of Professional Wrestling, Or Laying the Smackdown on Homer

Oral Tradition, 29/1 (201X): 127-148 The Oral Poetics of Professional Wrestling, or Laying the Smackdown on Homer William Duffy Since its development in the first half of the twentieth century, Milman Parry and Albert Lord’s theory of “composition in performance” has been central to the study of oral poetry (J. M. Foley 1998:ix-x). This theory and others based on it have been used in the analysis of poetic traditions like those of the West African griots, the Viking skalds, and, most famously, the ancient Greek epics.1 However, scholars have rarely applied Parry-Lord theory to material other than oral poetry, with the notable exceptions of musical forms like jazz, African drumming, and freestyle rap.2 Parry and Lord themselves, on the other hand, referred to the works they catalogued as performances, making it possible to use their ideas beyond poetry and music. The usefulness of Parry-Lord theory in studies of different poetic traditions tempted me to view other genres of performance from this perspective. In this paper I offer up one such genre for analysis —professional wrestling—and show that interpreting the tropes of wrestling through the lens of composition in performance provides information that, in return, can help with analysis of materials more commonly addressed by this theory. Before beginning this effort, it will be useful to identify the qualities that a work must possess to be considered a “composition in performance,” in order to see if professional wrestling qualifies. The first, and probably most important and straightforward, criterion is that, as Lord (1960:13) says, “the moment of composition is the performance.” This disqualifies art forms like theater and ballet, works typically planned in advance and containing words and/or actions that must be performed at precise times and following a precise order. -

Pro Wrestling Over -Sell

TTHHEE PPRROO WWRREESSTTLLIINNGG OOVVEERR--SSEELLLL™ a newsletter for those who want more Issue #1 Monthly Pro Wrestling Editorials & Analysis April 2011 For the 27th time... An in-depth look at WrestleMania XXVII Monthly Top of the card Underscore It's that time of year when we anything is responsible for getting Eddie Edwards captures ROH World begin to talk about the forthcoming WrestleMania past one million buys, WrestleMania, an event that is never it's going to be a combination of Tile in a shocker─ the story that makes the short of talking points. We speculate things. Maybe it'll be the appearances title change significant where it will rank on a long, storied list of stars from the Attitude Era of of highs and lows. We wonder what will wrestling mixed in with the newly Shocking, unexpected surprises seem happen on the show itself and gossip established stars that generate the to come few and far between, especially in the about our own ideas and theories. The need to see the pay-per-view. Perhaps year 2011. One of those moments happened on road to WreslteMania 27 has been a that selling point is the man that lit March 19 in the Manhattan Center of New York bumpy one filled with both anticipation the WrestleMania fire, The Rock. City. Eddie Edwards became the fifteenth Ring and discontent, elements that make the ─ So what match should go on of Honor World Champion after defeating April 3 spectacular in Atlanta one of the last? Oddly enough, that's a question Roderick Strong in what was described as an more newsworthy stories of the year. -

British Bulldogs, Behind SIGNATURE MOVE: F5 Rolled Into One Mass of Humanity

MEMBERS: David Heath (formerly known as Gangrel) BRODUS THE BROOD Edge & Christian, Matt & Jeff Hardy B BRITISH CLAY In 1998, a mystical force appeared in World Wrestling B HT: 6’7” WT: 375 lbs. Entertainment. Led by the David Heath, known in FROM: Planet Funk WWE as Gangrel, Edge & Christian BULLDOGS SIGNATURE MOVE: What the Funk? often entered into WWE events rising from underground surrounded by a circle of ames. They 1960 MEMBERS: Davey Boy Smith, Dynamite Kid As the only living, breathing, rompin’, crept to the ring as their leader sipped blood from his - COMBINED WT: 471 lbs. FROM: England stompin’, Funkasaurus in captivity, chalice and spit it out at the crowd. They often Brodus Clay brings a dangerous participated in bizarre rituals, intimidating and combination of domination and funk -69 frightening the weak. 2010 TITLE HISTORY with him each time he enters the ring. WORLD TAG TEAM Defeated Brutus Beefcake & Greg With the beautiful Naomi and Cameron Opponents were viewed as enemies from another CHAMPIONS Valentine on April 7, 1986 dancing at the big man’s side, it’s nearly world and often victims to their bloodbaths, which impossible not to smile when Clay occurred when the lights in the arena went out and a ▲ ▲ Behind the perfect combination of speed and power, the British makes his way to the ring. red light appeared. When the light came back the Bulldogs became one of the most popular tag teams of their time. victim was laying in the ring covered in blood. In early Clay’s opponents, however, have very Originally competing in promotions throughout Canada and Japan, 1999, they joined Undertaker’s Ministry of Darkness. -

WWE: Redefining Orking-Classw Womanhood Through Commodified Eminismf

Wright State University CORE Scholar The University Honors Program Academic Affairs 4-24-2018 WWE: Redefining orking-ClassW Womanhood through Commodified eminismF Janice M. Sikon Wright State University - Main Campus Follow this and additional works at: https://corescholar.libraries.wright.edu/honors Part of the Women's Studies Commons Repository Citation Sikon, J. M. (2018). WWE: Redefining orking-ClassW Womanhood through Commodified Feminism. Wright State University, Dayton, Ohio. This Thesis is brought to you for free and open access by the Academic Affairs at CORE Scholar. It has been accepted for inclusion in The University Honors Program by an authorized administrator of CORE Scholar. For more information, please contact [email protected]. WWE: Redefining Working-Class Womanhood 1 WWE: Redefining Working-Class Womanhood through Commodified Feminism Janice M. Sikon Wright State University WWE: Redefining Working-Class Womanhood 2 Introduction World Wrestling Entertainment (WWE) is the largest professional wrestling promotion in the world (Bajaj & Banerjee, 2016). Their programs air in 20 languages in over 180 countries, and in the United States approximately 11 million people watch their programs each week (“FAQ,” n.d.). These programs include six hours of televised weekly events and 16 annual pay- per-view events (“WWE Reports,” 2017). In the first quarter of 2017 the company grossed $188.4 million (“WWE Reports,” 2017). They have close ties with the current presidential administration, as Small Business Administrator Linda McMahon was the CEO of the company from 1980 to 2009 (Reuters, 2009) and President Trump has made several appearances on WWE programming in the past (“Donald Trump,” n.d.). -

Lawler WWE 104 Akira Tozawa Raw 105 Alicia

BASE BASE CARDS 101 Tyler Bate WWE 102 Brie Bella WWE 103 Jerry "The King" Lawler WWE 104 Akira Tozawa Raw 105 Alicia Fox Raw 106 Apollo Crews Raw 107 Ariya Daivari Raw 108 Harley Race WWE Legend 109 Big Show Raw 110 Bo Dallas Raw 111 Braun Strowman Raw 112 Bray Wyatt Raw 113 Cesaro Raw 114 Charly Caruso Raw 115 Curt Hawkins Raw 116 Curtis Axel Raw 117 Dana Brooke Raw 118 Darren Young Raw 119 Dean Ambrose Raw 120 Emma Raw 121 Jeff Hardy Raw 122 Goldust Raw 123 Heath Slater Raw 124 JoJo Raw 125 Kalisto Raw 126 Kurt Angle Raw 127 Mark Henry Raw 128 Matt Hardy Raw 129 Mickie James Raw 130 Neville Raw 131 R-Truth Raw 132 Rhyno Raw 133 Roman Reigns Raw 134 Sasha Banks Raw 135 Seth Rollins Raw 136 Sheamus Raw 137 Summer Rae Raw 138 Aiden English SmackDown LIVE 139 Baron Corbin SmackDown LIVE 140 Becky Lynch SmackDown LIVE 141 Charlotte Flair SmackDown LIVE 142 Daniel Bryan SmackDown LIVE 143 Dolph Ziggler SmackDown LIVE 144 Epico SmackDown LIVE 145 Erick Rowan SmackDown LIVE 146 Fandango SmackDown LIVE 147 James Ellsworth SmackDown LIVE 148 Jey Uso SmackDown LIVE 149 Jimmy Uso SmackDown LIVE 150 Jinder Mahal SmackDown LIVE 151 Kevin Owens SmackDown LIVE 152 Konnor SmackDown LIVE 153 Lana SmackDown LIVE 154 Naomi SmackDown LIVE 155 Natalya SmackDown LIVE 156 Nikki Bella SmackDown LIVE 157 Primo SmackDown LIVE 158 Rusev SmackDown LIVE 159 Sami Zayn SmackDown LIVE 160 Shinsuke Nakamura SmackDown LIVE 161 Sin Cara SmackDown LIVE 162 Tyler Breeze SmackDown LIVE 163 Viktor SmackDown LIVE 164 Akam NXT 165 Aleister Black NXT 166 Andrade "Cien" Almas -

Royal Rumble Drinking Game

2018 R0.1 Royal Rumble Drinking Game OFFICIAL RULEBOOK [WIP] ANDREW ABRAMSON & JACOB HAJDU RRDG RULES COMMITTEE | 2018_05_29 Contents 1. Objectives of the Game......................................................................................................................... 3 1.1. General .......................................................................................................................................... 3 2. The Playing Field ................................................................................................................................... 3 2.1. Layout of the Field ........................................................................................................................ 3 2.2. The Table ....................................................................................................................................... 3 3. Equipment and Uniforms ...................................................................................................................... 3 3.1. Drinking Equipment ...................................................................................................................... 3 3.2. Random Drawing Equipment ........................................................................................................ 4 3.3. Clothing ......................................................................................................................................... 4 4. Game Preliminaries .............................................................................................................................. -

How Women Fans of World Wrestling Entertainment Perceive Women Wrestlers Melissa Jacobs Clemson University, [email protected]

Clemson University TigerPrints All Theses Theses 5-2017 "They've Come to Draw Blood" - How Women Fans of World Wrestling Entertainment Perceive Women Wrestlers Melissa Jacobs Clemson University, [email protected] Follow this and additional works at: https://tigerprints.clemson.edu/all_theses Recommended Citation Jacobs, Melissa, ""They've Come to Draw Blood" - How Women Fans of World Wrestling Entertainment Perceive Women Wrestlers" (2017). All Theses. 2638. https://tigerprints.clemson.edu/all_theses/2638 This Thesis is brought to you for free and open access by the Theses at TigerPrints. It has been accepted for inclusion in All Theses by an authorized administrator of TigerPrints. For more information, please contact [email protected]. “THEY’VE COME TO DRAW BLOOD” – HOW WOMEN FANS OF WORLD WRESTLING ENTERTAINMENT PERCEIVE WOMEN WRESTLERS A Thesis Presented to the Graduate School of Clemson University In Partial Fulfillment of the Requirements for the Degree Master of Arts Communication, Technology, and Society by Melissa Jacobs May 2017 Accepted by: Dr. D. Travers Scott, Committee Chair Dr. Erin Ash Dr. Darren Linvill ABSTRACT For a long time, professional wrestling has existed on the outskirts of society, with the idea that it was just for college-aged men. With the rise of the popularity of the World Wrestling Entertainment promotion, professional wrestling entered the mainstream. Celebrities often appear at wrestling shows, and the WWE often hires mainstream musical artists to perform at their biggest shows, WrestleMania and Summer Slam. Despite this still-growing popularity, there still exists a gap between men’s wrestling and women’s wrestling. Often the women aren’t allowed long match times, and for the longest time sometimes weren’t even on the main shows. -

Silverdraft Steps Into the Ring with WWE

CASE STUDY | WWE Silverdraft Steps Into the Ring with WWE WrestleMania 33. Camping World Stadium, Orlando, Fl, 2017 What happens when World-famous wrestlers dance with the Devil? A no holds barred graphics extravaganza! Q & A with WWE Graphics Guru, Perry Harovas World Wrestling Entertainment (WWE) is more than meets the eye; what lies behind the spandex and showmanship? Harovas: For 30 years, WWE has been the premier wresting entertainment company with programming in more than 650 million homes worldwide, 180 countries and in 20 different languages. From a social media perspective, it doesn’t get much bigger than WWE. We have 800+ million followers and WWE is the #1 sports channel on YouTube with over 12 billion views in the last 12 months. We have a 24-hour network and we originated pay per view. Our main event is WrestleMania—one of the top 5 most valuable sports brands in the world, behind only the Super Bowl, the Olympics, and the FIFA World Cup (Forbes). We also do Summer Slam, Royal Rumble, feature films (with and without wrestlers), animated shows, reality shows, video games, live shows, visual effects and custom graphics. In addition, we produce merchandise, toys, board games, and the list goes on… That’s sounds like some heavyweight content generation? Harovas: You could almost compare it to Disney, in terms of our voracious appetite for creating content. Between having our own 24-hour network, multiple brand properties, promos, motion graphics and the production of feature films—it’s truly a massive amount of content. The number of cast, crew, production and staff for RAW (one of the longest running live shows that airs every week of the year), is huge. -

Wwe Royal Rumble Scorecard

WWE ROYAL RUMBLE SCORECARD Presented By: Ring-Rap.com // Facebook.com/RingRap // Twitter: @RingRap MATCH WINNER (5 pts) EXTRAS (2 pts each) TOTAL Preshow Match for the WWE Raw Tag Team Title Any in-fighting? Y N Cesaro & Sheamus (c) vs. Luke Gallows & Karl Anderson Referee shenanigans? (Fight/Disagreement) Y N (Two Referees Assigned to the Match) Preshow Match How many "Women's Revolution" references? Sasha Banks vs. Nia Jax DQ/Pinfall/Submission? Preshow Match Who makes the pin? Becky Lynch & Nikki Bella & Naomi vs. Alexa Bliss & Mickie James & Natalya Who takes the pin? Singles Match for the WWE Cruiserweight Title Will Austin Aries bring a banana to commentary? Y N Rich Swann (c) vs. Neville How many "King of Cruiserweights" references? Singles Match for the WWE Raw Women's Championship DQ/Pinfall/Submission? Charlotte (c) vs. Bayley Will a little girl be on camera crying during this match? Y N Singles Match for the WWE Championship Clean finish? Y N Double turn? Y N AJ Styles (c) vs. John Cena How many finishers are kicked out of? No Disqualification Match for the WWE Universal Championship Is there a brawl through the crowd? Y N Kevin Owens (c) vs. Roman Reigns Does Jericho escape the cage? Y N (Chris Jericho will be suspended in a shark cage above the ring) How many "Sexy Pinata" references? Who are the #1 and #2 entrants? The Royal Rumble Match (Any order, 2 pts each) Who is entrant #10? 30 wrestlers enter the match at predetermined intervals. To be eliminated, Who is entrant #29? one must be thrown over the top rope and both feet must touch the floor.