12 Understanding Javascript Event-Based Interactions With

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Mozilla Firefox Lower Version Free Download Firefox Release Notes

Firefox Release Notes. Release Notes tell you what’s new in Firefox. As always, we welcome your feedback. You can also file a bug in Bugzilla or see the system requirements of this release. December 1, 2014. We'd also like to extend a special thank you to all of the new Mozillians who contributed to this release of Firefox! Default search engine changed to Yandex for Belarusian, Kazakh, and Russian locales. Improved search bar (en-US only). Firefox Hello real-time communication client. Easily switch themes/personas directly in the Customizing mode. Wikipedia search now uses HTTPS for secure searching (en-US only). Recover from a locked Firefox process in the "Firefox is already running" dialog on Windows. Fixed: CSS transitions start correctly when started at the same time as changes to display, position, overflow, and similar properties. Fullscreen video on Mac disables display sleep, and dimming, during playback. Various Yosemite visual fixes (see bug 1040250). Changed: Proprietary window.crypto properties/functions re-enabled (to be removed in Firefox 35). Firefox signed by Apple OS X version 2 signature. Developer: WebIDE: Create, edit, and test a new Web application from your browser. Highlight all nodes that match a given selector in the Style Editor and the Inspector's Rules panel.

Brief Intro to Firebug Firebug at a Glance

Brief Intro to Firebug Firebug at a glance • One of the most popular web debugging tools, with a collection of powerful tools to edit, debug and monitor HTML, CSS and JavaScript, etc. • Many rich features: • Inspect, Log, Profile • Debug, Analyze, Layout How to install • Get Firebug: • Open Firefox, and go to http://getfirebug.com/ • Click Install Firebug, and follow the instructions • Ways to launch Firebug • F12, or the Firebug Button • Right click an element on the page and inspect it with Firebug Firebug Panels • Console: An interactive JavaScript Console • HTML: HTML View • CSS: CSS View • Script: JavaScript Debugger • DOM: A list of DOM properties (defaults to window object) • Net: HTTP traffic monitoring • Cookies: Cookie View Tasks for HTML Panel • Open twitter.com and log in with your account. Activate Firebug. • Tasks with HTML Panel • 1. Which <div> tag corresponds to the navigation bar at the top of the page? • 2. Change the text “Messages” in the navigation bar to “Tweets” • 3. Find out which <div> tag corresponds to the dashboard, which is the left column of the page. Can you change the width of the dashboard to 200px? • 4. Try to figure out the URL of your profile picture at the top left corner of the home page, and use this URL to open this picture in a new tab. • 5. Change your name next to your profile picture to something else, and change the text colour to blue. Inspect HTTP Traffic • Open twitter.com and log in with your account. Activate Firebug. • Tasks with Net Panel • 1. Which request takes the longest time to load? • 2.

Empirical Studies of JavaScript-Based Web Application

Empirical Studies of JavaScript-based Web Application Reliability. Karthik Pattabiraman¹, Frolin Ocariza¹, Kartik Bajaj¹, Ali Mesbah¹, Benjamin Zorn². ¹University of British Columbia (UBC), ²Microsoft Research (MSR). Web 2.0 Applications. Copyright: Karthik Pattabiraman, 2014. Web 2.0 Application: Amazon.com. [Figure: annotated Amazon.com page; labels: Menu, Search bar, Amazon’s own ad, Third-party ad, Gadget, Function.] Web 2.0 applications allow rich UI functionality within a single web page. Modern Web Applications: JavaScript • JavaScript: Implementation of ECMAScript standard – Client-Side JavaScript: used to develop web apps • Executes in client’s browser – send AJAX messages • Responsible for web application’s core functionality • Not easy to write code in – has many “evil” features. JavaScript: History. Brief History of JavaScript (Source: TomBarker.com). “JavaScript (JS) had to ‘look like Java’ only less so, be Java’s dumb kid brother or boy-hostage sidekick. Plus, I had to be done in ten days or something worse than JS would have happened” – Brendan Eich (Inventor of JavaScript). JavaScript: Prevalence • 97 of the Alexa top 100 websites use JavaScript • Thousands of lines of code, often > 10,000. [Figure: bar chart of lines of JavaScript code per website, axis from 0 to 90,000 lines of code, for sites such as Google, YouTube, Yahoo, Baidu, and QQ.] JavaScript: “good” or “Evil”? Vs Eval Calls (Source: Richards et al.

Development Production Line the Short Story

Development Production Line: The Short Story. Jene Jasper. Copyright © 2007-2018 freedumbytes.dev.net (Free Dumb Bytes). Published 3 July 2018, 4.0-beta Edition. While every precaution has been taken in the preparation of this installation manual, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein. This work is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License. To get an idea of the Development Production Line take a look at the following Application Integration overview and Maven vs SonarQube Quality Assurance reports comparison. Contents: 1. Operating System; 1.1. Windows; 1.1.1. Resources; 1.1.2. Desktop; 1.1.3. Explorer; 1.1.4. Windows 7 Start Menu; 1.1.5. Task Manager replacement; 1.1.6. Resource Monitor

Installation and Maintenance Guide

Contract Management Installation and Maintenance Guide. Software Version 6.9.1, May 2016. Title: Gimmal Contract Management Installation and Maintenance Guide V6.9.1. © 2016 Gimmal LLC. Gimmal® is a registered trademark of Gimmal Group. Microsoft® and SharePoint® are registered trademarks of Microsoft. Gimmal LLC believes the information in this publication is accurate as of its publication date. The information in this publication is provided as is and is subject to change without notice. Gimmal LLC makes no representations or warranties of any kind with respect to the information contained in this publication, and specifically disclaims any implied warranties of merchantability or fitness for a particular purpose. Use, copying, and distribution of any Gimmal software described in this publication requires an applicable software license. For the most up-to-date listing of Gimmal product names and information, visit www.gimmal.com. All other trademarks used herein are the property of their respective owners. Software Revision History (publication date: description). October 2015: Updated to Gimmal branding. December 2015: Updated for version 6.8.6 release. March 2016: Updated for version 6.9 release. May 2016: Updated for version 6.9.1 release. If you have questions or comments about this publication, you can email TechnicalPublica- [email protected]. Be sure to identify the guide, version number, section, and page number to which you are referring. Your comments are welcomed and appreciated. Contents: About this Guide; Examples and Conventions; Chapter 1, Environment and System Requirements; Overview of Requirements; Gimmal Contract Management Physical Environment; Gimmal Contract Management and Documentum Content Management System.

Here.Is.Only.Xul

Who am I? Alex Olszewski, Elucidar Software, Co-founder, Lead Developer. What is this presentation about? I was personally assigned to see how XUL and the Mozilla way measured up to RIA application development standards. This presentation will share my journey and ideas and hopefully open your minds to using these concepts for application development. RIA and what it means: Different to many “Web Applications” that have features and functions of “Desktop” applications. Easy install (generally requires only application install) or one-time extra (plug-in). Updates automatically through network connections. Keeps UI state on desktop and application state on server. Runs in a browser or known “Sandbox” environment but has ability to access native OS calls to mimic desktop applications. Designers can use asynchronous communication to make applications more responsive. RIA and what it means (continued): Success of an RIA application will ultimately be measured by how well it can match users’ needs, their way of thinking, and their behaviour. To review, RIA applications take advantage of the “best” of both web and desktop apps. Sources: http://www.keynote.com/docs/whitepapers/RichInternet_5.pdf http://en.wikipedia.org/wiki/Rich_Internet_application My First Steps • Find working examples. Known Mozilla Applications: Firefox, Thunderbird. Standalone Applications: Songbird, Joost, Komodo, FindthatFont, Prism (formerly webrunner) http://labs.mozilla.com/featured-projects/#prism XulMine-demo app http://benjamin.smedbergs.us/XULRunner/ Mozilla

Flare-On 3: Challenge 10 Solution - FLAVA

Flare-On 3: Challenge 10 Solution - FLAVA Challenge Authors: FireEye Labs Advanced Vulnerability Analysis Team (FLAVA) Intro The first part of FLAVA challenge is a JavaScript puzzle based on the notorious Angler Exploit Kit (EK) attacks in 2015 and early 2016. All classic techniques leveraged by Angler, along with some other intriguing tricks utilized by certain APT threat actors, are bundled into this challenge. While Angler EK’s activities have quieted down since the takedown of a Russian group “Lurk” in mid 2016, its advanced anti-analysis and anti-detection techniques are still nuggets of wisdom that we think are worth sharing with the community for future reference. Walkthrough Compromising or malvertising on legitimate websites are the most common ways that exploit kits redirect victims to their landing pages. Exploit kits can redirect traffic multiple times before serving the victim the landing page. Once on the landing page, the exploit kit profiles the victim’s environment, and may choose to serve an exploit that affects it. In the case of this challenge, the landing page is only one redirect away from the compromised site (hxxp://10.11.106.81:18089/flareon_found_in_milpitas.html). Often, Angler’s (and many other EKs’) landing page request has a peculiar, and thus fishy, URL after some seemingly legit HTTP transactions. Layer-0 Walkthrough The landing page may initially seem daunting, and that’s exactly the purpose of obfuscation. But it’s still code, regardless of the forms it may take. After all, it’s supposed to carry out the attack without any user interaction. It’s strongly advised to rewrite the code with meaningful names during analysis, just as one would in IDA when analyzing binaries.

Javascript for PHP Developers

JavaScript for PHP Developers. Stoyan Stefanov. JavaScript for PHP Developers by Stoyan Stefanov. Copyright © 2013 Stoyan Stefanov. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or [email protected]. Editor: Mary Treseler; Production Editor: Kara Ebrahim; Copyeditor: Amanda Kersey; Proofreader: Jasmine Kwityn; Indexer: Meghan Jones, WordCo Indexing; Cover Designer: Randy Comer; Interior Designer: David Futato; Illustrator: Rebecca Demarest. April 2013: First Edition. Revision History for the First Edition: 2013-04-24: First release. See http://oreilly.com/catalog/errata.csp?isbn=9781449320195 for release details. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. JavaScript for PHP Developers, the image of an eastern gray squirrel, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein.

Designing for Extensibility and Planning for Conflict

Designing for Extensibility and Planning for Conflict: Experiments in Web-Browser Design. Benjamin S. Lerner. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy, University of Washington, 2011. Program Authorized to Offer Degree: UW Computer Science & Engineering. University of Washington Graduate School. This is to certify that I have examined this copy of a doctoral dissertation by Benjamin S. Lerner and have found that it is complete and satisfactory in all respects, and that any and all revisions required by the final examining committee have been made. Chair of the Supervisory Committee: Daniel Grossman. Reading Committee: Daniel Grossman, Steven Gribble, John Zahorjan. Date: In presenting this dissertation in partial fulfillment of the requirements for the doctoral degree at the University of Washington, I agree that the Library shall make its copies freely available for inspection. I further agree that extensive copying of this dissertation is allowable only for scholarly purposes, consistent with “fair use” as prescribed in the U.S. Copyright Law. Requests for copying or reproduction of this dissertation may be referred to Proquest Information and Learning, 300 North Zeeb Road, Ann Arbor, MI 48106-1346, 1-800-521-0600, to whom the author has granted “the right to reproduce and sell (a) copies of the manuscript in microform and/or (b) printed copies of the manuscript made from microform.” Signature Date. University of Washington. Abstract. Designing for Extensibility and Planning for Conflict: Experiments in Web-Browser Design. Benjamin S. Lerner. Chair of the Supervisory Committee: Associate Professor Daniel Grossman, UW Computer Science & Engineering. The past few years have seen a growing trend in application development toward “web applications”, a fuzzy category of programs that currently (but not necessarily) run within web browsers, that rely heavily on network servers for data storage, and that are developed and deployed differently from traditional desktop applications.

Javascript: the Used Parts

JavaScript: The Used Parts by Sharath Chowdary Gude. A thesis submitted to the Graduate Faculty of Auburn University in partial fulfillment of the requirements for the Degree of Master of Science. Auburn, Alabama, Feb May 22,2, 2014 2014. Keywords: JavaScript, Empirical Study, Programming languages. Copyright 2014 by Sharath Chowdary Gude. Approved by Dr. Munawar Hafiz, Computer Science and Software Engineering; Dr. Levent Yilmaz, Computer Science and Software Engineering; Dr. Jeff Overbey, Computer Science and Software Engineering. Abstract: JavaScript was designed as a scripting language and gained mainstream adoption even without the creation of a proper formal standard. The success of JavaScript can be attributed to the explosion of the internet and the simplicity of its usage. Even though the web is still its strongest domain, JavaScript is a constantly evolving language. It is now used in OS-free desktop application development, databases, etc. The standards committee wants to revamp the standard by adding new features and providing solutions to controversial features of the language [1]. This calls for large-scale empirical studies spanning all the diverse paradigms of JavaScript. The interpreted nature of the language needs a proper mechanism to perform both static and dynamic analysis. The results of these analyses should be interpreted to understand the general usage of the language by the programming community and to formulate the best ways to evolve it. The inherent misconceptions among the programming community about the language are to be studied, and usage patterns are to be analyzed to generate data on the usage of features, thus justifying the argument that a feature needs refining. The thesis explores the features in the language deemed problematic by experts, the way these features are used by the programming community, and the need for refining these features, backed up with an empirical study.

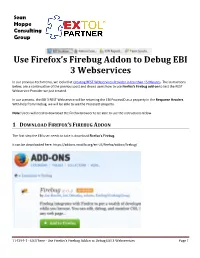

Use Firefox's Firebug Addon to Debug EBI 3 Webservices

Sean Hoppe Consulting Group. Use Firefox’s Firebug Addon to Debug EBI 3 Webservices. In our previous tech memo, we looked at Creating REST Webservices Provider in less than 15 Minutes. The instructions below are a continuation of the previous post and show users how to use Firefox's Firebug add-on to test the REST Webservice Provider we just created. In our scenario, the EBI 3 REST Webservice will be returning the EBI ProcessID as a property in the Response Headers. With help from Firebug, we will be able to see the ProcessID property. Note: Users will need to download the Firefox browser to be able to use the instructions below. 1 DOWNLOAD FIREFOX’S FIREBUG ADDON The first step the EBI user needs to take is to download Firefox's Firebug. It can be downloaded here: https://addons.mozilla.org/en-US/firefox/addon/firebug/ 114294-1 - EBIThree - Use Firefox’s Firebug Addon to Debug EBI 3 Webservices Page 1 2 START WEBSERVICE PROVIDER We will want to start the EBI 3 Webservice Provider, if it has not started. Users can refer to Starting Local Server in the previous tech memo: 114293-1 - Create REST Webservices Provider in less than 15 Minutes. 3 ACTIVATE FIREFOX’S FIREBUG Open up the Firefox Browser and activate Firebug. Initially, the Firebug icon might be greyed out (below). If the Firebug icon is greyed out, click on it to activate Firebug (below). Once Firebug is activated, we should see a lower control panel for Firebug. 4 TEST EBI 3 WEBSERVICE Once the EXTOL Webservice Monitor is started and Firebug is active, we can input the Webservice URL into the browser.

Well, I'm Back: Choose Firefox Now, Or Later You Won't Get a Choice

Well, I'm Back. Robert O'Callahan. Christian. Repatriate Kiwi. Mozilla hacker. FRIDAY, 8 AUGUST 2014. Choose Firefox Now, Or Later You Won't Get A Choice. I know it's not the greatest marketing pitch, but it's the truth. Google is bent on establishing platform domination unlike anything we've ever seen, even from late-1990s Microsoft. Google controls Android, which is winning; Chrome, which is winning; and key Web properties in Search, Youtube, Gmail and Docs, which are all winning. The potential for lock-in is vast and they're already exploiting it, for example by restricting certain Google Docs features (e.g. offline support) to Chrome users, and by writing contracts with Android OEMs forcing them to make Chrome the default browser. Other bad things are happening that I can't even talk about. Individual people and groups want to do the right thing but the corporation routes around them. (E.g. PNaCl and Chromecast avoided Blink's Web standards commitments by declaring themselves not part of Blink.) If Google achieves a state where the Internet is really only accessible through Chrome (or Android apps), that situation will be very difficult to escape from, and it will give Google more power than any company has ever had. Microsoft and Apple will try to stop Google but even if they were to succeed, their goal is only to replace one victor with another. So if you want an Internet --- which means, in many ways, a world --- that isn't controlled by Google, you must stop using Chrome now and encourage others to do the same. If you don't, and Google wins, then in years to come you'll wish you had a choice and have only yourself to blame for spurning it now.