ANVIL the Video Annotation Research Tool

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Treatise on Combined Metalworking Techniques: Forged Elements and Chased Raised Shapes Bonnie Gallagher

Rochester Institute of Technology RIT Scholar Works Theses Thesis/Dissertation Collections 1972 Treatise on combined metalworking techniques: forged elements and chased raised shapes Bonnie Gallagher Follow this and additional works at: http://scholarworks.rit.edu/theses Recommended Citation Gallagher, Bonnie, "Treatise on combined metalworking techniques: forged elements and chased raised shapes" (1972). Thesis. Rochester Institute of Technology. Accessed from This Thesis is brought to you for free and open access by the Thesis/Dissertation Collections at RIT Scholar Works. It has been accepted for inclusion in Theses by an authorized administrator of RIT Scholar Works. For more information, please contact [email protected]. TREATISE ON COMBINED METALWORKING TECHNIQUES i FORGED ELEMENTS AND CHASED RAISED SHAPES TREATISE ON. COMBINED METALWORKING TECHNIQUES t FORGED ELEMENTS AND CHASED RAISED SHAPES BONNIE JEANNE GALLAGHER CANDIDATE FOR THE MASTER OF FINE ARTS IN THE COLLEGE OF FINE AND APPLIED ARTS OF THE ROCHESTER INSTITUTE OF TECHNOLOGY AUGUST ( 1972 ADVISOR: HANS CHRISTENSEN t " ^ <bV DEDICATION FORM MUST GIVE FORTH THE SPIRIT FORM IS THE MANNER IN WHICH THE SPIRIT IS EXPRESSED ELIEL SAARINAN IN MEMORY OF MY FATHER, WHO LONGED FOR HIS CHILDREN TO HAVE THE OPPORTUNITY TO HAVE THE EDUCATION HE NEVER HAD THE FORTUNE TO OBTAIN. vi PREFACE Although the processes of raising, forging, and chasing of metal have been covered in most technical books, to date there is no major source which deals with the functional and aesthetic requirements -

FIG. 7001 Flexible Coupling

COUPLINGS FIG. 7001 Flexible Coupling The Gruvlok® Fig. 7001 Coupling forms a flexible grooved end pipe joint connection with the versatility for a wide range of applications. Services include mechanical and plumbing, process piping, mining and oil field piping, and many others. The coupling design supplies optimum strength for working pressures to 1000 PSl (69 bar) without excessive casting weight. The flexible design eases pipe and equipment installation while providing the designed-in benefit of reducing pipeline noise and vibration transmission without the addition of special components. To ease coupling handling and assembly and to assure consistent quality, sizes 1" through 14" couplings have two 180° segment housings, 16" have three 120˚ segment housings, and 18" through 24" sizes have four 90° segment housings, while the 28" O.D. and 30" O.D. For Listings/Approval Details and Limitations, visit our website at www.anvilintl.com or couplings have six 60° segment housings. The 28" O.D. and 30" O.D. are weld-ring couplings. contact an Anvil® Sales Representative. MATERIAL SPECIFICATIONS BOLTS: q Grade “T” Nitrile (Orange color code) SAE J429, Grade 5, Zinc Electroplated -20°F to 180°F (Service Temperature Range)(-29°C to 82°C) ISO 898-1, Class 8.8, Zinc Electroplated followed by a Yellow Chromate Dip Recommended for petroleum applications. Air with oil vapors and HEAVY HEX NUTS: vegetable and mineral oils. ASTM A563, Grade A, Zinc Electroplated NOT FOR USE IN HOT WATER OR HOT AIR ISO 898-2, Class 8.8, Zinc Electroplated followed by a Yellow Chromate Dip q Grade “O” Fluoro-Elastomer (Blue color code) HARDWARE KITS: Size Range: 1" - 12" (C style only) 3 20°F to 300°F (Service Temperature Range)(-29°C to 149°C) q 304 Stainless Steel (available in sizes up to /4") Kit includes: (2) Bolts per ASTM A193, Grade B8 and Recommended for high temperature resistance to oxidizing acids, (2) Heavy Hex Nuts per ASTM A194, Grade 8. -

Aluminum Alloy AA-6061 and RSA-6061 Heat Treatment for Large Mirror Applications

Utah State University DigitalCommons@USU Space Dynamics Lab Publications Space Dynamics Lab 1-1-2013 Aluminum Alloy AA-6061 and RSA-6061 Heat Treatment for Large Mirror Applications T. Newsander B. Crowther G. Gubbels R. Senden Follow this and additional works at: https://digitalcommons.usu.edu/sdl_pubs Recommended Citation Newsander, T.; Crowther, B.; Gubbels, G.; and Senden, R., "Aluminum Alloy AA-6061 and RSA-6061 Heat Treatment for Large Mirror Applications" (2013). Space Dynamics Lab Publications. Paper 102. https://digitalcommons.usu.edu/sdl_pubs/102 This Article is brought to you for free and open access by the Space Dynamics Lab at DigitalCommons@USU. It has been accepted for inclusion in Space Dynamics Lab Publications by an authorized administrator of DigitalCommons@USU. For more information, please contact [email protected]. Aluminum alloy AA-6061 and RSA-6061 heat treatment for large mirror applications T. Newswandera, B. Crowthera, G. Gubbelsb, R. Sendenb aSpace Dynamics Laboratory, 1695 North Research Park Way, North Logan, UT 84341;bRSP Technology, Metaalpark 2, 9936 BV, Delfzijl, The Netherlands ABSTRACT Aluminum mirrors and telescopes can be built to perform well if the material is processed correctly and can be relatively low cost and short schedule. However, the difficulty of making high quality aluminum telescopes increases as the size increases, starting with uniform heat treatment through the thickness of large mirror substrates. A risk reduction effort was started to build and test a ½ meter diameter super polished aluminum mirror. Material selection, the heat treatment process and stabilization are the first critical steps to building a successful mirror. In this study, large aluminum blanks of both conventional AA-6061 per AMS-A-22771 and RSA AA-6061 were built, heat treated and stress relieved. -

Discuss Ways for the Beginning Blacksmith to Start Forging Metal

Digital Demo Outline Objective: Discuss ways for the beginning blacksmith to start forging metal. Talk about strategies for tooling up: Where and how to find an anvil and anvil alternatives, hammers, tongs and forges. Discuss other shop tools that are the most necessary to get started. Do a short demo on the basics for moving the metal. Blacksmithing has a long tradition with many ways to reach the same goal. The information I’m sharing here is based on my own journey into blacksmithing and watching many beginners get started and seeing their challenges and frustrations. How do you want to approach blacksmithing? Do you want to gain experience by making your own tools or would you rather get to forging metal? Something in between? It’s good to think about this and form a game plan. It’s super easy to get bogged down in the process of making tools when all you really want to do is move hot metal. On the other hand, tons of great experience can be gained by making one’s own tools. -Outline of the basic starter kit.- •First hammer, selecting for size and shape. Handle modifications, Head modifications. •Anvil alternatives. Finding a “real” anvil, new vs. used, a big block of steel, section of railroad track and mounting on a stand. Using a ball bearing to show rebound test and a hammer for ring test. •What size tongs to buy and where. 3/8” square, ½” square, ¾” square. Why square and not round. •Forge: Propane or coal? Build or buy? With this class we will focus on propane because it is the easiest to get started with. -

Book of Abstracts Translata 2017 Scientific Committee

Translata III Book of Abstracts Innsbruck, 7 – 9 December, 2017 TRANSLATA III Redefining and Refocusing Translation and Interpreting Studies Book of Abstracts of the 3rd International Conference on Translation and Interpreting Studies December 7th – 9th, 2017 University Innsbruck Department of Translation Studies Translata 2017 Book of Abstracts 3 Edited by: Peter Sandrini Department of Translation studies University of Innsbruck Revised by: Sandra Reiter Department of Translation studies University of Innsbruck ISBN: 978-3-903030-54-1 Publication date: December 2017 Published by: STUDIA Universitätsverlag, Herzog-Siegmund-Ufer 15, A-6020 Innsbruck Druck und Buchbinderei: STUDIA Universitätsbuchhandlung und –verlag License: The Bookof Abstracts of the 3rd Translata Conference is published under the Creative Commons Attribution-ShareAlike 4.0 International License (https://creativecommons.org/licenses) Disclaimer: This publications has been reproduced directly from author- prepared submissions. The authors are responsible for the choice, presentations and wording of views contained in this publication and for opinions expressed therin, which are not necessarily those of the University of Innsbruck or, the organisers or the editor. Edited with: LibreOffice (libreoffice.org) and tuxtrans (tuxtrans.org) 4 Book of Abstracts Translata 2017 Scientific committee Local (in alphabetical order): Erica Autelli Mascha Dabić Maria Koliopoulou Martina Mayer Alena Petrova Peter Sandrini Astrid Schmidhofer Andy Stauder Pius ten Hacken Michael Ustaszewski -

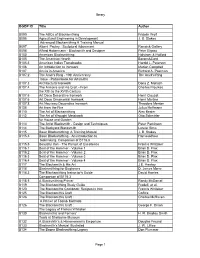

Library BGOP ID Title Author B090 the Abcs of Blacksmithing Fridolin

library BGOP ID Title Author B090 The ABCs of Blacksmithing Fridolin Wolf B095 Agricultural Engineering in Development J. B. Stokes Advanced Blacksmithing:A Training Manual B097 Albert Pauley : Sculptural Adornment Renwick Gallery B098 Alfred Habermann - Blacksmith and Designer Peter Elgass B100 American Blacksmithing Holstrom & Holford B105 The American Hearth Barons&Card B105.5 American Indian Tomahawks Harold L. Peterson B106 An Introduction to Ironwork Marian Campbell B107 Anvils in America Richard A. Postman B107.2 The Anvil's Ring - 10th Anniversary The Anvil's Ring Issue - Patternbook for Artsmiths B107.3 Architectural Ironwork Dona Z. Meilach B107.4 The Armoire and His Craft - From Charles Ffoulkes the XIth to the XVIth Century B107.5 Art Deco Decorative Ironwork Henri Clouzot B107.6 Art Deco Ornamental Ironwork Henri Martine B107.8 Art Nouveau Decorative Ironwork Theodore Menten B108 Art from the Fire Julius Hoffmann B110 The Art of Blacksmithing Alex Bealer B112 The Art of Wrought Metalwork Otto Schmirler for House and Garden B113 The Artist Blacksmith - Design and Techniques Peter Parkinson B114 The Backyard Blacksmith Lorelei Sims B115 Basic Blacksmithing: A Training Manual J. B. Stokes B115.3 Basic Blacksmithing - An introduction to Harries&Heer toolmaking. Companion of B118.3 B115.5 Beautiful Iron - The Pursuit of Excellence Francis Whitaker B116.1 Best of the Hammer - Volume 1 Brian D. Flax B116.2 Best of the Hammer - Volume 2 Brian D. Flax B116.3 Best of the Hammer - Volume 3 Brian D. Flax B116.4 Best of the Hammer - Volume 4 Brian D. Flax B117 The Blacksmith & His Art J.E. -

Activity Report 2013

Project-Team EXPRESSION Expressiveness in Human Centered Data/Media Vannes-Lannion-Lorient Activity Report Team EXPRESSION IRISA Activity Report 2013 2013 Contents 1 Team 3 2 Overall Objectives 4 2.1 Overview . 4 2.2 Key Issues . 4 3 Scientific Foundations 5 3.1 Gesture analysis, synthesis and recognition . 5 3.2 Speech processing and synthesis . 9 3.3 Text processing . 13 4 Application Domains 16 4.1 Expressive gesture . 16 4.2 Expressive speech . 16 4.3 Expression in textual data . 17 5 Software 17 5.1 SMR . 17 5.2 Roots ......................................... 18 5.3 Web based listening test system . 20 5.4 Automatic segmentation system . 21 5.5 Corpus-based Text-to-Speech System . 21 5.6 Recording Studio . 22 5.6.1 Hardware architecture . 23 5.6.2 Software architecture . 23 6 New Results 24 6.1 Data processing and management . 24 6.2 Expressive Gesture . 24 6.2.1 High-fidelity 3D recording, indexing and editing of French Sign Language content - Sign3D project . 24 6.2.2 Using spatial relationships for analysis and editing of motion . 26 6.2.3 Synthesis of human motion by machine learning methods: a review . 27 6.2.4 Character Animation, Perception and Simulation . 28 6.3 Expressive Speech . 29 6.3.1 Optimal corpus design . 30 6.3.2 Pronunciation modeling . 31 6.3.3 Optimal speech unit selection for text-to-speech systems . 32 6.3.4 Experimental evaluation of a statistical speech synthesis system . 32 6.4 Miscellaneous . 33 2 Team EXPRESSION IRISA Activity Report 2013 6.5 Expression in textual data . -

Culture in Language and Cognition

Chapter 37 in Xu Wen and John R. Taylor (Eds.) (2021) The Routledge Handbook of Cognitive Linguistics, pp. 387-407. Downloadable from https://psyarxiv.com/prm7u/ Preprint DOI 10.31234/osf.io/prm7u Culture in language and cognition Chris Sinha Abstract This Handbook chapter provides an overview of the interdisciplinary field of language, cognition and culture. The chapter explores the historical background of research from anthropological, psychological and linguistic perspectives. The key concepts of linguistic relativity, semiotic mediation and extended embodiment are explored and the field of cultural linguistics is outlined. Research methods are critically described. The state of the art in the key research topics of colour, space and time, and self and identities is outlined. Introduction Independence versus interdependence of language, mind and culture Cognitive Linguistics (CL) was forged in the matrix of cognitive sciences as a distinctive and highly interdisciplinary approach in linguistics. Foundational texts such as Lakoff (1987), Langacker (1987) and Talmy (2000) drew upon long but often neglected traditions in cognitive psychology, especially Gestalt psychology (Sinha 2007). A fundamental tenet of CL is that the cognitive capacities and processes that speakers and hearers employ in using language are domain-general: they underpin not only language, but also other areas of cognition and perception. This is in contrast with Generative (or Formal) Linguistics, to which CL historically was a critical reaction, which takes a modular view of both the human language faculty and of its subsystems. For Generative Linguists, not only is the subsystem of syntax autonomous from semantics and phonology, but language as a system is autonomous both from other cognitive processes, and from any influence by the culture and social organization of the language community. -

2012 Conference Abstracts

NLC2012 NEUROBIOLOGY OF LANGUAGE CONFERENCE DONOstIA - san SEbastIAN, SPAIN OctOBER 25TH - 27TH, 2012 ABSTRACTS Welcome to NLC 2012, Donostia-San Sebastián Welcome to the Fourth Annual Neurobiology of Language Conference (NLC) run by the Society for the Neurobiology of Language (SNL). All is working remarkably smoothly thanks to our past president (Greg Hickok), the Board of Directors, the Program Committee, the Nomination Committee, Society Officers, and our meeting planner, Shauney Wilson. A sincere round of thanks to them all! Indeed, another round of thanks to our founders Steve Small and Pascale Tremblay hardly suffices to acknowledge their role in bringing the Society and conference to life. The 3rd Annual NLC in Annapolis was a great success – scientifically and fiscally – with great talks, posters, and a profit to boot (providing a little cushion for future meetings). There were 476 attendees, about one-third of which were students. Indeed, about 40% of SNL members are students – and that’s great because you are the scientists of tomorrow! We want you engaged and present. We thank you and ask for your continued involvement. If there were any complaints, and there weren’t very many, it was the lackluster venue. We believe that the natural beauty of San Sebastián will more than make up for that. The past year has witnessed the launching of our new website (http://www.neurolang.org/) and a monthly newsletter. Read them regularly, and feel free to offer input. It goes without saying that you are the reason this Society was formed and will flourish: please join the Society, please nominate officers and vote for them, please submit abstracts for posters and talks, and please attend the annual meeting whenever possible. -

Abstract Book

CONTENTS Session 1. Submerged conflicts. Ethnography of the invisible resistances in the quotidian p. 3 Session 2. Ethnography of predatory and mafia practices 14 Session 3. Young people practicing everyday multiculturalism. An ethnographic look 16 Session 4. Innovating universities. Everything needs to change, so everything can stay the same? 23 Session 5. NGOs, grass-root activism and social movements. Understanding novel entanglements of public engagements 31 Session 6. Immanence of seduction. For a microinteractionist perspective on charisma 35 Session 7. Lived religion. An ethnographical insight 39 Session 8. Critical ethnographies of schooling 44 Session 9. Subjectivity, surveillance and control. Ethnographic research on forced migration towards Europe 53 Session 10. Ethnographic and artistic practices and the question of the images in contemporary Middle East 59 Session 11. Diffracting ethnography in the anthropocene 62 Session 12. Ethnography of labour chains 64 Session 13. The Chicago School and the study of conflicts in contemporary societies 72 Session 14. States of imagination/Imagined states. Performing the political within and beyond the state 75 Session 15. Ethnographies of waste politics 82 Session 16. Experiencing urban boundaries 87 Session 17. Ethnographic fieldwork as a “location of politics” 98 Session 18. Rethinking ‘Europe’ through an ethnography of its borderlands, periphe- ries and margins 104 Session 19. Detention and qualitative research 111 Session 20. Ethnographies of social sciences as a vocation 119 Session 21. Adjunct Session. Gender and culture in productive and reproductive life 123 Poster session 129 Abstracts 1 SESSION 1 Submerged conflicts. Ethnography of the invisible resistances in the quotidian convenor: Pietro Saitta, Università di Messina, [email protected] Arts of resistance. -

Anvil-Strut Price Sheet AS-3.11 | Effective: March 7, 2011 | Cancels Price Sheet AS-7.10 of July 6, 2010

Anvil-StrUT Price Sheet AS-3.11 | Effective: March 7, 2011 | Cancels Price Sheet AS-7.10 of July 6, 2010 FOR THE MOST CURRENT PRODUCT/PRICING INFORMATION ON ANVIL PRODUCTS, PLEASE VISIT OUR WEBSITE AT WWW.ANVILINTL.COM FREIGHT ALLOWANCE: All prices are F.O.B. point of shipment. On shipments weighing 2,500 pounds or more, rail freight (or motor freight at the lowest published rate) is allowed to all continental U.S. rail points or all U.S. highway points listed in published tariffs (Alaska and Hawaii excluded). In no case will more than actual freight be allowed. NOTE: All orders are accepted on the basis of prices in effect at the time of shipment. NOTICE: To the prices and terms quoted, there will be added any manufacturers or sales tax payable on the transaction under any effective statute. GENERAL • UPC numbers and SPIN commodity identification numbers are available upon request. • To obtain current ISO certifications, listings and other approvals, visit Anvil’s Web site at www.anvilintl.com. • Pricing is subject to change without notice. Go to Anvil’s Web site for the most current list price information, or contact your local Anvil representative. LOSS OR DAMAGE CLAIMS: All goods are shipped at buyer’s risk. Shipments should be carefully examined upon delivery and before the carrier’s delivery receipt is signed. If any loss or damage is evident, the buyer should make an appropriate notation on the delivery receipt and insist that the carrier’s driver initial such exception. The contents of all packages should be carefully examined for concealed damage as soon as possible after delivery, so that the carrier can be requested, within 48 hours after delivery, to arrange for prompt inspection of the damaged goods. -

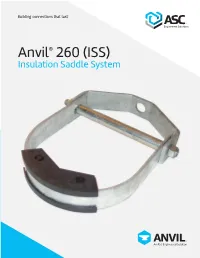

Anvil® 260 (ISS)

® Anvil 260 (ISS) Insulation Saddle System BUILDING CONNECTIONS THAT LAST For over 150 years, Anvil has worked diligently to build a strong, vibrant tradition of making connections — pipe to pipe and people to people. We pride ourselves in providing the finest-quality pipe products and services with integrity and dedication to superior customer service at all levels. We provide expertise and product solutions for a wide range of applications, from plumbing, mechanical, HVAC, industrial and fire protection to mining, oil and gas. Our comprehensive line of products includes: grooved pipe couplings, grooved and plain-end fittings, valves, cast and malleable iron fittings, forged steel fittings, steel pipe nipples and couplings, pipe hangers and supports, channel and strut fittings, mining and oil field fittings, along with much more. As an additional benefit to our customers, Anvil offers a complete and comprehensive Design Services Analysis for mechanical equipment rooms, to help you determine the most effective and cost-efficient piping solutions for your pipe system. At Anvil, we believe that responsive and accessible customer support is what makes the difference between simply delivering products — and delivering solutions. 260 (ISS) Insulation Saddle System Benefits • Reduces overall installation time The Anvil 260 (ISS) Insulation Saddle System reduces your overall installation time and greatly simplifies the way you insulate your copper or steel pipe • More competitive quotes systems. The revolutionary design of the 260 ISS spreads the load evenly • Saves labor for plumbing, over the hanger’s wide base — eliminating the need for wood blocks, mechanical, and insulating contractors shields, or costly hanger adjustments. • Suited for chilled and hot water systems from 40°F to 200°F Fully tested and rated for a temperature range from 40°F to 200°F, the 260 ISS is ideal for use with both chilled and hot water systems.