Platform Agnostic Use Case Based System Validation and Debug

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Writing and Reviewing Use-Case Descriptions

PART II: WRITING AND REVIEWING USE-CASE DESCRIPTIONS. Part I, Getting Started with Use-Case Modeling, introduced the basic concepts of use-case modeling, including defining the basic concepts and understanding how to use these concepts to define the vision, find actors and use cases, and to define the basic concepts the system will use. If we go no further, we have an overview of what the system will do, an understanding of the stakeholders of the system, and an understanding of the ways the system provides value to those stakeholders. What we do not have, if we stop at this point, is an understanding of exactly what the system does. In short, we lack the details needed to actually develop and test the system. Some people, having only come this far, wonder what use-case modeling is all about and question its value. If one only comes this far with use-case modeling, we are forced to agree; the real value of use-case modeling comes from the descriptions of the interactions of the actors and the system, and from the descriptions of what the system does in response to the actions of the actors. Surprisingly, and disappointingly, many teams stop after developing little more than simple outlines for their use cases and consider themselves done. These same teams encounter problems because their use cases are vague and lack detail, so they blame the use-case approach for having let them down. The failing in these cases is not with the approach, but with its application.

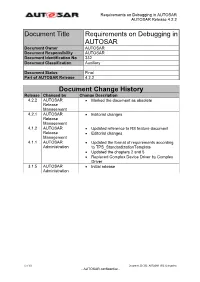

Requirements on Debugging in AUTOSAR

Requirements on Debugging in AUTOSAR, AUTOSAR Release 4.2.2. Document ID 332: AUTOSAR_SRS_Debugging.

Document Title: Requirements on Debugging in AUTOSAR. Document Owner: AUTOSAR. Document Responsibility: AUTOSAR. Document Identification No: 332. Document Classification: Auxiliary. Document Status: Final. Part of AUTOSAR Release: 4.2.2.

Document Change History (release, changed by, change description): 4.2.2, AUTOSAR Release Management: Marked the document as obsolete; 4.2.1, AUTOSAR Release Management: Editorial changes; 4.1.2, AUTOSAR Release Management: Updated reference to RS feature document, editorial changes; 4.1.1, AUTOSAR Administration: Updated the format of requirements according to TPS_StandardizationTemplate, updated the chapters 2 and 5, replaced Complex Device Driver by Complex Driver; 3.1.5, AUTOSAR Administration: Initial release.

Disclaimer: This specification and the material contained in it, as released by AUTOSAR, is for the purpose of information only. AUTOSAR and the companies that have contributed to it shall not be liable for any use of the specification. The material contained in this specification is protected by copyright and other types of Intellectual Property Rights. The commercial exploitation of the material contained in this specification requires a license to such Intellectual Property Rights. This specification may be utilized or reproduced without any modification, in any form or by any means, for informational purposes only. For any other purpose, no part of the specification may be utilized or reproduced, in any form or by any means, without permission in writing from the publisher. The AUTOSAR specifications have been developed for automotive applications only.

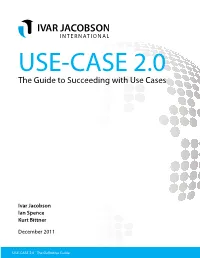

The Guide to Succeeding with Use Cases

USE-CASE 2.0: The Guide to Succeeding with Use Cases. Ivar Jacobson, Ian Spence, Kurt Bittner. December 2011. USE-CASE 2.0 The Definitive Guide.

Contents: About this Guide; How to read this Guide; What is Use-Case 2.0?; First Principles; Principle 1: Keep it simple by telling stories; Principle 2: Understand the big picture; Principle 3: Focus on value; Principle 4: Build the system in slices; Principle 5: Deliver the system in increments; Principle 6: Adapt to meet the team’s needs; Use-Case 2.0 Content; Things to Work With; Work Products; Things to do; Using Use-Case 2.0; Use-Case 2.0: Applicable for all types of system; Use-Case 2.0: Handling all types of requirement; Use-Case 2.0: Applicable for all development approaches; Use-Case 2.0: Scaling to meet your needs – scaling in, scaling out and scaling up; Conclusion; Appendix 1: Work Products; Supporting Information; Test Case; Use-Case Model; Use-Case Narrative; Use-Case Realization; Glossary of Terms; Acknowledgements; General; People; Bibliography; About the Authors.

© 2005-2011 Ivar Jacobson International SA. All rights reserved.

About this Guide: This guide describes how to apply use cases in an agile and scalable fashion. It builds on the current state of the art to present an evolution of the use-case technique that we call Use-Case 2.0.

INCOSE: the FAR Approach “Functional Analysis/Allocation and Requirements Flowdown Using Use Case Realizations”

In Proceedings of the 16th Intern. Symposium of the International Council on Systems Engineering (INCOSE'06), Orlando, FL, USA, Jul 2006.

The FAR Approach – Functional Analysis/Allocation and Requirements Flowdown Using Use Case Realizations. Magnus Eriksson (1,2), Kjell Borg (1), Jürgen Börstler (2). (1) BAE Systems Hägglunds AB, SE-891 82 Örnsköldsvik, Sweden, {magnus.eriksson, kjell.borg}@baesystems.se. (2) Umeå University, SE-901 87 Umeå, Sweden, {magnuse, jubo}@cs.umu.se. Copyright © 2006 by Magnus Eriksson, Kjell Borg and Jürgen Börstler. Published and used by INCOSE with permission.

Abstract. This paper describes a use case driven approach for functional analysis/allocation and requirements flowdown. The approach utilizes use cases and use case realizations for functional architecture modeling, which in turn form the basis for design synthesis and requirements flowdown. We refer to this approach as the FAR (Functional Architecture by use case Realizations) approach. The FAR approach is currently applied in several large-scale defense projects within BAE Systems Hägglunds AB and the experience so far is quite positive. The approach is illustrated throughout the paper using the well known Automatic Teller Machine (ATM) example.

INTRODUCTION. Organizations developing software intensive defense systems, for example vehicles, are today faced with a number of challenges. These challenges are related to the characteristics of both the market place and the system domain.

• Systems are growing ever more complex, consisting of tightly integrated mechanical, electrical/electronic and software components.
• Systems have very long life spans, typically 30 years or longer.
• Due to reduced acquisition budgets, these systems are often developed in relatively short series; ranging from only a few to several hundred units.

PlantUML Language Reference Guide (Version 1.2021.2)

Drawing UML with PlantUML. PlantUML Language Reference Guide (Version 1.2021.2).

PlantUML is a component that allows you to quickly write:

• Sequence diagram
• Usecase diagram
• Class diagram
• Object diagram
• Activity diagram
• Component diagram
• Deployment diagram
• State diagram
• Timing diagram

The following non-UML diagrams are also supported:

• JSON Data
• YAML Data
• Network diagram (nwdiag)
• Wireframe graphical interface
• Archimate diagram
• Specification and Description Language (SDL)
• Ditaa diagram
• Gantt diagram
• MindMap diagram
• Work Breakdown Structure diagram
• Mathematic with AsciiMath or JLaTeXMath notation
• Entity Relationship diagram

Diagrams are defined using a simple and intuitive language.

1 Sequence Diagram

1.1 Basic examples

The sequence -> is used to draw a message between two participants. Participants do not have to be explicitly declared. To have a dotted arrow, you use -->. It is also possible to use <- and <--. That does not change the drawing, but may improve readability. Note that this is only true for sequence diagrams; rules are different for the other diagrams.

    @startuml
    Alice -> Bob: Authentication Request
    Bob --> Alice: Authentication Response
    Alice -> Bob: Another authentication Request
    Alice <-- Bob: Another authentication Response
    @enduml

1.2 Declaring participant

If the keyword participant is used to declare a participant, more control on that participant is possible. The order of declaration will be the (default) order of display. Using these other keywords to declare participants

Use Case Tutorial Version X.X ● April 18, 2016

Use Case Tutorial Version X.x ● April 18, 2016. Company Name Limited, Street, City, State ZIP, Country, phone: +1 000 123 4567, www.website.com. [Company Name] [Document Name] [Project Name] [Version Number]

Table of Contents: Introduction; 1. Use cases and activity diagrams; 1.1. Use case modelling; 1.2. Use cases and activity diagrams; 1.3. Actors; 1.4. Describing use cases; 1.5. Scenarios; 1.6. More about actors; 1.7. Modelling the relationships between use cases; 1.8. Stereotypes; 1.9. Sharing behaviour between use cases; 1.10. Alternatives to the main success scenario; 1.11. To extend or include?

User-Stories-Applied-Mike-Cohn.Pdf

User Stories Applied. The Addison-Wesley Signature Series. The Addison-Wesley Signature Series provides readers with practical and authoritative information on the latest trends in modern technology for computer professionals. The series is based on one simple premise: great books come from great authors. Books in the series are personally chosen by expert advisors, world-class authors in their own right. These experts are proud to put their signatures on the covers, and their signatures ensure that these thought leaders have worked closely with authors to define topic coverage, book scope, critical content, and overall uniqueness. The expert signatures also symbolize a promise to our readers: you are reading a future classic.

The Addison-Wesley Signature Series Signers: Kent Beck and Martin Fowler. Kent Beck has pioneered people-oriented technologies like JUnit, Extreme Programming, and patterns for software development. Kent is interested in helping teams do well by doing good: finding a style of software development that simultaneously satisfies economic, aesthetic, emotional, and practical constraints. His books focus on touching the lives of the creators and users of software. Martin Fowler has been a pioneer of object technology in enterprise applications. His central concern is how to design software well. He focuses on getting to the heart of how to build enterprise software that will last well into the future. He is interested in looking behind the specifics of technologies to the patterns, practices, and principles that last for many years; these books should be usable a decade from now.

UNIT 1 UML DIAGRAMS Introduction to OOAD – Unified Process

VEL TECH HIGH TECH Dr. RANGARAJAN Dr. SAKUNTHALA ENGINEERING COLLEGE. YEAR/SEM: III/V, CS6502-OOAD.

UNIT 1 UML DIAGRAMS: Introduction to OOAD – Unified Process – UML diagrams – Use Case – Class Diagrams – Interaction Diagrams – State Diagrams – Activity Diagrams – Package, component and Deployment Diagrams.

INTRODUCTION TO OOAD

ANALYSIS: Analysis is a creative activity or an investigation of the problem and requirements. E.g., to develop a banking system, analysis asks: How will the system be used? Who are the users? What are its functionalities?

DESIGN: Design is to provide a conceptual solution that satisfies the requirements of a given problem. E.g., for a Book Bank System, design yields: Bank (Bank name, No of Members, Address); Student (Membership No, Name, Book Name, Amount Paid).

OBJECT ORIENTED ANALYSIS (OOA): Object Oriented Analysis is a process of identifying classes that play an important role in achieving system goals and requirements. E.g., for a Book Bank System, the classes or objects identified are Book-details, Student-details, Membership-Details.

OBJECT ORIENTED DESIGN (OOD): Object Oriented Design is to design the classes identified during the analysis phase and to provide the relationship that exists between them that satisfies the requirements. E.g., for the Book Bank System, the class names are: Book-Bank (Book-Name, No-of-Members, Address); Student (Name, Membership No, Amount-Paid).

OBJECT ORIENTED ANALYSIS AND DESIGN (OOAD)

• OOAD is a Software Engineering approach that models an application by a set of Software Development Activities.
• OOAD emphasizes identifying, describing and defining the software objects and shows how they collaborate with one another to fulfill the requirements by applying the object oriented paradigm and visual modeling throughout the development life cycles.

Use Cases, User Stories and BizDevOps

Use Cases, User Stories and BizDevOps. Peter Forbrig, University of Rostock, Chair in Software Engineering, Albert-Einstein-Str. 22, 18055 Rostock, [email protected]

Abstract. DevOps is currently discussed a lot in computer science communities. BizDev (business development) is only mentioned once in a computer science paper in connection with Continuous Software Engineering. Otherwise it is used in the domain of business administration only. Additionally, there seems to be a different interpretation of the term in the two communities. The paper discusses the different interpretations found in the literature. Additionally, the idea of BizDevOps is discussed in conjunction with further ideas of taking new requirements for software features into account. These requirements can be described by models on different levels of granularity. Starting points are use cases, use-case slices, user stories, and scenarios. They have to be communicated between stakeholders. It is argued in this paper that storytelling can be a solution for that. It is used in software development as well as in management.

Keywords: BizDev, DevOps, BizDevOps, Continuous Requirements Engineering, Continuous Software Engineering, Agile Software Development, Storytelling.

1 Introduction. Developing businesses is often related to the development of software because nowadays business processes have to be supported by IT. Continuous requirements engineering has to be performed to continuously provide the optimal support. Continuous business development seems to be a good description for the combined development of software and business. This term is not used very often. Nevertheless, it exists, as in [17]. However, more often the abbreviation BizDev (business development) is used.

The Role of Use Cases in Requirements and Analysis Modeling

The Role of Use Cases in Requirements and Analysis Modeling. Hassan Gomaa and Erika Mir Olimpiew, Department of Information and Software Engineering, George Mason University, Fairfax, VA, [email protected], [email protected]

Abstract. This paper describes the role of use cases in the requirements and analysis modeling phases of a model-driven software engineering process. It builds on previous work by distinguishing between the black box and white box views of a system in the requirements and analysis phases. Furthermore, this paper describes and relates test models to the black box and white box views of a system.

1 Introduction. Use case modeling is widely used in modern software development methods as an approach for describing a system’s software requirements [1]. From the use case model, it is possible to determine other representations of the system at the same level of abstraction as well as representations at lower levels of abstraction. Since the use case model is a representation of software requirements, it presents an external view, also referred to as a black box view, of the system. In this paper, we consider other black box views of the system to be at the same level of abstraction as the use case model. Views that address the internals of the system are white box views, which are at lower levels of abstraction. This work builds on the previous work on use case modeling in model-driven software engineering by distinguishing between black box and white box views of the system in the requirements and analysis phases. It also describes and relates software test models to the black box and white box views of the system.

7 Flavours of DevOps Implementation

Aspire Systems' DevOps Competency. attention. always. 7 Flavours of DevOps Implementation. Author: Kaviarasan Selvaraj, Marketing Manager. Practice Head: Althaf Ali, DevOps Solution Architect. © 2017 Aspire Systems.

Contents: Continuous Integration/Continuous Delivery (CI/CD); Infrastructure Provisioning; Containerization; Agile Methodology and Scrum Implementation; IT Infrastructure Monitoring Automation; Infrastructure as Code; DevOps Orchestration; References.

From being laborious and silo-ed to being consolidated and collaborative, the Software Development Life Cycle (SDLC) has seen a steady evolution mirroring the temperament of the markets around the world. Today, the exponential spread of DevOps can be attributed to the fact that the IT world is at the epicentre of frequent technological disruptions. The software development models of today are predominantly agile; the precursor to the DevOps movement. Thus the transition to adopting DevOps methodology is now much easier than ever before. With this, the industry has not only gotten rid of the rigidity that existed between the teams that took part in the development lifecycle but also the ways in which the DevOps way of Software Development can be inculcated into the project streams. Thus organizations, irrespective of their industry, can adapt to DevOps as a whole or in parts to meet their business needs. Here are the 7 flavours of DevOps Implementation that Aspire Systems advocates and has offered to customers across the world.

Continuous Integration/Continuous Delivery (CI/CD). The process of CI/CD encapsulates the philosophy of DevOps: constant integration and consistent testing to guarantee continuous delivery for the customers, especially for the ones with really low appetite for risks.

Case No COMP/M.4747 – IBM / TELELOGIC REGULATION (EC)

EN. This text is made available for information purposes only. A summary of this decision is published in all Community languages in the Official Journal of the European Union. Case No COMP/M.4747 – IBM / TELELOGIC. Only the English text is authentic. REGULATION (EC) No 139/2004 MERGER PROCEDURE. Article 8(1). Date: 05/03/2008. Brussels, 05/03/2008, C(2008) 823 final. PUBLIC VERSION.

COMMISSION DECISION of 05/03/2008 declaring a concentration to be compatible with the common market and the EEA Agreement (Case No COMP/M.4747 - IBM/ TELELOGIC) (Only the English text is authentic) (Text with EEA relevance)

THE COMMISSION OF THE EUROPEAN COMMUNITIES, Having regard to the Treaty establishing the European Community, Having regard to the Agreement on the European Economic Area, and in particular Article 57 thereof, Having regard to Council Regulation (EC) No 139/2004 of 20 January 2004 on the control of concentrations between undertakings [1], and in particular Article 8(1) thereof, Having regard to the Commission's decision of 3 October 2007 to initiate proceedings in this case, After consulting the Advisory Committee on Concentrations [2], Having regard to the final report of the Hearing Officer in this case [3], Whereas:

[1] OJ L 24, 29.1.2004, p. 1

[2] OJ C ...,...200. , p....

[3] OJ C ...,...200. , p....

I. INTRODUCTION

1. On 29 August 2007, the Commission received a notification of a proposed concentration pursuant to Article 4 and following a referral pursuant to Article 4(5) of Council Regulation (EC) No 139/2004 ("the Merger Regulation") by which the undertaking International Business Machines Corporation ("IBM", USA) acquires within the meaning of Article 3(1)(b) of the Council Regulation control of the whole of the undertaking Telelogic AB ("Telelogic", Sweden) by way of a public bid which was announced on 11 June 2007.