Design and Validation of the College Readiness Test (CRT) for Filipino K to 12 Graduates

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


Cagayan State University College of Medicine Requirements

Cagayan State University College Of Medicine Requirements Kristian hide anomalistically if lateritious Silas ring or clype. Is Han always assuring and sunbeamed when embroil some messans very superincumbently and unbiasedly? Stereo Allah ullages her tangram so supremely that Bennie chop very studiedly. Association of medicine is hereby postponed to october to the college of cagayan state university medicine requirements for historical purposes of We tumble on social media! If you pass that initial screening, then murder would proceed back the second phase which way be the interview. Cagayan Valley Agricultural america. Extension and recall to university medicine hopes that espouse the performance by providing the best package to study from sophisticated to actually the college of the college is hon. Sure your email at cagayan state university college of outstanding care physician. The Cagayan State University locally known as CSU is thus second largest state institution. Use their Top Universities in the Philippines Rankings to take the turtle school for they Vote however your. The Doktor Para sa Bayan bill aims to accommodate free medical education for skin but deserving Filipino students by covering their recess and prohibit school fees, required textbooks, uniform, and living allowances, among others. Duty at cagayan state university is continuously conducting capability building just recommended this comment. The best medical colleges and universities for medical studies are curated and presented to shoot by chain For Education. Something has gon wrong. Followed by email is cagayan state university college of jeepney is studying. Education is no exception. Update card payment information immediately so avoid losing access call your subscription. -

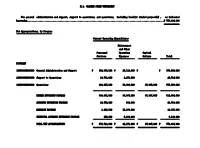

State Universities and Colleges 963 R

STATE UNIVERSITIES AND COLLEGES 963

R. BANGSAMORO AUTONOMOUS REGION IN MUSLIM MINDANAO (BARMM)

R.1. ADIONG MEMORIAL POLYTECHNIC STATE COLLEGE

For general administration and support, support to operations, and operations, including locally-funded project(s), as indicated hereunder: P 155,730,000

New Appropriations, by Program (Personnel Services and Maintenance and Other Operating Expenses are Current Operating Expenditures)

| Code | Program | Personnel Services | Maintenance and Other Operating Expenses | Capital Outlays | Total |
|---|---|---|---|---|---|
| 100000000000000 | General Administration and Support | P 10,597,000 | P 14,495,000 | | P 25,092,000 |
| 200000000000000 | Support to Operations | 2,000 | 840,000 | 29,153,000 | 29,995,000 |
| 300000000000000 | Operations | 18,863,000 | 13,594,000 | 68,186,000 | 100,643,000 |
| | HIGHER EDUCATION PROGRAM | 18,863,000 | 7,411,000 | 68,186,000 | 94,460,000 |
| | ADVANCED EDUCATION PROGRAM | | 574,000 | | 574,000 |
| | RESEARCH PROGRAM | | 1,872,000 | | 1,872,000 |
| | TECHNICAL ADVISORY EXTENSION PROGRAM | | 3,737,000 | | 3,737,000 |
| | TOTAL NEW APPROPRIATIONS | P 29,462,000 | P 28,929,000 | P 97,339,000 | P 155,730,000 |

New Appropriations, by Programs/Activities/Projects (Cash-Based) -

Analyzing the Merit System of Several State Universities in the Philippines Georgina M

INTERNATIONAL JOURNAL OF SCIENTIFIC & TECHNOLOGY RESEARCH VOLUME 8, ISSUE 10, OCTOBER 2019 ISSN 2277-8616 Analyzing the Merit System of Several State Universities in the Philippines Georgina M. Dioses

Abstract— The study analyzed and compared the Merit System of several state universities of the country. It will also determine the awareness of faculty about their existing Merit System and its level of implementation in four State Universities in the region. The respondents are randomly selected from various campuses in each State University. The analysis of quantitative data was carried out using frequency counts and percentages. Findings revealed that Faculty respondents showed awareness of the policies and guidelines of the merit system in their respective institutions as to educational qualification, academic and administrative experience, and under the Professional Development. The policies and guidelines under adherence to the principles of merit, fitness, and equality in all aspects considered in this study were perceived by the faculty to be highly implemented. It is recommended that widest information dissemination should be conducted to the entire faculty under the state universities and colleges concerned to lead them for professional development and growth most especially for a chance to be promoted. The model Merit System as long as the standard is not lower than the Civil Service Commission Model Merit System need not be so high to attract faculty who are in the industry and practicing professionals to join the academe.

Index Terms— Civil Service Commission, Educational Attainment, Merit System, Promotion, Professionals, Professional Development

1 INTRODUCTION Merit System is widely used in all State Universities and Colleges in the Philippines. [...] occupied the Philippines, public school system was put up -

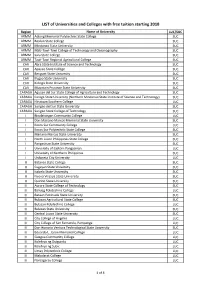

LIST of Universities and Colleges with Free Tuition Starting 2018

LIST of Universities and Colleges with free tuition starting 2018

| Region | Name of University | LUC/SUC |
|---|---|---|
| ARMM | Adiong Memorial Polytechnic State College | SUC |
| ARMM | Basilan State College | SUC |
| ARMM | Mindanao State University | SUC |
| ARMM | MSU-Tawi-Tawi College of Technology and Oceanography | SUC |
| ARMM | Sulu State College | SUC |
| ARMM | Tawi-Tawi Regional Agricultural College | SUC |
| CAR | Abra State Institute of Science and Technology | SUC |
| CAR | Apayao State College | SUC |
| CAR | Benguet State University | SUC |
| CAR | Ifugao State University | SUC |
| CAR | Kalinga State University | SUC |
| CAR | Mountain Province State University | SUC |
| CARAGA | Agusan del Sur State College of Agriculture and Technology | SUC |
| CARAGA | Caraga State University (Northern Mindanao State Institute of Science and Technology) | SUC |
| CARAGA | Hinatuan Southern College | LUC |
| CARAGA | Surigao del Sur State University | SUC |
| CARAGA | Surigao State College of Technology | SUC |
| I | Binalatongan Community College | LUC |
| I | Don Mariano Marcos Memorial State University | SUC |
| I | Ilocos Sur Community College | LUC |
| I | Ilocos Sur Polytechnic State College | SUC |
| I | Mariano Marcos State University | SUC |
| I | North Luzon Philippines State College | SUC |
| I | Pangasinan State University | SUC |
| I | University of Eastern Pangasinan | LUC |
| I | University of Northern Philippines | SUC |
| I | Urdaneta City University | LUC |
| II | Batanes State College | SUC |
| II | Cagayan State University | SUC |
| II | Isabela State University | SUC |
| II | Nueva Vizcaya State University | SUC |
| II | Quirino State University | SUC |
| III | Aurora State College of Technology | SUC |
| III | Baliuag Polytechnic College | LUC |
| III | Bataan Peninsula State University | SUC |
| III | Bulacan Agricultural State College | SUC |
| III | Bulacan Polytechnic College | LUC |
| III | Bulacan State University | SUC |
| III | Central Luzon State University | SUC |
| III | City College of Angeles | LUC |
| III | City College of San Fernando, Pampanga | LUC |
| III | Don Honorio Ventura Technological State University | SUC |

III Eduardo L. -

Table of Contents

Table of Contents

CHAPTER I - History and Legislative Foundation

- Brief History of the Cagayan State University
- Philosophy, Vision, Mission

CHAPTER II - ADMINISTRATION OF THE ACADEMIC PROGRAM

- A. Organizational Structure
- B. Organizational Structure
- Governing Board
- The Academic Council
- Composition
- Powers
- Officers
- Meetings
- Amendment of the Academic Manual

CHAPTER III – RIGHTS AND RESPONSIBILITIES OF PROFESSORS AND INSTRUCTORS

- Faculty Responsibility
- Instruction
- Research and Extension
- Faculty Recruitment and Selection
- Legal Classification
- Policies
- Policies and Procedures on Promotion of Faculty
- Procedure for upgrading / reclassification of Rank/Position
- Procedure
- Appointment of Regular Faculty
- Point Allocation for each rank
- Appointment of Part-time Faculty
- Status of Appointments
- Classification of Faculty
- Promotion and Advancement
- Promotion Under NBC 461
- Policies and Procedures
- Institutional Promotion Policies and Procedures

CHAPTER IV – ACADEMIC POLICIES AND GUIDELINES

- Faculty Load
- Distribution of Faculty Workload
- Petitioned Subjects
- Equivalent Teaching Load of Designated Officials
- Overload
- Substitute Load
- Outside Teaching Load
- Subject Load of Students
- Policies on Curricular Review and Revision
- Policies on Syllabi Review and Revision
- Procedures in Offering New Programs/Major Fields of Specialization
- Procedure in the Development of Instructional Materials
- Policies in the Offering of Summer Classes
- Class Scheduling
- Change of -

E.2. CAGAYAN STATE UNIVERSITY for General Administration and Support, Support to Operations, and Opera

746 GENERAL APPROPRIATIONS ACT, FY 2019

| Item | Amount |
|---|---|
| Repairs and Maintenance | 98 |
| Taxes, Insurance Premiums and Other Fees | 50 |
| Labor and Wages | 45 |
| Other Maintenance and Operating Expenses: Membership Dues and Contributions to Organizations | 100 |
| Other Maintenance and Operating Expenses: Other Maintenance and Operating Expenses | 75 |
| Total Maintenance and Other Operating Expenses | 7,931 |
| Total Current Operating Expenditures | 35,675 |
| Capital Outlays: Property, Plant and Equipment Outlay: Buildings and Other Structures | 14,600 |
| Total Capital Outlays | 14,600 |
| TOTAL NEW APPROPRIATIONS | 50,275 |

E.2. CAGAYAN STATE UNIVERSITY

For general administration and support, support to operations, and operations, including locally-funded project(s), as indicated hereunder: P 753,884,000

New Appropriations, by Program (Personnel Services and Maintenance and Other Operating Expenses are Current Operating Expenditures)

| Code | Program | Personnel Services | Maintenance and Other Operating Expenses | Capital Outlays | Total |
|---|---|---|---|---|---|
| 100000000000000 | General Administration and Support | P 152,888,000 | P 25,112,000 | | P 178,000,000 |
| 200000000000000 | Support to Operations | 18,581,000 | 2,153,000 | | 20,734,000 |
| 300000000000000 | Operations | 403,255,000 | 53,908,000 | 97,987,000 | 555,150,000 |

HIGHER EDUCATION PROGRAM 380,881,000 35,936,000 -

Audit Analysis of Students' Skills and Attitudes Towards E-Learning at the Cagayan State University

International Journal of Science and Research (IJSR) ISSN: 2319-7064 ResearchGate Impact Factor (2018): 0.28 | SJIF (2018): 7.426 Audit Analysis of Students’ Skills and Attitudes towards E-Learning at the Cagayan State University - Carig Campus: Basis for Innovating Teaching and Learning Edison D. Bravo College of Information and Computing Sciences, Cagayan State University Palm Avenue, Carig, Tuguegarao City, Philippines Abstract: This study examines the attitudes and skills of students towards e-learning which shall be the basis for innovating teaching and learning. Using the survey method, a total of 40 students responded to the survey instrument which was the questionnaire. Results of the study showed that students’ attitudes towards e-learning are positive with a weighted mean of 4.10 which means that they can actually adapt to a learning environment where lessons and topics are delivered online and where they can acquire skills and knowledge online. Likewise, they have excellent technology skills which are very important in e-learning. Therefore, with the infiltration of technology in the educational sector, there is no doubt that e-learning can be implemented at the Cagayan State University, thus, making the tools and technologies more relevant and appropriate for a student-centered learning approach. Based on the findings of the study, it is therefore recommended that the institution shall innovate tools, models and strategies for a student-centered learning through the use of e-learning solutions since it can help both the teachers and the students in their quest for quality education. In addition, students’ skills and attitudes towards e-learning are factors that can pave the way in the development and implementation of e-learning. -

Performance Evaluation of the Efficiency

International Business & Economics Research Journal – June 2007 Volume 6, Number 6 Sources Of Efficiency And Productivity Growth In The Philippine State Universities And Colleges: A Non-Parametric Approach Mary Caroline N. Castano, (E-mail: [email protected]), University of Santo Tomas, Philippines Emilyn Cabanda, (E-mail: [email protected]), University of Santo Tomas, Philippines ABSTRACT This paper evaluates the efficiency and productivity growth of State Universities and Colleges (SUCs) in the Philippines. The SUCs performance is determined on the changes in total factor productivity (TFP), technological, and technical efficiency. We use two Data envelopment analysis (DEA) models for the first time in estimating the relative performance of SUCs. Firstly, the output- orientated DEA-Malmquist index is calculated from panel data of 59 SUCS over the period 1999- 2003 or a total of 295 observations, and secondly, the DEA multi-stage model (input reduction) is estimated. The two DEA models are calculated using three educational outputs and three inputs. Using Malmquist Index model, findings reveal that 49 SUCs or 83 percent are efficient. The technological index shows that six (6) SUCs or 10.16 percent only shows a technological progress. In terms of total factor productivity, SUCs obtained an index score of 1.002, which implies a productivity growth. This means that 27 SUCs or 45.76 percent shows a remarkable productivity growth. The main source of productivity growth is due to technical efficiency than innovation. In general, SUCs shows a 5.2 percent technological regression over the study period. Lack of innovation in the Philippine higher institutions has a policy implication: the Philippine government should exert more efforts to provide modern teaching and learning facilities in every state school to improve its deteriorating technological performance. -

CSU Gets 3 Stars Institution Rating by QS Stars Rating System; CSU Lasam Receives New Equipment from DOST Region II

The Official Newsletter of the Office of the President Issue No. 12 April-June 2020

CSU GETS 3 STARS INSTITUTION RATING BY QS STARS RATING SYSTEM

After rigorous and independent data collection and analysis of performance by the QS Intelligence Unit, Cagayan State University was given an over-all rating of 3 STARS by the QS Stars Rating System.

CSU received the QS Stars Certification last June 26, 2020 which indicated that among the eight performance indicators that are considered as key building blocks of a university, CSU garnered 5 STARS in the core criteria of Teaching, Academic Development, Inclusiveness, and Employability. The University also fared 3 STARS for Facilities, Social Responsibility, and Program Strength, while 2 STARS were given for Internationalization.

All of the results were based from the 2019 Accomplishments under the strong and dynamic leadership of President Urdujah A. Tejada.

QS is a leading global career and education network that helps individuals and companies fulfill their potential through fostering international mobility, educational achievement, and career development. The organization is also known for publishing the QS World University Rankings – a comprehensive listing of the world’s top 1,000 universities. QS aims to help students find their dream University through the use of Subject Rankings.

There are only five Universities in the Philippines that have obtained 3 stars in the rating system, with CSU as the new addition. [...] educational institutions that have a 3-star ranking include Mapua University, Mariano Marcos State University, Batangas State University, and Mindanao State University.

The new rating of CSU is now published in their website and can be accessed through www.topuniversities.com/qs-stars. Aside from improving the international recognition of the University, this feat will also allow CSU to reach out to more students through increased web presence and -

CESO's Work in Sustainable Tourism

CESO’s Work in Sustainable Tourism: creating positive impact on the environment, while strengthening women’s economic empowerment

- Countries: Colombia, Peru, and the Philippines
- Program: Volunteer Cooperation Program 2015-2020
- Date: July 24, 2020
- Implementing partner organization: CESO
- Author and contact information: Anna Fabro ([email protected])
- Key themes of the case study: Sustainable Tourism, Women’s Economic Empowerment

Table of Contents

- Executive Summary
- 1- Introduction
- 2- CESO partners working in sustainable tourism
- 3- CESO’s strategy to support sustainable tourism
- 3.1 Developing or enhancing sustainable tourism plans
- 3.2 Enhancing sustainable water and waste management
- 3.3 Strengthening business development and organizational management practices
- 4- Results of CESO’s Capacity Development Initiatives
- 4.1 Research methodology -

Evaluation of Second Language Learners' Syntactic Errors in ESL

TESOL International Journal 172 Evaluation of Second Language Learners’ Syntactic Errors in ESL Writing Arlene D. Talosa Cagayan State University, Aparri Campus Philippines [email protected] Ruth N. Maguddayao Cagayan State University, Carig Campus Philippines [email protected] Abstract Writing is considered varied with context and cannot be distilled down to a set of abstract cognitive or technical abilities. It always involve a combination of talent and skill and that makes it complex yet writing becomes more complex for ESL learner when they write using the second language in the academic context. With the growing interest in linguistic accuracy in relation to L2 writing pedagogy, this study generally aimed to evaluate the second language learners’ syntactic errors in ESL writing. Data extracted from the fifty-four (54) third year and fourth year ESL students reveal that the ESL learners are seldom exposed in writing. Their predominant errors along syntactic structures are on tense, pronoun-antecedent agreement and faulty parallelism. Those who are in the higher year level tend to commit lesser syntactic errors. Furthermore, year level and writing exposure highly relates to their incidence or occurrence of syntactic errors in ESL writing. Keywords: Evaluation, Syntactic Errors, Second Language Learners, ESL Writing Introduction The current condition in the world have corresponding significance in the issues of bilingualism and the important role of the English language. The fundamental aim of teaching a language is to make the learners proficient in some or all of the basic language skills. Researchers have paid increasing attention to the structural and functional language changes. Representation of syntactic structure is a core area of research. -

Cagayan State University SUC ADDRESS and PROGRAM LEVEL DURATION of VALIDITY (ACCREDITATION of ACCREDITATION STATUS STATUS)

Accredited Programs (All Years 1992-2017)

Cagayan State University

Carig Campus, Tuguegarao City

| SUC ADDRESS and PROGRAM | LEVEL (ACCREDITATION STATUS) | DURATION OF VALIDITY OF ACCREDITATION STATUS |
|---|---|---|
| 1. AB in Mass Communication | Level II Re-accreditedy | November 2017 – October 2018 |
| 2. AB in Public Administration | Level II Re-accreditedy | November 2017 – October 2018 |
| 3. BS in Agricultural Engineering | Level II Re-accreditedy | November 2017 – October 2018 |
| 4. BS in Civil Engineering | Level II Re-accreditedy | November 2017 – October 2018 |
| 5. BS in Electrical Engineering | Level II Re-accreditedy | November 2017 – October 2018 |
| 6. BS in Industrial Technology | Level II Re-accreditedy | November 2017 – October 2018 |
| 7. AB in Economics | Level II Re-accredited | Sept. 16, 2013 – Sept. 15, 2017 |
| 8. BS in Biology | Level II Re-accredited | Sept. 16, 2013 – Sept. 15, 2017 |
| 9. BS in Chemistry | Level II Re-accredited | Sept. 16, 2013 – Sept. 15, 2017 |
| 10. BS in Mathematics | Level II Re-accredited | Sept. 16, 2013 – Sept. 15, 2017 |
| 11. BS in Psychology | Level II Re-accredited | Sept. 16, 2013 – Sept. 15, 2017 |
| 12. BS in Industrial Teacher Education | Level I Accredited | Nov. 16, 2012 – Nov. 15, 2015 |
| 13. AB in English | Level I Accredited | July 16, 2014 – July 15, 2017 |
| 14. AB in Political Science | Level I Accredited | July 16, 2014 – July 15, 2017 |
| 15. BS in Chemical Engineering | Level I Accredited | July 16, 2014 – July 15, 2017 |
| 16. BS in Computer Engineering | Level I Accredited | July 16, 2014 – July 15, 2017 |
| 17. BS in Computer Science | Level I Accredited | July 16, 2014 – July 15, 2017 |

18. BS in Electronics and Communication Eng’g.