Underwater Photogrammetry and Object Modeling: a Case Study of Xlendi Wreck in Malta

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

Introduction – Grand Harbour Marina

Grand Harbour Marina offers a stunning base in historic Vittoriosa, Malta, at the very heart of the Mediterranean. The marina lies on the east coast of Malta within one of the largest natural harbours in the world. It is favourably sheltered with deep water and immediate access to the waterfront, restaurants, bars and casino.

The site of the marina has an illustrious past. It was originally used by the Knights of St John, who arrived in Malta in 1530 after being exiled by the Ottomans from their home in Rhodes. The Galley’s Creek, as it was then known, was used by the Knights as a safe haven for their fleet of galleons. In the 1800s this same harbour was re-named Dockyard Creek by the British Colonial Government and was subsequently used as the home port of the British Mediterranean Fleet.

Today, the harbour is just as sought-after by some of the finest yachts in the world. Superbly serviced, well sheltered and with spectacular views of the historic three cities and the capital, Grand Harbour is a perfect location in the middle of the Mediterranean. With berths for yachts up to 100m (325ft) in length, the marina offers all the world-class facilities you would expect from a company with the maritime heritage of Camper & Nicholsons.

The waters around the island are perfect for a wide range of activities, including yacht cruising and racing, water-skiing, scuba diving and sports-fishing. Ashore, amid an environment of outstanding natural beauty, Malta offers a cosmopolitan selection of first-class hotels, restaurants, bars and spas, as well as sports pursuits such as

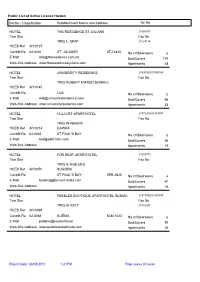

Public List of Active Licence Holders Tel No Sector / Classification

Public List of Active Licence Holders. Report Date: 30/08/2019 1-21PM.

THE RESIDENCE ST JULIANS. Sector / Classification: Hotel, Two Star. Address: TRIQ L. APAP, ST. JULIAN'S STJ 3325. Tel No: 21360031. Fax No: 21374114. HCEB Ref: AH/0137. Contrib Ref: 02-0044. No Of Bedrooms: 0. Bed/Covers: 124. Apartments: 48. E Mail: [email protected]. Web-Site Address: www.theresidencestjulians.com.

UNIVERSITY RESIDENCE. Sector / Classification: Hotel, Two Star. Address: TRIQ ROBERT MIFSUD BONNICI, LIJA. Tel No: 21430360/21436168. HCEB Ref: AH/0145. No Of Bedrooms: 0. Bed/Covers: 66. Apartments: 33. E Mail: [email protected]. Web-Site Address: www.universityresidence.com.

HULI CRT APARTHOTEL. Sector / Classification: Hotel, Two Star. Address: TRIQ IN-NAKKRI, QAWRA, ST.PAUL'S BAY. Tel No: 21572200/21583741. HCEB Ref: AH/0214. Contrib Ref: 02-0069. No Of Bedrooms: 0. Bed/Covers: 56. Apartments: 19. E Mail: [email protected].

FOR REST APARTHOTEL. Sector / Classification: Hotel, Two Star. Address: TRIQ IL-HGEJJEG, BUGIBBA, ST.PAUL'S BAY SPB 2825. Tel No: 21575773. HCEB Ref: AH/0370. No Of Bedrooms: 4. Bed/Covers: 40. Apartments: 16. E Mail: [email protected].

PEBBLES BOUTIQUE APARTHOTEL SLIEMA. Sector / Classification: Hotel, Two Star. Address: TRIQ IX-XATT, SLIEMA SLM 1022. Tel No: 21311889/21335975. Fax No: 21316907. HCEB Ref: AH/0395. Contrib Ref: 02-0068. No Of Bedrooms: 0. Bed/Covers: 92. Apartments: 26. E Mail: [email protected]. Web-Site Address: www.pebbleshotelmalta.com.

ALBORADA APARTHOTEL (BED & BREAKFAST). Sector / Classification: Hotel, Two Star. Address: 28 TRIQ IL-KBIRA. Tel No: 21334619/21334563.

Module 1 Gozo Today

Unit 1 - Gozo Today

Josianne Vella

Preamble: This first unit gives a brief overview of the Island’s physical and human geography, including a short history of economic activity in Gozo. The various means of access to and across the island, as well as some of the major places of interest, are interspersed with information on the Island’s customs and unique language.

‘For over 5,000 years people have lived here, and have changed and shaped the land, the wild plants and animals, the crops and the constructions and buildings on it. All that speaks of the past and the traditions of the Islands, of the natural world too, is heritage.’ Haslam, S. M. & Borg, J., 2002. ‘Let’s Go and Look After our Nature, our Heritage!’. Ministry of Agriculture & Fisheries - Socjeta Agraria, Malta.

The Island of Gozo

Location: Gozo (Għawdex) is the second largest island of the Maltese Archipelago. The archipelago consists of the Islands of Malta, Gozo and Comino as well as a few other uninhabited islets. It is situated roughly in the middle of the Mediterranean Sea, about 93 km south of Sicily, 350 km due north of Tripoli and about 290 km from the nearest point on the North African mainland.

Size: The total surface area of the Islands amounts to 315.6 square kilometres; they are among the smallest inhabited islands in the Mediterranean. With a coastline of 47 km, Gozo occupies an area of 66 square kilometres and is 14 km at its longest and 7 km at its widest.

Climate: The prevailing climate in the Maltese Islands is typically Mediterranean, with a mild, wet winter and a long, dry summer.

Malta & Gozo Directions

Malta & Gozo DIRECTIONS. A Rough Guides series: up-to-date directions, inspired ideas, user-friendly maps. Written and researched by Victor Paul Borg. NEW YORK • LONDON • DELHI. www.roughguides.com

Tips for reading this e-book

Your e-book Reader has many options for viewing and navigating through an e-book. Explore the dropdown menus and toolbar at the top and the status bar at the bottom of the display window to familiarize yourself with these. The following guidelines are provided to assist users who are not familiar with PDF files. For a complete user guide, see the Help menu of your Reader.

• You can read the pages in this e-book one at a time, or as two pages facing each other, as in a regular book. To select how you’d like to view the pages, click on the View menu on the top panel and choose the Single Page, Continuous, Facing or Continuous – Facing option.

• You can scroll through the pages or use the arrows at the top or bottom of the display window to turn pages. You can also type a page number into the status bar at the bottom and be taken directly there. Or else use the arrows or the PageUp and PageDown keys on your keyboard.

• You can view thumbnail images of all the pages by clicking on the Thumbnail tab on the left. Clicking on the thumbnail of a particular page will take you there.

• You can use the Zoom In and Zoom Out tools (magnifying glass) to magnify or reduce the print size: click on the tool, then enclose what you want to magnify or reduce in a rectangle.

PDF Compressor Pro PDF Compressor Pro

Joseph Bezzina. Ten singular stories from the village of Santa Luċija • Gozo, with input by Ricky Bugeja – Kelly Cassar – Gordon Formosa – Graziella Grech – Rodienne Grech – Joe Mizzi – Mario Mizzi – Lucienne Sultana – Marilyn Sultana. Cover picture: Aimee Grech. Designs (monochrome): Luke Azzopardi. Designs (colour): Aimee Grech. Photos: Joseph Bezzina – Paul Camilleri – Joe Mizzi. Santa Luċija–Gozo, Fondazzjoni Folkloristika Ta’ Klula, 2011.

First published in 2011 by the Fondazzjoni Folkloristika Ta’ Klula, 23 Triq Ta’ Klula, Santa Luċija, Gozo. kcm 3060. Malta. and eco-gozo. © Joseph Bezzina • 2011. All rights reserved. No part of this book may be reproduced, stored in a retrieval system, or transmitted in any form or by any means, known or yet to be invented, for any purpose whatsoever, without the written permission of the author and that of the Fondazzjoni Folkloristika Ta’ Klula, which permission must be obtained beforehand.

Cataloguing in Publication Data: Bezzina, Joseph, 1950- Ten singular stories from Santa Luċija, Gozo / Joseph Bezzina with input by Ricky Bugeja – Kelly Cassar – Gordon Formosa – Graziella Grech – Rodienne Grech – Joe Mizzi – Mario Mizzi – Lucienne Sultana – Marilyn Sultana. – Santa Luċija, Gozo : Fondazzjoni Folkloristika Ta’ Klula – Eco Gozo, 2011. 40 p. : col. ill. ; 18 cm. 1. Santa Luċija (Gozo) – History 2. Santa Luċija (Gozo) – Churches 3. Churches – Gozo, Malta I. Title. DDC: 945.8573. LC: DG999.N336C358B4. Melitensia Classification: MZ8 SLC.

Computer setting in font Arno Pro. Production: Joseph Bezzina. Printed and bound in Malta: Gozo Press, Għajnsielem-Gozo. GSM 9016.

Contents: 1 The spring at Għajn Għabdun

Ancient Anchors from Malta and Gozo Elaine Azzopardi, Timmy Gambin, Renata Zerafa

Ancient anchors from Malta and Gozo. Elaine Azzopardi, Timmy Gambin, Renata Zerafa.

In 2011, the national archaeological collection managed by Heritage Malta included 24 lead anchor stocks. They are the remains of ancient wooden anchors used on boats that sheltered in the harbours and bays of the Maltese Islands. This paper includes a gazetteer documenting these stocks with the aim of highlighting their value to maritime archaeology and to create a tool that will facilitate further study.

Anchors form part of the basic equipment of seagoing vessels. Although they are used to ‘attach’ a vessel to the seabed, an anchored boat is not stationary as it moves with the wind and currents. Anchors can also be deployed whilst underway to increase stability and manoeuvrability in bad weather. Furthermore, their use as votive offerings (Frost 2001) and inscriptions of names of deities on stocks highlight their importance to ancient seafarers.

One cannot be sure how many anchors ships carried. Numbers found differ from site to site. For example, the second millennium BC Ulu Burun shipwreck was carrying 24 stone anchors but it is not […]

[…] and the placing of these in a broader context will help further our knowledge of the maritime activity within an area. When undertaking such a study it is important to keep the following in mind:

1) There is a correlation between the popularity of dive sites and the discovery of anchor stocks.

2) Although many stocks were given to heritage authorities others were kept or melted down to produce diving weights. This distorts the picture of quantification and distribution.

3) Sedimentation in many Maltese bays has buried archaeological layers.

Pottery from Roman Malta

Anastasi, Pottery from Roman Malta. Malta Archaeological Review Supplement 1.

Much of what is known about Malta’s ancient material culture has come to light as a result of antiquarian research or early archaeological work – a time when little attention was paid to stratigraphic context. This situation has in part contributed to the problem of reliably sourcing and dating Maltese Roman-period pottery, particularly locally produced forms common on nearly all ancient Maltese sites.

Pottery from Roman Malta presents a comprehensive study of Maltese pottery forms from key stratified deposits spanning the first century BC to mid-fourth century AD. Ceramic material from three Maltese sites was analysed and quantified in a bid to understand Maltese pottery production during the Roman period, and trace the type and volume of ceramic-borne goods that were circulating the central Mediterranean during the period. A short review of the islands’ recent literature on Roman pottery is discussed, followed by a detailed contextual summary of the archaeological contexts presented in this study. The work is supplemented by a detailed illustrated catalogue of all the forms identified within the assemblages, presenting the wide range of locally produced and imported pottery types typical of the Maltese Roman period.

Maxine Anastasi is a Lecturer at the Department of Classics and Archaeology, University of Malta. She was awarded a DPhil in Archaeology from the University of Oxford for her dissertation on small-island economies in the Central Mediterranean. Her research primarily focuses on Roman pottery in the central Mediterranean, with a particular emphasis on Maltese assemblages.

Church Winter Time Comino 5.45 Fontana 6.00 Fontana 7.00 Fontana

| Church | Winter time |
| --- | --- |
| Comino | 5.45 |
| Fontana | 6.00, 7.00, 8.30, 10.30, 16.30 |
| Għajnsielem - Lourdes Church | 17.00, 8.30 |
| Għajnsielem - Parish | 6.00, 8.00, 10.00, 17.00 |
| Għajnsielem - Saint Anthony of Padua (OFM) | 6.00, 7.00, 8.30, 11.00, 17.30 |
| Għarb - Parish | 5.30, 7.00, 8.30, 10.00, 17.00 |
| Għarb - Ta’ Pinu Sanctuary | 6.15, 8.30, 10.00, 11.15, 17.00 |
| Għarb - Taż-Żejt | 9.00 |
| Għasri - Parish | 6.00, 8.30, 10.00, 17.30 |
| Għasri - Tal-Wied | 17.00 |
| Kerċem - Parish | 6.00, 7.00, 8.30, 10.30, 18.00 |
| Kerċem - Saint Lucy | 7.00, 10.30 |
| Marsalforn - Saint Paul | 8.00, 10.00, 11.00, 18.00 |
| Munxar | 6.00, 7.00, 8.30, 11.00, 16.00 |
| Nadur - Franciscan Sisters | 6.15, 10.15 (English Mass) |
| Nadur - Parish | 5.15, 6.30, 8.00, 9.15, 10.30, 11.45, 16.30, 19.00 |
| Nadur - Sacred Heart | 7.00 |
| Qala - Conception Church | 6.00 |
| Qala - Parish | |

Following Paul from Shipwreck on Malta to Martyrdom in Rome MALTA • SICILY • ITALY Led by Dr

Following Paul from Shipwreck on Malta to Martyrdom in Rome. MALTA • SICILY • ITALY. Led by Dr. Carl Rasmussen. MAY 11-22, 2021.

Dr. Carl and Mary Rasmussen

Greetings! Mary and I are excited to invite you to join our handcrafted adult “study” trip entitled Following Paul from Shipwreck on Malta to Martyrdom in Rome. We begin our tour on Malta where we will explore the bays where the shipwreck of Paul may have occurred as well as the Island of Malta. Mark Gatt, who discovered an anchor that may have been jettisoned

Fri 14 May: Ferry to POZZALLO (SICILY) - SYRACUSE – Ferry to REGGIO CALABRIA

Early check out, pick up our box breakfasts, meet the English-speaking assistant at our hotel and transfer to the port of Malta. 06:30am Take a ferry VR-100 from Malta to Pozzallo (Sicily). 08:15am Drive to Syracuse (where Paul stayed for three days, Acts 28:12). Meet our guide and visit the archeological park of Syracuse. Drive to Messina (approx. 165km) and take the ferry to Reggio Calabria on the Italian mainland (= Rhegium; Acts 28:13, where Paul stopped). Meet our guide and visit the Museum of Magna Grecia. Check-in to our hotel in Reggio Calabria. Dinner at our hotel and overnight.

Sat 15 May: PAESTUM - to POMPEII

Breakfast and checkout. Drive to Paestum (435km). Visit the archeological area and the museum of Paestum. Paestum was a major ancient Greek city on the coast of the Tyrrhenian Sea in Magna Graecia (southern Italy).

4 December 2019

4 December 2019, 25,349

FULL PROCESS

Full Development Applications

This is a list of complete applications received by the Planning Authority. The applications are set out by locality. Any representations on these applications should be sent in writing and received at the Planning Authority offices or through e-mail address ([email protected]) within the period specified below, quoting the reference number. Representations may also be submitted anonymously.

All submissions to the Planning Authority, submitted within the specified period, will be taken into consideration and will be made public.

The following notices are being published in accordance with Regulations 6(1), 11(1), 11(2)(a), and 11(3) of the Development Planning (Procedure for Applications and their Determination) Regulations, 2016 (L.N.162 of 2016).

Any representations on the following applications should be made by 13 January 2020.

Annual Report 2017 Contents

ANNUAL REPORT 2017

CONTENTS

3 CEO Report
4 Water Resources
9 Water Quality
14 Compliance
20 Network Infrastructure
32 Corporate Services Directorate
38 Strategic Information Directorate
45 Human Resources
48 Unaudited Financial Statement 2017

CEO Report

2017 was a busy year for the Water Services Corporation, during which it worked hard to become more efficient without compromising the high quality service we offer our customers.

During a year in which the Corporation celebrated its 25th anniversary, it proudly inaugurated the North Wastewater Treatment Polishing Plant in Mellieħa. This is allowing farmers in the Northern region to benefit from highly polished water, also known as ‘New Water’.

WSC also had the opportunity to establish a number of key performance indicator dashboards, produced inhouse, to ensure guaranteed improvement. These dashboards allow the Corporation to be more efficient, both in terms of performance as well as customer service.

[…] quality across Malta. Secondly, RO plants will be upgraded to further improve energy efficiency and production capacity. Furthermore, a new RO plant will be commissioned in Gozo. This RO will ensure self-sufficiency in water production for the whole island of Gozo. The potable water supply network to remote areas near Siġġiewi, Qrendi and Haż-Żebbuġ will be extended. Moreover, ground water galleries will also be upgraded to prevent saline intrusion. The project also includes the extension of the sewer network to remote areas that are currently not connected to the network. Areas with performance issues will also be addressed.

Having only been recently appointed to lead the Water Services Corporation, I fully recognize that the privileges of running Malta’s water company come

Of the Maltese Islands (Hymenoptera, Formicidae)

STEPHEN P. SCHEMBRI * and CEDRIC A. COLLINGWOOD **

A REVISION OF THE MYRMECOFAUNA OF THE MALTESE ISLANDS (HYMENOPTERA, FORMICIDAE)

INTRODUCTION

In 1924, Emery published a short paper in which he listed seven species of ants identified by him from a small collection made by Silvestri on Malta (EMERY, 1924). No further work was carried out until 1968 when Baroni Urbani published a comprehensive work on the ant fauna of the Maltese group in which he added another 23 species, bringing the total number to 30 (BARONI URBANI, 1968a). In a further paper, BARONI URBANI (1968b) discussed the biogeography of the Maltese myrmecofauna.

Collections made over the period 1975-1979 by one of us (SPS) have included 29 out of the 30 species previously recorded, and have added a further 14 to the Maltese list, bringing the total to 44 species. The present work discusses these new findings and extends the distribution records of BARONI URBANI (1968a). Many of the Maltese populations show interesting and sometimes puzzling morphological variations when compared to populations of the same species from the European mainland, and these are also discussed.

SPECIES LIST

The name of the species is given first followed by previous records from the Maltese Islands. Full data are included for the less common species. Notes on taxonomy and ecology are included where relevant. Species marked with an asterisk have not previously been recorded from the area.

Author addresses: * 72 Brared Street, Birkirkara, Malta; ** Ministry of Agriculture, Lawnswood, Leeds, England.

[Figure: stations searched for ants.]