An Interoperable & Optimal Data Grid Solution For

Total pages: 16 | File type: PDF | Size: 1020 KB


Recommended publications


Vanamala Venkataswamy

Vanamala Venkataswamy
Email: [email protected] | Website: http://www.cs.virginia.edu/~vv3xu
Research Interests: Job Scheduling, Reinforcement Learning, Cloud Computing, Distributed Storage. Applied Machine Learning: Deep Reinforcement Learning for job scheduling in data centers powered by renewable energy sources (a.k.a. green data centers).
Education
• Ph.D., Computer Science, University of Virginia (UVa), Charlottesville, VA. GPA 3.9/4.0. Advisor: Prof. Andrew Grimshaw. 2016-Present
• M.S., Computer Science, University of Southern California (USC), Los Angeles, CA. 2009-2010
• B.E., Information Technology, Visvesvaraya Technological University, India. 2000-2004
Research and Professional Experience
TOMORROW’S PROFESSOR TODAY (TPT), UVA, AUG 2020 - AUG 2021
• Currently attending workshops and seminars designed to facilitate the transition from student to academic professional. The program focuses primarily on preparedness for teaching at the college level, with emphases on professional development and adjustment to a university career.
SYSTEM SOFTWARE INTERN, LANCIUM INC., MAY 2019 - AUG 2019
• Configured Lancium’s cloud backend system to accept jobs from users and schedule them on matching resources in the data center.
• Developed scheduler software to match users' job requests to available matching GPU resources.
• Assisted with the beta testing and release of Lancium’s cloud backend software.
• Assisted with troubleshooting users' jobs and maintaining the cloud backend.
PROGRAMMER ANALYST, UVA, CHARLOTTESVILLE, VA, 2011-2016
• Developed grid command-line tools and web services for the GenesisII software.
• Developed a framework for unit testing and regression testing of the GenesisII software.
• Administered and maintained the Cross Campus Grid (XCG) and the XSEDE grid, spanning multiple institutions and supercomputing facilities nationwide.
• Interacted with grid users (from UVa and other institutions) to troubleshoot issues encountered while using grid resources.

CSI Web Server for Linux

CSI Web Server for Linux Installation Guide, Instruction Manual, Revision: 3/18. Copyright © 2006-2018 Campbell Scientific, Inc. License for Use: This software is protected by United States copyright law and international copyright treaty provisions. The installation and use of this software constitutes an agreement to abide by the provisions of this license agreement. Campbell Scientific grants you a non-exclusive license to use this software in accordance with the following: (1) The purchase of this software allows you to install and use a single instance of the software on one physical computer or one virtual machine only. (2) This software cannot be loaded on a network server for the purposes of distribution or for access to the software by multiple operators. If the software can be used from any computer other than the computer on which it is installed, you must license a copy of the software for each additional computer from which the software may be accessed. (3) If this copy of the software is an upgrade from a previous version, you must possess a valid license for the earlier version of software. You may continue to use the earlier copy of software only if the upgrade copy and earlier version are installed and used on the same computer. The earlier version of software may not be installed and used on a separate computer or transferred to another party. (4) This software package is licensed as a single product. Its component parts may not be separated for use on more than one computer. (5) You may make one (1) backup copy of this software onto media similar to the original distribution, to protect your investment in the software in case of damage or loss.

Mahasen: Distributed Storage Resource Broker

Mahasen: Distributed Storage Resource Broker. K. Perera, T. Kishanthan, H. Perera, D. Madola, Malaka Walpola, Srinath Perera. To cite this version: K. Perera, T. Kishanthan, H. Perera, D. Madola, Malaka Walpola, et al. Mahasen: Distributed Storage Resource Broker. 10th International Conference on Network and Parallel Computing (NPC), Sep 2013, Guiyang, China. pp. 380-392, 10.1007/978-3-642-40820-5_32. hal-01513774. HAL Id: hal-01513774, https://hal.inria.fr/hal-01513774. Submitted on 25 Apr 2017. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Distributed under a Creative Commons Attribution 4.0 International License. Mahasen: Distributed Storage Resource Broker. K.D.A.K.S. Perera¹, T. Kishanthan¹, H.A.S. Perera¹, D.T.H.V. Madola¹, Malaka Walpola¹, Srinath Perera². ¹Computer Science and Engineering Department, University of Moratuwa, Sri Lanka. {shelanrc, kshanth2101, ashansa.perera, hirunimadola, malaka.uom}@gmail.com ²WSO2 Lanka, No 59, Flower Road, Colombo 07, Sri Lanka [email protected] Abstract. Modern-day systems are facing an avalanche of data, and they are being forced to handle more and more data-intensive use cases. This data comes in many forms and shapes: sensors (RFID, Near Field Communication, weather sensors), transaction logs, the Web, social networks, etc.

21st Century C

21st Century C, Ben Klemens. Beijing • Cambridge • Farnham • Köln • Sebastopol • Tokyo. 21st Century C by Ben Klemens. Copyright © 2013 Ben Klemens. All rights reserved. Printed in the United States of America. Published by O’Reilly Media, Inc., 1005 Gravenstein Highway North, Sebastopol, CA 95472. O’Reilly books may be purchased for educational, business, or sales promotional use. Online editions are also available for most titles (http://my.safaribooksonline.com). For more information, contact our corporate/institutional sales department: 800-998-9938 or [email protected]. Editor: Nathan Jepson. Production Editor: Rachel Steely. Copyeditor: Linley Dolby. Proofreader: Teresa Horton. Indexer: Ellen Troutman. Cover Designer: Karen Montgomery. Interior Designer: David Futato. Illustrators: Robert Romano and Rebecca Demarest. November 2012: First Edition. Revision History for the First Edition: 2012-10-12 First release. See http://oreilly.com/catalog/errata.csp?isbn=9781449327149 for release details. Nutshell Handbook, the Nutshell Handbook logo, and the O’Reilly logo are registered trademarks of O’Reilly Media, Inc. 21st Century C, the image of a common spotted cuscus, and related trade dress are trademarks of O’Reilly Media, Inc. Many of the designations used by manufacturers and sellers to distinguish their products are claimed as trademarks. Where those designations appear in this book, and O’Reilly Media, Inc., was aware of a trademark claim, the designations have been printed in caps or initial caps. While every precaution has been taken in the preparation of this book, the publisher and author assume no responsibility for errors or omissions, or for damages resulting from the use of the information contained herein.

LNCS 8147, pp.

Mahasen: Distributed Storage Resource Broker. K.D.A.K.S. Perera¹, T. Kishanthan¹, H.A.S. Perera¹, D.T.H.V. Madola¹, Malaka Walpola¹, and Srinath Perera². ¹Computer Science and Engineering Department, University of Moratuwa, Sri Lanka {shelanrc,kshanth2101,ashansa.perera,hirunimadola,malaka.uom}@gmail.com ²WSO2 Lanka, No. 59, Flower Road, Colombo 07, Sri Lanka [email protected] Abstract. Modern-day systems are facing an avalanche of data, and they are being forced to handle more and more data-intensive use cases. This data comes in many forms and shapes: sensors (RFID, Near Field Communication, weather sensors), transaction logs, the Web, social networks, etc. As an example, weather sensors across the world generate a large amount of data throughout the year. Handling these and similar data requires scalable, efficient, reliable and very large storage with support for efficient metadata-based searching. This paper presents Mahasen, a highly scalable storage for high-volume, data-intensive applications built on top of a peer-to-peer layer. In addition to scalable storage, Mahasen also supports efficient searching, built on top of the Distributed Hash Table (DHT).
1 Introduction
Currently, the United States collects weather data from many sources, such as Doppler radars deployed across the country, aircraft, mobile towers and balloons. These sensors keep generating a sizable amount of data. Processing it efficiently as needed is pushing our understanding of large-scale data processing to its limits. Among the many challenges this data poses, a prominent one is storing and indexing it so that scientists and researchers can ask for a specific type of data collected at a given time and in a given region.
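The abstract says Mahasen builds scalable storage and metadata search on top of a DHT, but this excerpt does not describe its routing or index layout. As a rough illustration only, the Python sketch below swaps in plain consistent hashing: each file key, and each `attribute=value` index term, is owned by the first node at or after its hash on a ring, so both data and index entries spread across peers. The class `ToyDHT`, the node names, and the metadata attributes are all hypothetical; a real peer-to-peer layer would add routing, replication, and handling of nodes joining and leaving.

```python
import bisect
import hashlib
from collections import defaultdict
from typing import Dict, Iterable, Optional, Set


def ring_hash(key: str) -> int:
    """Map a string to a position on a 2**32 ring (first 4 bytes of SHA-1)."""
    digest = hashlib.sha1(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big")


class ToyDHT:
    """Hypothetical consistent-hashing store with a distributed metadata index."""

    def __init__(self, nodes: Iterable[str]) -> None:
        self.ring = sorted((ring_hash(n), n) for n in nodes)
        self.positions = [pos for pos, _ in self.ring]
        # Per-node storage: node -> {file key: data}
        self.data: Dict[str, Dict[str, bytes]] = defaultdict(dict)
        # Per-node index shards: node -> {"attr=value": set of file keys}
        self.index: Dict[str, Dict[str, Set[str]]] = defaultdict(lambda: defaultdict(set))

    def owner(self, key: str) -> str:
        """Return the first node at or after the key's hash, wrapping around the ring."""
        i = bisect.bisect_left(self.positions, ring_hash(key)) % len(self.ring)
        return self.ring[i][1]

    def put(self, key: str, value: bytes, metadata: Dict[str, str]) -> str:
        node = self.owner(key)
        self.data[node][key] = value
        # Each index term is stored on the node that owns the term itself.
        for attr, val in metadata.items():
            term = f"{attr}={val}"
            self.index[self.owner(term)][term].add(key)
        return node

    def get(self, key: str) -> Optional[bytes]:
        return self.data[self.owner(key)].get(key)

    def search(self, **criteria: str) -> Set[str]:
        """Return keys whose metadata matches every attr=value pair (AND query)."""
        if not criteria:
            return set()
        term_sets = [
            self.index[self.owner(f"{a}={v}")][f"{a}={v}"] for a, v in criteria.items()
        ]
        return set.intersection(*term_sets)


if __name__ == "__main__":
    dht = ToyDHT(["node-a", "node-b", "node-c"])
    dht.put("radar/2013-09-01.dat", b"...", {"sensor": "doppler", "region": "VA"})
    dht.put("balloon/2013-09-01.dat", b"...", {"sensor": "balloon", "region": "VA"})
    print(dht.search(region="VA", sensor="doppler"))  # {'radar/2013-09-01.dat'}
```

A search for "data from a given sensor in a given region" becomes one lookup per term followed by a set intersection, which is one simple way to layer metadata queries over a key-value DHT.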

PYTHON FULL STACK DEVELOPER TRAINING by Nirvana Enterprises

PYTHON FULL STACK DEVELOPER TRAINING By Nirvana Enterprises. Python Full Stack Developer Training | 732.889.4242 | [email protected] | www.nirvanaenterprises.com
About the Course: As part of the Python Full Stack Development program, you will learn the front-end technologies you need to know, including HTML5, CSS3, JavaScript, jQuery, Bootstrap, Python, Django Basics, Django Templates, Django Forms, Django Admin Customization, ORM, Class-Based Views, REST APIs, and User Authentication. You will also learn how to build an advanced API that handles creating and updating user profiles, changing passwords, creating objects, uploading images, filtering and searching objects, and more. You will learn how to use CSS and Bootstrap (a front-end framework that simplifies web design) to create beautifully styled sites quickly, use JavaScript to interact with sites on the front end, and use jQuery to work quickly with the DOM. The course includes 3 industry-level practice projects, interview preparation, and extreme coding practices. This prepares you for your next Fortune 500 company project as a Full Stack Python Developer. Set up a project with Docker and Docker Compose.
Key Course Highlights:
• Concept & Logic development with 160 Hours of Training by Experts
• Learn Python, Django, HTML, CSS, JavaScript, Bootstrap & MongoDB
• 3 Industry projects on Python, Django, Testing, AWS, Angular,
• Develop Cloud Native Applica-

High Performance Computing Modernization Program Mass Storage

High Performance Computing Modernization Program Mass Storage. September 21, 2012. Designing Storage Architectures for Digital Collections, Library of Congress. Gene McGill, [email protected], Arctic Region Supercomputing Center/HTL
What is the HPCMP?
• Five large DoD Supercomputing Resource Centers (DSRCs) and various smaller facilities
– Air Force Research Lab (AFRL DSRC), Dayton, OH
– Army Research Lab (ARL DSRC), Aberdeen Proving Ground, MD
– US Army Engineer Research and Development Center (ERDC DSRC), Vicksburg, MS
– Navy DoD Supercomputing Resource Center (Navy DSRC), Stennis Space Center, MS
– Maui High Performance Computing Center (MHPCC DSRC), Kihei, Maui, HI
• ARSC HEUE Test Lab (ARSC HTL), Fairbanks, AK
• Other smaller, more focused centers
What is the HPCMP? (cont.)
• ~1,500 users
• Diverse scientific & engineering applications; systems are general-purpose, with no single usage profile
• Ten large, unclassified HPC clusters
– Total TFLOPS: 1,421; 6.1 PB of local, high-speed disk
• Mass storage servers
– SAM-QFS, SL8500, SL3000, T10000[A|B|C] + remote DR
• 25 PB first copy; nearly all files get a DR copy
– Less than 2% of the users own 50% of the PBs
– Growth is accelerating
Legacy Architecture
• Batch jobs run on HPC clusters
– Jobs use fast, expensive, local disk
– Insufficient space on HPCs to store restart files between jobs
– Users store files on mass storage servers that shouldn’t live long-term
• Restart files, intermediate results, …
• … and users rarely go back and remove what they don’t need
• Users tell us:
– Tools to manage the millions of files on mass storage servers are lacking
– HPC clusters are not good for pre- and post-processing; this discourages post-processing and saving only the high-value post-processed files
– Users don’t think all files need a DR copy
• Program management wants to live within current tape slots
The HPCMP Enhanced User Environment
• We don’t want to be an Archive like this group thinks of them!
– We are implementing tools that could be used for an Archive…
– … for the purpose of reducing the rate of growth of data.

JAVA FULL STACK DEVELOPER TRAINING by Nirvana Enterprises

JAVA FULL STACK DEVELOPER TRAINING By Nirvana Enterprises. Java Full Stack Developer Training | 732.889.4242 | [email protected] | www.nirvanaenterprises.com
About the Course: This is a full stack web development course using the Angular, Spring Boot, and Spring Security frameworks. You will be using Angular (front-end framework), TypeScript basics, Angular CLI (to create Angular projects), Spring Boot (REST API framework), Spring (dependency management), Spring Security (authentication and authorization: Basic and JWT), Bootstrap (styling pages), Maven (dependency management), Node (npm), Visual Studio Code (TypeScript IDE), Eclipse (Java IDE), and the Tomcat embedded web server. The course will also give you expertise in MongoDB and Docker, so you can build, test, and deploy applications quickly using containers. The course includes 3 industry-level practice projects, interview preparation, and extreme coding practices. This prepares you for your next Fortune 500 company project as a Full Stack Java Developer.
Key Course Highlights:
• Concept & Logic development with 160 Hours of Training by Experts
• Learn Core Java, Advanced Java, Spring Boot, HTML, CSS, JavaScript, Bootstrap & MongoDB
• 3 Industry-level projects on Core Java, Testing, Automation, AWS, Angular, MongoDB & Docker
• Develop Cloud Native Application on AWS Cloud
• Architecture & SDLC: Microservices & DevOps
• Cloud Platform & Deployment: AWS Cloud, Docker & Jenkins
• Earn a Certificate in Java Full Stack Development on successful completion of the program
• Guaranteed Placement within months of successful completion of the program
Learning Outcomes:
• Develop a working application on Shopping Cart for ECommerce and in Healthcare using full stack with
• Build a cloud-native application by seeding the code to Cloud (SCM), like AWS.

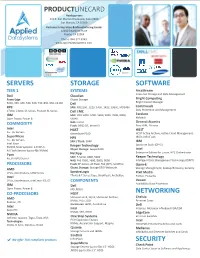

View Line Card

PRODUCT LINE CARD
Headquarters: 100 E. San Marcos Boulevard, Suite #400, San Marcos, CA 92069
Customer Integration & Manufacturing Center: 12180 Dearborn Place, Poway, CA 92064
Phone: 844.371.4949 | www.applieddatasystems.com
SERVERS
• Tier 1 systems: Dell PowerEdge R230, 330, 430, 530, 630, 730, 830, 930, C4130; HPE C7000, C3000, DL Series, ProLiant BL Series; IBM Open Power, Power 8
• Commodity: Intel 1U-2U servers; SuperMicro 1U-8U servers, Intel Xeon, NVIDIA Tesla systems (1-9 GPUs), All Flash servers (up to 48x NVMe); Tyan 4U, 8x GPU server
• Processors: AMD CPUs, accelerators, 6300 Series; Intel CPUs, accelerators,
STORAGE
• Cloudian: Object Storage
• Dell: SAN: MD1200, 1220, 1400, 1420, 3060e, MD3460
• Dell EMC: SAN: VNX 5200, 5400, 5600, 5800, 7600, 8000, VMAX; NAS: Isilon; Flash: DSSD D5, XtremIO
• HGST: ActiveScale P100, JBOD, InfiniFlash
• HPE: SAN / Flash: 3PAR
• Keeper Technology: Object Storage: keeperSAFE
• NetApp: SAN: E-Series 2800, 5000; NAS: FAS 2500, 2600, 8000, 9000; Flash: EF-Series, All Flash FAS (AFF), SolidFire; Object Storage: StorageGRID Webscale
• SpectraLogic: Tfinity & T-Series Tape, BlackPearl, ArcticBlue
SOFTWARE
• ArcaStream: Scale-Out Storage and Data Management
• Bright Computing: Bright Cluster Manager
• Commvault: Data Protection and Management
• Excelero: NVMesh
• General Atomics: Easy HSM, Nirvana
• HGST: Active Archive, Active Cloud Management
• IBM: Spectrum Scale (GPFS)
• Intel: Enterprise Edition for Lustre, HPC Orchestrator
• Keeper Technology: Intelligent Data Management Technology (IDMT)
• Open-E: Storage Management, Backup/Recovery, Security
• Pixit Media: PixStor, PixCache

AONS II: Continuing the Trend Towards Preservation Software 'Nirvana'

AONS II: continuing the trend towards preservation software ‘Nirvana’. David Pearson, Web Archiving and Digital Preservation Branch, National Library of Australia, Canberra ACT 2600, Australia. [email protected]
Abstract. File format obsolescence is a major risk factor threatening the sustainability of and access to digital information. While the preservation community has become increasingly interested in tools for migration and transformation of file formats, the National Library of Australia is developing mechanisms specifically focused on monitoring and assessing the risks of file format obsolescence. This paper reports on the AONS II project, undertaken by the National Library of Australia (NLA) in conjunction with the Australian Partnership for Sustainable Repositories (APSR). The project aimed to develop a software tool which allows users to automatically monitor the status of file formats in their repositories, make risk assessments based on a core set of obsolescence risk questions, and receive notifications when file format risks change or other related events occur. This paper calls for the preservation community to develop a co-operating file format obsolescence community which includes registries, software tool creators and end users to effectively curate digital content in order to maintain long-term access.
1. Introduction
Cycles of change in file formats impinge on even the most casual users of digital data. Technological change and format obsolescence are potentially major problems for every repository manager and data user. This is particularly true given the ever-increasing volume of digital materials, the plethora of file formats, the dynamic nature of computing environments, and the unremitting but often unpredictable drivers that cause formats to become obsolete.
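The abstract names three functions of the AONS II tool: profiling which formats a repository holds, assessing their obsolescence risk, and notifying users when that risk changes. The paper's actual risk questions and registry sources are not given in this excerpt, so the Python sketch below assumes a hypothetical registry that maps each format identifier (a MIME type here) to a single risk level, and shows only the compare-and-notify step between two registry snapshots. The function names and risk labels are illustrative, not the AONS II API.

```python
from collections import Counter
from typing import Dict, Iterable, Iterator, Optional


def format_profile(file_formats: Iterable[str]) -> Counter:
    """Count how many repository objects use each format identifier."""
    return Counter(file_formats)


def risk_notifications(
    old_registry: Dict[str, str],
    new_registry: Dict[str, str],
    profile: Counter,
) -> Iterator[str]:
    """Yield one message per held format whose (hypothetical) risk level changed."""
    for fmt, count in sorted(profile.items()):
        old: Optional[str] = old_registry.get(fmt)
        new: Optional[str] = new_registry.get(fmt)
        if old != new:
            yield f"{fmt}: risk {old} -> {new} ({count} object(s) affected)"


if __name__ == "__main__":
    profile = format_profile(["image/tiff", "image/tiff", "application/pdf", "video/x-flv"])
    before = {"image/tiff": "low", "application/pdf": "low", "video/x-flv": "medium"}
    after = {"image/tiff": "low", "application/pdf": "low", "video/x-flv": "high"}
    for message in risk_notifications(before, after, profile):
        print(message)  # video/x-flv: risk medium -> high (1 object(s) affected)
```

Restricting notifications to formats that actually appear in the repository profile keeps alerts relevant to the repository manager, which matches the monitoring focus the abstract describes.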

Grid Resource Broker

GRID RESOURCE BROKER. ABHISHEK SINGH, CSE Dept., University at Buffalo, Fall 2006
Agenda
• Grid Resource Broker: Architecture and Algorithm
• Adaptable Resource Broker (Drawback and Proposed Solution)
• SDSC Storage Resource Broker (SRB)
• Future Enhancements
A Sample Grid
[Fig. 1: Resource Broker Architecture showing the main components of the design: Interface (App/User), Resource Information Lookup Services, Resource Filter, Resource Ranker, Make Match.]
Information Service (GIIS): "Tell me about the computing resources belonging to the HPC Lab that are uniprocessor Linux workstations, with low CPU load and available memory < 250 Mbyte."
Information Service (GRIS): "Tell me about the features of xyz.abc."
Resource Brokering Algorithm
• Input: one or more job requests
• Action: select the most appropriate resources and submit each job to them
• Output: none
1. Contact the GIIS server(s) to obtain a list of available clusters.
2. Contact each resource's GRIS for static and dynamic resource information (hardware and software characteristics, current queue and load, etc.).
3. For each job:
(a) Select the cluster to which the job will be submitted.
i. Filter out clusters that do not fulfill the requirements on memory, disk space, architecture, etc., and clusters that the user is not authorized to use.
ii. Estimate the Total Time to Delivery (TTD) for each remaining resource.
iii. Select the cluster with the shortest predicted TTD.
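Step 3 of the brokering algorithm above (filter, estimate TTD, pick the minimum) can be sketched in Python as follows. This is an illustration under stated assumptions, not the broker described in the slides: steps 1 and 2 (querying GIIS and GRIS) are replaced by an in-memory list of `Cluster` records, and the TTD model (stage-in time + predicted queue wait + run time) is a hypothetical stand-in, since the slides do not say how TTD is estimated. All class, field, and function names are illustrative.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List, Optional, Set


@dataclass
class Cluster:
    name: str
    arch: str
    free_memory_mb: int
    free_disk_gb: int
    allowed_users: Set[str]
    queue_wait_s: float   # hypothetical: predicted queue wait from GRIS load data
    speed: float          # hypothetical: work units processed per second
    stage_in_mb_s: float  # hypothetical: input staging bandwidth


@dataclass
class Job:
    user: str
    arch: str
    memory_mb: int
    disk_gb: int
    work: float           # hypothetical: job length in work units
    input_mb: float = 0.0


def is_eligible(job: Job, c: Cluster) -> bool:
    """Step 3(a)i: drop clusters that miss a requirement or deny the user."""
    return (
        c.arch == job.arch
        and c.free_memory_mb >= job.memory_mb
        and c.free_disk_gb >= job.disk_gb
        and job.user in c.allowed_users
    )


def estimate_ttd(job: Job, c: Cluster) -> float:
    """Step 3(a)ii: assumed TTD model = stage-in + queue wait + run time."""
    return job.input_mb / c.stage_in_mb_s + c.queue_wait_s + job.work / c.speed


def select_cluster(job: Job, clusters: Iterable[Cluster]) -> Optional[Cluster]:
    """Step 3(a)iii: pick the eligible cluster with the shortest predicted TTD."""
    eligible = [c for c in clusters if is_eligible(job, c)]
    if not eligible:
        return None
    return min(eligible, key=lambda c: estimate_ttd(job, c))


def broker(jobs: Iterable[Job], clusters: List[Cluster],
           submit: Callable[[Job, Cluster], None]) -> List[Job]:
    """Submit each job to its selected cluster; return jobs with no eligible cluster."""
    unplaced: List[Job] = []
    for job in jobs:
        target = select_cluster(job, clusters)
        if target is None:
            unplaced.append(job)
        else:
            submit(job, target)
    return unplaced
```

For example, a job of 100 work units with no input data would see a TTD of 160 s on a cluster with a 60 s queue and speed 1.0, versus 60 s on one with a 10 s queue and speed 2.0, so the second is chosen even though its queue is not empty. Returning unplaced jobs instead of raising keeps the "Output: none" loop running over the rest of the batch.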

Collaborative Design Fundamentals for Software Engineers

A. HAMILTON-WRIGHT, K. RAYMOND AND D.A. STACEY. COLLABORATIVE DESIGN FUNDAMENTALS FOR SOFTWARE ENGINEERS. ONLINE TEXT FOR CIS*2250: SOFTWARE DESIGN II. UNIVERSITY OF GUELPH, SCHOOL OF COMPUTER SCIENCE. Copyright © 2020 A. Hamilton-Wright, K. Raymond and D.A. Stacey. Published by University of Guelph, School of Computer Science, https://qemg.uoguelph.ca/cis2250book. Provided under a Creative Commons Attribution-NonCommercial-NoDerivatives license. https://creativecommons.org/licenses/by-nc-nd/4.0/ First printing, July 2020.
Contents
1 Introduction to the Course
1.1 What is Software Design?
1.1.1 Concept Maps of the Main Concepts in the Course
1.1.2 Other Tools and Tips for Success
1.1.3 Goals for the Semester
1.2 Course Logistics
1.2.1 Lectures
1.2.2 Labs
1.2.3 Readings
1.2.4 Design Challenges
1.2.5 Team Project
1.3 Getting Prepared for the Term
1.4 Review Questions
1.5 Review Questions - Chapter 1: Introduction to the course
I Initial Organization
2 Pair Programming
2.1 What is Pair Programming?
2.2 Why Pair Programming?
2.3 The Pair Programming Protocol
2.3.1 Pair Programming: Stage 1
2.3.2 Pair Programming: Stage 2
2.4 The Partners in Pair Programming
2.4.1 The Pilot (Driver)
2.4.2 The Co-pilot
2.5 Using Pair Programming for the Team Project
2.6 Pair Programming Hints
2.6.1 Let the pilot have time to type
2.6.2 Start your pairing session by establishing a few rules and guidelines for behaviour
2.6.3 There will be disagreements