Protecting Non-Volatile Memory Against Both Hard and Soft Errors

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Class Notes Class: IX Topic: INPUT, OUTPUT, MEMORY and STORAGE DEVICES of a COMPUTER SYSTEM Subject: INFORMATION TECHNOLOGY

Class Notes Class: IX Topic: INPUT, OUTPUT, MEMORY AND STORAGE DEVICES OF A COMPUTER SYSTEM Subject: INFORMATION TECHNOLOGY
Q1. A collection of eight bits is called BYTE
Q2. Which of the following is an example of non-volatile memory? a) ROM b) RAM c) LSI d) VLSI
Q3. Which of the following is a unit of measurement used with computer systems? a) Byte b) Megabyte c) Gigabyte d) All of the above
Q4. Which of the following statements is false? a) Secondary storage is non-volatile. b) Primary storage is volatile. c) When the computer is turned off, data and instructions stored in primary storage are erased. d) None of the above.
Q5. The secondary storage devices can only store data but they cannot perform a) Arithmetic operation b) Logic operation c) Fetch operation d) Either of the above
Q6. Which of the following does not represent an I/O device? a) Speaker which beeps b) Plotter c) Joystick d) ALU
Q7. Which of the following is a correct definition of volatile memory? a) It loses its contents at high temperatures. b) It is to be kept in airtight boxes. c) It loses its contents on failure of power supply d) It does not lose its contents on failure of power supply
Q8. One thousand bytes represent a a) Megabyte b) Gigabyte c) Kilobyte d) None of these
Q9. What does a storage unit provide? a) A place to show data b) A place to store currently worked on information c) A place to store information
Q10. What are the four basic components of a computer? a) Input devices, Output devices, printing and typing b) Input devices, processing unit, storage and Output devices c) Input devices, CPU, Output devices and RAM
Q11. -

The Era of Expeditious Nanoram-Based Computers Enhancement of Operating System Performance in Nanotechnology Environment

International Journal of Applied Engineering Research ISSN 0973-4562 Volume 13, Number 1 (2018) pp. 375-384 © Research India Publications. http://www.ripublication.com The Era of Expeditious NanoRAM-Based Computers Enhancement of Operating System Performance in Nanotechnology Environment Mona Nabil ElGohary PH.D Student, Computer Science Department Faculty of Computers and Information, Helwan University, Cairo, Egypt. 1ORCID: 0000-0002-1996-4673 Dr. Wessam ElBehaidy Assistant Professor, Computer Science Department, Faculty of Computers and Information, Helwan University, Cairo, Egypt. Ass. Prof. Hala Abdel-Galil Associative Professor, Computer Science Department Faculty of Computers and Information, Helwan University, Cairo, Egypt. Prof. Dr. Mostafa-Sami M. Mostafa Professor of Computer Science Faculty of Computers and Information, Helwan University, Cairo, Egypt. Abstract The availability of a new generation of memory that is 1000 times faster than traditional DDRAM which can deliver terabytes of storage capacity, and consumes very little power, has the potential to change the future of the computer’s operating system. This paper studies the different changes that will arise on the operating system functions; memory management and job scheduling (especially context switch) when integrating NanoRAM into the computer system. It is also looking forward to evaluating the possible enhancements of computer’s performance with NanoRAM. They announced that by 2018 will produce the first NanoRAM. This new NanoRam has many excellent properties that would make an excellent replacement for the current DDRAM: being non-volatile, its large capacity, high speed read / write cycles. All the properties are introduced in the next section. By replacing this NanoRAM instead of DDRAM in the CPU, this will affect the functionality of the operating system; such as main memory management, virtual memory, job scheduling, secondary storage management; and thus the efficiency of the -

Let's Talk About Storage & Recovery Methods for Non-Volatile Memory

Let’s Talk About Storage & Recovery Methods for Non-Volatile Memory Database Systems Joy Arulraj Andrew Pavlo Subramanya R. Dulloor [email protected] [email protected] [email protected] Carnegie Mellon University Carnegie Mellon University Intel Labs ABSTRACT The advent of non-volatile memory (NVM) will fundamentally change the dichotomy between memory and durable storage in database management systems (DBMSs). These new NVM devices are almost as fast as DRAM, but all writes to it are potentially persistent even after power loss. Existing DBMSs are unable to take full advantage of this technology because their internal architectures are predicated on the assumption that memory is volatile. With NVM, many of the components of legacy DBMSs are unnecessary and will degrade the performance of data intensive applications. To better understand these issues, we implemented three engines in a modular DBMS testbed that are based on different storage management architectures: (1) in-place updates, (2) copy-on-write updates, and (3) log-structured updates. of power, the DBMS must write that data to a non-volatile device, such as a SSD or HDD. Such devices only support slow, bulk data transfers as blocks. Contrast this with volatile DRAM, where a DBMS can quickly read and write a single byte from these devices, but all data is lost once power is lost. In addition, there are inherent physical limitations that prevent DRAM from scaling to capacities beyond today’s levels [46]. Using a large amount of DRAM also consumes a lot of energy since it requires periodic refreshing to preserve data even if it is not actively used. Studies have shown that DRAM consumes about 40% of the overall power consumed by a server [42]. Although flash-based SSDs have better storage capacities and use less energy than DRAM, they have other issues that make them less -

Computer Conservation Society

Issue Number 88 Winter 2019/20 Computer Conservation Society Aims and Objectives The Computer Conservation Society (CCS) is a co-operative venture between BCS, The Chartered Institute for IT; the Science Museum of London; and the Science and Industry Museum (SIM) in Manchester. The CCS was constituted in September 1989 as a Specialist Group of the British Computer Society. It is thus covered by the Royal Charter and charitable status of BCS. The objects of the Computer Conservation Society (“Society”) are: To promote the conservation, restoration and reconstruction of historic computing systems and to identify existing computing systems which may need to be archived in the future; To develop awareness of the importance of historic computing systems; To develop expertise in the conservation, restoration and reconstruction of historic computing systems; To represent the interests of the Society with other bodies; To promote the study of historic computing systems, their use and the history of the computer industry; To publish information of relevance to these objectives for the information of Society members and the wider public. Membership is open to anyone interested in computer conservation and the history of computing. The CCS is funded and supported by a grant from BCS and from donations. There are a number of active projects on specific computer restorations and early computer technologies and software. Younger people are especially encouraged to take part in order to achieve skills transfer. The CCS also enjoys a close relationship with the National Museum of Computing. Resurrection The Journal of the Computer Conservation Society ISSN 0958-7403 Number 88 Winter 2019/20 Contents Society Activity 2 News Round-Up 9 The Data Curator 10 Paul Cockshott From Tea Shops to Computer Company: The Improbable 15 Story of LEO John Aeberhard Book Review: Early Computing in Britain Ferranti Ltd. -

Open Poremba-Dissertation.Pdf

The Pennsylvania State University The Graduate School ARCHITECTING BYTE-ADDRESSABLE NON-VOLATILE MEMORIES FOR MAIN MEMORY A Dissertation in Computer Science and Engineering by Matthew Poremba © 2015 Matthew Poremba Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy May 2015 The dissertation of Matthew Poremba was reviewed and approved∗ by the following: Yuan Xie Professor of Computer Science and Engineering Dissertation Co-Advisor, Co-Chair of Committee John Sampson Assistant Professor of Computer Science and Engineering Dissertation Co-Advisor, Co-Chair of Committee Mary Jane Irwin Professor of Computer Science and Engineering Robert E. Noll Professor Evan Pugh Professor Vijaykrishnan Narayanan Professor of Computer Science and Engineering Kennith Jenkins Professor of Electrical Engineering Lee Coraor Associate Professor of Computer Science and Engineering Director of Academic Affairs ∗Signatures are on file in the Graduate School. Abstract New breakthroughs in memory technology in recent years has lead to increased research efforts in so-called byte-addressable non-volatile memories (NVM). As a result, questions of how and where these types of NVMs can be used have been raised. Simultaneously, semiconductor scaling has lead to an increased number of CPU cores on a processor die as a way to utilize the area. This has increased the pressure on the memory system and causing growth in the amount of main memory that is available in a computer system. This growth has escalated the amount of power consumed by the system by the de facto DRAM type memory. Moreover, DRAM memories have run into physical limitations on scalability due to the nature of their operation. -

Flash Memory and Micro SD Card

Flash Memory and Micro SD Card Presented by: Krishna Goyal (200601195) Anirudh Tripathi (200601141) OUTLINE • Memory • Volatile and Nonvolatile memory • EPROM and EEPROM memory • Flash memory • NAND and NOR Flash memory • Flash Memory operations • Advantage and Disadvantage of Embedded Over Stand Alone Flash Memory • Micro SD card • Summary • References Memory • The terms “storage” or “memory” refer to the parts of a digital computer that retain physical state (data) for some interval of time, possibly even after electrical power to the computer is turned off. • A computer system's memory is crucial to its operation; without memory, a computer could not read programs or retain data. Memory stores data electronically in memory cells contained in chips. It is usually measured in kilobytes, megabytes, or gigabytes. • Memory is classified into volatile and non-volatile memory. Memory Classification VOLATILE NON-VOLATILE SRAM ROM PROM DRAM EPROM EEPROM NVRAM Flash Memory Floppy Disk MRAM Hard Disk Magnetic Devices Volatile Memory • The most widely used form of primary storage today is a volatile form of random access memory, meaning that when the computer is shut down, anything contained in random access memory (RAM) is lost. • DRAM used for main memory • SRAM used for cache Non-Volatile memory • EEPROM, EPROM, FeRAM, FLASH, NVSRAM and ROM are different types of non-volatile memory. • The main differences are in the memories relative cost per bit and the flexibility to accommodate code changes. • nonvolatile memory, NVM or non-volatile storage, is computer memory that can retain the stored information even when not powered. EPROM • Erasable Programmable Read Only Memory also known as UV-EPROM is a form of non-volatile memory. -

The Acquisition and Analysis of Random Access Memory

Currently “In Submission” to JDFP (some content may change before publication) THE ACQUISITION AND ANALYSIS OF RANDOM ACCESS MEMORY Timothy Vidas Naval Postgraduate School Monterey, CA ABSTRACT Mainstream operating systems (and the hardware they run on) fail to purge the contents of portions of volatile memory when that portion is no longer required for operation. Similar to how many file systems simply mark a file as deleted instead of actually purging the space that the file occupies on disk, Random Access Memory (RAM) is commonly littered with old information in unallocated space waiting to be reused. Additionally, RAM contains constructs and caching regions that include a wealth of state related information. The availability of this information along with techniques to recover it, provide new methods for investigation. This article discusses the benefits and drawbacks of traditional incident response methods compared to an augmented model that includes the capture and subsequent analysis of a suspect system’s memory, provides a foundation for analyzing captured memory, and provides suggestions for related work in an effort to encourage forward progress in this relatively new area of digital forensics. KEYWORDS: memory, random access memory, memory analysis, digital forensics, Windows forensics, incident response, best practices Tim Vidas is a Research Associate at the Naval Postgraduate School. He has been focusing research in the field of digital forensics for a few years and is now primarily working on in the area of trusted operating systems and kernels. In addition to research, he likes to teach and has a wide set of IT related interests. He maintains several affiliations like ACM, CERT, and Infragard and holds several certifications such as CISSP, Sec+ and EnCE. -

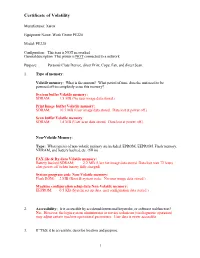

Certificate of Volatility

Certificate of Volatility Manufacturer: Xerox Equipment Name: Work Centre PE220 Model: PE220 Configuration: This item is NOT networked General description: This printer is NOT connected to a network. Purpose: Personal Class Device, direct Print, Copy, Fax, and direct Scan. 1. Type of memory: Volatile memory: What is the amount? What period of time does the unit need to be powered off to completely erase this memory? System buffer Volatile memory: SDRAM: 1.5 MB (No user image data stored.) Print Image buffer Volatile memory: SDRAM: 10.5 MB (User image data stored. Data lost at power off.) Scan buffer Volatile memory: SDRAM: 1.8 MB (User scan data stored. Data lost at power off.) Non-Volatile Memory: Type: What type(s) of non-volatile memory are included, EPROM, EEPROM, Flash memory, NVRAM, and battery backed, etc. (fill in) FAX file & Rx data Volatile memory: Battery backed SDRAM: 2.2 MB (User fax image data stored. Data lost max 72 hours after power off (when battery fully charged) System program code Non-Volatile memory: Flash ROM: 2 MB (Boot & system code. No user image data stored.) Machine configuration setup data Non-Volatile memory: EEPROM: 0.5 KB (System set up data. user configuration data stored.) 2. Accessibility: Is it accessible by accidental/intentional keystroke, or software malfunction? No. However, the login system administrator or service technician (via diagnostic operation) may adjust certain machine operational parameters. User data is never accessible. 3. If "YES, it is accessible, describe location and purpose. 1 Certificate of Volatility Purpose: typical uses for non-volatile memory location are system identification number and system configuration, boot, and initialization parameters, for example (battery-backed NVRAM on SUNs); put in for future design needs, internal depot repair, clock circuit, "nice" to have, or to flag unauthorized software, etc. -

Secpm: a Secure and Persistent Memory System for Non-Volatile Memory

SecPM: a Secure and Persistent Memory System for Non-volatile Memory Pengfei Zuo, Yu HuaB Wuhan National Laboratory for Optoelectronics School of Computer, Huazhong University of Science and Technology, Wuhan, China BCorresponding author: Yu Hua ([email protected]) Abstract In the non-volatile memory, ensuring the security and correctness of persistent data is fundamental. However, the security and persistence issues are usually studied independently in existing work. To achieve both data security and persistence, simply combining existing persistence schemes with memory encryption is inefficient due to crash inconsistency and significant performance degradation. To bridge the gap between security and persistence, this paper proposes SecPM, a Secure and Persistent Memory system, which consists of a counter cache write-through (CWT) scheme and a locality-aware counter write reduction (CWR) scheme. Specifically, SecPM leverages the CWT scheme to guarantee the crash consistency via ensuring both the data and its cessor and memory controller usually reorder memory writes. The partial update and reordering cause the crash inconsistency in NVM [15, 35]. Hence, the cache line flushes, memory barriers, and log-based mechanisms are used to ensure the crash consistency [17, 33]. • Security. The non-volatility of NVM also causes the security problem of data remanence vulnerability [36,7], since NVM still retains data after systems power down. In general, when using encryption to protect the data security, the encrypted data are stored in disks, while raw data are retained in main memory [12]. In the legacy DRAM-based memory, if a DRAM DIMM is stolen, data are quickly lost due to the volatility. Unlike it, if an NVM DIMM is stolen, an attacker can directly stream out the data from the DIMM. -

A Survey of Architectural Approaches for Managing Embedded DRAM and Non-Volatile On-Chip Caches Sparsh Mittal, Jeffrey S

A Survey Of Architectural Approaches for Managing Embedded DRAM and Non-volatile On-chip Caches Sparsh Mittal, Jeffrey S. Vetter, Dong Li To cite this version: Sparsh Mittal, Jeffrey S. Vetter, Dong Li. A Survey Of Architectural Approaches for Managing Embedded DRAM and Non-volatile On-chip Caches. IEEE Transactions on Parallel and Distributed Systems, Institute of Electrical and Electronics Engineers, 2015, pp.14. 10.1109/TPDS.2014.2324563. hal-01102387 HAL Id: hal-01102387 https://hal.archives-ouvertes.fr/hal-01102387 Submitted on 12 Jan 2015 HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. This is the author's version of an article that has been published in this journal. Changes were made to this version by the publisher prior to publication. The final version of record is available at http://dx.doi.org/10.1109/TPDS.2014.2324563 IEEE TRANSACTIONS ON PARALLEL AND DISTRIBUTING SYSTEMS 1 A Survey Of Architectural Approaches for Managing Embedded DRAM and Non-volatile On-chip Caches Sparsh Mittal, Member, IEEE, Jeffrey S. Vetter, Senior Member, IEEE, and Dong Li Abstract—Recent trends of CMOS scaling and increasing number of on-chip cores have led to a large increase in the size of on-chip caches. -

A Study About Non-Volatile Memories

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 29 July 2016 doi:10.20944/preprints201607.0093.v1 Article A Study about Non-Volatile Memories Dileep Kumar* Department of Information Media, The University of Suwon, Hwaseong-Si South Korea ; [email protected] * Correspondence: [email protected] ; Tel.: +82-31-229-8212 Abstract: This paper presents an upcoming nonvolatile memories (NVM) overview. Non-volatile memory devices are electrically programmable and erasable to store charge in a location within the device and to retain that charge when voltage supply from the device is disconnected. The non-volatile memory is typically a semiconductor memory comprising thousands of individual transistors configured on a substrate to form a matrix of rows and columns of memory cells. Non-volatile memories are used in digital computing devices for the storage of data. In this paper we have given introduction including a brief survey on upcoming NVMs such as FeRAM, MRAM, CBRAM, PRAM, SONOS, RRAM, Racetrack memory and NRAM. In future Non-volatile memory may eliminate the need for comparatively slow forms of secondary storage systems, which include hard disks. Keywords: Non-volatile Memories; NAND Flash Memories; Storage Memories PACS: J0101 1. Introduction Memory is divided into two main parts: volatile and nonvolatile. Volatile memory loses any data when the system is turned off; it requires constant power to remain viable. Most kinds of random access memory (RAM) fall into this category. -

1. Types of Computers Contents

1. Types of Computers
Contents
1 Classes of computers
1.1 Classes by size
1.1.1 Microcomputers (personal computers)
1.1.2 Minicomputers (midrange computers)
1.1.3 Mainframe computers
1.1.4 Supercomputers
1.2 Classes by function
1.2.1 Servers
1.2.2 Workstations
1.2.3 Information appliances
1.2.4 Embedded computers
1.3 See also
1.4 References
1.5 External links
2 List of computer size categories
2.1 Supercomputers
2.2 Mainframe computers
2.3 Minicomputers
2.4 Microcomputers
2.5 Mobile computers
2.6 Others
2.7 Distinctive marks
2.8 Categories
2.9 See also
2.10 References