The Properties and Promizes of UTF-8 M.J

Total Pages: 16

File Type: PDF, Size: 1020 KB


Recommended publications


ISO Basic Latin Alphabet

The ISO basic Latin alphabet is a Latin-script alphabet consisting of two sets of 26 letters, codified in various national and international standards[1] and used widely in international communication. The two sets contain the following 26 letters each:[1][2]

Uppercase Latin alphabet: A B C D E F G H I J K L M N O P Q R S T U V W X Y Z

Lowercase Latin alphabet: a b c d e f g h i j k l m n o p q r s t u v w x y z

Contents: History; Terminology; Name for Unicode block that contains all letters; Names for the two subsets; Names for the letters; Timeline for encoding standards; Timeline for widely used computer codes supporting the alphabet; Representation; Usage; Alphabets containing the same set of letters; Column numbering; See also; References

History

By the 1960s it became apparent to the computer and telecommunications industries in the First World that a non-proprietary method of encoding characters was needed. The International Organization for Standardization (ISO) encapsulated the Latin script in its 7-bit character-encoding standard, ISO/IEC 646. To achieve widespread acceptance, this encapsulation was based on popular usage. The standard was based on the already published American Standard Code for Information Interchange, better known as ASCII, which included in its character set the 26 × 2 letters of the English alphabet. Later standards issued by the ISO, for example ISO/IEC 8859 (8-bit character encoding) and ISO/IEC 10646 (Unicode Latin), have continued to define the 26 × 2 letters of the English alphabet as the basic Latin script, with extensions to handle other letters in other languages.[1]

Terminology

Name for Unicode block that contains all letters: The Unicode block that contains the alphabet is called "C0 Controls and Basic Latin".

Unicode and Code Page Support

Natural for Mainframes: Unicode and Code Page Support. Version 4.2.6 for Mainframes, October 2009.

This document applies to Natural Version 4.2.6 for Mainframes and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © Software AG 1979-2009. All rights reserved. The name Software AG, webMethods and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA, Inc. Other company and product names mentioned herein may be trademarks of their respective owners.

Table of Contents

1 Unicode and Code Page Support
2 Introduction
  About Code Pages and Unicode
  About Unicode and Code Page Support in Natural
  ICU on Mainframe Platforms
3 Unicode and Code Page Support in the Natural Programming Language
  Natural Data Format U for Unicode-Based Data
  Statements
  Logical

SAS 9.3 UTF-8 Encoding Support and Related Issue Troubleshooting

SAS 9.3 UTF-8 Encoding Support and Related Issue Troubleshooting. Jason (Jianduan) Liang, SAS certified: Platform Administrator, Advanced Programmer for SAS 9.

Agenda: Introduction; UTF-8 and other encodings; SAS options for encoding and configuration; Other considerations for UTF-8 data; Encoding issues troubleshooting techniques (tips)

Introduction

- What is UTF-8? A character encoding capable of encoding all possible characters.
- Why UTF-8? Dominant encoding of the WWW (86.5%).
- SAS system options for encoding:
  - Encoding: instructs SAS how to read, process and store data.
  - Locale: instructs SAS how to present or display currency, date and time, and set timezone values.

UTF-8 and other Encodings: ASCII (American Standard Code for Information Interchange)

- 7-bit
- 128-character set
- Examples (code point-char-hex): 32-Space-20; 63-?-3F; 64-@-40; 65-A-41

UTF-8 and other Encodings: ISO 8859-1 (Latin-1) and Windows-1252 (Latin-1) for Western European languages

- 8-bit (1 byte, 256-character set)
- Identical to ASCII for the first 128 chars
- Extended ASCII chars examples: 163-£-A3; 169-©-A9
- SAS option encoding value: wlatin1 (latin1)

UTF-8 and other Encodings: Problems

- Only covers English and Western European languages; ISO-8859-2, …15
- Multiple encodings are required to support national languages
- The same character is encoded differently, and the same code point represents different chars

Unicode

- Unicode assigns a unique code/number to every possible character of all languages
- Examples of Unicode code points: U+0020 (Space), U+0041 (A), U+00A9 (©), U+00FF (ÿ, encoded in UTF-8 as the bytes C3 BF)

UTF-8 and other Encodings: UTF-8
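The distinction the slides draw between a character's code point and its encoded bytes can be checked with a short Python sketch. The helper name `describe` and the sample list are illustrative choices, not something taken from the SAS presentation; only the standard `str.encode` and `ord` built-ins are used.

```python
# Illustrative sketch: code point vs. encoded bytes for a few characters.
# `describe` is a hypothetical helper name, not part of any SAS tooling.
samples = ["A", "\u00a3", "\u00a9", "\u00ff"]  # A, pound sign, copyright sign, y with diaeresis


def describe(ch: str) -> dict:
    """Return the code point and the Latin-1 / UTF-8 bytes of one character."""
    return {
        "code_point": f"U+{ord(ch):04X}",
        "decimal": ord(ch),
        "latin1": ch.encode("latin-1").hex().upper(),
        "utf8": ch.encode("utf-8").hex(" ").upper(),
    }


for ch in samples:
    print(ch, describe(ch))
```

For characters above 127, the Latin-1 byte equals the code point, while UTF-8 needs two bytes; this is why a UTF-8 byte pair should not be read as a code point.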

Basis Technology Unicode-Compatible Library Spec Sheet: Character Encodings and Other Names

Basis Technology Unicode-Compatible Library Spec Sheet

| Character encoding | Other names |
| --- | --- |
| Adobe-Standard-Encoding | |
| Adobe-Symbol-Encoding | csHPPSMath |
| Adobe-Zapf-Dingbats-Encoding | csZapfDingbats |
| Arabic | ISO-8859-6, csISOLatinArabic, iso-ir-127, ECMA-114, ASMO-708 |
| ASCII | US-ASCII, ANSI_X3.4-1968, iso-ir-6, ANSI_X3.4-1986, ISO646-US, us, IBM367, csASCI |
| big-endian | ISO-10646-UCS-2, BigEndian, 68k, PowerPC, Mac, Macintosh |
| Big5 | csBig5, cn-big5, x-x-big5 |
| Big5Plus | Big5+, csBig5Plus |
| BMP | ISO-10646-UCS-2, BMPstring |
| CCSID-1027 | csCCSID1027, IBM1027 |
| CCSID-1047 | csCCSID1047, IBM1047 |
| CCSID-290 | csCCSID290, CCSID290, IBM290 |
| CCSID-300 | csCCSID300, CCSID300, IBM300 |
| CCSID-930 | csCCSID930, CCSID930, IBM930 |
| CCSID-935 | csCCSID935, CCSID935, IBM935 |
| CCSID-937 | csCCSID937, CCSID937, IBM937 |
| CCSID-939 | csCCSID939, CCSID939, IBM939 |
| CCSID-942 | csCCSID942, CCSID942, IBM942 |
| ChineseAutoDetect | csChineseAutoDetect. Candidate encodings: GB2312, Big5, GB18030, UTF32:UTF8, UCS2, UTF32 |
| CNS-11643 | EUC-H, csCNS11643EUC, EUC-TW, TW-EUC, H-EUC, CNS-11643-1992, EUC-H-1992, csCNS11643-1992-EUC, EUC-TW-1992, TW-EUC-1992, H-EUC-1992 |
| CNS-11643-1986 | EUC-H-1986, csCNS11643_1986_EUC, EUC-TW-1986, TW-EUC-1986, H-EUC-1986 |
| CP10000 | csCP10000, windows-10000 |
| CP10001 | csCP10001, windows-10001 |
| CP10002 | csCP10002, windows-10002 |
| CP10003 | csCP10003, windows-10003 |
| CP10004 | csCP10004, windows-10004 |
| CP10005 | csCP10005, windows-10005 |
| CP10006 | csCP10006, windows-10006 |
| CP10007 | csCP10007, windows-10007 |
| CP10008 | csCP10008, windows-10008 |
| CP10010 | csCP10010, windows-10010 |
| CP10017 | csCP10017, windows-10017 |
| CP10029 | csCP10029, windows-10029 |
| CP10079 | csCP10079, windows-10079 |

JS Character Encodings

JS Character Encodings. Anna Henningsen · @addaleax · she/her

It’s good to be back!

??? https://travis-ci.org/node-ffi-napi/get-symbol-from-current-process-h/jobs/641550176

So … what’s a character encoding? People are good with text, computers are good with numbers.

- Text: list of characters
- “Encoding”
- List of bytes: list of integers

Example: Hello is ['H','e','l','l','o'], which encodes to the bytes 48 65 6c 6c 6f, i.e. [72, 101, 108, 108, 111].

Example: 你好! is ['你','好'], which encodes to ??? (bytes), i.e. ??? (integers).

ASCII

| Decimal | Hex | Character |
| --- | --- | --- |
| 0 | 0x00 | <NUL> |
| … | … | … |
| 65 | 0x41 | A |
| 66 | 0x42 | B |
| 67 | 0x43 | C |
| … | … | … |
| 97 | 0x61 | a |
| 98 | 0x62 | b |
| … | … | … |
| 127 | 0x7F | <DEL> |

- 7-bit
- Covers most English-language use cases
- … and that’s pretty much it

ISO-8859-*, Windows code pages

- Idea: Usually, transmission has 8 bits per byte available, so create ASCII-extending charsets for more languages

| Byte | ISO-8859-1 (Western, aka Latin-1) | ISO-8859-5 (Cyrillic) | Windows-1251 (Cyrillic) |
| --- | --- | --- | --- |
| … | … | … | … |
| 0xD0 | Ð | а | Р |
| 0xD1 | Ñ | б | С |
| 0xD2 | Ò | в | Т |
| … | … | … | … |

GBK

- Idea: Also extend ASCII, but use 2 bytes for Chinese characters

| Bytes | Character |
| --- | --- |
| … | … |
| 0x41 | A |
| 0x42 | B |
| … | … |
| 0xC4 0xE3 | 你 |
| 0xC4 0xE4 | 匿 |
| … | … |

https://xkcd.com/927/

Unicode: Multiple encodings!

“Müll” consists of the code points U+004D (M), U+00FC (ü), U+006C (l), U+006C (l):

- 4d c3 bc 6c 6c (UTF-8)
- 4d 00 fc 00 6c 00 6c 00 (UTF-16LE)
- 00 4d 00 fc 00 6c 00 6c (UTF-16BE)

Unicode

- New idea: Don’t create a gazillion charsets, and drop the 1-byte/2-byte restriction
- Shared character set for multiple encodings: U+XXXX with 4 hex digits, e.g.
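The byte sequences quoted in this excerpt can be reproduced with a few lines of Python. This is only a sketch for checking the values: the helper name `hex_bytes` is made up for illustration, and the talk itself is about JavaScript, not Python.

```python
# Sketch: one string, one set of code points, several byte encodings.
# `hex_bytes` is a hypothetical helper name used only in this example.
word = "M\u00fcll"  # "Müll" with a precomposed u-umlaut (U+00FC)


def hex_bytes(text: str, encoding: str) -> str:
    """Encode text and return the bytes as space-separated lowercase hex."""
    return text.encode(encoding).hex(" ")


code_points = [f"U+{ord(ch):04X}" for ch in word]
print(code_points)

for encoding in ("utf-8", "utf-16-le", "utf-16-be"):
    print(f"{encoding:10} {hex_bytes(word, encoding)}")

# The plain-ASCII case from the earlier slide: bytes as hex and as integers.
print(hex_bytes("Hello", "ascii"), list("Hello".encode("ascii")))
```

The code points stay the same across all three runs of the loop; only the byte layout changes, which is the point of separating the character set from its encodings.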

CONTENTS 1. Introduction 2. RPL Principles

CONTENTS

1. Introduction
2. RPL Principles
   2.1 Origins
   2.2 Mathematical Control
   2.3 Formal Definitions
   2.4 Execution
       2.4.1 EVAL
       2.4.2 Data Class Objects
       2.4.3 Identifier Class Objects
       2.4.4 Procedure Class Objects
       2.4.5 Object Skipover and SEMI
       2.4.6 RPL Pointers
   2.5 Memory Management
   2.6 User RPL and System RPL
   2.7 Programming in System RPL
   2.8 Sample RPL Program
       2.8.1 The Source File
       2.8.2 Compiling the Program
3. Object Structures
   3.1 Object Types
       3.1.1 Identifier Object
       3.1.2 Temporary Identifier Object
       3.1.3 ROM Pointer Object
       3.1.4 Binary Integer Object
       3.1.5 Real Number Object
       3.1.6 Extended Real Number Object
       3.1.7 Complex Number Object
       3.1.8 Extended Complex Number Object
       3.1.9 Array Object
       3.1.10 Linked Array Object
       3.1.11 Character String Object
       3.1.12 Hex String Object
       3.1.13 Character Object
       3.1.14 Unit Object
       3.1.15 Code Object
       3.1.16 Primitive Code Object
       3.1.17 Program Object
       3.1.18 List Object
       3.1.19 Symbolic Object
       3.1.20 Directory Object
       3.1.21 Graphics Object
   3.2 Terminology and Abbreviations
4.

Unicode

Unicode. By Richard W. Boss.

Unicode is an international character-encoding standard designed to support the electronic exchange, processing, storage, and display of the written texts of all of the world’s languages and scripts, including scientific, mathematical, and technical symbols. Unicode is an encoding standard, not a software program or a font. The standard is independent of operating systems, programming languages, database management systems, and hardware platforms. Before Unicode, there were hundreds of different encoding schemes; no single one could contain enough characters. The European Union alone required several for its languages.

The Importance of Unicode for Libraries

While academic libraries have had holdings in a number of languages and scripts for many decades, public libraries, as a result of the increasingly diverse communities that they serve, have begun to purchase books in a wide variety of languages and scripts. There is hardly a metropolitan area in North America that does not have immigrants from a score of countries. Even public libraries in small communities are serving an increasing number of people for whom English is not their primary language.

Unicode is also important for resource sharing. Libraries need to be able to borrow or lend materials in a wide variety of languages and scripts on behalf of patrons who cannot find what they need in their own libraries. The ability to send and receive bibliographic information among Unicode-conforming libraries is a major breakthrough. Even libraries that have no interest in Unicode for themselves should appreciate the fact that vendors of integrated library systems and other information technologies that support Unicode are able to market their products globally, thereby strengthening themselves financially and making it possible to maintain aggressive product development programs.

Windows NLS Considerations Version 2.1

Windows NLS Considerations, version 2.1. Radoslav Rusinov.

Contents

1. Introduction
   1.1. Windows and Code Pages
   1.2. CharacterSet
   1.3. Encoding Scheme
   1.4. Fonts
   1.5. So Why Are There Different Charactersets?
   1.6. What Is the Difference Between 7 bit, 8 bit and Unicode Charactersets?
2. NLS_LANG
   2.1. Setting the Character Set in NLS_LANG
   2.2. Where is the Character Conversion Done?

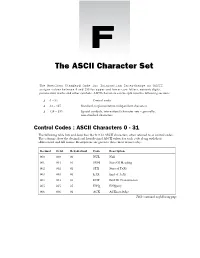

The ASCII Character Set

The American Standard Code for Information Interchange, or ASCII, together with its 8-bit extensions, assigns values between 0 and 255 to upper and lower case letters, numeric digits, punctuation marks and other symbols. ASCII characters can be split into the following sections:

- 0 – 31: Control codes
- 32 – 127: Standard, implementation-independent characters
- 128 – 255: Special symbols, international character sets; generally, non-standard characters

Control Codes: ASCII Characters 0 - 31

The following table lists and describes the first 32 ASCII characters, often referred to as control codes. The columns show the decimal, octal and hexadecimal ASCII values for each code along with their abbreviated and full names. Descriptions are given for those most in use today.

| Decimal | Octal | Hexadecimal | Code | Description |
| --- | --- | --- | --- | --- |
| 000 | 000 | 00 | NUL | Null |
| 001 | 001 | 01 | SOH | Start Of Heading |
| 002 | 002 | 02 | STX | Start of TeXt |
| 003 | 003 | 03 | ETX | End of TeXt |
| 004 | 004 | 04 | EOT | End Of Transmission |
| 005 | 005 | 05 | ENQ | ENQuiry |
| 006 | 006 | 06 | ACK | ACKnowledge |
| 007 | 007 | 07 | BEL | BELl. Caused teletype machines to ring a bell. Causes a beep in many common terminals and terminal emulation programs. |
| 008 | 010 | 08 | BS | BackSpace. Moves the cursor backwards (left) one space. |
| 009 | 011 | 09 | HT | Horizontal Tab. Moves the cursor right to the next tab stop. The spacing of tab stops is dependent on the output device, but is often either 8 or 10 characters wide. |
| 010 | 012 | 0A | LF | Line Feed. Moves the cursor to a new line. On Unix systems, moves to a new line AND all the way to the left. |
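The decimal, octal and hexadecimal columns of the control-code table are three spellings of the same number, which a small Python sketch can make concrete. The dictionary `CONTROL_NAMES` and the function `row` are illustrative names introduced here, covering only a handful of the codes listed above.

```python
# Sketch: render a control code in the three number bases used by the table.
# CONTROL_NAMES and row() are hypothetical names for this illustration only.
CONTROL_NAMES = {0: "NUL", 7: "BEL", 8: "BS", 9: "HT", 10: "LF"}


def row(code: int) -> str:
    """Format one table row: zero-padded decimal, octal, hex, abbreviation."""
    return f"{code:03d} {code:03o} {code:02X} {CONTROL_NAMES[code]}"


for code in sorted(CONTROL_NAMES):
    print(row(code))

# The same values written as Python escape sequences.
print(ord("\a"), ord("\b"), ord("\t"), ord("\n"))
```

Octal shows up in the table because older documentation and C-style escapes such as `\012` commonly used it for control characters.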

UTF-8 for User Defined Fields

Proposed Updates to ANSI/NIST-ITL 1-2005. UTF8, GPS, Tracking Subcommittee: Scott Hills, Aware, Inc.; Rob Mungovan, Aware, Inc.; Dale Hapeman, DOD-BFC; Tony Misslin, Identix; Bonny Scheier, Sabre; Ralph Lessman, Smiths-Heimann.

UTF-8 for User Defined Fields

Change to Section 6.1 File Format

The second paragraph restricts text or character data in Type 2 and Type 9 through Type 16 records to 7-bit ASCII code. This should be modified to allow 8-bit UTF-8 characters in all user defined fields within these records.

Proposed wording change to paragraph 2: After the first sentence: “The text or character data in Type-2, and Type-9 through Type-16 records will normally be recorded using 7-bit ASCII code in variable length fields with specified upper limits on the size of the fields.” … Add this… “Eight bit UTF-8 characters shall be allowed to support international character sets for all user defined fields in all record types. By definition this excludes record types 1, 3, 4, 5, 6 and 8.”

The third paragraph should be changed to suggest UTF-8 as the preferred way of storing data that cannot be represented in 7-bit ASCII.

Proposed wording change to paragraph 3: After the first sentence: “For data interchange between non-English speaking agencies, character sets other than 7-bit ASCII may be used in textual fields contained in Type-2 and Type-9 through Type-16 records.” … Add this… “UTF-8 is the preferred method of storing textual data that cannot be represented as 7 bit ASCII.”

Change to Section 7.2.3 International character sets

… at the very end of this section add this text… “Usage of UTF-8 is allowed as an alternative to the technique that requires the usage of the ASCII “STX” and “ETX” characters to signify the beginning or end of international characters.

LG Programmer’s Reference Manual

LG Programmer’s Reference Manual Line Matrix Series Printers Trademark Acknowledgements ANSI is a registered trademark of American National Standards Institute, Inc. Code V is a trademark of Quality Micro Systems. Chatillon is a trademark of John Chatillon & Sons, Inc. Ethernet is a trademark of Xerox Corporation. IBM is a registered trademark of International Business Machines Corporation. IGP is a registered trademark of Printronix, LLC. Intelligent Printer Data Stream and IPDS are trademarks of International Business Machines Corporation. LinePrinter Plus is a registered trademark of Printronix, LLC. MS-DOS is a registered trademark of Microsoft Corporation. PC-DOS is a trademark of International Business Machines Corporation. PGL is a registered trademark of Printronix, LLC. PrintNet is a registered trademark of Printronix, LLC. Printronix is a registered trademark of Printronix, LLC. PSA is a trademark of Printronix, LLC. QMS is a registered trademark of Quality Micro Systems. RibbonMinder is a trademark of Printronix, LLC. Torx is a registered trademark of Camcar/Textron Inc. Utica is a registered trademark of Cooper Power Tools. Printronix, LLC. makes no representations or warranties of any kind regarding this material, including, but not limited to, implied warranties of merchantability and fitness for a particular purpose. Printronix, LLC. shall not be held responsible for errors contained herein or any omissions from this material or for any damages, whether direct, indirect, incidental or consequential, in connection with the furnishing, distribution, performance or use of this material. The information in this manual is subject to change without notice. This document contains proprietary information protected by copyright. 
No part of this document may be reproduced, copied, translated or incorporated in any other material in any form or by any means, whether manual, graphic, electronic, mechanical or otherwise, without the prior written consent of Printronix, LLC.

Exploiting Unicode-Enabled Software

Unraveling Unicode: A Bag of Tricks for Bug Hunting. Black Hat USA, July 2009. Chris Weber, www.lookout.net, Casaba Security.

Can you tell the difference?

Black Hat USA - July 2009 www.casabasecurity.com © 2009 Chris Weber

How about now?

The Transformers: When good input turns bad. <scrİpt> becomes <script>

Agenda

- Unicode Transformations

Unicode Transformations Agenda

- Unicode crash course
- Root Causes
- Attack Vectors
- Tools
  - Find Unicode issues in Web-testing
  - Visual Spoofing Detection

Unicode Crash Course: The Unicode Attack Surface

- End users
- Applications
- Databases
- Programming languages
- Operating Systems

Unicode Crash Course: Unthink it

Unicode Crash Course

- A large and complex standard: code points, canonical mappings, encodings, decomposition types, categorization, case folding, normalization, best-fit mapping, binary properties, 17 planes, case mapping, private use ranges, conversion tables, script blocks, bi-directional properties, escapings
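One way input such as <scrİpt> can turn into <script> is a filter that runs before a later lossy transformation. The Python sketch below is a hypothetical pipeline, not the one from the talk: `looks_safe` and `fold_to_ascii` are invented names, and the order of the two steps is an assumption chosen to show the failure mode.

```python
# Hypothetical pipeline: a blocklist check followed by a lossy ASCII fold.
# Neither function comes from the talk; both are illustrative assumptions.
payload = "<scr\u0130pt>"  # U+0130 is LATIN CAPITAL LETTER I WITH DOT ABOVE


def looks_safe(text: str) -> bool:
    """Naive blocklist check: reject only if the literal tag is present."""
    return "<script>" not in text.lower()


def fold_to_ascii(text: str) -> str:
    """Lowercase, then silently drop every character that is not ASCII."""
    return text.lower().encode("ascii", "ignore").decode("ascii")


# Lowercasing U+0130 yields "i" followed by a combining dot (U+0307),
# so the literal tag is not present when the check runs.
print(looks_safe(payload))

# Dropping the non-ASCII combining mark afterwards produces the tag.
print(fold_to_ascii(payload))
```

The usual remedy is to apply every normalization and conversion step first and validate the final form, so that the check sees exactly what downstream components will see.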