ESE 531: Digital Signal Processing



Recommended publications


Efficient Supersampling Antialiasing for High-Performance Architectures

TR91-023, April 1991. Steven Molnar, Department of Computer Science, The University of North Carolina at Chapel Hill, CB#3175, Sitterson Hall, Chapel Hill, NC 27599-3175. This work was supported by DARPA/ISTO Order No. 6090, NSF Grant No. DCI-8601152, and IBM. UNC is an Equal Opportunity/Affirmative Action Institution. Abstract: Techniques are presented for increasing the efficiency of supersampling antialiasing in high-performance graphics architectures. The traditional approach is to sample each pixel with multiple, regularly spaced or jittered samples, and to blend the sample values into a final value using a weighted average [FUCH85][DEER88][MAMM89][HAEB90]. This paper describes a new type of antialiasing kernel that is optimized for the constraints of hardware systems and produces higher quality images with fewer sample points than traditional methods. The central idea is to compute a Poisson-disk distribution of sample points for a small region of the screen (typically pixel-sized, or the size of a few pixels). Sample points are then assigned to pixels so that the density of sample points (rather than weights) for each pixel approximates a Gaussian (or other) reconstruction filter as closely as possible. The result is a supersampling kernel that implements importance sampling with Poisson-disk-distributed samples. The method incurs no additional run-time expense over standard weighted-average supersampling methods, supports successive refinement, and can be implemented on any high-performance system that point samples accurately and has sufficient frame-buffer storage for two color buffers.

Design of Optimal Feedback Filters with Guaranteed Closed-Loop Stability for Oversampled Noise-Shaping Subband Quantizers

49th IEEE Conference on Decision and Control, December 15-17, 2010, Hilton Atlanta Hotel, Atlanta, GA, USA. Sander Wahls, Holger Boche. Abstract: We consider the oversampled noise-shaping subband quantizer (ONSQ) with the quantization noise being modeled as white noise. The problem at hand is to design an optimal feedback filter. Optimal feedback filters with regard to the current standard model of the ONSQ inhabit several shortcomings: the stability of the feedback loop is not ensured and the noise is considered independent of both the input and the feedback filter. Another previously unknown disadvantage, which we prove in our paper, is that optimal feedback filters in the standard model are never unique. The goal of this paper is to show how these shortcomings can be overcome. The stability issue is addressed by showing that every feedback filter can be “stabilized” in the sense that we can find another feedback filter which stabilizes the feedback loop and achieves a performance equal (or arbitrarily close) to the original filter. [Figure 1: Noise-Shaping Quantizer. (a) Real model: η is the quantization error. (b) Linearized model: η is white noise.]

Comparing Oversampling Techniques to Handle the Class Imbalance Problem: a Customer Churn Prediction Case Study

Received September 14, 2016, accepted October 1, 2016, date of publication October 26, 2016, date of current version November 28, 2016. Digital Object Identifier 10.1109/ACCESS.2016.2619719. Adnan Amin (1), Sajid Anwar (1), Awais Adnan (1), Muhammad Nawaz (1), Newton Howard (2), Junaid Qadir (3) (Senior Member, IEEE), Ahmad Hawalah (4), and Amir Hussain (5) (Senior Member, IEEE). (1) Center for Excellence in Information Technology, Institute of Management Sciences, Peshawar 25000, Pakistan. (2) Nuffield Department of Surgical Sciences, University of Oxford, Oxford, OX3 9DU, U.K. (3) Information Technology University, Arfa Software Technology Park, Lahore 54000, Pakistan. (4) College of Computer Science and Engineering, Taibah University, Medina 344, Saudi Arabia. (5) Division of Computing Science and Maths, University of Stirling, Stirling, FK9 4LA, U.K. Corresponding author: A. Amin ([email protected]). The work of A. Hussain was supported by the U.K. Engineering and Physical Sciences Research Council under Grant EP/M026981/1. ABSTRACT: Customer retention is a major issue for various service-based organizations, particularly the telecom industry, wherein predictive models for observing the behavior of customers are one of the great instruments in the customer retention process and in inferring the future behavior of the customers. However, the performance of predictive models is greatly affected when the real-world data set is highly imbalanced. A data set is called imbalanced if the sample size from one class is very much smaller or larger than that of the other classes. The most commonly used technique for handling the class-imbalance problem (CIP) in various domains is over/under sampling.

CSMOUTE: Combined Synthetic Oversampling and Undersampling Technique for Imbalanced Data Classification

Michał Koziarski, Department of Electronics, AGH University of Science and Technology, Al. Mickiewicza 30, 30-059 Kraków, Poland. Email: [email protected]. Abstract: In this paper we propose a novel data-level algorithm for handling data imbalance in the classification task, Synthetic Majority Undersampling Technique (SMUTE). SMUTE leverages the concept of interpolation of nearby instances, previously introduced in the oversampling setting in SMOTE. Furthermore, we combine both in the Combined Synthetic Oversampling and Undersampling Technique (CSMOUTE), which integrates SMOTE oversampling with SMUTE undersampling. The results of the conducted experimental study demonstrate the usefulness of both the SMUTE and the CSMOUTE algorithms, especially when combined with more complex classifiers, namely MLP and SVM, and when applied on datasets consisting of a large number of outliers. This leads us to a conclusion that the proposed approach shows promise for further extensions accommodating local data characteristics, a direction discussed in more detail in the paper. Introduction (excerpt): [...]ity observations (oversampling). Secondly, the algorithm-level methods, which adjust the training procedure of the learning algorithms to better accommodate for the data imbalance. In this paper we focus on the former. Specifically, we propose a novel undersampling algorithm, Synthetic Majority Undersampling Technique (SMUTE), which leverages the concept of interpolation of nearby instances, previously introduced in the oversampling setting in SMOTE [5]. Secondly, we propose a Combined Synthetic Oversampling and Undersampling Technique (CSMOUTE), which integrates SMOTE oversampling with SMUTE undersampling. The aim of this paper is to serve as a preliminary study of the potential usefulness of the proposed approach, with the final goal of extending it to [...]
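The "interpolation of nearby instances" that the abstract attributes to SMOTE can be sketched as follows. This is a minimal illustration of SMOTE-style oversampling only, not the paper's SMUTE or CSMOUTE algorithms; the function name `smote_like`, the neighbour count `k`, and the toy points in the usage note are assumptions made for the example.

```python
import math
import random


def smote_like(minority, n_new, k=3, rng=None):
    """Create n_new synthetic points by interpolating between a randomly
    chosen minority sample and one of its k nearest minority neighbours.

    minority: list of equal-length tuples of floats (at least two points).
    """
    rng = rng or random.Random(0)
    synthetic = []
    for _ in range(n_new):
        # Pick a base sample by index so that duplicates are handled correctly.
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [p for j, p in enumerate(minority) if j != i]
        # k nearest neighbours of the base sample (Euclidean distance).
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        # A random position on the segment between base and neighbour.
        lam = rng.random()
        synthetic.append(
            tuple(a + lam * (b - a) for a, b in zip(base, neighbour))
        )
    return synthetic
```

Calling `smote_like([(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)], 5, k=2)` returns five points, each lying on a segment between two of the original points.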

A Subjective Evaluation of Noise-Shaping Quantization for Adaptive

IEEE TRANSACTIONS ON COMMUNICATIONS, VOL. 36, NO. 3, MARCH 1988, p. 332. A Subjective Evaluation of Noise-Shaping Quantization for Adaptive Intra-/Interframe DPCM Coding of Color Television Signals. BERND GIROD, HÅKAN ALMER, LEIF BENGTSSON, BJÖRN CHRISTENSSON, AND PETER WEISS. Abstract: Nonuniform quantizers for just not visible reconstruction errors in an adaptive intra-/interframe DPCM scheme for component-coded color television signals are presented, both for conventional DPCM and for noise-shaping DPCM. Noise feedback filters that minimize the visibility of reconstruction errors by spectral shaping are designed for Y, R-Y, and B-Y. A closed-form description of the “masking function” is derived which leads to the one-parameter “δ quantizer” characteristic. Subjective tests that were carried out to determine visibility thresholds for reconstruction errors for conventional DPCM and for noise shaping DPCM show significant gains by noise shaping. For a transmission rate of around 30 Mbit/s, reconstruction errors are almost always below the visibility threshold if variable length encoding of the prediction error is combined with noise shaping within a 3:1:1 system. Introduction (excerpt): [...] quantizers for just not visible errors have been presented in [12]-[14] for the luminance and in [15], [16] for the color difference signals. An investigation concerning the visibility of quantization errors for an adaptive intra-/interframe luminance DPCM coder has been reported by Westerkamp [17]. In this paper, we present quantization characteristics for the luminance and the color difference signals that lead to just not visible reconstruction errors in an adaptive intra-/interframe DPCM scheme. These quantizers have been determined by means of subjective tests. In order to evaluate the improvements that can be achieved by reconstruction noise shaping [11], we compare the quantization characteristics for just not [...]

Signal Sampling

FYS3240 PC-based instrumentation and microcontrollers. Signal sampling. Spring 2017, Lecture #5. Bekkeng, 30.01.2017. Content: aliasing, sampling, analog-to-digital conversion (ADC), filtering, oversampling, triggering. Analog signal information: there are three types of information: level, shape, and frequency. Sampling considerations: an analog signal is continuous; a sampled signal is a series of discrete samples acquired at a specified sampling rate; the faster we sample, the more our sampled signal will look like our actual signal; if the signal is not sampled fast enough, a problem known as aliasing will occur. [Figures: actual signal and sampled signal; adequately sampled signal and aliased signal.] Bandwidth of a filter: the bandwidth B of a filter is defined to be between the -3 dB points. Sampling and Nyquist's theorem: the sample frequency should be at least twice the highest frequency contained in the signal. Or, more correctly: the sample frequency fs should be at least twice the bandwidth Δf of your signal. In mathematical terms: fs ≥ 2 · Δf, where Δf = fhigh − flow. However, to accurately represent the shape of the signal, or to determine peak maximum and peak locations, a higher sampling rate is required; typically a sample rate of 10 times the bandwidth of the signal is required. [Figure: ECG signal; illustration from Wikipedia.] Sampling example: a 100 Hz sine wave sampled at 100 Hz is aliased; sampled at 200 Hz it is adequately sampled for frequency only (same number of cycles); sampled at 1 kHz it is adequately sampled for frequency and shape. Hardware filtering: filtering removes unwanted signals from the signal that you are trying to measure. Analog anti-aliasing low-pass filtering is applied before the A/D converter to remove all signal frequencies that are higher than the input bandwidth of the device.
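The 100 Hz sampling example from the slides can be reproduced numerically. The sketch below is only an illustration under stated assumptions: it uses a cosine rather than a sine so that the samples do not fall exactly on zero crossings, and the helper names `sample_cosine` and `apparent_frequency` are hypothetical.

```python
import math


def sample_cosine(freq_hz, fs_hz, n_samples):
    """Return n_samples of cos(2*pi*f*t) taken at the sampling rate fs_hz."""
    return [
        math.cos(2 * math.pi * freq_hz * n / fs_hz) for n in range(n_samples)
    ]


def apparent_frequency(freq_hz, fs_hz):
    """Frequency in [0, fs/2] at which a real tone of freq_hz appears
    after sampling at fs_hz (spectral folding)."""
    folded = freq_hz % fs_hz
    return min(folded, fs_hz - folded)


if __name__ == "__main__":
    for fs in (100, 200, 1000):
        samples = sample_cosine(100, fs, 6)
        print(fs, apparent_frequency(100, fs), [round(s, 3) for s in samples])
```

At 100 Hz every sample has the same value, so the tone is indistinguishable from a constant level. At 200 Hz the samples alternate in sign, which preserves the frequency but not the shape. At 1 kHz there are ten samples per cycle.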

Quantization Noise Shaping on Arbitrary Frame Expansions

Hindawi Publishing Corporation, EURASIP Journal on Applied Signal Processing, Volume 2006, Article ID 53807, Pages 1–12, DOI 10.1155/ASP/2006/53807. Petros T. Boufounos and Alan V. Oppenheim, Digital Signal Processing Group, Massachusetts Institute of Technology, 77 Massachusetts Avenue, Room 36-615, Cambridge, MA 02139, USA. Received 2 October 2004; Revised 10 June 2005; Accepted 12 July 2005. Quantization noise shaping is commonly used in oversampled A/D and D/A converters with uniform sampling. This paper considers quantization noise shaping for arbitrary finite frame expansions based on generalizing the view of first-order classical oversampled noise shaping as a compensation of the quantization error through projections. Two levels of generalization are developed, one a special case of the other, and two different cost models are proposed to evaluate the quantizer structures. Within our framework, the synthesis frame vectors are assumed given, and the computational complexity is in the initial determination of frame vector ordering, carried out off-line as part of the quantizer design. We consider the extension of the results to infinite shift-invariant frames and consider in particular filtering and oversampled filter banks. Copyright © 2006 P. T. Boufounos and A. V. Oppenheim. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited. 1. INTRODUCTION: Quantization methods for frame expansions have received considerable attention in the last few years. [...] coefficient through a projection onto the space defined by another synthesis frame vector. This requires only knowledge of the synthesis frame set and a prespecified order- [...]
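The first-order classical noise shaping that the abstract takes as its starting point can be illustrated with a scalar error-feedback quantizer. This is a minimal sketch, assuming a uniform rounding quantizer with step size `step` and no overload; the function name is hypothetical, and the code does not implement the paper's frame-projection generalization.

```python
def first_order_noise_shaper(x, step=1.0):
    """Quantize the sequence x with first-order error feedback.

    Each sample is corrected by the previous quantization error before
    quantizing, so the output equals x[n] + e[n] - e[n-1]: the error is
    filtered by (1 - z^-1) and pushed toward high frequencies.
    """
    y = []
    prev_err = 0.0
    for sample in x:
        u = sample - prev_err          # compensate the previous error
        q = step * round(u / step)     # uniform quantizer
        prev_err = q - u               # error made on this sample
        y.append(q)
    return y
```

Because consecutive errors cancel in a running sum, `sum(first_order_noise_shaper(x))` differs from `sum(x)` by at most half a quantization step, which is why averaging (low-pass filtering) the coarse output recovers the input.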

Color Error Diffusion with Generalized Optimum Noise

COLOR ERROR DIFFUSION WITH GENERALIZED OPTIMUM NOISE SHAPING. Niranjan Damera-Venkata, Halftoning and Image Processing Group, Hewlett-Packard Laboratories, 1501 Page Mill Road, Palo Alto, CA 94304, E-mail: [email protected]. Brian L. Evans, Embedded Signal Processing Laboratory, Dept. of Electrical and Computer Engineering, The University of Texas at Austin, Austin, TX 78712-1084, E-mail: [email protected]. ABSTRACT: We optimize the noise shaping behavior of color error diffusion by designing an optimized error filter based on a proposed noise shaping model for color error diffusion and a generalized linear spatially-invariant model of the human visual system. Our approach allows the error filter to have matrix-valued coefficients and diffuse quantization error across channels in an opponent color representation. Thus, the noise is shaped into frequency regions of reduced human color sensitivity. Introduction (excerpt): In this paper, we derive the optimum matrix-valued error filter using the matrix gain model [3] to model the noise shaping behavior of color error diffusion and a generalized linear spatially-invariant model (not necessarily separable) for the human color vision. We also incorporate the constraint that all of the RGB quantization error be diffused. We show that the optimum error filter may be obtained as a solution to a matrix version of the Yule-Walker equations. A gradient descent algorithm is proposed to solve the generalized Yule-Walker equations. In the special case that [...]

Noise Shaping

INF4420 ΔΣ data converters. Spring 2012. Jørgen Andreas Michaelsen ([email protected]). Outline: oversampling; noise shaping; circuit design issues; higher order noise shaping. Introduction: So far we have considered so-called Nyquist data converters. Quantization noise is a fundamental limit. Improving the resolution of the converter translates to increasing the number of quantization steps (bits). This requires better component matching, $A_{OL} > \beta^{-1} 2^{N+1}$, and $GBW > f_s \ln(2^{N+1}) \, \pi^{-1} \beta^{-1}$. ΔΣ modulator based data converters rely on oversampling and noise shaping to improve the resolution. Oversampling means that the data rate is increased to several times what is required by the Nyquist sampling theorem. Noise shaping means that the quantization noise is moved away from the signal band that we are interested in. We can make a high resolution data converter with few quantization steps! The most obvious trade-off is the increase in speed and more complex digital processing. However, this is a good fit for CMOS. We can apply this to both DACs and ADCs. Oversampling: The total quantization noise depends only on the number of steps, not the bandwidth. If we increase the sampling rate, the quantization noise will not increase and it will spread over a larger area. The power spectral density will decrease. Take a regular ADC and run it at a much higher speed than twice the Nyquist frequency. Quantization noise is reduced because only a fraction remains in the signal bandwidth, fb. Doubling the OSR improves SNR by 0.5 bit. Increasing the resolution by oversampling is not practical.
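The "0.5 bit per doubling of the OSR" figure on the slides follows from spreading a fixed quantization noise power over a wider band. The sketch below works this out under the usual assumptions, none of which are spelled out in the excerpt: white quantization noise, an ideal filter that keeps only the signal band, and no noise shaping. The function names are hypothetical.

```python
import math

# One extra bit of resolution corresponds to 20*log10(2), about 6.02 dB of SNR.
DB_PER_BIT = 20 * math.log10(2)


def oversampling_gain_db(osr):
    """SNR gain in dB from plain oversampling by the ratio osr.

    Only 1/osr of the (white) quantization noise power stays in band,
    so the in-band noise power drops by a factor of osr.
    """
    return 10 * math.log10(osr)


def oversampling_gain_bits(osr):
    """The same gain expressed as equivalent extra bits of resolution."""
    return oversampling_gain_db(osr) / DB_PER_BIT


if __name__ == "__main__":
    for osr in (2, 4, 16, 256):
        print(osr, oversampling_gain_db(osr), oversampling_gain_bits(osr))
```

Under these assumptions the gain is half a bit per octave of oversampling, so four extra bits would need an OSR of 256. This is the sense in which plain oversampling is impractical and noise shaping is added.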

Sonically Optimized Noise Shaping Techniques

Second edition. Alexey Lukin, [email protected], 2002. In this article we compare different commercially available noise shaping algorithms and introduce a new highly optimized frequency response curve for the noise shaping filter implemented in our ExtraBit Mastering Processor. Contents: Introduction; Tested noise shapers; Criterions of comparison; Results: Noise statistics & perceived loudness; Noise spectrums: No noise shaping (TPDF dithering), Cool Edit (C1 curve), Cool Edit (C3 curve), POW-R (pow-r1 mode), [...]

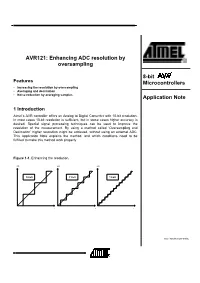

Enhancing ADC Resolution by Oversampling

AVR121: Enhancing ADC resolution by oversampling. 8-bit Microcontrollers, Application Note, Rev. 8003A-AVR-09/05. Features: increasing the resolution by oversampling; averaging and decimation; noise reduction by averaging samples. 1 Introduction: Atmel’s AVR controller offers an Analog to Digital Converter with 10-bit resolution. In most cases 10-bit resolution is sufficient, but in some cases higher accuracy is desired. Special signal processing techniques can be used to improve the resolution of the measurement. By using a method called ‘Oversampling and Decimation’ higher resolution might be achieved, without using an external ADC. This Application Note explains the method, and which conditions need to be fulfilled to make this method work properly. [Figure 1-1. Enhancing the resolution: A/D output versus time at 10-bit, 11-bit, and 12-bit resolution.] 2 Theory of operation: Before reading the rest of this Application Note, the reader is encouraged to read Application Note AVR120 - ‘Calibration of the ADC’, and the ADC section in the AVR datasheet. The following examples and numbers are calculated for Single Ended Input in a Free Running Mode. ADC Noise Reduction Mode is not used. This method is also valid in the other modes, though the numbers in the following examples will be different. The ADC's reference voltage and the ADC's resolution define the ADC step size. The ADC’s reference voltage, VREF, may be selected to AVCC, an internal 2.56V / 1.1V reference, or a reference voltage at the AREF pin. A lower VREF provides a higher voltage precision but minimizes the dynamic range of the input signal.
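The ‘Oversampling and Decimation’ method named in the introduction is commonly implemented by accumulating 4^n samples and right-shifting the sum by n to gain n extra bits. That rule is not stated in the excerpt above and is assumed here, as is the need for roughly 1 LSB of noise on the input. The sketch below simulates it in Python; the ADC model and all names (`make_noisy_adc`, `oversample_and_decimate`) are hypothetical and do not correspond to any AVR register or library call.

```python
import math
import random


def make_noisy_adc(voltage, vref=5.0, bits=10, noise_lsb=1.0, seed=0):
    """Return a read() function modelling an ideal ADC plus Gaussian noise.

    The noise (in LSB) is an assumption of this model: without some
    dither, repeated reads would be identical and averaging would not
    add resolution.
    """
    rng = random.Random(seed)
    max_code = (1 << bits) - 1

    def read():
        ideal = voltage / vref * (1 << bits)
        code = math.floor(ideal + rng.gauss(0.0, noise_lsb))
        return max(0, min(max_code, code))  # clip to the valid code range

    return read


def oversample_and_decimate(read, extra_bits):
    """Gain extra_bits of resolution: sum 4**extra_bits samples, then
    right-shift the accumulated sum by extra_bits."""
    n_samples = 4 ** extra_bits
    acc = sum(read() for _ in range(n_samples))
    return acc >> extra_bits


if __name__ == "__main__":
    adc = make_noisy_adc(2.5)
    print(oversample_and_decimate(adc, 2))  # a 12-bit result from a 10-bit ADC
```

With `extra_bits=2`, sixteen 10-bit readings are summed into a 14-bit accumulator and shifted down to a 12-bit result, matching the 10-bit to 12-bit progression shown in Figure 1-1.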

Undersampling and Oversampling in Sample Based Shape Modeling

Tamal K. Dey, Joachim Giesen, Samrat Goswami, James Hudson, Rephael Wenger, Wulue Zhao. Ohio State University, Columbus, OH 43210. Abstract: Shape modeling is an integral part of many visualization problems. Recent advances in scanning technology and a number of surface reconstruction algorithms have opened up a new paradigm for modeling shapes from samples. Many of the problems currently faced in this modeling paradigm can be traced back to two anomalies in sampling, namely undersampling and oversampling. Boundaries, non-smoothness and small features create undersampling problems, whereas oversampling leads to too many triangles. We use Voronoi cell geometry as a unified guide to detect undersampling and oversampling. We apply these detections in surface reconstruction and model simplification. Guarantees of the algo- [...] Introduction (excerpt): [...] early paper on the problem was by Boissonnat [11] who proposed a ‘sculpting’ of the Delaunay triangulation for reconstruction. A more refined sculpting strategy was designed by Edelsbrunner and Mücke [16] in their α-shape algorithm. Bajaj, Bernardini and Xu [9] used α-shapes for reconstructing scalar fields and 3D CAD models. In [15] Edelsbrunner reported the design of a commercial software WRAP that eliminated the need for uniform samples in α-shapes. Hoppe et al. [24] reconstructed the surface using the zero level set of a distance function defined over the samples. Curless and Levoy [14] used a distance function to construct an implicit surface from multiple range scans. Turk and Levoy [31] devised an incremental algorithm that iteratively improves a reconstruction by erosion and zippering.