Practical Clustered Shading

Total pages: 16

File type: PDF, size: 1020 Kb

Recommended publications

-

Just Cause 3 Mods Download Dropzone Folders & Install Help

just cause 3 mods download Dropzone folders & Install help. Everything you need to start modding your Just Cause 3 on PC! Includes a guide on how to install mods! Open the file using 7zip, which can be downloaded here: http://www.7-zip.org/download.html. Highlight all files inside and drag them into a new folder. Read the 'How To' or the installation for further instructions. HOW TO - These folders. Library Right click Just Cause 3 Properties Local files Browse Local Files. This will open your Just Cause 3 installation folder. Copy all the dropzone folders into your Just Cause 3 installation folder. Unless the mod you downloaded effects the DLC, it will be copied into your 'dropzone' folder. Open the file using 7zip (Download below) 1 - Mod effects base game: Navaigate into mod until you find a folder with a lower case name. Drag this folder into your 'dropzone' folder. Finished! Run the game. 2 - Mod effects DLC: Navigate the mod until you find a folder called '__UNKNOWN' Copy/Drag this folder into the correct DLC folder. A) Mod effects Mech Land Assault DLC: 'dropzone_mech_dlc' B) Mod effects Sky Fortress DLC: 'dropzone_sky_fortress' C) Mod effects Sea Heist DLC: 'dropzone_sea_heist' Once you have copied in the mod, YOU MUST RUN THE 'DLCPACKER.EXE' If you forget to run it, the mods for your DLC will not install. If you run any DLCPacker.exe in any of the DLC folders, it will install all mods inside all DLC dropzone folders. Only exception to the DLC '__UNKNOWN' folder is if the folder is lowercase. -

Over 1080 Eligible Titles! Games Eligible for This Promotion - Last Updated 3/14/19 GAME PS4 XB1 NSW .HACK G.U

Over 1080 eligible titles! Games Eligible for this Promotion - Last Updated 3/14/19 GAME PS4 XB1 NSW .HACK G.U. LAST RECODE 1-2-SWITCH 25TH WARD SILVER CASE SE 3D BILLARDS & SNOOKER 3D MINI GOLF 428 SHIBUYA SCRAMBLE 7 DAYS TO DIE 8 TO GLORY 8-BIT ARMIES COLLECTOR ED 8-BIT ARMIES COLLECTORS 8-BIT HORDES 8-BIT INVADERS A PLAGUE TALE A WAY OUT ABZU AC EZIO COLLECTION ACE COMBAT 7 ACES OF LUFTWARE ADR1FT ADV TM PRTS OF ENCHIRIDION ADVENTURE TIME FJ INVT ADVENTURE TIME INVESTIG AEGIS OF EARTH: PROTO AEREA COLLECTORS AGATHA CHRISTIE ABC MUR AGATHA CHRSTIE: ABC MRD AGONY AIR CONFLICTS 2-PACK AIR CONFLICTS DBL PK AIR CONFLICTS PACFC CRS AIR CONFLICTS SECRT WAR AIR CONFLICTS VIETNAM AIR MISSIONS HIND AIRPORT SIMULATOR AKIBAS BEAT ALEKHINES GUN ALEKHINE'S GUN ALIEN ISOLATION AMAZING SPIDERMAN 2 AMBULANCE SIMULATOR AMERICAN NINJA WAR Some Restrictions Apply. This is only a guide. Trade values are constantly changing. Please consult your local EB Games for the most updated trade values. Over 1080 eligible titles! Games Eligible for this Promotion - Last Updated 3/14/19 GAME PS4 XB1 NSW AMERICAN NINJA WARRIOR AMONG THE SLEEP ANGRY BIRDS STAR WARS ANIMA: GATE OF MEMORIES ANTHEM AQUA MOTO RACING ARAGAMI ARAGAMI SHADOW ARC OF ALCHEMIST ARCANIA CMPLT TALES ARK ARK PARK ARK SURVIVAL EVOLVED ARMAGALLANT: DECK DSTNY ARMELLO ARMS ARSLAN WARRIORS LGND ASSASSINS CREED 3 REM ASSASSINS CREED CHRONCL ASSASSINS CREED CHRONIC ASSASSINS CREED IV ASSASSINS CREED ODYSSEY ASSASSINS CREED ORIGINS ASSASSINS CREED SYNDICA ASSASSINS CREED SYNDICT ASSAULT SUIT LEYNOS ASSETTO CORSA ASTRO BOT ATELIER FIRIS ATELIER LYDIE & SUELLE ATELIER SOPHIE: ALCHMST ATTACK ON TITAN ATTACK ON TITAN 2 ATV DRIFT AND TRICK ATV DRIFT TRICKS ATV DRIFTS TRICKS ATV RENEGADES AVEN COLONY AXIOM VERGE SE AZURE STRIKER GUNVOLT SP BACK TO THE FUTURE Some Restrictions Apply. -

Just Cause 3 Download Mac

1 / 4 Just Cause 3 Download Mac Download Guide For Just Cause 3 APK latest version 1.02 for Android, Windows PC, Mac. Ultimate Guide for Just cause 3 tips PRO 2017 New Version !. Here are the Just Cause 3 System Requirements (Minimum) · CPU: Intel Core i5-2500k, 3.3GHz / AMD Phenom II X6 1075T 3GHz · CPU SPEED: Info · RAM: 8 GB .... Aug 25, 2019 — Trying to run Just Cause 3 on a playonmac version of steam but is stuck ... Even if it worked Mac doesn't have proper implementation of Vulkan ... all the buttons are greyed out. when i press "Download Packages Manually" the .... Jun 16, 2015 — Download the best games on Windows & Mac. A vast selection of titles, DRM-free, with free goodies, and lots of pure customer love.. Dec 23, 2016 · Just Cause 3 Serial Key Cd Key Free Download Crack Full ... Just Cause 3 Torrent Download is one of the best action adventure PC game.. [FREE] Download Just Cause 3 for Xbox One. Working on Windows, Mac, iOS and Android. Jul 26, 2016 — Avalanche Studios has released a brand new patch for Just Cause 3 on ... This update is now available for download on PS4 and should go .... Arrow Based Vocals FNF MOD (Weeks 1, 2 and 3) – Download (Friday Night ... lot of arrows is not "spammy" - Just cause you don't have fun cause it's "too hard" doesn't make the chart bad. ... A story. it says for mac but the only thing i . arrows.. Square Enix; ESRB Rating M - Mature (Blood, Intense Violence, Strong Language); Genre Action Adventure; DRM Steam. -

E3 2016 Schedule PACIFIC TIME

Start Time Time Blocks E3 Streaming Sites: 8:30 AM 10m YouTube Gaming: https://www.youtube.com/watch?v=Jjoq7snv24I E3 Schedule IGN: http://www.ign.com/events/e3 Square Enix Presents: https://www.youtube.com/user/SquareEnixPresents Sunday, June 12 - Thursday, June 16, 2016 PACIFIC TIME Nintendo Treehouse: https://www.youtube.com/user/Nintendo TIME SUNDAY MONDAY TUESDAY WEDNESDAY THURSDAY PACIFIC IGN YOUTUBE/E3 IGN YOUTUBE/E3 IGN SQUARE ENIX PRESENTS NINTENDO TREEHOUSE LIVE YOUTUBE/E3 IGN SQUARE ENIX PRESENTS NINTENDO TREEHOUSE LIVE YOUTUBE/E3 IGN SQUARE ENIX PRESENTS 8:30 AM Nintendo Pre-Show 8:40 AM | 8:50 AM | 9:00 AM This 1/2 Hour (Times Vary): Microsoft Pre-Show Nintendo Treehouse Live Treehouse - Pokémon Sun/Moon 9:10 AM Injustice 2, Only One | | The Legend of Zelda (Wii U) 9:20 AM Croteam’s Next Game | | | 9:30 AM Xbox Press Conference Xbox Press Conference | | 9:40 AM | | | | 9:50 AM | | | | 10:00 AM | | | | Final Fantasy XV Active Time Report (Japanese) Pokémon Go NieR: Automata Discussion with Developers 10:10 AM | | | | | | | 10:20 AM | | | | | Misc. Games Throughout | 10:30 AM | | | | | The Day. Inlcuding: | 10:40 AM | | | | | Monster Hunter Generations | 10:50 AM | | | | | Dragon Quest VII | 11:00 AM This Hour (Times Vary): Xbox Post-Show Nintendo Post-Show | Destiny: Rise of Iron Hitman Live Stream Tokyo Mirage Sessions #FE TBA Game Demo I Am Setsuna 11:10 AM Dishonored 2, Recore | | | | | | | | 11:20 AM Twisted Pixel’s Next Game | | | TBA Game Demo | | TBA Game Demo | 11:30 AM Titanfall 2 | | | | | | | Deus Ex Universe: Bridging -

Swedish Game Developer Index 2017-2018 Second Edition Published by Swedish Games Industry Research, Text & Design: Jacob Kroon Cover Illustration: Anna Nilsson

Swedish Game Developer Index 2017-2018 Second Edition Published by Swedish Games Industry Research, text & design: Jacob Kroon Cover Illustration: Anna Nilsson Dataspelsbranschen Swedish Games Industry Klara norra kyrkogata 31, Box 22307 SE-104 22 Stockholm www.dataspelsbranschen.se Contact: [email protected] 2 Table of Contents Summary 4 Preface 5 Revenue & Profit 8 Key Figures 10 Number of Companies 14 Employment 14 Gender Distribution 16 Employees & Revenue per Company 18 Biggest Companies 20 Platforms 22 Actual Consumer Sales Value 23 Game Developer Map 24 Globally 26 The Nordic Industry 28 Future 30 Copyright Infringement 34 Threats & Challenges 36 Conclusion 39 Method 39 Timeline 40 Glossary 42 3 Summary The Game Developer Index analyses Swedish game few decades, the video game business has grown developers’ operations and international sector trends from a hobby for enthusiasts to a global industry with over a year period by compiling the companies’ annual cultural and economic significance. The 2017 Game accounts. Swedish game development is an export Developer Index summarizes the Swedish companies’ business active in a highly globalized market. In a last reported business year (2016). The report in brief: Revenue increased to EUR 1.33 billion during 2016, doubling in the space of three years Most companies are profitable and the sector reports total profits for the eighth year in a row Jobs increased by 16 per cent, over 550 full time positions, to 4291 employees Compound annual growth rate since 2006 is 35 per cent Small and medium sized companies are behind 25 per cent of the earnings and half of the number of employees More than 70 new companies result in 282 active companies in total, an increase by 19 per cent Almost 10 per cent of the companies are working with VR in some capacity Game development is a growth industry with over half Swedish game developers are characterized by of the companies established post 2010. -

Finalists in 21 Categories Announced for Third Annual SXSW Gaming Awards

P.O. Box 685289 Austin, Texas | 78768 T: 512.467.7979 F: 512.451.0754 sxsw.com Finalists in 21 Categories Announced for Third Annual SXSW Gaming Awards YouTube megastar Séan “Jacksepticeye” William McLoughlin and esports host Rachel “Seltzer” Quirico to host the SXSW Gaming Awards ceremony The Witcher 3: Wild Hunt and Bloodborne lead in total nominations AUSTIN, Texas (January 25, 2016) — South by Southwest (SXSW) Gaming today announced the finalists for the third annual SXSW Gaming Awards, presented by Windows Games DX, Curse, G2A, IGN, Porter Novelli and Imaginary Forces. Taking place Saturday, March 19 at 8 p.m. CST in the Austin Grand Ballroom on the 6th Floor of the Hilton Downtown Austin, the Gaming Awards will honor indie and major game studio titles in 21 categories. The SXSW Gaming Awards are free and open to the public of all ages with a SXSW Guest Pass and streamed online at http://sxsw.is/23g6kEc. All Interactive, Music, Film, Gold, and Platinum badgeholders receive early entry and preferred seating. The SXSW Gaming Awards is an extension of the SXSW Gaming event. New for 2016: SXSW Gaming takes place March 17-19, 2016 inside the Austin Convention Center downtown (500 E Cesar Chavez Street). “First, congratulations are in order for all of our entrants and finalists. This year we saw a record number of entries and an incredibly diverse set of games,” said Justin Burnham, SXSW Gaming Producer. "This year’s show – thanks to the help of our hosts and finalists, is going to be one of the best yet. -

Candidate Paper.Docx

Evaluating Cloud-Based Gaming Solutions Item Type Thesis Authors Truong, Daniel Download date 30/09/2021 10:55:03 Link to Item http://hdl.handle.net/20.500.12648/1782 Evaluating Cloud-Based Gaming Solutions A Master’s Thesis Project Presented to the Department of Communications and Humanities In Partial Fulfillment of the Requirements for the Master of Science Degree State University of New York Polytechnic Institute By Daniel Truong May 2021 SUNY Polytechnic Institute Department of Communications and Humanities Approved and recommended for acceptance as a thesis in partial fulfillment of the requirements for the degree of Master of Science in Information Design and Technology. _____________________________________June 11, 2021 Date _____________________________________ Dr. Kathryn Stam Thesis Advisor _____________________________________ Date _____________________________________ Dr. Ibrahim Yucel Second Reader Abstract Recently, tech companies such as Google and Microsoft have invested resources into offering cloud-based delivery of video games. Delivery of games over such a medium negates the need of requiring dedicated video game consoles or computers with robust 3D graphics hardware. Tangible hardware requirements for traditional video game playing are currently undergoing a supply shortage due to a multitude of factors, particularly related to the COVID-19 pandemic. This project evaluates the cost of using cloud services vs. using a physical video game console. Also, this article evaluates whether players can come up with a custom solution utilizing VPS (virtual private server) providers such as Amazon Web Services. By utilizing the diffusion of innovations theory, we evaluate how the common actors of the video game industry try to replicate the traditional video game playing experience, but in a cloud setting. -

Thehunter: Call of the Wild Coming to Consoles on October 2, 2017

theHunter: Call of the Wild Coming to Consoles on October 2, 2017 STOCKHOLM (AUGUST 22, 2017) – Avalanche Studios and Expansive Worlds are excited to reveal the release date for theHunter: Call of the Wild for Xbox One and PlayStation®4. It will arrive on consoles October 2, 2017 and is priced at $39.99. The console version contains the main game plus the Tents & Ground Blinds DLC and the ATV DLC. Find the official trailer here. Originally developed for PC and released via digital and retail outlets earlier this year, theHunter: Call of the Wild is the ultimate hunting experience, featuring open-world gameplay across two massive reserves set in Germany and the Pacific Northwest. The game is rendered by Apex – Avalanche Studios Open World Engine, enabling a level of visual fidelity never before seen in a hunting game. theHunter: Call of the Wild is a stand- alone, one-time purchase which will be supported by optional DLC content following the release. For an in-depth look at all features of the game, make sure to catch the Gameplay Trailer and Layton Lake Trailer for the PC version. KEY FEATURES A Next-Generation Hunting Experience. · theHunter: Call of the Wild offers the most immersive hunting experience ever created. Step into a beautiful open world teeming with life, from majestic deer and awe-inspiring bison down to the countless birds, critters and insects of the wilderness. · Experience complex animal behavior, dynamic weather events, full day and night cycles, simulated ballistics, highly realistic acoustics, scents carried by a sophisticated wind system, and much more. -

Playstation 4 Xbox One Nintendo Switch Pc Game 3Ds

Lista aggiornata al 30/06/2020. Potrebbe subire delle variazioni. Maggiori dettagli in negozio. PLAYSTATION 4 XBOX ONE NINTENDO SWITCH PC GAME 3DS PLAYSTATION 4 11-11 Memories Retold - P4 2Dark - Limited Edition - P4 428 Shibuya Scramble - P4 7 Days to Die - P4 8 To Glory - Bull Riding - P4 A Plague Tale: Innocence - P4 A Way Out - P4 A.O.T. 2 - P4 A.O.T. 2 – Final Battle - P4 A.O.T. Wings of Freedom - P4 ABZÛ - P4 Ace Combat 7 - NON PUBBLICARE - P4 Ace Combat 7 - P4 ACE COMBAT® 7: SKIES UNKNOWN Collector's Edition - P4 Aces of the Luftwaffe - Squadron Extended Edition - P4 Adam’s Venture: Origini - P4 Adventure Time: Finn & Jake Detective - P4 Aerea - Collectors Edition - P4 Agatha Christie: The ABC Murders - P4 Age Of Wonders: Planetfall - Day One Edition - P4 Agents of Mayhem - P4 Agents Of Mayhem - Special Edition - P4 Agony - P4 Air Conflicts Vietnam Ultimate Edition - P4 Alien: Isolation Ripley Edition - P4 Among the Sleep - P4 Angry Birds Star Wars - P4 Anima Gate of Memories: The Nameless Chronicles - P4 Anima: Gate Of Memories - P4 Anthem - Legion of Dawn Edition - P4 Anthem - P4 Apex Construct - P4 Aragami - P4 Arcania - The Complete Tale - P4 ARK Park - P4 ARK: Survival Evolved - Collector's Edition - P4 ARK: Survival Evolved - Explorer Edition - P4 ARK: Survival Evolved - P4 Armello - P4 Arslan The Warriors of Legends - P4 Ash of Gods: Redemption - P4 Assassin’s Creed 4 Black Flag - P4 Assassin's Creed - The Ezio Collection - P4 Assassin's Creed Chronicles - P4 Assassin's Creed III Remastered - P4 Assassin's Creed Odyssey -

Studying the Urgent Updates of Popular Games on the Steam Platform

Noname manuscript No. (will be inserted by the editor) Studying the Urgent Updates of Popular Games on the Steam Platform Dayi Lin · Cor-Paul Bezemer · Ahmed E. Hassan Received: date / Accepted: date Abstract The steadily increasing popularity of computer games has led to the rise of a multi-billion dollar industry. This increasing popularity is partly enabled by online digital distribution platforms for games, such as Steam. These platforms offer an insight into the development and test processes of game developers. In particular, we can extract the update cycle of a game and study what makes developers deviate from that cycle by releasing so-called urgent updates. An urgent update is a software update that fixes problems that are deemed critical enough to not be left unfixed until a regular-cycle update. Urgent updates are made in a state of emergency and outside the regular development and test timelines which causes unnecessary stress on the development team. Hence, avoiding the need for an urgent update is important for game developers. We define urgent updates as 0-day updates (updates that are released on the same day), updates that are released faster than the regular cycle, or self-admitted hotfixes. We conduct an empirical study of the urgent updates of the 50 most popular games from Steam, the dominant digital game delivery platform. As urgent updates are re- flections of mistakes in the development and test processes, a better understanding of urgent updates can in turn stimulate the improvement of these processes, and even- tually save resources for game developers. In this paper, we argue that the update strategy that is chosen by a game developer affects the number of urgent updates that are released. -

GAME DEVELOPER INDEX 2015 Based on 2014 Swedish Annual Reports Table of Contents

GAME DEVELOPER INDEX 2015 Based on 2014 Swedish Annual Reports Table of Contents SUMMARY 2 PREFACE 4 TURNOVER AND PROFIT 5-6 NEW EMPLOYMENT 8 NUMBER OF COMPANIES 8 GENDER DISTRIBUTION 9 TURNOVER PER COMPANY 9 EMPOYEES PER COMPANY 9 BIGGEST PLAYERS 12 DISTRIBUTION PLATFORMS 12 REVIEWS 13 GAME DEVELOPER MAP 15-16 FUTURE 17-20 ACTUAL REVENUES 22 PIRACY 22 GLOBALLY 23 THREATS 25 CONCLUSION AND METHODOLOGY 26 TIMELINE - A SELECTION 27 GLOSSARY 28 On the cover: Unravel, Coldwood Interactive 1 Summary The Game Developer Index analyzes the activities of Swedish game developers, as well as international trends, throughout the year by compiling the annual accounts of the companies. Swedish game development is an export industry operating in a highly globalized market. The gaming business has grown from a hobby of enthusiasts to a world-wide industry with cultural and economic significance. The Game Developer Index 2015 summarizes the reports of the latest financial year. The summary in brief: • Turnover of Swedish game developers increased by 35 percent to EUR 930 million in 2014. • The majority of the companies are profitable and the industry has reported a total profit for six years running. • Employment increased by 23 percent, or 583 full-time positions, to a total of 3,117 employees. • The proportion of women increased by 39 percent, compared with 17 percent for men. • The compound annual growth rate (CAGR) in the period 2006-2014 is 39 percent. • Forty-three new companies have been added, which amounts to 213 active companies - an increase of 25 percent. • The total value, including acquisitions, of Swedish game developers was over EUR 2.75 billion in 2014. -

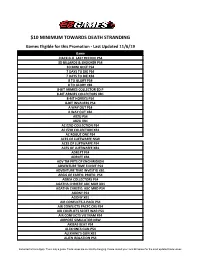

10 MINIMUM TOWARDS DEATH STRANDING Games Eligible for This Promotion - Last Updated 11/6/19 Game .HACK G.U

$10 MINIMUM TOWARDS DEATH STRANDING Games Eligible for this Promotion - Last Updated 11/6/19 Game .HACK G.U. LAST RECODE PS4 3D BILLARDS & SNOOKER PS4 3D MINI GOLF PS4 7 DAYS TO DIE PS4 7 DAYS TO DIE XB1 8 TO GLORY PS4 8 TO GLORY XB1 8-BIT ARMIES COLLECTOR ED P 8-BIT ARMIES COLLECTORS XB1 8-BIT HORDES PS4 8-BIT INVADERS PS4 A WAY OUT PS4 A WAY OUT XB1 ABZU PS4 ABZU XB1 AC EZIO COLLECTION PS4 AC EZIO COLLECTION XB1 AC ROGUE ONE PS4 ACES OF LUFTWAFFE NSW ACES OF LUFTWAFFE PS4 ACES OF LUFTWAFFE XB1 ADR1FT PS4 ADR1FT XB1 ADV TM PRTS OF ENCHIRIDION ADVENTURE TIME FJ INVT PS4 ADVENTURE TIME INVESTIG XB1 AEGIS OF EARTH: PROTO PS4 AEREA COLLECTORS PS4 AGATHA CHRISTIE ABC MUR XB1 AGATHA CHRSTIE: ABC MRD PS4 AGONY PS4 AGONY XB1 AIR CONFLICTS 2-PACK PS4 AIR CONFLICTS PACFC CRS PS4 AIR CONFLICTS SECRT WAR PS4 AIR CONFLICTS VIETNAM PS4 AIRPORT SIMULATOR NSW AKIBAS BEAT PS4 ALEKHINES GUN PS4 ALEKHINE'S GUN XB1 ALIEN ISOLATION PS4 Some Restrictions Apply. This is only a guide. Trade values are constantly changing. Please consult your local EB Games for the most updated trade values. $10 MINIMUM TOWARDS DEATH STRANDING Games Eligible for this Promotion - Last Updated 11/6/19 Game ALIEN ISOLATION XB1 AMAZING SPIDERMAN 2 PS4 AMAZING SPIDERMAN 2 XB1 AMERICAN NINJA WAR XB1 AMERICAN NINJA WARRIOR NSW AMERICAN NINJA WARRIOR PS4 AMONG THE SLEEP NSW AMONG THE SLEEP PS4 AMONG THE SLEEP XB1 ANGRY BIRDS STAR WARS PS4 ANGRY BIRDS STAR WARS XB1 ANTHEM PS4 ANTHEM XB1 AQUA MOTO RACING NSW ARAGAMI PS4 ARCANIA CMPLT TALES PS4 ARK PARK PSVR ARK PS4 ARK SURVIVAL EVOLVED