
Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


The Analysis and Synthesis of a Parallel Sorting Engine

AN ABSTRACT OF THE THESIS OF Byoungchul Ahn for the degree of Doctor of Philosophy in Electrical and Computer Engineering, presented on May 3, 1989. Title: The Analysis and Synthesis of a Parallel Sorting Engine. Abstract approved: John M. Murray. This thesis is concerned with the development of a unique parallel sort-merge system suitable for implementation in VLSI. Two new sorting subsystems, a high-performance VLSI sorter and a four-way merger, were also realized during the development process. In addition, the analysis of several existing parallel sorting architectures and algorithms was carried out. Algorithmic time complexity, VLSI processor performance, and chip area requirements for the existing sorting systems were evaluated. The rebound sorting algorithm was determined to be the most efficient among those considered. The rebound sorter algorithm was implemented in hardware as a systolic array with external expansion capability. The second phase of the research involved analyzing several parallel merge algorithms and their buffer management schemes. The dominant considerations for this phase of the research were the achievement of minimum VLSI chip area, design complexity, and logic delay. It was determined that the proposed merger architecture could be implemented in several ways. Selecting the appropriate microarchitecture for the merger, given the constraints of chip area and performance, was the major problem. The tradeoffs associated with this process are outlined. Finally, a pipelined sort-merge system was implemented in VLSI by combining a rebound sorter -

Sorting Algorithms in the C++ Programming Language: Final Thesis (Algoritmi za sortiranje u programskom jeziku C++, Završni rad)

UNIVERSITY OF RIJEKA, FACULTY OF HUMANITIES AND SOCIAL SCIENCES IN RIJEKA, DEPARTMENT OF POLYTECHNICS. Sorting Algorithms in the C++ Programming Language. Final thesis. Thesis mentor: doc. dr. sc. Marko Maliković. Student: Alen Jakus. Rijeka, 2016. University of Rijeka, Faculty of Humanities and Social Sciences, Department of Polytechnics, Rijeka, Sveučilišna avenija 4. Committee for Final and Graduate Examinations. Rijeka, 7 April 2016. FINAL THESIS ASSIGNMENT (undergraduate university study programme in polytechnics). Candidate: Alen Jakus. Assignment: Sorting Algorithms in the C++ Programming Language. The solution to the assignment must cover the following: 1. An overview of sorting algorithms. 2. A description of the selected sorting algorithms. 3. Diagrams showing how the selected sorting algorithms work. 4. A description of the basic properties of the C++ programming language. 5. A detailed description of the data types, derived data forms, statements, and other elements of C++ used in the solutions to the selected problems. 6. A description of the solutions obtained from the written programs. 7. The complete code in C++. The final thesis must comply with the Regulations on Graduate Theses and the Instructions for Preparing the Final Thesis of the undergraduate university study programme. Assignment handed to the candidate: 7 April 2016. Deadline for submitting the final thesis: ____________________ Date of submission of the final thesis: ____________________ Assignment set by: Doc. dr. sc. Marko Maliković. FACULTY OF HUMANITIES AND SOCIAL SCIENCES IN RIJEKA, Department of Polytechnics. Rijeka, 7 April 2016. ASSIGNMENT FOR THE FINAL THESIS (undergraduate university study programme in polytechnics). Candidate: Alen Jakus. Title of the final thesis: Sorting Algorithms in the C++ Programming Language. Brief description of the assignment: Provide an overview of sorting algorithms. Describe the selected sorting algorithms. -

Parallel Sorting Algorithms: Topic Overview

Design of Parallel Algorithms: Parallel Sorting Algorithms. Topic Overview: issues in sorting on parallel computers; sorting networks; bubble sort and its variants; quicksort; bucket and sample sort; other sorting algorithms. Sorting: Overview. Sorting is one of the most commonly used and well-studied kernels. Sorting can be comparison-based or noncomparison-based. The fundamental operation of comparison-based sorting is compare-exchange. The lower bound on any comparison-based sort of n numbers is Θ(n log n). We focus here on comparison-based sorting algorithms. Sorting: Basics. What is a parallel sorted sequence? Where are the input and output lists stored? We assume that the input and output lists are distributed. The sorted list is partitioned with the property that each partitioned list is sorted and each element in processor Pi's list is less than that in Pj's list if i < j. Sorting: Parallel Compare-Exchange Operation. In a parallel compare-exchange operation, processes Pi and Pj send their elements to each other. Process Pi keeps min{ai, aj}, and Pj keeps max{ai, aj}. Sorting: Basics. What is the parallel counterpart to a sequential comparator? If each processor has one element, the compare-exchange operation stores the smaller element at the processor with the smaller id. This can be done in ts + tw time. If we have more than one element per processor, we call this operation a compare-split. Assume each of the two processors has n/p elements. After the compare-split operation, the smaller n/p elements are at processor Pi and the larger n/p elements at Pj, where i < j. -
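The compare-exchange and compare-split operations described in this excerpt can be sketched sequentially. This is a minimal illustration, not code from the slides: the function names are hypothetical, the two "processors" are modelled as plain Python lists, and message passing (the ts + tw cost) is not simulated.

```python
from heapq import merge


def compare_exchange(a_i, a_j):
    """One element per processor: Pi keeps the minimum, Pj the maximum."""
    return min(a_i, a_j), max(a_i, a_j)


def compare_split(block_i, block_j):
    """Several elements per processor.

    Both blocks are assumed to be sorted already. Pi ends up with the
    smaller half of the combined elements and Pj with the larger half.
    """
    combined = list(merge(block_i, block_j))  # linear-time merge of sorted blocks
    k = len(block_i)
    return combined[:k], combined[k:]


if __name__ == "__main__":
    print(compare_exchange(9, 4))
    print(compare_split([2, 5, 9], [1, 4, 7]))
```

In a distributed setting each processor would send its block to the other and keep only its own half of the merge; the sketch collapses that exchange into one function call.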

Longest Increasing Subsequence

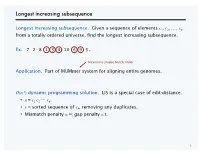

Longest increasing subsequence. Given a sequence of elements c1, c2, …, cn from a totally ordered universe, find the longest increasing subsequence. Ex. 7 2 8 1 3 4 10 6 9 5. Application. Part of the MUMmer system (Maximum Unique Match finder) for aligning entire genomes. O(n^2) dynamic programming solution. LIS is a special case of edit distance: x = c1 c2 ⋯ cn; y = sorted sequence of ck, removing any duplicates; mismatch penalty = ∞; gap penalty = 1. Patience solitaire. Patience. Deal cards c1, c2, …, cn into piles according to two rules: a higher-valued card can't be placed onto a lower-valued card; a card can start a new pile. Goal. Form as few piles as possible. Patience: greedy algorithm. Place each card on the leftmost pile that fits. Observation. At any stage during the greedy algorithm, the top cards of the piles increase from left to right. Patience-LIS: weak duality. In any legal game of patience, the number of piles ≥ length of any increasing subsequence. Pf. Cards within a pile form a decreasing subsequence. Any increasing sequence can use at most one card from each pile. ▪ Patience-LIS: strong duality. Theorem. [Hammersley 1972] Min number of piles = max length of an IS; moreover, the greedy algorithm finds both. Pf. Each card maintains a pointer to the top card in the previous pile at the time of insertion. -
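The greedy patience rule and the back-pointer idea from the strong-duality proof can be sketched as follows. This is an illustrative sketch, not code from the slides: the function name is hypothetical, the cards are assumed to be distinct, and binary search over the pile tops is used to find the leftmost pile that fits.

```python
from bisect import bisect_left


def longest_increasing_subsequence(cards):
    """Greedy patience: returns one longest strictly increasing subsequence."""
    tops = []        # value of the top card of each pile, increasing left to right
    top_index = []   # position in `cards` of each pile's top card
    prev = [None] * len(cards)  # pointer to the top of the previous pile at insertion

    for i, card in enumerate(cards):
        pile = bisect_left(tops, card)  # leftmost pile whose top is >= card
        prev[i] = top_index[pile - 1] if pile > 0 else None
        if pile == len(tops):           # no pile fits: start a new one
            tops.append(card)
            top_index.append(i)
        else:                           # place the card on the existing pile
            tops[pile] = card
            top_index[pile] = i

    # Follow pointers back from the top of the last pile.
    result = []
    i = top_index[-1] if top_index else None
    while i is not None:
        result.append(cards[i])
        i = prev[i]
    return result[::-1]


if __name__ == "__main__":
    print(longest_increasing_subsequence([7, 2, 8, 1, 3, 4, 10, 6, 9, 5]))
```

The number of piles at the end equals the length of the returned subsequence, which is the equality stated in the theorem. The binary search makes the whole pass O(n log n) rather than the O(n^2) of the dynamic programming solution.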

Sorting Algorithm

In computer science, a sorting algorithm is an algorithm that puts elements of a list in a certain order. The most-used orders are numerical order and lexicographical order. Efficient sorting is important for optimizing the use of other algorithms (such as search and merge algorithms) that require sorted lists to work correctly; it is also often useful for canonicalizing data and for producing human-readable output. More formally, the output must satisfy two conditions: 1. The output is in nondecreasing order (each element is no smaller than the previous element according to the desired total order); 2. The output is a permutation, or reordering, of the input. Since the dawn of computing, the sorting problem has attracted a great deal of research, perhaps due to the complexity of solving it efficiently despite its simple, familiar statement. For example, bubble sort was analyzed as early as 1956.[1] Although many consider it a solved problem, useful new sorting algorithms are still being invented (for example, library sort was first published in 2004). Sorting algorithms are prevalent in introductory computer science classes, where the abundance of algorithms for the problem provides a gentle introduction to a variety of core algorithm concepts, such as big O notation, divide and conquer algorithms, data structures, randomized algorithms, best, worst and average case analysis, time-space tradeoffs, and lower bounds. Classification. Sorting algorithms used in computer science are often classified by: • Computational complexity (worst, average and best behaviour) of element comparisons in terms of the size of the list (n). For typical sorting algorithms good behavior is O(n log n) and bad behavior is O(n^2). -
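The two formal conditions on a sorting algorithm's output can be checked directly. The sketch below is only an illustration: the function name is hypothetical, and it assumes the elements are hashable and comparable with `<=`.

```python
from collections import Counter


def is_valid_sort(original, output):
    """Check the two conditions: nondecreasing order and permutation of the input."""
    # Condition 1: each element is no smaller than the previous one.
    nondecreasing = all(a <= b for a, b in zip(output, output[1:]))
    # Condition 2: the output holds exactly the same elements, with multiplicity.
    is_permutation = Counter(original) == Counter(output)
    return nondecreasing and is_permutation


if __name__ == "__main__":
    data = [3, 1, 2, 3]
    print(is_valid_sort(data, sorted(data)))   # ordered and a permutation
    print(is_valid_sort(data, [1, 2, 3]))      # ordered, but an element is missing
```

Both conditions matter: an output that is merely ordered could have dropped or duplicated elements, which the multiset comparison catches.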


A Verified Timsort C Implementation in Isabelle/HOL (arXiv:1812.03318v1 [cs.SE], 8 Dec 2018)

A Verified Timsort C Implementation in Isabelle/HOL. Yu Zhang1, Yongwang Zhao1, and David Sanan2. 1 School of Computer Science and Engineering, Beihang University, Beijing, China. 2 School of Computer Science and Engineering, Nanyang Technological University, Singapore. Abstract. Formal verification of traditional algorithms is of great significance due to their wide application in state-of-the-art software. Timsort is a complicated, hybrid, stable sorting algorithm derived from merge sort and insertion sort. Although the Timsort implementation in OpenJDK has been formally verified, there is still no standard, formally verified Timsort implementation in the C programming language. This paper studies a Timsort implementation and its formal verification using a generic imperative language, Simpl, in Isabelle/HOL. Then, we manually generate a C implementation of Timsort from the verified Simpl specification. Due to the C-like concrete syntax of Simpl, the code generation is straightforward. The C implementation has also been tested by a set of random test cases. Keywords: Program Verification · Timsort · Isabelle/HOL. 1 Introduction. Formal verification has been considered a promising route to the reliability of programs. With the development of verification tools, it has become possible in recent years to perform fully formal verification of large and complex programs [2,3]. Formal verification of traditional algorithms is of great significance due to their wide application in state-of-the-art software. The goal of this paper is the functional verification of sorting algorithms as well as the generation of C source code. We investigated the Timsort algorithm, which is a hybrid stable sorting algorithm, derived from merge sort and insertion sort, designed to work well on many kinds of real-world data. -

How to Sort Out Your Life in O(n) Time

How to sort out your life in O(n) time. Karel Čížek, @kaja47, funkcionalne.cz. I said, "Kiss me, you're beautiful - These are truly the last days" Godspeed You! Black Emperor, The Dead Flag Blues. Everyone, deep in their hearts, is waiting for the end of the world to come. Haruki Murakami, 1Q84 ... Free lunch 1965 – 2022. Cramming More Components onto Integrated Circuits http://www.cs.utexas.edu/~fussell/courses/cs352h/papers/moore.pdf He pays his staff in junk. William S. Burroughs, Naked Lunch. Sorting? quicksort and chill. HS 1964, QS 1959, MS 1945, RS 1887. quicksort, mergesort, heapsort, radix sort, multi-way merge sort, samplesort, insertion sort, selection sort, library sort, counting sort, bucketsort, bitonic merge sort, Batcher odd-even sort, odd–even transposition sort, radix quick sort, radix merge sort*, burst sort. binary search tree, B-tree, R-tree, VP tree, trie, log-structured merge tree, skip list, YOLO tree* vs. hashing. Robin Hood hashing https://cs.uwaterloo.ca/research/tr/1986/CS-86-14.pdf xs.sorted.take(k) (take (sort xs) k) qsort(lotOfIntegers) It may be the wrong decision, but fuck it, it's mine. (Mark Z. Danielewski, House of Leaves) I tell you, my man, this is the American Dream in action! We’d be fools not to ride this strange torpedo all the way out to the end. (HST, FALILV) Linear time sorting? I owe the discovery of Uqbar to the conjunction of a mirror and an Encyclopedia. (Jorge Luis Borges, Tlön, Uqbar, Orbis Tertius) Sorting out graph processing https://github.com/frankmcsherry/blog/blob/master/posts/2015-08-15.md Radix Sort Revisited http://www.codercorner.com/RadixSortRevisited.htm Sketchy radix sort https://github.com/kaja47/sketches (thinking|drinking|WTF)* I know they accuse me of arrogance, and perhaps misanthropy, and perhaps of madness. -

GSoC 2018 Project Proposal

GSoC 2018 Project Proposal. Description: Implement the project idea "sorting algorithms benchmark and implementation (2018)". Applicant Information: Name: Kefan Yang. Country of Residence: Canada. University: Simon Fraser University. Year of Study: Third year. Major: Computing Science. Self Introduction: I am Kefan Yang, a third-year computing science student from Simon Fraser University, Canada. I have rich experience as a full-stack web developer, so I am familiar with different kinds of databases, such as PostgreSQL, MySQL and MongoDB. My understanding of database systems goes well beyond how to use them. In the database course I took at the university, I implemented a simple SQL database. It supports basic SQL statements like select, insert, delete and update, and uses a B+ tree to index the records by default. The size of each node in the B+ tree is set to the disk block size to maximize the performance of disk operations. Also, several kinds of merging algorithms are used to perform cross-table queries. More details about this database project can be found here. Additionally, I have a very solid foundation in basic algorithms and data structures. I participated in the Division 1 contest of the 2017 ACM-ICPC Pacific Northwest Regionals as a representative of Simon Fraser University, which shows my ability to understand and apply different kinds of algorithms. I believe the contest experience will be a big help for this project. Benefits to the PostgreSQL Community: The sorting routine is an important part of many modules in PostgreSQL. Currently, PostgreSQL uses the median-of-three quicksort introduced by Bentley and McIlroy in 1993 [1], which is somewhat outdated. -

Sorting Algorithm

A sorting algorithm is an algorithm that puts elements of a list in a certain order. The most-used orders are numerical order and lexicographical order. Efficient sorting is important for optimizing the use of other algorithms (such as search and merge algorithms) which require input data to be in sorted lists; it is also often useful for canonicalizing data and for producing human-readable output. More formally, the output must satisfy two conditions: 1. The output is in nondecreasing order (each element is no smaller than the previous element according to the desired total order); 2. The output is a permutation (reordering) of the input. Since the dawn of computing, the sorting problem has attracted a great deal of research, perhaps due to the complexity of solving it efficiently despite its simple, familiar statement. For example, bubble sort was analyzed as early as 1956.[1] Although many consider it a solved problem, useful new sorting algorithms are still being invented (for example, library sort was first published in 2006). Sorting algorithms are prevalent in introductory computer science classes, where the abundance of algorithms for the problem provides a gentle introduction to a variety of core algorithm concepts, such as big O notation, divide and conquer algorithms, data structures, randomized algorithms, best, worst and average case analysis, time-space tradeoffs, and upper and lower bounds. Classification. Sorting algorithms are often classified by: • Computational complexity (worst, average and best behavior) of element comparisons in terms of the size of the list (n). For typical serial sorting algorithms good behavior is O(n log n), with parallel sort in O(log^2 n), and bad behavior is O(n^2). -

Merge Strategies: from Merge Sort to TimSort (Nicolas Auger, Cyril Nicaud, Carine Pivoteau)

Merge Strategies: from Merge Sort to TimSort. Nicolas Auger, Cyril Nicaud, and Carine Pivoteau. Université Paris-Est, LIGM (UMR 8049), F77454 Marne-la-Vallée, France. To cite this version: Nicolas Auger, Cyril Nicaud, Carine Pivoteau. Merge Strategies: from Merge Sort to TimSort. 2015. hal-01212839v2. HAL Id: hal-01212839, https://hal-upec-upem.archives-ouvertes.fr/hal-01212839v2. Preprint submitted on 9 Dec 2015. Abstract. The introduction of TimSort as the standard algorithm for sorting in Java and Python questions the generally accepted idea that merge algorithms are not competitive for sorting in practice. In an attempt to better understand the TimSort algorithm, we define a framework to study the merging cost of sorting algorithms that rely on merges of monotonic subsequences of the input. We design a simpler yet competitive algorithm in the spirit of TimSort based on the same kind of ideas. As a benefit, our framework allows us to establish the announced running time of TimSort, that is, O(n log n). -
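The idea of sorting by merging monotonic subsequences (runs) of the input can be sketched with a plain natural merge sort. This is not TimSort and not the algorithm proposed in the paper: the function names are hypothetical, and the merge strategy here is the simplest one (merge neighbouring runs pairwise, round after round), whereas TimSort decides which runs to merge using a stack and size rules.

```python
from heapq import merge


def monotonic_runs(seq):
    """Split seq into maximal runs; strictly decreasing runs are reversed."""
    runs, i, n = [], 0, len(seq)
    while i < n:
        j = i + 1
        if j < n and seq[j] < seq[i]:
            # Strictly decreasing run: extend it, then reverse it in place of sorting.
            while j < n and seq[j] < seq[j - 1]:
                j += 1
            runs.append(list(reversed(seq[i:j])))
        else:
            # Nondecreasing run.
            while j < n and seq[j] >= seq[j - 1]:
                j += 1
            runs.append(list(seq[i:j]))
        i = j
    return runs


def natural_merge_sort(seq):
    """Sort by repeatedly merging neighbouring runs until one run remains."""
    runs = monotonic_runs(seq)
    while len(runs) > 1:
        merged = [list(merge(runs[k], runs[k + 1]))
                  for k in range(0, len(runs) - 1, 2)]
        if len(runs) % 2:          # an unpaired last run is carried over
            merged.append(runs[-1])
        runs = merged
    return runs[0] if runs else []


if __name__ == "__main__":
    print(monotonic_runs([1, 2, 5, 4, 3, 6]))
    print(natural_merge_sort([7, 2, 8, 1, 3, 4, 10, 6, 9, 5]))
```

An input that is already sorted forms a single run and needs no merges at all, which is the kind of adaptivity to real-world data that run-based strategies aim for.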

An Efficient Implementation of Batcher's Odd-Even Merge Algorithm and Its Application in Parallel Sorting Schemes

IEEE TRANSACTIONS ON COMPUTERS, VOL. C-32, NO. 3, MARCH 1983, p. 254. An Efficient Implementation of Batcher's Odd-Even Merge Algorithm and Its Application in Parallel Sorting Schemes. MANOJ KUMAR, MEMBER, IEEE, AND DANIEL S. HIRSCHBERG. Abstract: An algorithm is presented to merge two subfiles of size n/2 each, stored in the left and the right halves of a linearly connected processor array, in 3n/2 route steps and log n compare-exchange steps. This algorithm is extended to merge two horizontally adjacent subfiles of size m × n/2 each, stored in an m × n mesh-connected processor array in row-major order, in m + 2n route steps and log mn compare-exchange steps. These algorithms are faster than their counterparts proposed so far. Next, an algorithm is presented to merge two vertically aligned subfiles, stored in a mesh-connected processor array in row-major order. Finally, a sorting scheme is proposed that requires 11n route steps and 2 log^2 n compare-exchange steps to sort n^2 elements stored in an n × n mesh-connected processor array. Introduction (excerpt): ... transmitted from one processor to another only through this interconnection pattern. The processors connected directly by the interconnection pattern will be referred to as neighbors. A processor can communicate with its neighbor with a route instruction which executes in t_R time. The processors also have a compare-exchange instruction which compares the contents of any two of each processor's internal registers and places the smaller of them in a specified register. This instruction executes in t_C time. Illiac IV has a similar architecture [1]. The processor at -
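For orientation, the comparator pattern of Batcher's odd-even merge can be sketched sequentially. This shows only which pairs are compared when merging two sorted halves; it does not model the paper's processor array, its route steps, or its data layout. The function names are hypothetical, and the input length is assumed to be a power of two (at least 2).

```python
def oddeven_merge(lo, hi, r=1):
    """Yield comparator pairs (i, j) that merge the two sorted halves of
    positions lo..hi (inclusive); hi - lo + 1 must be a power of two."""
    step = r * 2
    if step < hi - lo:
        yield from oddeven_merge(lo, hi, step)       # merge even-indexed elements
        yield from oddeven_merge(lo + r, hi, step)   # merge odd-indexed elements
        for i in range(lo + r, hi - r, step):        # final clean-up comparisons
            yield (i, i + r)
    else:
        yield (lo, lo + r)


def merge_halves(values):
    """Apply the comparators as compare-exchange operations on a copy."""
    data = list(values)
    for i, j in oddeven_merge(0, len(data) - 1):
        if data[i] > data[j]:
            data[i], data[j] = data[j], data[i]
    return data


if __name__ == "__main__":
    print(list(oddeven_merge(0, 7)))
    print(merge_halves([1, 4, 6, 9, 2, 3, 7, 8]))
```

For 8 elements the nine comparators can be grouped into three rounds of mutually independent pairs, which corresponds to the log n compare-exchange steps mentioned in the abstract; the route steps counted there come from moving data between neighbouring processors and have no counterpart in this sketch.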

Anagnostou Msc2009.Pdf

Christos Anagnostou, Analysis, Processing and Presentation of the Sorting Algorithms Heapsort and WeakHeapsort. Christos Anagnostou, postgraduate student. Master's thesis. Supervisor: Konstantinos Paparrizos, Professor. Department of Applied Informatics, Postgraduate Specialization Programme, University of Macedonia, Thessaloniki, January 2009. Acknowledgements: I would like to warmly thank my supervisor, Mr. Konstantinos Paparrizos, professor in the Department of Applied Informatics of the University of Macedonia, both for his valuable help during the preparation of this thesis and for his broader guidance. I would also like to thank my family and friends, who helped me throughout this effort. Contents: 1 Abstract; 2 Introduction; 2.1 Subject of the thesis; 2.2 Structure of the thesis