Stock Chart Pattern Recognition with Deep Learning

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Forecasting Direction of Exchange Rate Fluctuations with Two Dimensional Patterns and Currency Strength

FORECASTING DIRECTION OF EXCHANGE RATE FLUCTUATIONS WITH TWO DIMENSIONAL PATTERNS AND CURRENCY STRENGTH A THESIS SUBMITTED TO THE GRADUATE SCHOOL OF NATURAL AND APPLIED SCIENCES OF MIDDLE EAST TECHNICAL UNIVERSITY BY MUSTAFA ONUR ÖZORHAN IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PILOSOPHY IN COMPUTER ENGINEERING MAY 2017 Approval of the thesis: FORECASTING DIRECTION OF EXCHANGE RATE FLUCTUATIONS WITH TWO DIMENSIONAL PATTERNS AND CURRENCY STRENGTH submitted by MUSTAFA ONUR ÖZORHAN in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Computer Engineering Department, Middle East Technical University by, Prof. Dr. Gülbin Dural Ünver _______________ Dean, Graduate School of Natural and Applied Sciences Prof. Dr. Adnan Yazıcı _______________ Head of Department, Computer Engineering Prof. Dr. İsmail Hakkı Toroslu _______________ Supervisor, Computer Engineering Department, METU Examining Committee Members: Prof. Dr. Tolga Can _______________ Computer Engineering Department, METU Prof. Dr. İsmail Hakkı Toroslu _______________ Computer Engineering Department, METU Assoc. Prof. Dr. Cem İyigün _______________ Industrial Engineering Department, METU Assoc. Prof. Dr. Tansel Özyer _______________ Computer Engineering Department, TOBB University of Economics and Technology Assist. Prof. Dr. Murat Özbayoğlu _______________ Computer Engineering Department, TOBB University of Economics and Technology Date: ___24.05.2017___ I hereby declare that all information in this document has been obtained and presented in accordance with academic rules and ethical conduct. I also declare that, as required by these rules and conduct, I have fully cited and referenced all material and results that are not original to this work. Name, Last name: MUSTAFA ONUR ÖZORHAN Signature: iv ABSTRACT FORECASTING DIRECTION OF EXCHANGE RATE FLUCTUATIONS WITH TWO DIMENSIONAL PATTERNS AND CURRENCY STRENGTH Özorhan, Mustafa Onur Ph.D., Department of Computer Engineering Supervisor: Prof. -

Point and Figure Relative Strength Signals

February 2016 Point and Figure Relative Strength Signals JOHN LEWIS / CMT, Dorsey, Wright & Associates Relative Strength, also known as momentum, has been proven to be one of the premier investment factors in use today. Numerous studies by both academics and investment ABOUT US professionals have demonstrated that winning securities continue to outperform. This phenomenon has been found Dorsey, Wright & Associates, a Nasdaq Company, is a in equity markets all over the globe as well as commodity registered investment advisory firm based in Richmond, markets and in asset allocation strategies. Momentum works Virginia. Since 1987, Dorsey Wright has been a leading well within and across markets. advisor to financial professionals on Wall Street and investment managers worldwide. Relative Strength strategies focus on purchasing securities that have already demonstrated the ability to outperform Dorsey Wright offers comprehensive investment research a broad market benchmark or the other securities in the and analysis through their Global Technical Research Platform investment universe. As a result, a momentum strategy and provides research, modeling and indexes that apply requires investors to purchase securities that have already Dorsey Wright’s expertise in Relative Strength to various appreciated quite a bit in price. financial products including exchange-traded funds, mutual funds, UITs, structured products, and separately managed There are many different ways to calculate and quantify accounts. Dorsey Wright’s expertise is technical analysis. The momentum. This is similar to a value strategy. There are Company uses Point and Figure Charting, Relative Strength many different metrics that can be used to determine a Analysis, and numerous other tools to analyze market data security’s value. -

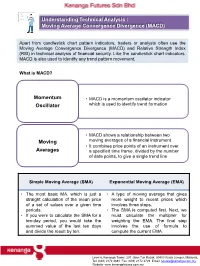

Moving Average Convergence Divergence (MACD)

Understanding Technical Analysis : Moving Average Convergence Divergence (MACD) Apart from candlestick chart pattern indicators, traders or analysts often use the Hi 74.57 Moving Average Convergence Divergence (MACD) and Relative Strength Index Potential supply (RSI) in technical analysis of financial security. Like the candlestickdisruption chart indicators, due to MACD is also used to identify any trend pattern movement. attacks on two oil Brent tankers near Iran What is MACD? WTI Momentum • MACD is a momentum oscillator indicator WTI Oscillator which is used to identify trend formation Lo 2,237.40 (24 Mar 2020) • MACD shows a relationship betweenLo 18,591.93 two (24 Mar 2020) Moving moving averages of a financial instrument. • It combines price points of an instrument over Averages a specified time frame, divided by the number of data points, to give a single trend line Simple Moving Average (SMA) Exponential Moving Average (EMA) • The most basic MA, which is just a • A type of moving average that gives straight calculation of the mean price more weight to recent prices which of a set of values over a given time involves three steps. periods. • The SMA is computed first. Next, we • If you were to calculate the SMA for a must calculate the multiplier for ten-day period, you would take the weighting the EMA. The final step summed value of the last ten days involves the use of formula to and divide the result by ten. compute the current EMA. Level 6, Kenanga Tower, 237 Jalan Tun Razak, 50400 Kuala Lumpur, Malaysia. Tel: (603) 2172 3888 Fax: (603) 2172 2729 Email: [email protected] Website: www.kenangafutures.com.my How MACD works? MACD is generated by subtracting the long term EMAs (26 period) from the short term EMAs (12 periods) to form the main line known as MACD line. -

Candlesticks, Fibonacci, and Chart Pattern Trading Tools

ffirs.qxd 6/17/03 8:17 AM Page iii CANDLESTICKS, FIBONACCI, AND CHART PATTERN TRADING TOOLS A SYNERGISTIC STRATEGY TO ENHANCE PROFITS AND REDUCE RISK ROBERT FISCHER JENS FISCHER JOHN WILEY & SONS, INC. ffirs.qxd 6/17/03 8:17 AM Page iii ffirs.qxd 6/17/03 8:17 AM Page i CANDLESTICKS, FIBONACCI, AND CHART PATTERN TRADING TOOLS ffirs.qxd 6/17/03 8:17 AM Page ii Founded in 1870, John Wiley & Sons is the oldest independent publishing company in the United States. With offices in North America, Europe, Australia, and Asia, Wiley is globally committed to developing and market- ing print and electronic products and services for our customers’ professional and personal knowledge and understanding. The Wiley Trading series features books by traders who have survived the market’s ever-changing temperament and have prospered—some by re- investing systems, others by getting back to basics. Whether a novice trader, professional, or somewhere in-between, these books will provide the advice and strategies needed to prosper today and well into the future. For a list of available titles, visit our web site at www.WileyFinance.com. ffirs.qxd 6/17/03 8:17 AM Page iii CANDLESTICKS, FIBONACCI, AND CHART PATTERN TRADING TOOLS A SYNERGISTIC STRATEGY TO ENHANCE PROFITS AND REDUCE RISK ROBERT FISCHER JENS FISCHER JOHN WILEY & SONS, INC. ffirs.qxd 6/17/03 8:17 AM Page iv Copyright © 2003 by Robert Fischer, Dr. Jens Fischer. All rights reserved. Published by John Wiley & Sons, Inc., Hoboken, New Jersey. Published simultaneously in Canada. PHI-spirals, PHI-ellipse, PHI-channel, and www.fibotrader.com are registered trademarks and protected by U.S. -

Accumulation/Distribution Line -- Chart School

Accumulation/Distribution Line -- Chart School Chart School Accumulation/Distribution Line Introduction - Volume and the Flow of Money There are many indicators available to measure volume and the flow of money for a particular stock, index or security. One of the most popular volume indicators over the years has been the Accumulation/Distribution Line. The basic premise behind volume indicators, including the Accumulation/Distribution Line, is that volume precedes price. Volume reflects the amount of shares traded in a particular stock and is a direct reflection of the money flowing into and out of a stock. Many times before a stock advances, there will be period of increased volume just prior to the move. Most volume or money flow indicators are designed to identify early increases in positive or negative volume flow to gain an edge before the price moves. (Note: the terms "money flow" and "volume flow" are essentially interchangeable.) Methodology (Click here to see a live example of the Acc/Dist Line) The Accumulation/Distribution Line was developed by Marc Chaikin to assess the cumulative flow of money into and out of a security. In order to fully appreciate the methodology behind the Accumulation/Distribution Line, it may be helpful to examine one of the earliest volume indicators and see how it compares. In 1963, Joe Granville developed On Balance Volume (OBV), which was one of the earliest and most popular indicators to measure positive and negative volume flow. OBV is a relatively simple indicator that adds the corresponding period's volume when the close is up and subtracts it when the close is down. -

Profiting with Chart Patterns.Book

Profiting with Chart Patterns by Ed Downs Profiting with Chart Patterns November 2016 Edition BK-02-01-01 Support Worldwide Technical Support and Product Information www.nirvanasystems.com Nirvana Systems Corporate Headquarters 9111Jollyville Rd, Suite 275, Austin, Texas 78759 USA Tel: 512 345 2545 Fax: 512 345 4225 Sales Information For product information or to place an order, please contact 800 880 0338 or 512 345 2566. You may also fax 512 345 4225 or send email to [email protected]. Technical Support Information For assistance in installing or using Nirvana products, please contact 512 345 2592. You may also fax 512 345 4225 or send email to [email protected]. To comment on the documentation, send email to [email protected]. © 2016 Nirvana Systems Inc. All rights reserved. Contents Chapter 1 Confirming Entries & Managing Exits What is a Pattern? ..........................................................................................................1-1 Psychology of Support Bounces ....................................................................................1-2 Goals of Chart Pattern Analysis.....................................................................................1-3 Chapter 2 Tools of the Trade 7 Chart Pattern Method..................................................................................................2-1 Pattern Timeframe .........................................................................................................2-2 Prior Move Test .............................................................................................................2-3 -

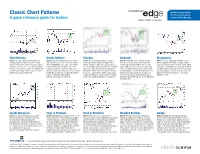

Classic Chart Patterns Chart Pattern Recognition a Quick Reference Guide for Traders Tools Provided by Recognia

StreetSmart Edge features Classic Chart Patterns Chart Pattern Recognition A quick reference guide for traders tools provided by Recognia. www.schwab.com/ssedge Triple Bottoms Double Bottoms Triangles Diamonds Megaphones Bullish: The Triple Bottom starts with prices Bullish: This pattern marks the reversal of a Bullish: Two converging trendlines as prices Bullish or Bearish: These patterns usually Bullish: The rare Megaphone Bottom—a.k.a. moving downward, followed by three sharp prior downtrend. The price forms two distinct reach lower/stable highs and higher lows. form over several months and volume will Broadening Pattern—can be recognized by its lows, all at about the same price level. Volume lows at roughly the same price level. Volume Volume diminishes and price swings between remain high during formation. Prices create successively higher highs and lower lows, which diminishes at each successive low and finally reflects weakening of downward pressure, an increasingly narrow range. Before the triangle higher highs and lower lows in a broadening form after a downward move. The bullish pattern bursts as the price rises above the highest high, tending to diminish as it forms, with some reaches its apex, the price breaks out above pattern, then the trading range narrows after is confirmed when, usually on the third upswing, confirming as a sign of bullish price reversal. pickup at each low and less on the second low. the upper trendline with a noticeable increase peaking highs and uptrending lows trend. The prices break above the prior high but fail to fall Bearish Counterpart: Triple Top. Finally the price breaks out above the highest in volume, confirming the bullish continuation breakout direction signals the resolution to below this level again. -

Power of Chart Patterns 12Th February, 2019 Chart Patterns: Chart Patterns Are Used As Either Reversal Or Continuation Signals

Power of Chart Patterns 12th February, 2019 Chart Patterns: Chart patterns are used as either reversal or continuation signals. There are 3 main types of chart pattern which are currently used by technical analysts. Traditional chart pattern, Harmonic pattern & Candlestick pattern. Chart patterns provide a framework to analyze the battle raging between bulls and bears. More importantly, chart patterns and technical analysis can help determine who is winning the battle, allowing traders and investors to position themselves accordingly. Use Trend lines, view higher time frames and closings to initiate positional trades. Source –Own observations and findings Focus – Traditional Chart Patterns • Continuation Patterns • Cup with Handle • Flag, Pennant, Price Channel • Symmetrical Triangle • Ascending Triangle • Descending Triangle • Rectangle Source - Own observations and findings Focus – Traditional Chart Patterns • Reversal Patterns • Falling Wedge • Rising Wedge • Head and Shoulders Top • Head and Shoulders Bottom • Rounding Bottom • Double Top Reversal • Double Bottom Reversal • Triple Top Reversal • Triple Bottom Reversal Source - Own observations and findings 200.20 Falling wedge- bullish HINDALCO [N1363] 200.20, -2.77% 0.00 Price Lnr IRIS 02/09/16 Fri Op 155.00 160 Hi 164.70 Lo 154.50 155 Cl 157.25 150 145 140 135 130 125 120 115 28 110 105 100 95.00 90.00 85.00 8 80.00 75.00 70.00 1 2 65.00 Source : www.SpiderSoftwareIndia.Com 2 60.00 Vol Cr 10.00 Qt 4.37 5.00 1.24 RSI(14,E,9) 1 2 80.00 Rs 77.62 Rs 75.23 60.00 40.00 15:M A M J J A S -

Forex Investement and Security

Investment and Securities Trading Simulation An Interactive Qualifying Project Report submitted to the Faculty of WORCESTER POLYTECHNIC INSTITUTE in partial fulfillment of the requirements for the Degree of Bachelor of Science by Jean Friend Diego Lugo Greg Mannke Date: May 1, 2011 Approved: Professor Hossein Hakim Abstract: Investing in the Foreign Exchange market, also known as the FOREX market, is extremely risky. Due to a high amount of people trying to invest in currency movements, just one unwatched position can result in a completely wiped out bank account. In order to prevent the loss of funds, a trading plan must be followed in order to gain a maximum profit in the market. This project complies a series of steps to become a successful FOREX trader, including setting stop losses, using indicators, and other types of research. 1 Acknowledgement: We would like to thank Hakim Hossein, Professor, Electrical & Computer Engineering Department, Worcester Polytechnic Institute for his guidance throughout the course of this project and his contributions to this project. 2 Table of Contents 1 Introduction .............................................................................................................................. 6 1.1 Introduction ....................................................................................................................... 6 1.2 Project Description ............................................................................................................. 9 2 Background .................................................................................................................................. -

Fortune-Forcaster-Book.Pdf

CONTENTS Chapter 1 | Synopsis of Course Book Material Chapter 2 | Stock Analysis Technicals vs Fundamentals The Importance of Time Frames Chapter 3 | Stock Charting and Trade Execution Basics Chart Types Candlesticks Chart Indicators/Overlays Technical Overlays Indicators Order Types Chapter 4 | Chart Patterns Support and Resistance Trendlines Cup with Handle Flags/Tightening Patterns Head and Shoulders Flat Top Breakout Flat Bottom Breakdown Chapter 5 | Styles of Trading Position Trading Swing Trading Day Trading Scalp Trading What Style Are You? Chapter 6 | Trading Rules F O R T U N E F O R C A S T E R | S T O C K M A R K E T S I D E H U S T L E | K Y L E D E N N I S Chapter 7 | Evaluating Market Sentiment Russell 2000 Dow Jones Industrial Average NASDAQ 100 S&P 500 VIX, etc Evaluating the Market Environment Chapter 8 | Swing Trading Strategies Breakouts/Breakdown Strategy Fundamental Trend Strategy Rubber Band Strategy Trading Patterns with a Biotech Focus (Kyle Dennis) Simplifying Charting to Perfect Entry Points Identifying Breakout Trades Reliable Chart Patterns (Fibonacci Retracements and Double Bottom Reversals) Chapter 9 | Day Trading Strategies Opening Range Breakout Strategy Double Bottom Strategy Red to Green Strategy VWAP Strategy Options Sweep Strategy Chapter 10 | Managing Risk Planning Your Trades Stop-Loss and Take-Profit Points How to Use Stop-Loss Points 2% Rule Trim and Trail Chapter 11 | Introduction to Options Chapter 12 | Utilizing Level 2 to Improve Your Entries -

Bullish Pattern Created by Connecting Two Or More Lows, with Each Successive Low Higher Than the Previous Low

1 Account Value: The account value is how much one’s account is worth. ADP Non Farm Employment Change: This U.S. report is a measure of non-farm private employment. It was developed in order to help meet the need for timely and accurate estimates of short-term movements in the labor market. Analyst: When analyzing the market, analysts can generally be divided into two camps – fundamentals and technicals. Fundamental analysts are those who mainly look at the fundamental aspects of an economy in forming their opinions. They stay on top of the markets by reading and analyzing what the current economic data say about current market conditions, what is fundamentally driving the market, and where it’s headed. Technical analysts are those who primarily rely on chart indicators and patterns to help predict where price will move next. Some tools that technical analysts use are Fibonacci retracement, candlesticks and momentum indicators. Ascending Trend Channel: An ascending trend channel is a basic chart pattern used in technical analysis. Ascending trend channels are a useful tool due to their ability to predict overall changes in trend. As long as prices remain within the ascending trend channel, the upward trend in price can be expected to continue. As soon as prices exceed either trendline forming the channel, however, a strong signal either to buy or to sell is generated. A break through the upper trendline generates a strong buy signal, while a break through the lower trendline generates a strong sell signal. Ascending Trend Line: A bullish pattern created by connecting two or more lows, with each successive low higher than the previous low. -

Simple Moving Average Weighted Moving Average

Essential Technical 5Indicators to Master Mastering the basics of technical indicators Technical indicators can appear arcane, opaque and difficult to understand. But it needn’t be this way and once mastered they are indispensable tools for traders. Whilst the construction of indicators and oscillators is often complex, applying them and understanding how to use them to generate valuable trading signals is a lot more straightforward. In this short guide we will examine five different indicators and explain how to use them. Indicators can generally be divided into two camps – trend following and momentum. Trend following indicators tend to lag price action. Momentum measures the rate that prices change and is said to lead price action. The first two indicators in this guide are trend-following in nature. These are the different moving averages and Bollinger Bands. Our final two indicators – the RSI and Stochastics – are momentum indicators. The exception and one that we deal with third is the MACD, or moving average convergence divergence, which combines aspects of trend-following and momentum to produce one of the most popular and valuable technical indicators. 1 Moving Averages A moving average is one of the simplest and most popular indicators that traders use. The aim is to filter out ‘noise’ by smoothing short-term fluctuations in price action. A moving average is a lagging indicator – it is good at showing a trend but signals tend to be given after a change in the price action. There are 3 major types of moving average - the Simple or Arithmetic, the Weighted and the Exponential.