A High-Bandwidth, Low-Latency System for Anonymous Broadcasting by Zachary James Newman B.S., Columbia University (2014)

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

1944-01-21.Pdf

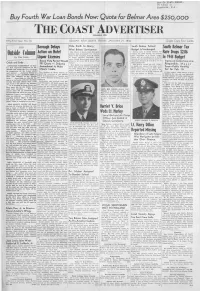

Mon Co Hist. Assoc. The Coast Advertiser (Established 1892). Fifty-First Year, No. 36. BELMAR, NEW JERSEY, FRIDAY, JANUARY 21, 1944. Single Copy Four Cents. Buy Fourth War Loan Bonds Now: Quota for Belmar Area $250,000.
Borough Delays Action on Hotel Liquor Licenses: Buena Vista Permit Would Fill Quota; Debates Amendment to Make Minors Liable.
Viola Smith to Marry West Belmar Serviceman: Mrs. David G. Smith, 518% Sixteenth avenue, has announced the engagement of her daughter, Viola M. Smith, to Louis Leonard Warwick, chief mate, United States navy, son of Mrs. Grace A. Warwick, 125 H street, West Belmar. Miss Smith is a graduate of Asbury Park high school and is employed at the New Jersey Bell Telephone com
South Belmar School Budget Is Unchanged: School costs in South Belmar will remain the same during the ensuing year, according to a school budget which will be considered at a public hearing by the South Belmar board of education January 28 at 7:30 p. m. at the borough hall. The district school tax will remain at $9,000, the amount last year, with $5,000 estimated revenue from state funds and a balance on hand at the
South Belmar Tax Rate Drops $2.06 In 1944 Budget: Improved Collections Are Responsible, Mayor Says; Public Hearing Set for Feb.
Odds and Ends: OFFSHORE FISHERMEN are hoping that improved conditions in the Atlantic will enable the Navy to cease

Bob White Lodge 87 Centennial History Book

Bob White Lodge 87 Centennial History Book. In Honor of a Century of Service, 1915-2015. A brief history of the oldest Lodge in the Deep South, serving sixteen counties in Georgia and South Carolina for the Georgia-Carolina Council. By Reed Powell. Bob White Lodge 87, Established 1936. Contents: The History of the Order; Lodge History; The Oldest Lodge in the Deep South; The Legend of the Cabin; Past Lodge Chiefs; Patches; Lodge Awards; Author’s Note. The History of the Order: Seeking to establish a society for the recognition of the most meritorious campers, Carroll A. Edson and E. Urner Goodman (below) founded the Order of the Arrow at Treasure Island Scout Camp in Pennsylvania in 1915. Goodman stated that the cheerful service of Billy Clark led him to create the Order of the Arrow. Billy was helping in the medical tent at camp. As he carried the bed pans full of human waste, he fell and the bed pans emptied on him. Demonstrating a cheerful spirit, Billy started laughing at his predicament. Goodman knew that Scouts who equally demonstrated the Scouting Spirit should be recognized. It was originally called Wimachtendienk or Brotherhood. According to Ken Davis’s Brotherhood of Cheerful Service: A History of the Order of the Arrow, the Order’s ceremonies and rituals were influenced by Christian beliefs, college fraternities, the Lenni Lenape tribe, and freemasonry. From 1915 to 1934, the Order was recognized as an experimental program by the Boy Scouts of America. The National Council adopted the Order as an official part of the BSA program in 1934.

FLUVANNA COUNTY PLANNING COMMISSION WORK SESSION and REGULAR MEETING MINUTES CIRCUIT COURT ROOM—FLUVANNA COUNTY COURTS BUILDING 6:00 P.M

FLUVANNA COUNTY PLANNING COMMISSION WORK SESSION AND REGULAR MEETING MINUTES CIRCUIT COURT ROOM—FLUVANNA COUNTY COURTS BUILDING 6:00 p.m. Work Session 7:00 p.m. Regular Meeting April 10, 2018 MEMBERS PRESENT: Barry Bibb, Chairman Ed Zimmer, Vice Chairman Lewis Johnson Sue Cotellessa Howard Lagomarsino Patricia Eager, Board of Supervisors Representative ALSO PRESENT: Jason Stewart, Planning and Zoning Administrator Brad Robinson, Senior Planner James Newman, Planner Fred Payne, County Attorney Stephanie Keuther, Senior Program Support Assistant Absent: None Open the Work Session: (Mr. Barry Bibb, Chairman) Pledge of Allegiance, Moment of Silence Director Comments: None Public Comments: None Work Session: ZTA – Density Updates – Presented by James Newman, Planner James Newman: gave a brief presentation showing different amounts of housing density. Zimmer: Is 1 unit per 2 acres a by-right density? Newman: In A-1 you have to have at least two acres per dwelling unit Eager: And that’s with road frontage on a state road? Newman: Yes. Payne: The density is one unit per two acres even though the lot size may be different. The difference is, where I live in Two Rivers the density is less than one unit per two acres even though the lots are about ¾ of an acre, because it’s a cluster subdivision. For the one that was just shown the lot size is two acres and the density is two acres. But you could have it be a quarter of that and still have the rest as an open space and still be at a density of two acres even though the lot sizes are small. -

James Newman, Zoning Administrator DATE

MEMORANDUM TO: Chairman Gantt and Planning Commissioners FROM: James Newman, Zoning Administrator DATE: August 7, 2019 for the August 14, 2019 meeting RE: SUP 2019-01 Catholic Student Center requests a Special Use Permit (SUP) for a religious institution (a rectory) at 1604 College Avenue/ GPIN 7779-64-4797 in a Residential-4 Zoning District (R4). This is an amendment to approved SUP 99-07 for a school/church facility at 1614 College Avenue/GPIN. ISSUE Should the Planning Commission recommend approval of the proposed special use permit for a religious institution? 1604 College Avenue highlighted in red 2 RECOMMENDATION Recommend to the City Council approval of the SUP subject to the following conditions: 1. The rectory shall be used by a priest who works with Catholic ministry associated with the University of Mary Washington. Housing in the rectory shall be solely for the priest. 2. The property shall be developed in substantial accordance with the Generalized Development Plan, entitled “Special Use Permit Generalized Development Plan for Catholic Student Center,” by Legacy Engineering dated July 25, 2019 3. GPIN parcel 7779-64-4797 shall remain a separate subdivided residential lot or parcel of land, and shall not be consolidated with the other parcel(s) on which this use is conducted. 4. The landowner shall not expand the existing residential building. 5. The front yard of this corner lot shall not be paved, and will be left as open space, except as shown on the GDP. 6. The 1604 College Avenue property may be used for small group activities or meetings with up to twenty participants (not including the resident of the home), but group activities or meetings with over twenty participants shall not take place on this property. -

The Felix Archive

KEEP THE CAT FREE. Founded 1949. [email protected] Felixonline.co.uk. THE 2021 FELIX SEX SURVEY IS NOW OPEN: Fill it out here. Felix, ISSUE 1772, FRIDAY 21ST MAY 2021. Union Council vote not to condemn Shell exhibit.
COUNCIL VOTES NOT TO CONDEMN SHELL SCIENCE MUSEUM GREENWASHING. By Calum Drysdale. The Union Council voted last week against raising even the mildest objection to the funding of an exhibition on carbon capture technology at the Science Museum by Shell, the fossil fuel giant. The Council voted last Tuesday by a margin of 48 to 21 with 31 abstentions against a paper proposed by Ansh Bhatnagar, a physics masters student and long term campaigner for Imperial to divest from fossil fuel investment. The paper would have required the sabbatical officers, students taking a year out of their studies to work in paid leadership positions in the Union, to write a letter to the Science Museum “Expressing the urgency of the climate crisis and the need for all of us to step up and take action” as well as “Expressing the Union’s disapproval of Shell’s sponsorship of this exhibition”.
FELIX EXCLUSIVE: Meritocracy is a myth - Engineering department. Calum Drysdale, Editor in Chief. The Engineering Department has issued a course on microaggressions that it says is recommended to all staff and students. The course is 15 minutes long and covers what microaggressions and microaffirmations are as well as giving examples of harmful behaviours. The course was reported on in The Telegraph which described it as “a list of phrases to avoid on campus”. The Telegraph also quoted Toby Young, the general secretary of the Free Speech Union, who said that “protec-
EUROVISION 2021 VIEWING GUIDE: DON’T MISS OUR 6 PAGE PULLOUT WITH ALL THE ACTS, A SWEEPSTAKE AND

Changes for the Betterment of the Fraternity Alabama Alpha Determined to Be the Best

The Psi (Ψ), Phi Kappa Psi, The University of Alabama, Spring 2011. Inside: Alumnus Spotlight ~ p. 2; Thank You for Your Support ~ p. 3; New Initiates ~ p. 3.
Alabama Alpha Granted One More Year in Chapter House • Chapter Looks Forward to Upcoming Football Season • Thank You, Generous Alumni, for Contributing to the Sustaining Membership Program
Since my last report, the timeline has become clearer for our chapter house relocation to the Alpha Tau Omega house. The ATΩs will begin occupying their house this fall. Zeta Beta Taus will also begin a year-long renovation of their house this summer. Due to this the University gave us the option to either move to the ATΩ house this fall and let ZBT occupy our current house for one year, or ZBT would move to the ATΩ house for one year, and we could remain in our current location for one more academic year then move into the ATΩ house in the fall of 2012. We chose the latter option. Delaying our move one year will give us more time to
Finally, I want to thank the many brothers who generously support us financially, even during these difficult economic times in our country. Alabama Alpha’s Sustaining Membership Program is our annual contribution campaign. The money raised through this campaign helps publish this newsletter, provides funds for ongoing maintenance to our facility, and supplements the programming offered by the undergraduate chapter. The Sustaining Membership Program raised $12,750 in 2010 from 39 generous brothers. These gifts are acknowledged on page 3 in The Psi, but thank you, again, for your support.

Redox DAS Artist List for Period

Redox D.A.S. Artist List for period: 01.10.2020 - 31.10.2020
Date, time: Title: Artist: min:sec
01.10.2020 00:01:07 A WALK IN THE PARK NICK STRAKER BAND 00:03:44
01.10.2020 00:04:58 GEORGY GIRL BOBBY VINTON 00:02:13
01.10.2020 00:07:11 BOOGIE WOOGIE DANCIN SHOES CLAUDIAMAXI BARRY 00:04:52
01.10.2020 00:12:03 GLEJ LJUBEZEN KINGSTON 00:03:45
01.10.2020 00:15:46 CUBA GIBSON BROTHERS 00:07:15
01.10.2020 00:22:59 BAD GIRLS RADIORAMA 00:04:18
01.10.2020 00:27:17 ČE NE BOŠ PROBU NIPKE 00:02:56
01.10.2020 00:30:14 TO LETO BO MOJE MAX FEAT JAN PLESTENJAK IN EVA BOTO 00:03:56
01.10.2020 00:34:08 I WILL FOLLOW YOU BOYS NEXT DOOR 00:04:34
01.10.2020 00:38:37 FEELS CALVIN HARRIS FEAT PHARRELL WILLIAMS AND KATY PERRY AND BIG 00:03:40
01.10.2020 00:42:18 TATTOO BIG FOOT MAMA 00:05:21
01.10.2020 00:47:39 WHEN SANDRO SMILES JANETTE CRISCUOLI 00:03:16
01.10.2020 00:50:56 LITER CVIČKA MIRAN RUDAN 00:03:03
01.10.2020 00:54:00 CARELESS WHISPER WHAM FEAT GEORGE MICHAEL 00:04:53
01.10.2020 00:58:49 WATERMELON SUGAR HARRY STYLES 00:02:52
01.10.2020 01:01:41 ŠE IMAM TE RAD NUDE 00:03:56
01.10.2020 03:21:24 NO ORDINARY WORLD JOE COCKER 00:03:44
01.10.2020 03:25:07 VARAJ ME VARAJ SANJA GROHAR 00:02:44
01.10.2020 03:27:51 I LOVE YOU YOU LOVE ME ANTHONY QUINN 00:02:32
01.10.2020 03:30:22 KO LISTJE ODPADLO BO MIRAN RUDAN 00:03:02
01.10.2020 03:33:24 POROPOMPERO CRYSTAL GRASS 00:04:10
01.10.2020 03:37:31 MOJE ORGLICE JANKO ROPRET 00:03:22
01.10.2020 03:41:01 WARRIOR RADIORAMA 00:04:15
01.10.2020 03:45:16 LUNA POWER DANCERS 00:03:36
01.10.2020 03:48:52 HANDS UP /

2021 Country Profiles

Eurovision Obsession Presents: ESC 2021 Country Profiles Albania Competing Broadcaster: Radio Televizioni Shqiptar (RTSh) Debut: 2004 Best Finish: 4th place (2012) Number of Entries: 17 Worst Finish: 17th place (2008, 2009, 2015) A Brief History: Albania has had moderate success in the Contest, qualifying for the Final more often than not, but ultimately not placing well. Albania achieved its highest ever placing, 4th, in Baku with Suus . Song Title: Karma Performing Artist: Anxhela Peristeri Composer(s): Kledi Bahiti Lyricist(s): Olti Curri About the Performing Artist: Peristeri's music career started in 2001 after her participation in Miss Albania . She is no stranger to competition, winning the celebrity singing competition Your Face Sounds Familiar and often placed well at Kënga Magjike (Magic Song) including a win in 2017. Semi-Final 2, Running Order 11 Grand Final Running Order 02 Australia Competing Broadcaster: Special Broadcasting Service (SBS) Debut: 2015 Best Finish: 2nd place (2016) Number of Entries: 6 Worst Finish: 20th place (2018) A Brief History: Australia made its debut in 2015 as a special guest marking the Contest's 60th Anniversary and over 30 years of SBS broadcasting ESC. It has since been one of the most successful countries, qualifying each year and earning four Top Ten finishes. Song Title: Technicolour Performing Artist: Montaigne [Jess Cerro] Composer(s): Jess Cerro, Dave Hammer Lyricist(s): Jess Cerro, Dave Hammer About the Performing Artist: Montaigne has built a reputation across her native Australia as a stunning performer, unique songwriter, and musical experimenter. She has released three albums to critical and commercial success; she performs across Australia at various music and art festivals. -

Our Lady of Grace Roman Catholic Church !

OUR LADY OF GRACE ROMAN CATHOLIC CHURCH, 100-05 159TH AVENUE, HOWARD BEACH, NY 11414. Rev. Marc Swartvagher, Pastor. Rev. Mr. Alexander Breviario, Parish Deacon. May 5, 2019, Third Sunday of Easter.
MASS SCHEDULE: Monday to Saturday at 8:30 a.m.; Saturday Vigil at 5:00 p.m.; Sunday at 8:00 a.m., 10:00 a.m., 12 Noon & 6:00 p.m.
PARISH OFFICE: 100-05 159th Avenue, Howard Beach, NY 11414. (718) 843-6218, fax (718) 738-8208. Monday to Friday 9:30 a.m. - 5:00 p.m. www.olghb.org [email protected]
OUR LADY OF GRACE CATHOLIC ACADEMY: Ms. Marybeth McManus, Principal. 158-20 101st Street, Howard Beach, NY 11414. (718) 848-7440. www.AMCAhb.org [email protected]
OFFICE OF RELIGIOUS EDUCATION: Mrs. Jeanne Antonino, Director. 158-20 101st Street, Howard Beach, NY 11414. (718) 835-2165. www.olghb.org/education [email protected]
MASS INTENTIONS
Sunday, May 5, 2019, Third Sunday of Easter. Acts 5:27-32, 40b-41/Ps 30:2, 4, 5-6, 11-12, 13 [2a]/Rv 5:11-14/Jn 21:1-19 or 21:1-14. 5:00 pm Saturday Vigil: Krystyna Zielinski, By Mary and Karen Francesquini; Juanita Quintana, By Quintana Family; Alfred Molini, By Eileen and Peter Monteleone; Andrew Ognibene, By Judy,
MASS INTENTIONS (cont’d)
Saturday, May 11, 2019. Acts 9:31-42/Ps 116:12-13, 14-15, 16-17 [12]/Jn 6:60-69. 8:30 am: Salvator Esposito, By Mr. and Mrs. Dimkiolo.
Sunday, May 12, 2019, Fourth Sunday of Easter. Acts 13:14, 43-52/Ps 100:1-2, 3, 5 [3c]/Rv 7:9, 14b-17/Jn 10:27-30. 5:00 pm Saturday: All Living and Deceased Mothers; All Living and Deceased Mothers

Annual Report 2016 History of Asf

ANNUAL REPORT 2016. ASTRONAUT SCHOLARSHIP FOUNDATION.
HISTORY OF ASF. ASF | Created by the Mercury 7 Astronauts. The Astronaut Scholarship Foundation (ASF) was created in 1984 by: the six surviving Mercury 7 astronauts (Scott Carpenter, Gordon Cooper, John Glenn, Walter Schirra, Alan Shepard and Deke Slayton); Betty Grissom (widow of the seventh astronaut, Virgil “Gus” Grissom); William Douglas, M.D. (The Project Mercury flight surgeon); and Henri Landwirth (Orlando businessman and friend). Together they represented a wealth of collective influence which was particularly suited to encouraging university students pursuing scientific excellence. Their mission was to ensure the United States would be the global leader in technology for decades to come. Since that time, astronauts from the Mercury, Gemini, Apollo, Skylab and Space Shuttle programs have also embraced this noble mission. Through their generous service and support, ASF can partner with industry leaders, universities and individual donors to reward the best and brightest university students pursuing degrees in science, technology, engineering and mathematics (STEM) with substantial scholarships. The prestigious Astronaut Scholarship is known nationwide for being among the largest merit-based monetary scholarships awarded
MISSION. To aid the United States in retaining its world leadership in technology and innovation by supporting the very best and brightest scholars in science, technology, engineering and mathematics while commemorating the legacy of America’s pioneering astronauts.

Re-curating the Accident: Speedrunning as Community and Practice

Re-curating the Accident: Speedrunning as Community and Practice. Rainforest Scully-Blaker. A Thesis in The Department of Communication Studies, Presented in Partial Fulfillment of the Requirements for the Degree of Master of Arts (Media Studies) at Concordia University, Montreal, Quebec, Canada. September 2016. © Rainforest Scully-Blaker, 2016. Abstract: This thesis is concerned with speedrunning, the practice of completing a video game as quickly as possible without the use of cheats or cheat devices, as well as the community of players that unite around this sort of play. As video games become increasingly ubiquitous in popular media and culture, the project of accounting for and analysing how people interact with these pieces of software becomes more relevant than ever before. As such, this thesis emerges as an initiatory treatment of a relatively niche segment of game culture that has gone underrepresented in extant game and media scholarship. The text begins by discussing speedrunning's beginnings as a community on SpeedDemosArchive.com. By examining and chronicling the community's growth with the emergence of contemporary content hosting sites like YouTube and Twitch, this thesis presents speedrunning as a collaborative and fast-growing community of practice made up of players who revel in playing games quickly. From there, an analysis of space and speed, both natural and virtual, is undertaken with a view to understanding how speedrunning as a practice relates to games as narrative spaces. Discussions of rule systems in games and within the speedrunning community itself follow.

The Relation of John Newman Edwards and Jesse James Emily Cegielski College of Dupage

ESSAI, Volume 16, Article 10, Spring 2018. The Obituary of a Folklore: The Relation of John Newman Edwards and Jesse James. Emily Cegielski, College of DuPage. Follow this and additional works at: https://dc.cod.edu/essai Recommended Citation: Cegielski, Emily (2018) "The Obituary of a Folklore: The Relation of John Newman Edwards and Jesse James," ESSAI: Vol. 16, Article 10. Available at: https://dc.cod.edu/essai/vol16/iss1/10 This Selection is brought to you for free and open access by the College Publications at DigitalCommons@COD. It has been accepted for inclusion in ESSAI by an authorized editor of DigitalCommons@COD. For more information, please contact [email protected]. The Obituary of a Folklore: The Relation of John Newman Edwards and Jesse James, by Emily Cegielski (English 1102). On April 3, 1882, Jesse James, the famous American outlaw, was murdered. Jesse James was a classic Robin Hood figure. A swashbuckling robber supposedly stealing from the rich and giving to the poor, he was a notable part of American folklore during the 19th century. James’ obituary was written by John Newman Edwards, an editor for the Kansas City Times during 1872-1882, who also wrote other articles for the historical bandit. Edwards writes in the obituary in 1882, “No one among all the hired cowards... dared face this wonderful outlaw… until he had disarmed himself and turned his back to his assassins, the first and only time in a career which has passed from the realms of an almost fabulous romance into that of history” (1882).