David L. Debertin*


Recommended publications


A Look Back at the Personal Computer's First Decade—1975 To

A Look Back at the Personal Computer's First Decade - 1975 to 1985

By Elizabeth M. Ferrarini

IN JANUARY 1975, A POPULAR ELECTRONICS MAGAZINE COVER STORY about the $300 Altair 8800 kit by Micro Instrumentation and Telemetry officially gave birth to the personal computer (PC) industry. It came with two boards and slots for 16 more in the open chassis. One board held the Intel 8080 processor chip and the other held 256 bytes. Other PC kit companies included IMSAI, Cromemco, Heathkit, and Southwest Technical Products.

During that same year, Steve Jobs and Steve Wozniak created the 4K Apple I based on the 6502 processor chips. The two Steves added color and redesign to come up with the venerable Apple II. Equipped with VisiCalc, the first PC spreadsheet program, the Apple II got a lot of people thinking about PCs as business tools. This model had a built-in keyboard, a graphics display, and eight expansion slots.

PC TRIVIA

▼ Bill Gates and Paul Allen wrote the first Microsoft BASIC for the Altair 8800.
▼ Steve Wozniak hand-built the first Apple from $20 worth of parts. In 1985, 200 Apple II's sold every five minutes.
▼ Initial press photo for the IBM PC showed two kids sprawled on the living room carpet playing games. The ad was quickly changed to one appropriate for corporate America.
▼ In 1982, Time magazine's annual Man of the Year cover didn't go to a political figure or a celebrity, but a faceless

KAYPRO II THEORY of OPERATION by Dana Cotant Micro Cornucopia P.O

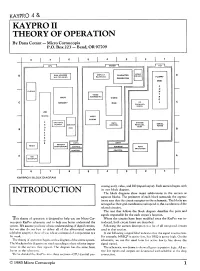

KAYPRO 4 & KAYPRO II THEORY OF OPERATION

By Dana Cotant

Micro Cornucopia, P.O. Box 223, Bend, OR 97709

[Figure: KAYPRO II BLOCK DIAGRAM]

INTRODUCTION

This theory of operation is designed to help you use Micro Cornucopia's KayPro schematic and to help you better understand the system. We assume you have a basic understanding of digital circuits, but we also do our best to define all of the abbreviated symbols (alphabet soup) for those of you whose command of computerese is a bit weak.

[...] processing unit), video, and I/O (input/output). Each section begins with its own block diagram.

The block diagrams show major subdivisions in the section as separate blocks. The perimeter of each block surrounds the approximate area that the circuit occupies on the schematic. The blocks are arranged so their grid coordinates correspond to the coordinates of the related circuitry. The text that follows the block diagram describes the parts and signals responsible for each circuit's function. Where the circuits have been modified since the KayPro was introduced, both circuit forms are described.

Following the section description is a list of all integrated circuits used in that section.

A star following a signal label indicates that the signal is active low. For example, MREQ* is active low, but DRQ is active high.
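The star convention described in the excerpt above can be sketched in a few lines of Python. This is only an illustration of the naming rule, not anything taken from the Micro Cornucopia manual: the function names `is_active_low`, `asserted_level`, and `is_asserted` are hypothetical, and logic levels are assumed to be plain 0 and 1.

```python
def is_active_low(label: str) -> bool:
    """Return True if the signal label carries the trailing '*' marker."""
    return label.endswith("*")


def asserted_level(label: str) -> int:
    """Logic level (0 or 1) at which the signal counts as asserted."""
    return 0 if is_active_low(label) else 1


def is_asserted(label: str, level: int) -> bool:
    """True if the measured logic level means the signal is asserted."""
    return level == asserted_level(label)


# The two labels used as examples in the excerpt.
for name in ("MREQ*", "DRQ"):
    print(name, "is asserted at logic level", asserted_level(name))
```

Under these assumptions, a reading of 0 on MREQ* means a memory request is in progress, while a reading of 0 on DRQ means no data request is pending.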

EPROM Programmer for the Kaypro

$3.00, June 1984

TABLE OF CONTENTS

- EPROM Programmer for the Kaypro
- Digital Plotters, A Graphic Description
- I/O Byte: A Primer
- Sticky Kaypros
- Pascal Procedures
- SBASIC Column
- Kaypro Column
- 86 World
- FORTHwords
- Talking Serially to Your Parallel Printer
- Introduction to Business COBOL
- C'ing Clearly
- Parallel Printing with the Xerox 820
- Xerox 820, A New Double-Density Monitor
- On Your Own
- Technical Tips

"THE ORIGINAL BIG BOARD" OEM - INDUSTRIAL - BUSINESS - SCIENTIFIC SINGLE BOARD COMPUTER KIT! Z-80 CPU! 64K RAM! (DO NOT CONFUSE WITH ANY OF OUR FLATTERING IMITATORS!)

THE BIG BOARD PROJECT: With thousands sold worldwide and over two years

Especially for the Kaypro from Micro Cornucopia the Following Folks Are Reaching You for Only 20 Cents Per Word

$3.00, June 1983

TABLE OF CONTENTS

- 256K In Detail - Part I
- Packet Radio
- Bringing Up the BB II
- dBase II
- Superfile
- WordStar, Volumes of Hints
- MicroWyl
- A Two-Faced Drive for the BB I

REGULAR FEATURES

- Letters
- C'ing Clearly
- Pascal Procedures
- On Your Own
- FORTHwords
- KayPro
- Technical Tips

"THE ORIGINAL BIG BOARD" OEM - INDUSTRIAL - BUSINESS - SCIENTIFIC SINGLE BOARD COMPUTER KIT! Z-80 CPU! 64K RAM! (DO NOT CONFUSE WITH ANY OF OUR FLATTERING IMITATORS!)

$319.00** (64K KIT, BASIC I/O)

SIZE: 8 1/2 x 13 3/4 IN. SAME AS AN 8 IN. DRIVE. REQUIRES: +5V @ 3 AMPS, +-12V @ .5 AMPS.

THE BIG BOARD PROJECT: With thousands sold worldwide and over two years of field experience, the Big Board may just be one of the most reliable single board computers available today. This is the same design that was licensed by Xerox Corp. as the basis for their 820 computer.

The Big Board gives you the right mix of most needed computing features all on one board. The Big Board was designed from scratch to run the latest version of CP/M*. Just imagine all the off-the-shelf software that can be run on the Big Board without any modifications needed.

FULLY SOCKETED! FEATURES: (Remember, all this on one board!)

- 64K RAM: Uses industry standard 4116 RAMs. All 64K is available to the user; our VIDEO and EPROM sections do not make holes in system RAM.
- 24 X 80 CHARACTER VIDEO: With a crisp, flicker-free display that looks extremely sharp even on small

CP/M-80 Kaypro

$3.00, June-July 1985, No. 24

TABLE OF CONTENTS

- C'ing Into Turbo Pascal
- Soldering: The First Steps
- Eight Inch Drives On The Kaypro
- Kaypro BIOS Patch
- Alternative Power Supply For The Kaypro
- 48 Lines On A BBI
- Adding An 8" SSSD Drive To A Morrow MD-2
- Review: The Ztime-I
- BDOS Vectors (Mucking Around Inside CP/M)
- The Pascal Runoff

Regular Features

- The S-100 Bus
- In The Public Domain
- C'ing Clearly
- The Xerox 820 Column
- The Slicer Column
- The Kaypro Column
- Pascal Procedures
- FORTHwords
- On Your Own
- Technical Tips
- Culture Corner

Future Tense

- Tidbits
- 68000 Vrs. 80X86
- MSX In The USA
- The Last Page

NEW LOWER PRICES! NOW IN "UNKIT"* FORM TOO!

"BIG BOARD II" 4 MHz Z80-A SINGLE BOARD COMPUTER WITH "SASI" HARD-DISK INTERFACE

- $795 ASSEMBLED & TESTED
- $545 "UNKIT"*
- $245 PC BOARD WITH 16 PARTS

SIZE: 8.75" X 15.5". POWER: +5V @ 3A, +-12V @ 0.1A.

Jim Ferguson, the designer of the "Big Board" distributed by Digital Research Computers, has produced a stunning new computer that Cal-Tex Computers has been shipping for a year. Called "Big Board II", it has the following features:

- 4 MHz Z80-A CPU and Peripheral Chips: The new Ferguson computer runs at 4 MHz.
- "SASI" Interface for Winchester Disks: Our "Big Board II" implements the Host portion of the "Shugart Associates Systems Interface." Adding a Winchester disk drive is no harder than attaching a floppy-disk

KAYPRO 10 User's Guide, Copying Files from One User Area to Another, for the Procedure

USER'S GUIDE

© January 1984. Part Number 1409 E.O.81-176-18-F

COPYRIGHT AND TRADEMARK

© 1983 Kaypro Corporation. KAYPRO 10 is a registered trademark of Kaypro Corporation.

DISCLAIMER

Kaypro Corporation hereby disclaims any and all liability resulting from the failure of other manufacturers' software to be operative within and upon the KAYPRO 10 computer, due to Kaypro's inability to have tested each entry of software.

LIMITED WARRANTY

Kaypro Corporation warrants each new instrument or computer against defects in material or workmanship for a period of ninety days from date of delivery to the original customer. Fuses are excluded from this warranty. This warranty is specifically limited to the replacement or repair of any such defects, without charge, when the complete instrument is returned to one of our authorized dealers or Kaypro Corporation, 533 Stevens Avenue, Solana Beach, California 92075, transportation charges prepaid.

This express warranty excludes all other warranties, express or implied, including, but not limited to, implied warranties of merchantability, and fitness for purpose, and KAYPRO CORPORATION IS NOT LIABLE FOR A BREACH OF WARRANTY IN AN AMOUNT EXCEEDING THE PURCHASE PRICE OF THE GOODS. KAYPRO CORPORATION SHALL NOT BE LIABLE FOR INCIDENTAL OR CONSEQUENTIAL DAMAGES. No liability is assumed for

Microcomputers in Development: a Manager's Guide

Microcomputers in Development: A Manager's Guide

Marcus D. Ingle, Noel Berge, and Marcia Hamilton

Kumarian Press, 29 Bishop Road, West Hartford, Connecticut 06119

Dedications

To Diana who is so special in many ways, Aric who helps me learn, Aaron who makes it fun, and Danika who has it all together. Marcus

To my Love and Best Friend - Nancy. Noel

I am so grateful for the patience, support and gentle harassment provided by my children, Daniel and Elizabeth, and by my husband Dennis. Marcia

Copyright © 1983 by Kumarian Press, 29 Bishop Road, West Hartford, Connecticut 06119. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without prior written permission of the publisher. Printed in the United States of America.

Cover design by Marilyn Penrod

This manuscript was prepared on a Kaypro microcomputer using Wordstar and printed on a C. Itoh printer using prestige elite type.

Library of Congress Cataloging in Publication Data

Ingle, Marcus. Microcomputers in development. Bibliography: p. 1. Microcomputers. 2. Economic development projects--Management--Data processing. I. Berge, Noel, 1943- II. Hamilton, Marcia, 1943- III. Title. QA76.5.I445 1983 658.4'038 83-19558 ISBN 0-931816-03-3

TABLE OF CONTENTS

- Table of Contents
- Foreword
- Authors' Preface
- Acknowledgements
- INTRODUCTION
  - Some Implications
  - What a Microcomputer is Not
  - Who Should Use This Guide?
  - The Purpose and Scope of the Guide
  - What the Guide Does and Does Not Do
- CHAPTER I: THE MANAGEMENT POTENTIAL OF USER-FRIENDLY MICROCOMPUTERS
  - The Context of Development Management
  - Generic Management Functions
  - The Importance of User-Friendliness in Microcomputer Systems
  - Structured Flexibility
  - User-Friendly Skill.

First Osborne Group (FOG) Records

http://oac.cdlib.org/findaid/ark:/13030/c8611668

No online items

First Osborne Group (FOG) records

Finding aid prepared by Jack Doran and Sara Chabino Lott. Processing of this collection was made possible through generous funding from the National Archives’ National Historical Publications & Records Commission: Access to Historical Records grant.

Computer History Museum, 1401 N. Shoreline Blvd., Mountain View, CA, 94043. (650) 810-1010. August, 2019

- Title: First Osborne Group (FOG) records
- Identifier/Call Number: X4071.2007
- Contributing Institution: Computer History Museum
- Language of Material: English
- Physical Description: 26.57 Linear feet, 3 record cartons, 5 manuscript boxes, 2 periodical boxes, 18 software boxes
- Date (bulk): Bulk, 1981-1993
- Date (inclusive): 1979-1997
- Creator: First Osborne Group.

Abstract: The First Osborne Group (FOG) records contain software and documentation created primarily between 1981 and 1993. This material was created or authored by FOG members for other members using hardware compatible with CP/M and later MS and PC-DOS software. The majority of the collection consists of software written by FOG members to be shared through the library. Also collected are textual materials held by the library, some internal correspondence, and an incomplete collection of the FOG newsletters.

Processing Information: Collection surveyed by Sydney Gulbronson Olson, 2017. Collection processed by Jack Doran, 2019.

Access Restrictions: The collection is open for research.

Publication Rights: The Computer History Museum (CHM) can only claim physical ownership of the collection. Users are responsible for satisfying any claims of the copyright holder. Requests for copying and permission to publish, quote, or reproduce any portion of the Computer History Museum’s collection must be obtained jointly from both the copyright holder (if applicable) and the Computer History Museum as owner of the material.

Related Links History of the Radio Shack Computers

Radio Shack TRS-80 Model II

- Catalog: 26-4002
- Announced: May 1979
- Released: October 1979
- Price: $3450 (32K RAM), $3899 (64K RAM)
- CPU: Zilog Z-80A, 4 MHz
- RAM: 32K, 64K
- Ports: Two serial ports, one parallel port
- Display: Built-in 12" monochrome monitor, 40 X 24 or 80 X 24 text.
- Storage: One 500K 8-inch built-in floppy drive. External Expansion w/ 3 floppy bays.
- OS: TRS-DOS, BASIC.

The TRS-80 Model II microcomputer system, designed and manufactured by Radio Shack in Fort Worth, TX, was not intended to replace or obsolete the Model I; it was designed to take up where the Model I left off - a machine with increased capacity and speed in every respect, targeted directly at the small-business application market.

The Model II contains a single-sided full-height Shugart 8-inch floppy drive, which holds 500K bytes of data, compared to only 87K bytes on the 5-1/4 inch drives of the Model I.

Xerox 820-II

This equipment (except the rigid disk drive unit) has been certified to comply with the limits for Class B Computing Device, pursuant to Subpart J of Part 15 of FCC rules. Only peripherals (computer input/output devices, terminals, printers, etc.) certified to comply with the Class B limits may be attached to this computer. Operation with non-certified peripherals is likely to result in interference to radio and TV reception.

The Xerox 820-II generates and uses radio frequency energy and, if not installed and used properly, i.e., in strict accordance with the manufacturer's instructions, may cause interference to radio and television reception. It has been type tested and found to comply with the limits for a Class B Computing Device in accordance with the specifications in Subpart J of Part 15 of FCC rules, which are designed to provide reasonable protection against such interference in a residential installation. If this equipment does cause interference to radio or television reception, which can be determined by turning the equipment off and on, you are encouraged to try to correct the interference by one or more of the following measures:

• Reorient the receiving antenna.
• Relocate the computer with respect to the receiver.
• Plug the computer into a different outlet so that computer and receiver are on different branch circuits.

If necessary, you may consult Xerox or an experienced radio television technician for additional suggestions. You may find the following booklet prepared by the Federal Communications Commission helpful: "How to Identify and Resolve Radio-TV Interference Problems". This booklet is available from the U.S.

TABLE of CONTENTS BB II EPROM Program

$3.00

TABLE OF CONTENTS

- BB II EPROM Program
- Big Board Fixes
- Relocating Your CP/M
- The Disk is the Media
- Serial Print Driver
- Talking Serially
- Pascal/Z Review
- Bringing Up WordStar
- A Cheap RAM Disk for the BB I

REGULAR FEATURES

- Letters
- Xerox 820 Notes
- FORTHwords
- C'ing Clearly
- Technical Tips
- On Your Own
- Want Ads

UNIFORTH is the best implementation of the FORTH language available at any price--and it is now available specifically customized for the Big Board, Big Board II, and other single-board computers! Just look at these standard features:

• All source code is supplied except for a small kernel. You can easily modify, add or delete functions.
• Adheres to the FORTH-79 international standard.
• Fully optimized for the Z-80.
• Stand-alone. No operating system is needed. Exceptionally
• Versatile cursor-addressed editor. Menu driven, with tabulation, word delete, multi-line transfer, string search/delete/replace.
• Full Z-80 assembler using Zilog/Mostek mnemonics. Structured programming constructs and support for

Osborne 1 Computer

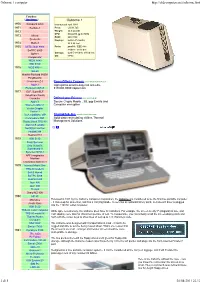

Osborne 1 computer

http://oldcomputers.net/osborne.html

Osborne 1

- Introduced: April 1981
- Price: US $1,795
- Weight: 24.5 pounds
- CPU: Zilog Z80 @ 4.0 MHz
- RAM: 64K RAM
- Display: built-in 5" monitor, 53 X 24 text
- Ports: parallel / IEEE-488, modem / serial port
- Storage: dual 5-1/4 inch, 91K drives
- OS: CP/M

Released in 1981 by the Osborne Computer Corporation, the Osborne 1 is considered to be the first true portable computer - it closes-up for protection, and has a carrying handle.